Web scraping for sentiment analysis turns scattered online reactions into a single, readable signal.

Companies handle a constant flow of reviews, comments, posts, and articles. People respond to price changes, product updates, outages, and campaigns all day. Leadership teams need that emotional signal in a structured, comparable format, not in screenshots or random chat threads.

When teams run a sentiment analysis initiative, they usually follow one pattern:

- Define a straightforward business question → which decision needs sentiment input.

- Collect relevant text from the right sources → where those voices actually appear.

- Clean and normalize the data → remove noise so models see plain language and stable fields.

- Apply sentiment models → assign scores and labels to each record.

- Aggregate and visualize results → show trends that support real decisions.

In a typical web scraping sentiment analysis project, marketing leaders track brand mood after a launch. Product managers monitor feedback on features across app stores and marketplaces. Analysts link sentiment shifts to changes in traffic, sign-ups, or churn.

This guide walks through that workflow from one-off experiments to scalable, always-on pipelines.

You will see where web data actually helps, where sentiment models fall short, and which architectural patterns keep the system stable over time.

GroupBWT builds multi-source, multi-market sentiment pipelines that respect platform rules and legal constraints. Every section here remains rooted in that kind of practical delivery work, rather than theory. By the end, you can discuss this topic with both engineers and executives using one shared mental model.

What Is Sentiment Analysis And Why Businesses Use It

“A company gains real clarity when it listens to people in real time instead of waiting for reports. Data becomes useful only when it helps you see what is actually happening, not what happened long ago.”

— Dmytro Naumenko, CTO

Core Idea Of Sentiment Analysis

Sentiment analysis systems read text and assign an emotional label.

A simple model marks a sentence as positive, negative, or neutral. A richer model estimates emotions such as joy, frustration, or trust. Teams apply these labels to tweets, reviews, survey answers, and support messages.

Each label turns a subjective opinion into a signal that systems can count, segment, and track over time.

How Different Teams Use Sentiment

Different roles read sentiment for different decisions:

- Marketing leaders measure response to campaigns and brand initiatives → adjust budgets and messaging.

- Product owners watch sentiment around specific features or product lines → refine roadmap and backlog.

- Customer experience teams monitor recurring complaints and praise across channels → update processes and scripts.

- Strategy and research teams observe sentiment at the category level → detect shifts in market mood and positioning.

Teams do not replace human judgment. They use sentiment as a quantitative layer that guides where to look and what to investigate first.

Main Types Of Sentiment Analysis

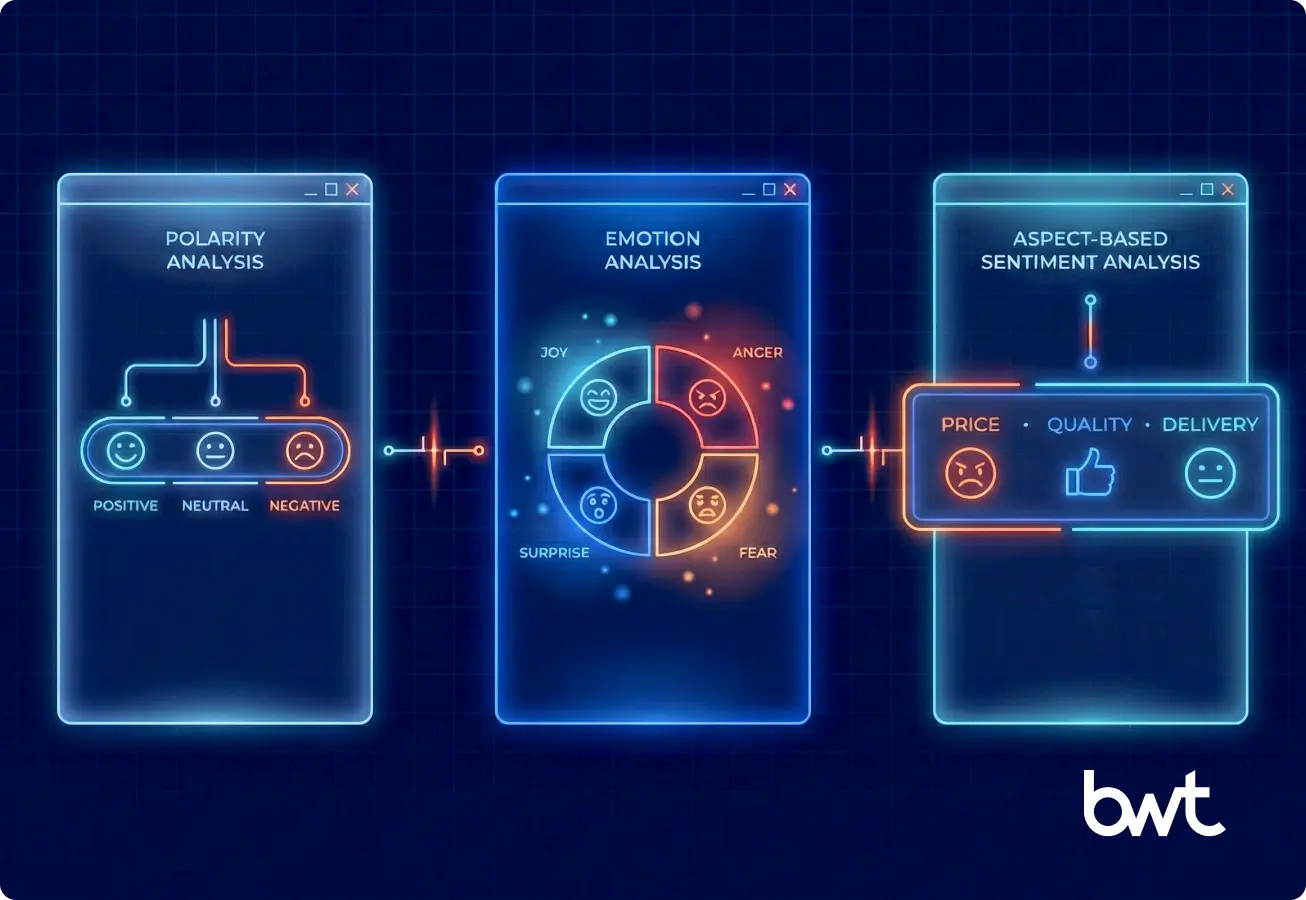

Teams usually work with three primary flavours:

| Sentiment Type | What It Measures | Typical Use |
| --- | --- | --- |
| Polarity Analysis | Overall tone of a text: positive, negative, or neutral | Quick health check for brands, products, or campaigns |
| Emotion Analysis | Specific emotional states such as joy, frustration, anger, trust, or surprise | Content testing, creative strategy, and tone evaluation |
| Aspect-Based Sentiment Analysis | Sentiment tied to a specific attribute within a text (e.g., service vs. price vs. quality in one review) | Feature-level insights, product decisions, and roadmap planning |

Models need clear targets and clean input so they can separate these aspects correctly.
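To make the aspect-based idea concrete, here is a minimal sketch that splits one review into clauses and attaches a polarity label to each aspect it finds. The keyword sets and word lists are hypothetical toy vocabularies chosen for this illustration; a production system would rely on a trained model rather than keyword matching.

```python
import re

# Hypothetical toy vocabularies, for illustration only.
ASPECT_KEYWORDS = {
    "price": {"price", "cost", "expensive", "cheap"},
    "service": {"service", "support", "staff"},
    "quality": {"quality", "build", "material"},
}
POSITIVE = {"great", "good", "excellent", "friendly", "cheap"}
NEGATIVE = {"bad", "poor", "slow", "expensive", "rude"}


def aspect_sentiment(text: str) -> dict:
    """Label each aspect mentioned in a review, one clause at a time."""
    results = {}
    # Split on punctuation and on the contrast word "but".
    for clause in re.split(r"[,.;!?]|\bbut\b", text.lower()):
        tokens = set(re.findall(r"[a-z']+", clause))
        score = len(tokens & POSITIVE) - len(tokens & NEGATIVE)
        label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
        for aspect, keywords in ASPECT_KEYWORDS.items():
            if tokens & keywords:
                results[aspect] = label
    return results


review = "The staff were friendly, but the price is too expensive."
aspects = aspect_sentiment(review)
print(aspects)
```

A single polarity score would average these two opinions away; the per-aspect view keeps them separate.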

Why Continuous Signals Matter

Analysts create real value when they treat sentiment as a live signal, not a quarterly report.

Teams combine data scraping and sentiment classification so models always see:

- New language and expressions → fewer misclassifications over time.

- New topics and competitors → sentiment covers the entire market, not just a narrow slice.

- New customer groups and regions → results reflect the real audience mix.

That tight loop between continuous collection and continuous interpretation turns sentiment into a reliable decision input, rather than a one-off research project.

The Role Of Web Scraping In Sentiment Analysis

Without automation, teams see only a small, delayed part of public opinion. With automation, they see volume, variety, and speed in one place. This section explains why web scraping sits at the core of any production sentiment initiative.

Why Manual Data Collection Is Not Enough

Manual sentiment work breaks down because every role sees a different fragment of the conversation. Marketing teams monitor one social feed and end up tracking the loudest or newest voices. Analysts export comments from survey tools and capture only the views of people who chose to respond. Product managers skim app store pages when time allows and see a narrow, random slice of feedback. Each specialist views a different moment, a different segment, and a different signal.

This fragmented view creates predictable gaps. Teams often miss regional trends when they focus on a single language or market. They lose comparative context when they watch only their own pages and ignore competitor sentiment. They discover waves of negative feedback late because an escalation is often the first sign that something has changed. They attempt to scale by adding more manual work, yet higher volume makes that work slower and less accurate. A web scraping for sentiment analysis pipeline replaces this scattered effort with a repeatable, shared input for all teams.

How Web Scraping Helps Gather Real-Time Opinions From Multiple Sources

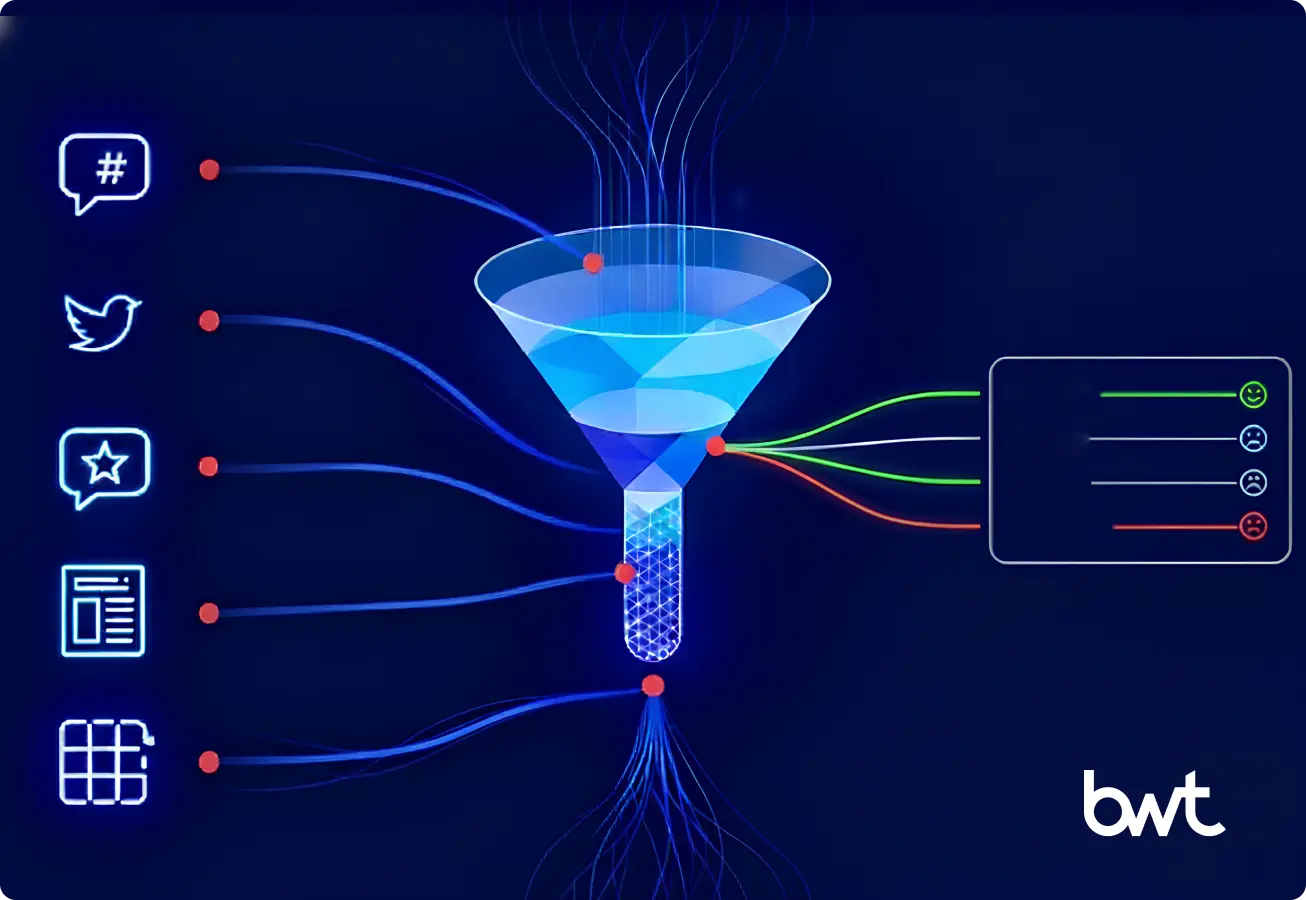

Scraping pipelines combine multiple channels into a single live feed. Teams that monitor broad market signals often pair scraping with a wider view of what big data is used for in business, so they can interpret sentiment in its operational context.

They follow a simple loop:

- Workers or scheduled jobs reach out to selected platforms.

- They parse public content and extract only valid text and metadata.

- They push structured records into storage with timestamps and source tags.

- They repeat this cycle on a defined schedule.

Sentiment models then receive an updated stream of opinions, rather than a fixed export.
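The parse-and-store part of that loop can be sketched with the Python standard library alone. The HTML snippet, the `review` class name, and the `example-reviews` source tag below are assumptions made up for the illustration; real pages need their own selectors, and the fetch step is left out on purpose.

```python
from datetime import datetime, timezone
from html.parser import HTMLParser


class ReviewTextParser(HTMLParser):
    """Collects text inside <p class="review"> elements (hypothetical markup)."""

    def __init__(self):
        super().__init__()
        self._in_review = False
        self.reviews = []

    def handle_starttag(self, tag, attrs):
        if tag == "p" and ("class", "review") in attrs:
            self._in_review = True
            self.reviews.append("")

    def handle_endtag(self, tag):
        if tag == "p":
            self._in_review = False

    def handle_data(self, data):
        if self._in_review:
            self.reviews[-1] += data


def to_records(html_page: str, source: str) -> list:
    """Turn one fetched page into structured records with a timestamp and source tag."""
    parser = ReviewTextParser()
    parser.feed(html_page)
    parser.close()
    collected_at = datetime.now(timezone.utc).isoformat()
    return [
        {"text": text.strip(), "source": source, "collected_at": collected_at}
        for text in parser.reviews
        if text.strip()
    ]


# Inline sample standing in for a fetched page.
SAMPLE_HTML = """
<nav>Home | Products</nav>
<p class="review">Setup was quick and support was helpful.</p>
<p class="ad">Buy now!</p>
<p class="review">Battery drains too fast.</p>
"""

records = to_records(SAMPLE_HTML, source="example-reviews")
for record in records:
    print(record)
```

Navigation and ad text never reach the output, which matches the "extract only valid text and metadata" step above.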

Scraping also consolidates channels into a single analytical view. Teams can track one product or brand across:

- X, LinkedIn, and other social media feeds.

- Trustpilot, G2, Yelp, and niche review platforms.

- App stores and booking platforms.

Decision makers view sentiment curves over time, sliced by:

- Platform.

- Language.

- Region.

- Product or feature.

A launch that performs well on social but poorly in reviews stands out early, so the team does not wait for quarterly reports or ad-hoc escalation. When platforms add layers that filter automated traffic, some projects require methods that safely bypass Cloudflare, provided all activity stays within permitted rules.

Key Data Sources For Sentiment Analysis

The primary channels that supply text for sentiment analysis each correspond to specific business applications.

| Channel | Typical Content | Who Uses It Most |
| --- | --- | --- |
| Social Media | Short reactions and campaign feedback | Marketing, Comms |
| Reviews | Detailed product and service opinions | Product, CX |
| Forums | Deep, domain-specific discussions | Product, Strategy |
| News | Coverage of brands, sectors, and events | Leadership, Finance |

Now, a closer look at each source.

Social Media

Social platforms deliver the fastest signals. People react to campaigns, news, and product changes within minutes. Scraping public posts and comments gives marketing and communications teams an early view of:

- Spikes in attention.

- Shifts in mood around specific topics or hashtags.

- Differences between regions or languages.

Analysts treat this stream as a high-frequency sentiment feed.

Review Sites

Review platforms provide slower but richer feedback. Customers explain what they liked or disliked about a purchase, not only whether they liked it. App stores, marketplaces, booking sites, and specialist review portals reveal:

- Product quality issues.

- Service problems.

- Gaps between expectation and reality.

Product and CX teams mine these texts for recurring themes that should drive backlog and training.

Forums And Community Boards

Forums and community boards hold deep, domain-focused conversations. Industry boards, subreddits, and Q&A platforms highlight:

- Expert opinions.

- Detailed problem descriptions.

- Real-world workarounds and usage patterns.

Strategy and product teams use this layer to understand how knowledgeable users actually work with a solution, not only how they review it.

News Articles And Comment Sections

News articles and their comments surface sentiment around brands, sectors, and events. Financial analysts track sentiment in headlines and coverage about listed companies. Corporate communications teams monitor how outlets frame:

- Crises and incidents.

- Partnership announcements.

- Strategic moves.

Scraping and analysing this content gives leaders a macro view: how the market speaks about the organisation and its environment.

Together, these sources create a picture that no single feed can provide. Scraping does not replace careful human review, yet it supplies the volume, variety, and continuity that sentiment systems need to remain reliable. Teams also evaluate sources through a compliance lens and align extraction with GDPR web scraping guidelines to maintain predictable, lawful operations.

How Web Scraping For Sentiment Analysis Works

A reliable scraping sentiment analysis pipeline follows a precise sequence.

This section breaks that sequence into five steps that any team can map to their own stack.

Step 1 – Define The Goal And Data Requirements

Every pipeline starts with a decision question, not with a scraper.

Typical questions look like:

- Brand health: “How does sentiment around our brand move week by week?”

- Launch impact: “Did sentiment change after our new pricing or feature release?”

- Competitive view: “How do people talk about our offer compared with the two main rivals?”

Once the question is fixed, teams define data requirements:

- Entities: Which brands, products, competitors, or campaigns to track?

- Time window: Which period to cover, and how fresh the data must be?

- Channels: Which platforms match the audience and use case?

- Languages and regions: Which markets should the decision reflect?

This step keeps the pipeline focused and prevents “collect everything” behaviour that increases cost without improving insight. Before any new domain enters the sentiment workflow, legal and governance owners confirm that the target platform fits accepted use under the relevant web scraping legality criteria.
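One way to keep those requirements explicit is to write them down as a small configuration object that later stages can check records against. This is only a sketch: the class name `SentimentBrief`, its fields, and every sample value are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SentimentBrief:
    """One decision question plus the data it needs (all values hypothetical)."""

    question: str
    entities: frozenset
    channels: frozenset
    languages: frozenset
    start: date
    end: date

    def in_scope(self, record: dict) -> bool:
        """Return True when a record matches every requirement in the brief."""
        return (
            record["entity"] in self.entities
            and record["channel"] in self.channels
            and record["language"] in self.languages
            and self.start <= record["created"] <= self.end
        )


brief = SentimentBrief(
    question="Did sentiment change after the new pricing?",
    entities=frozenset({"acme-pro"}),
    channels=frozenset({"app_store", "reviews"}),
    languages=frozenset({"en", "de"}),
    start=date(2024, 1, 1),
    end=date(2024, 3, 31),
)

candidates = [
    {"entity": "acme-pro", "channel": "reviews", "language": "en", "created": date(2024, 2, 10)},
    {"entity": "acme-pro", "channel": "forum", "language": "en", "created": date(2024, 2, 11)},
    {"entity": "other", "channel": "reviews", "language": "en", "created": date(2024, 2, 12)},
]
kept = [record for record in candidates if brief.in_scope(record)]
print(len(kept), "of", len(candidates), "records are in scope")
```

Anything outside the brief is dropped before it adds storage or scoring cost.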

Step 2 – Select Target Websites And Content Types

After the goal is clear, teams pick where to listen and what to collect.

Selection criteria:

- Relevance: Does this site or platform hold opinions from the target audience?

- Volume: Is there enough traffic to show clear trends?

- Accessibility: Does the platform offer an API, or is HTML scraping required?

- Policy fit: Do platform rules allow the planned level of data access?

Teams then decide which content types to include:

- Short texts: posts, comments, titles, short reviews.

- Long texts: full reviews, forum threads, news articles.

- Metadata: star ratings, timestamps, locations, product IDs, and user tags.

Careful selection here sets up clean mapping between business questions and actual text records. Strategic teams often complement this selection by reviewing competitive analysis and benchmarking data, which anchors sentiment trends within a broader category view.

Step 3 – Use Web Scraping Tools Or APIs

Now teams choose how to collect the identified content.

In many projects, they combine several web scraping tools for sentiment analysis:

- Code frameworks such as Scrapy, Requests + BeautifulSoup, or Selenium / Playwright

  - Use when the team has engineers and needs control, scale, and custom logic.

- No-code scraping tools similar to Octoparse or ParseHub

  - Use for quick pilots, low-volume jobs, or when non-technical staff manage data pulls.

- Official APIs from platforms such as X, Reddit, or review providers

  - Use when a policy requires API access and the API exposes the necessary fields.

Common patterns:

- Utilize APIs where they are sufficiently rich and within budget.

- Use HTML scraping when APIs are limited, missing, or do not expose public content that matters.

- Schedule scraping and API calls to ensure the pipeline respects rate limits and maintains platform stability. Retail and marketplace projects may require domain-specific methods, such as patterns developed during data scraping from Costco or similar large-catalogue environments.

At this step, the pipeline gains a repeatable intake layer rather than ad hoc exports. Commerce-led teams sometimes apply practices refined in web scraping Shopify projects to ensure stable access to structured product and review data.

Step 4 – Clean, Structure, And Store Collected Data

Raw scraped data rarely fits sentiment models as-is. Some pipelines enrich text with signals derived from AI data scraping workflows, which improve consistency before models perform sentiment extraction.

Teams run a data preparation stage with three goals:

- Clean text

  - Remove navigation, ads, and boilerplate around the main content.

  - Normalise whitespace, punctuation, emojis, and encodings.

  - Filter spam or off-topic records when possible.

- Structure records

  - Put each text item into a consistent schema: text, source, created_at, language, entity, rating, and other fields.

  - Keep identifiers that link records to products, campaigns, or regions.

- Store data for reuse

  - Write batches into tables, object storage, or a warehouse.

  - Partition by date and source to ensure later queries remain efficient.

This stage determines how easily sentiment can be sliced by source, region, or product later. Good structure here saves effort in every downstream analysis. During early-stage experiments, teams often validate sources using no-code web scraping tools before engineering formal ingestion jobs.
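The three goals above can be sketched in a few lines of standard-library Python. The `Record` fields follow the schema named in this step; the partition layout (`source=.../date=...`) is an assumption borrowed from common data-lake conventions, not a requirement.

```python
import html
import re
import unicodedata
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


def clean_text(raw: str) -> str:
    """Strip leftover tags, decode entities, and normalise whitespace."""
    text = re.sub(r"<[^>]+>", " ", raw)         # drop leftover HTML tags
    text = html.unescape(text)                  # decode entities such as &amp;
    text = unicodedata.normalize("NFKC", text)  # unify look-alike characters
    return re.sub(r"\s+", " ", text).strip()


@dataclass
class Record:
    text: str
    source: str
    created_at: str  # ISO 8601 timestamp
    language: str
    entity: str
    rating: Optional[float] = None


def partition_key(record: Record) -> str:
    """Build a storage prefix partitioned by source and date (assumed layout)."""
    day = datetime.fromisoformat(record.created_at).date().isoformat()
    return f"source={record.source}/date={day}"


raw = "  Great&nbsp;value <br/> for   money&amp;time "
record = Record(
    text=clean_text(raw),
    source="marketplace",
    created_at="2024-05-01T10:30:00+00:00",
    language="en",
    entity="acme-pro",
    rating=4.0,
)
print(record.text)
print(partition_key(record))
```

Keeping the cleaning function in one shared module means every source passes through the same rules before scoring.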

Step 5 – Apply NLP Models To Extract Sentiment

With text prepared, teams run NLP models to attach sentiment to each record.

Three standard model tiers:

- Lexicon-Based Tools

- Examples: VADER in NLTK, TextBlob.

- Fast to start, useful for simple polarity scores on short texts.

- Pre-Built Machine Learning Models

- Examples: Hugging Face sentiment pipelines, cloud NLP APIs.

- Better suited for context and nuance, ideal for production dashboards.

- Custom or Fine-Tuned Models

- Trained or adapted on domain-specific data.

- Useful when language, jargon, or context differs from generic datasets.

Typical workflow:

- Score each text item with the chosen model.

- Store sentiment label and score alongside the original record.

- Aggregate results by time, entity, region, or channel.

- Visualise trends in BI tools or custom dashboards.

A web scraping for sentiment analysis pipeline concludes this step with a table that lists who said what, where, when, and how they felt about it. In regulated markets, some organisations rely on controls proven in our scraping solution for tackling unfair competition to keep collection stable across sensitive domains.
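The score, store, and aggregate steps of that workflow might look like the sketch below. The six-word lexicon is a deliberately tiny, hypothetical stand-in for a real tool such as VADER or a transformer model; only the shape of the workflow is the point.

```python
import re
from collections import defaultdict
from statistics import mean

# Hypothetical toy lexicon, not a real sentiment resource.
LEXICON = {"great": 1, "love": 1, "helpful": 1, "slow": -1, "broken": -1, "refund": -1}


def score_text(text: str) -> float:
    """Average the lexicon values of matched words; 0.0 when nothing matches."""
    hits = [LEXICON[t] for t in re.findall(r"[a-z]+", text.lower()) if t in LEXICON]
    return sum(hits) / len(hits) if hits else 0.0


def label_for(score: float, threshold: float = 0.25) -> str:
    if score >= threshold:
        return "positive"
    if score <= -threshold:
        return "negative"
    return "neutral"


def score_records(records: list) -> list:
    """Store the score and label alongside each original record."""
    scored = []
    for record in records:
        score = score_text(record["text"])
        scored.append({**record, "score": score, "label": label_for(score)})
    return scored


def aggregate(scored: list, key: str) -> dict:
    """Mean score per value of `key` (for example source, entity, or region)."""
    groups = defaultdict(list)
    for record in scored:
        groups[record[key]].append(record["score"])
    return {name: mean(values) for name, values in groups.items()}


records = [
    {"source": "store", "text": "Love the new design, great update"},
    {"source": "store", "text": "Helpful support, but sync is slow and the app feels broken"},
    {"source": "forum", "text": "Export is broken, I want a refund"},
]
scored = score_records(records)
by_source = aggregate(scored, "source")
print(by_source)
```

Swapping `score_text` for a call to a stronger model leaves the storage and aggregation code unchanged.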

Popular Web Scraping Tools For Sentiment Analysis

Teams rarely use a single tool for all their needs.

This section maps the prominent tool families to typical roles and tasks.

Web Scraping Frameworks And Libraries

Developers often start with code frameworks for flexibility and control.

| Tool Type | Typical Use | Who Uses It |
| --- | --- | --- |
| Scrapy | Large crawls, complex sites, multi-page flows | Data engineers |
| Requests + BS4 | Simple pages, small jobs, fast prototypes | Developers, analysts |
| Selenium / Playwright | Dynamic sites, interactive flows, JS-heavy pages | Automation engineers |

Scrapy manages queues, retries, and parallelism within a single framework, which helps teams run large crawls efficiently. Requests paired with BeautifulSoup supports light jobs on stable pages. Selenium and Playwright drive full browsers, unlocking content that depends on scripts or interactive flows. Teams that require broader coverage may integrate targeted crawlers with managed data extraction services to reach additional categories or regions without expanding internal load.

No-Code Scraping Platforms

Teams without engineering resources often rely on tools like Octoparse or ParseHub. These platforms let users select elements on a page, define extraction logic visually, schedule jobs, and export the results. They work well for one-off research, recurring analyst-managed pulls, and early validation of new sources before engineers build full pipelines.

APIs And Platform Endpoints

Some platforms expose official APIs with structured data, stable schemas, and clear limits. Interfaces such as the X API, review-platform APIs, or YouTube comment endpoints provide authentication and predictable access patterns. When an API covers the necessary fields and permits analytical use, teams opt for this route because it reduces maintenance and avoids the fragility of HTML scraping.

NLP And Sentiment Libraries

Analysis layers rely on NLP stacks. spaCy and NLTK support tokenisation, language detection, and text cleaning.

- Classic tools like VADER and TextBlob are well-suited for handling polarity in short texts.

- Transformer models from the Hugging Face ecosystem enhance accuracy on complex language tasks and facilitate domain adaptation.

- Cloud NLP services from Google, AWS, or Azure offer managed sentiment endpoints for teams that want stable performance without maintaining model infrastructure.

These components power the step that turns raw text into reliable sentiment signals. Larger organisations with mature workflows often seek support from a web scraping company that operates multi-region infrastructure at scale.

Use Cases Of Sentiment Analysis Powered By Web Scraping

A focused web scraping for sentiment analysis setup supports several recurring decision areas.

This section highlights four patterns that appear across industries.

Brand Reputation Monitoring

Brand and communications teams track how people speak about the organisation over time.

Typical workflow:

- Scrape mentions of brand names, product names, and campaign tags from social media and reviews.

- Run sentiment on this combined stream.

- Track daily and weekly changes in positive, neutral, and negative volumes.

Decisions:

- When negative sentiment grows quickly, they review root causes and adjust messaging.

- When positive sentiment clusters around a theme, they reuse that angle in future campaigns.

- When sentiment differs across regions, they adapt local content and support responses.

Market Research And Competitor Analysis

A focused web scraping sentiment analysis approach helps teams see the broader market, not only their own brand.

Strategy and growth teams:

- Scrape discussions and reviews for their own products and those of their direct competitors.

- Run sentiment per brand, per feature, and per use case.

- Compare patterns across markets and channels.

They then answer questions such as:

- Which features draw the most positive feedback for each competitor?

- Which complaints repeat across the category and signal a market gap?

- How does sentiment move after each player launches a new offer or policy?

Insights from this work feed pricing, positioning, and product investment decisions. Analysts may combine brand-level sentiment findings with category-level signals from the same scraped sources to measure changes in perception across markets.

Financial Market Sentiment Prediction

Quant and research teams treat sentiment as one of several market signals.

They:

- Scrape news headlines, articles, and commentary around specific tickers or sectors.

- Collect forum and social discussions around those same tickers.

- Run sentiment models and aggregate results into time-series indicators.

Use cases:

- Build sentiment indices that supplement traditional market data.

- Flag sharp negative sentiment shifts around a company or sector.

- Study how sentiment patterns line up with price moves, volume, or volatility.

Sentiment does not replace financial analysis, yet it provides an additional angle on how the market reacts to events.
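A minimal sketch of the time-series step: per-headline scores are rolled up into a daily index, and days with a sharp drop are flagged for review. The dates, scores, and the 0.5 drop threshold are invented for the illustration and carry no market meaning.

```python
from collections import defaultdict
from statistics import mean


def daily_index(scored: list) -> dict:
    """Average per-item sentiment scores into one value per day."""
    by_day = defaultdict(list)
    for day, score in scored:
        by_day[day].append(score)
    return {day: mean(values) for day, values in sorted(by_day.items())}


def sharp_drops(index: dict, max_drop: float = 0.5) -> list:
    """Return the days whose index fell by more than `max_drop` versus the prior day."""
    days = list(index)
    return [
        current
        for previous, current in zip(days, days[1:])
        if index[previous] - index[current] > max_drop
    ]


# Illustrative (date, score) pairs for one hypothetical ticker.
scored_headlines = [
    ("2024-03-01", 0.4),
    ("2024-03-01", 0.2),
    ("2024-03-02", 0.3),
    ("2024-03-03", -0.5),
    ("2024-03-03", -0.3),
]
index = daily_index(scored_headlines)
flagged = sharp_drops(index)
print(index)
print("Days to review:", flagged)
```

A flagged day is a prompt for an analyst to read the underlying headlines, not a trading signal on its own.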

Customer Feedback And Product Review Analytics

Product and customer experience teams use scraping to expand feedback coverage beyond direct surveys.

They:

- Scrape app store reviews, marketplace feedback, booking site comments, and support portal threads.

- Attach sentiment to each record, along with product, version, or location tags.

- Group feedback by theme, severity, or frequency.

Decisions:

- Prioritise backlog items that link to high-intensity negative sentiment.

- Validate that recent changes improved sentiment around specific features.

- Identify topics where customers feel satisfied and highlight them in messaging.

Through this loop, sentiment from scraped channels flows back into roadmaps, service design, and training materials.

Common Challenges And Ethical Considerations

Even strong sentiment pipelines face friction when they scale across sites, regions, and teams. This section focuses on four areas where problems are most commonly encountered and how to manage them effectively.

Avoiding Over-Scraping And Respecting Robots.txt

Scrapers share every target site with real users. Teams that handle high-traffic domains refine their request-rate strategies so workloads stay efficient at scale, often drawing on broader practices for how big data is used in business.

Teams require a stable, predictable technical foundation. Engineers start with robots.txt, read which paths the site permits, and shape their crawl scopes accordingly. They tune request rates per domain, add small random pauses between calls, and avoid traffic spikes that look like stress tests.

Large jobs are scheduled within planned windows and run over longer periods, rather than in short bursts. Monitoring completes the picture. Teams watch status codes, timeouts, and structural changes in HTML.

A sudden rise in errors becomes a reason to slow down or stop, not to push harder. This discipline protects both the scraping infrastructure and the stability of target sites.
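The robots.txt check and the randomised pauses can be sketched with Python's built-in `urllib.robotparser`. The robots.txt content, the user agent name, and the URLs are made up for the example, and the rules are parsed from an inline string so nothing is fetched; against a live site, `set_url()` followed by `read()` would load the real file.

```python
import random
from urllib.robotparser import RobotFileParser

# Inline sample rules standing in for a site's real robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Crawl-delay: 5
"""

USER_AGENT = "sentiment-research-bot"  # hypothetical user agent name


def load_rules(robots_txt: str) -> RobotFileParser:
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def polite_delay(base_seconds: float, jitter: float = 0.5) -> float:
    """Base delay plus a small random pause so requests do not arrive in lockstep."""
    return base_seconds + random.uniform(0, base_seconds * jitter)


def plan_crawl(urls: list, rules: RobotFileParser, default_delay: float = 2.0) -> list:
    """Keep only permitted URLs and pair each with the pause to take before it."""
    base = rules.crawl_delay(USER_AGENT) or default_delay
    return [
        (url, polite_delay(base))
        for url in urls
        if rules.can_fetch(USER_AGENT, url)
    ]


rules = load_rules(ROBOTS_TXT)
plan = plan_crawl(
    ["https://example.com/reviews/123", "https://example.com/account/settings"],
    rules,
)
for url, pause in plan:
    print(f"wait {pause:.1f}s, then fetch {url}")
```

The plan only lists what to fetch and how long to wait; the actual request loop would sleep for each pause before calling the site.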

Handling Captcha And Anti-Bot Systems

Many platforms deploy controls that identify automated traffic and slow it. Teams plan for these defences instead of treating them as surprises. They utilize high-quality proxies in permitted regions and rotate IPs in patterns that mimic regular traffic, rather than aggressive scanning. When pages depend on JavaScript, they switch to browser automation tools such as Selenium or Playwright, allowing content to render as it would for a human visitor.

Where platforms provide documented APIs, teams favour those interfaces and work within published rate limits and scopes. They also define clear stop rules. If protection ramps up or friction grows, they reduce activity and review whether the source remains essential for the business goal. The aim is long-term stability, not technical one-upmanship.
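A stop rule can be as simple as watching the share of blocked or failing responses in a sliding window. The sketch below is one possible shape; the window size and the 20% and 50% thresholds are assumptions to tune per source, not recommended values.

```python
from collections import deque


class StopRule:
    """Decide whether to continue, slow down, or stop based on recent responses."""

    def __init__(self, window: int = 20, slow_at: float = 0.2, stop_at: float = 0.5):
        self.codes = deque(maxlen=window)  # keeps only the most recent status codes
        self.slow_at = slow_at
        self.stop_at = stop_at

    def record(self, status_code: int) -> None:
        self.codes.append(status_code)

    def decision(self) -> str:
        if not self.codes:
            return "continue"
        # 403 and 429 suggest blocking or rate limiting; 5xx suggests site strain.
        blocked = sum(1 for code in self.codes if code in (403, 429) or code >= 500)
        share = blocked / len(self.codes)
        if share >= self.stop_at:
            return "stop"
        if share >= self.slow_at:
            return "slow"
        return "continue"


rule = StopRule()
for code in [200] * 10:
    rule.record(code)
healthy = rule.decision()

for code in [429] * 3:
    rule.record(code)
throttled = rule.decision()

for code in [403] * 10:
    rule.record(code)
halted = rule.decision()

print(healthy, throttled, halted)
```

A "stop" result should trigger a human review of whether the source is still worth collecting, rather than an automatic retry with different proxies.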

Privacy, Consent, And Compliance With Website Terms

Public text still lives inside legal and ethical boundaries. Teams treat scraped data as part of their compliance posture, alongside any internal datasets. They identify fields that can point to specific individuals, such as names or contact details, and restrict access to that layer of information. They map storage locations, retention rules, and access patterns to the regulations of the regions they cover.

Before any new source enters a pipeline, legal or governance staff review the platform’s terms of service and confirm that the intended analysis fits permitted uses, including any reuse in dashboards or presentations. Internally, teams keep a register of sources, purposes, approvals, and significant changes in scope. The safest sentiment systems treat data access rules as a design input from day one, not as a late review item.
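Restricting person-level fields can start with a small transformation between the raw layer and the analysis layer. The sketch below masks email addresses and phone-like numbers in the text and replaces the author name with a salted hash. The regular expressions are rough assumptions that will miss some formats and over-match others, and hashing is pseudonymisation rather than full anonymisation, so this complements a legal review and does not replace it.

```python
import hashlib
import re

# Rough patterns for illustration; real PII detection needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Mask email addresses and phone-like numbers in free text."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def to_analysis_record(record: dict, salt: str) -> dict:
    """Build the analysis-layer record: no author name, redacted text."""
    digest = hashlib.sha256((salt + record["author"]).encode("utf-8")).hexdigest()
    return {
        "author_key": digest[:12],  # stable pseudonym for counting distinct authors
        "text": redact(record["text"]),
        "source": record["source"],
    }


raw_record = {
    "author": "Jane Doe",
    "text": "Call +1 (555) 010-9999 or mail jane.doe@example.com about the refund.",
    "source": "forum",
}
safe_record = to_analysis_record(raw_record, salt="rotate-this-salt")
print(safe_record["text"])
```

Sentiment models and dashboards then read only the analysis-layer records, while the raw layer stays behind tighter access controls.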

“The true challenge isn’t building the first scraper; it’s building the system to strip away all the digital noise, so the sentiment model only ever sees pure, reliable customer truth.”

— Alex Yudin, Head of Web Scraping Systems

Data Accuracy And Bias In Sentiment Detection

Models have their own limitations, so teams need structured checks to ensure reliable outputs. Language and context create the first risk. Generic models often misread slang, irony, or technical language. Teams respond by testing on real samples from each audience, then refining preprocessing steps or switching models where needed.

Domain mismatch creates the second risk. Models trained on general web text may misinterpret medical, financial, or other specialist content. Fine-tuning on domain-specific data or selecting models built for similar text can reduce that gap.

Source mix introduces bias as well. If one channel dominates the collection, its tone and demographics shape the overall signal. Teams therefore diversify their sources and tag each record by origin so that analysts can see where sentiment originates.

Finally, metrics themselves can mislead. A single score per entity may appear clean, but it hides nuance and uncertainty. Teams treat sentiment as a trend, inspect ranges and confidence, and review high-impact topics manually. Used in that way, sentiment does not dictate decisions. It helps leaders determine where to focus and which issues warrant further investigation.
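The source-mix effect is easy to see with a few numbers. In the sketch below, the scores are invented: eight social posts lean negative and two reviews lean positive. A pooled average follows the dominant channel, while a per-source breakdown and a source-balanced average show the split.

```python
from collections import defaultdict
from statistics import mean


def pooled_mean(records: list) -> float:
    """Average over all records; the largest channel dominates."""
    return mean(r["score"] for r in records)


def per_source_means(records: list) -> dict:
    by_source = defaultdict(list)
    for r in records:
        by_source[r["source"]].append(r["score"])
    return {source: mean(scores) for source, scores in by_source.items()}


def balanced_mean(records: list) -> float:
    """Average of per-source averages; each channel counts once."""
    return mean(per_source_means(records).values())


# Invented scores: social is over-represented and more negative.
records = [{"source": "social", "score": -0.5}] * 8 + [{"source": "reviews", "score": 0.5}] * 2

pooled = pooled_mean(records)
balanced = balanced_mean(records)
breakdown = per_source_means(records)
print(f"pooled={pooled:.2f} balanced={balanced:.2f} breakdown={breakdown}")
```

Neither average is "the" right one; showing both next to the breakdown lets analysts see how much of the headline number comes from channel volume alone.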

Building A Scalable Sentiment Analysis Pipeline

Pilot projects often live in notebooks and ad-hoc scripts. Teams move from that stage to a durable service when they stabilize how data enters the system, how models evolve, and how the entire loop is monitored.

Data Ingestion And Preprocessing At Scale

Teams first stabilise how text arrives and how it gets prepared. They trigger scraping and API calls through schedulers or workflow tools, and split sources into separate jobs so a single failure does not block the entire flow. Scraping workers run in parallel across machines and regions, which keeps the collection predictable when the volume grows. Text cleaning and language detection sit in a shared library or service, so every source passes through the same preprocessing. Raw and cleaned data land in long-term storage with versioning and transparent partitions by date, source, and market. This layer maintains a stable scraping sentiment workflow as the number of sites, markets, and records increases.
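Isolating sources so that one failure does not block the rest can be sketched with a thread pool and a per-job error boundary. The three fetch functions are stubs invented for the example; in practice each would wrap a scraper or API client, and a workflow tool would usually handle scheduling and retries.

```python
from concurrent.futures import ThreadPoolExecutor


def run_source(name: str, fetch) -> dict:
    """Run one source job and capture its failure instead of raising."""
    try:
        return {"source": name, "ok": True, "records": fetch()}
    except Exception as exc:  # boundary: one bad source must not stop the others
        return {"source": name, "ok": False, "error": repr(exc)}


def run_all(jobs: dict, max_workers: int = 4) -> list:
    """Run every source job in parallel and collect one result per source."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(run_source, name, fetch) for name, fetch in jobs.items()]
        return [future.result() for future in futures]


# Stub fetchers standing in for real scrapers or API clients.
def fetch_reviews():
    return ["Great mattress", "Too firm for me"]


def fetch_forum():
    raise RuntimeError("HTTP 503 from forum")


def fetch_news():
    return ["Brand announces recall"]


results = run_all({"reviews": fetch_reviews, "forum": fetch_forum, "news": fetch_news})
for result in results:
    print(result)
```

The failed forum job is reported with its error while the other two sources still deliver records for the same run.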

Model Training And Evaluation Loop

Next, teams stabilize the modeling side so it can improve without disrupting production. They select one or two baseline sentiment models for key languages and agree on labels and metrics before deployment. Over time, they adapt these models with labelled examples from their own text streams, which align outputs with real customer language. Performance is regularly evaluated using fixed validation sets, and teams monitor for drift when products, campaigns, or topics change. New model versions roll out in small increments and are compared with the previous baseline before being fully released. This loop ensures consistent sentiment outputs while still allowing for gradual improvement.
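Comparing a candidate model with the current baseline on a fixed validation set can be reduced to one metric and one promotion rule. The sketch below uses macro-averaged F1, a common choice when classes are imbalanced; the labels, predictions, and the 0.02 minimum gain are illustrative assumptions.

```python
def macro_f1(gold: list, predicted: list) -> float:
    """Unweighted mean of per-class F1 scores over the classes present in gold."""
    scores = []
    for label in sorted(set(gold)):
        pairs = list(zip(gold, predicted))
        tp = sum(g == label and p == label for g, p in pairs)
        fp = sum(g != label and p == label for g, p in pairs)
        fn = sum(g == label and p != label for g, p in pairs)
        denominator = 2 * tp + fp + fn
        scores.append(2 * tp / denominator if denominator else 0.0)
    return sum(scores) / len(scores)


def should_promote(gold: list, baseline_pred: list, candidate_pred: list,
                   min_gain: float = 0.02) -> bool:
    """Promote only when the candidate beats the baseline by a clear margin."""
    return macro_f1(gold, candidate_pred) >= macro_f1(gold, baseline_pred) + min_gain


# Fixed validation labels and two sets of model outputs (all invented).
gold = ["pos", "neg", "neu", "pos", "neg", "neu"]
baseline_pred = ["pos", "neg", "pos", "pos", "neu", "neu"]
candidate_pred = ["pos", "neg", "neu", "pos", "neg", "pos"]

baseline_score = macro_f1(gold, baseline_pred)
candidate_score = macro_f1(gold, candidate_pred)
promote = should_promote(gold, baseline_pred, candidate_pred)
print(f"baseline={baseline_score:.3f} candidate={candidate_score:.3f} promote={promote}")
```

Because the validation set stays fixed, a change in the score reflects the model rather than a change in the data it was tested on.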

Automation For Monitoring And Retraining

Finally, teams tie everything into an automated cycle. Workflow tools connect ingestion, cleaning, modeling, and aggregation into a single, visible pipeline, allowing operators to view failures and timings in one place. Dashboards in BI tools expose key sentiment indicators, and alerts highlight sharp, damaging spikes or unusual silence in essential channels. Rules define when to refresh or adjust models, for example, after quality metrics drop or topic distributions shift, and data preparation for retraining becomes a repeatable job instead of an ad-hoc task. Cost and performance are continually monitored, allowing teams to adjust schedules, sampling, and model complexity as needed. A scalable pipeline behaves like a small internal product with clear inputs, predictable outputs, and controlled change.
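The two alert types mentioned above, sharp negative spikes and unusual silence, can be sketched as simple checks against recent history. The z-score threshold of 3, the 30% volume ratio, and the sample series are assumptions for the illustration; production alerting usually also accounts for seasonality and weekday effects.

```python
from statistics import mean, stdev


def negative_spike(history: list, today: float, z_threshold: float = 3.0) -> bool:
    """Flag today's negative share when it sits far above the recent norm."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    spread = stdev(history)
    if spread == 0:
        return today > history[-1]
    return (today - mean(history)) / spread >= z_threshold


def unusual_silence(volume_history: list, today_volume: int, min_ratio: float = 0.3) -> bool:
    """Flag a channel whose volume falls far below its recent average."""
    return bool(volume_history) and today_volume < min_ratio * mean(volume_history)


# Invented daily share of negative records over the past week.
negative_share = [0.20, 0.22, 0.19, 0.21, 0.20, 0.18, 0.21]
# Invented daily record counts for one channel.
daily_volume = [1000, 1100, 950]

spike_alert = negative_spike(negative_share, today=0.35)
normal_day = negative_spike(negative_share, today=0.22)
silence_alert = unusual_silence(daily_volume, today_volume=200)
print(spike_alert, normal_day, silence_alert)
```

A silence alert often points to a broken scraper or a changed page layout rather than a real drop in conversation, so it belongs on the same dashboard as pipeline health.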

Turning Web Data Into Actionable Sentiment Insights

“Sentiment data is not a historical report; it’s an early warning system. We focus on providing fast, clear signals so product and marketing teams can adjust campaigns and roadmaps before the problem becomes a crisis.”

— Olesia Holovko, CMO

For leadership, the value becomes apparent when sentiment is directly linked to budget shifts, roadmap updates, and risk decisions. For delivery teams, the value becomes evident when they replace manual collection and ad-hoc scripts with clear, automated workflows.

Examples From Review-Driven Sentiment Work

A consumer goods company in the sleep products sector utilized multi-market review scraping to compare its products with those of direct competitors. The pipeline collected ratings from major marketplaces and local brand stores, tracked monthly review growth, and measured the share of positive feedback by region. The analysis revealed a stable lead in several key markets and enabled the team to support a “category leader” position with data, rather than claims.

A pharmaceutical manufacturer used a similar approach to prepare for market expansion. The system scraped reviews from large retailers and thematic sources, normalised the texts, and applied sentiment and topic models to identify pain points and reasons for brand switching. The outputs fed into pitch decks that improved sales conversations. Within a quarter, the client reported higher win rates and far less time spent on manual research.

These cases demonstrate how structured review data and sentiment models enable teams to validate brand strength, shape positioning, and prioritize actions across markets.

GroupBWT designs and operates these sentiment pipelines as multi-source, compliant systems. The team covers intake, modelling, and delivery into the tools your teams already use.

Used thoughtfully, web scraping and sentiment analysis let organisations listen at scale, understand what people feel, and turn that awareness into concrete, well-timed action.

FAQ

What business outcomes can we expect from sentiment analysis pipelines?

Teams usually measure impact in four places:

- Revenue and conversion. Marketing sees an uplift in campaign performance and lead quality.

- Retention. Product and CX reduce churn by reacting earlier to negative trends.

- Brand risk. Comms teams cut time-to-detection for issues that damage reputation.

- Operational efficiency. Analysts spend less time collecting data and more time on insight.

Leaders should request a before-and-after view on specific KPIs, such as conversion rate, churn, NPS, ticket volume, or crisis resolution time.

How long does it take to set up a scalable pipeline?

Most organisations move through three phases:

- Prototype (2–4 weeks). One or two sources, basic models, and manual dashboards.

- MVP (6–10 weeks). Stable collection, cleaning, and scoring for a defined market.

- Scale-up (3–6 months). Multi-country, multi-language governance, and integrations with BI or CRM.

Timeframes depend on data access, compliance review, and existing infrastructure.

Are there concrete examples of KPI improvements?

Typical patterns from production projects:

- E-commerce and apps. Product teams cut negative review volume and improve rating averages.

- Telecom and utilities. CX teams shrink handling time and complaint queues on key topics.

- Finance and trading. Research teams incorporate sentiment signals to refine entry and exit timing.

Decision-makers should request case data that links sentiment work to measurable KPI shifts, rather than generic success stories.

What does ongoing maintenance usually require?

Costs fall into four buckets:

- Infrastructure. Compute, storage, proxies, and any managed NLP services.

- Engineering. Time for source changes, scraper updates, and monitoring.

- Data science. Time for model evaluation, adaptation, and retraining.

- Compliance and governance. Reviews of new sources and policy updates.

Total cost depends on the number of sources, refresh frequency, and coverage across markets and products.

How do we choose between in-house solutions, no-code solutions, and external vendors?

Each option suits a different situation:

- In-house builds are suitable when engineering capacity is available and data is strategic.

- No-code tools are ideal for small teams that need quick wins and limited scope.

- Specialist vendors fit when you need scale, compliance, and multi-market coverage.

Leaders should evaluate options against five key criteria: data coverage, compliance posture, integration effort, internal skills, and total cost of ownership over three years.