Most organisations still treat training data as a one-time input. They collect a set, train a model, deploy a pilot, and move on.

When data lacks ownership, structure, and refresh cycles, models drift, and behaviour degrades. Teams compensate with manual review, and this manual load grows linearly with every inconsistency in the underlying datasets.

This is why the guide moves from data issues to the infrastructure required to prevent them. The goal is simple: a stable, predictable, and trustworthy AI system built on controlled data.

“The systems we build unify messy sources, expose transformations end-to-end, and stay resilient as source platforms change. The result: operational certainty, not data points.”

— Eugene Yushenko, CEO

Recognizing why upstream data failures keep breaking AI and how GroupBWT fixes them is the first step toward building a self-correcting, resilient pipeline.

Understanding AI Training Data

Training data teaches a model how to read instructions, recognise patterns, and respond consistently. A model learns from examples, not from assumptions.

The quality of the data used to train artificial intelligence often shapes outcomes more strongly than model size or architecture. Strong datasets support accuracy, safety, and reliability.

What Qualifies as High-Quality Training Data

High-quality training data reflects real use cases. It covers typical flows and rare events, follows common naming standards across sources, and avoids duplicates and noise. This reduces confusion for the model and increases clarity during training.

Traits of Strong AI Training

| Trait | Description | Value to Model |
| --- | --- | --- |
| Coverage | Includes common and rare cases | Stable behaviour |
| Structure | Uses consistent fields | Easier parsing |
| Metadata | Stores context and source | Better decisions |
| Cleanliness | No duplicates or broken text | Fewer errors |
| Traceability | Includes history and version | Safe updates |

Why AI Training Data Matters for Safety and Reliability

AI models interpret the world through the data they are trained on.

Training data:

- Shapes how the model understands meaning

- Defines which behaviours the system treats as valid

- Limits shortcuts and biases in reasoning

- Stabilises responses across similar inputs

High-quality data for AI training reduces harmful mistakes in sensitive scenarios and cuts the time needed for corrections, audits, and incident reviews. Weak datasets have the opposite effect: more errors, more escalations, and lower trust.

Accuracy starts with controlled datasets. Balanced coverage across use cases leads to more predictable model behaviour.

GroupBWT and the Data Foundation Behind Predictable AI

GroupBWT designs and maintains end-to-end data pipelines that keep AI accurate, governable, and aligned with real operations.

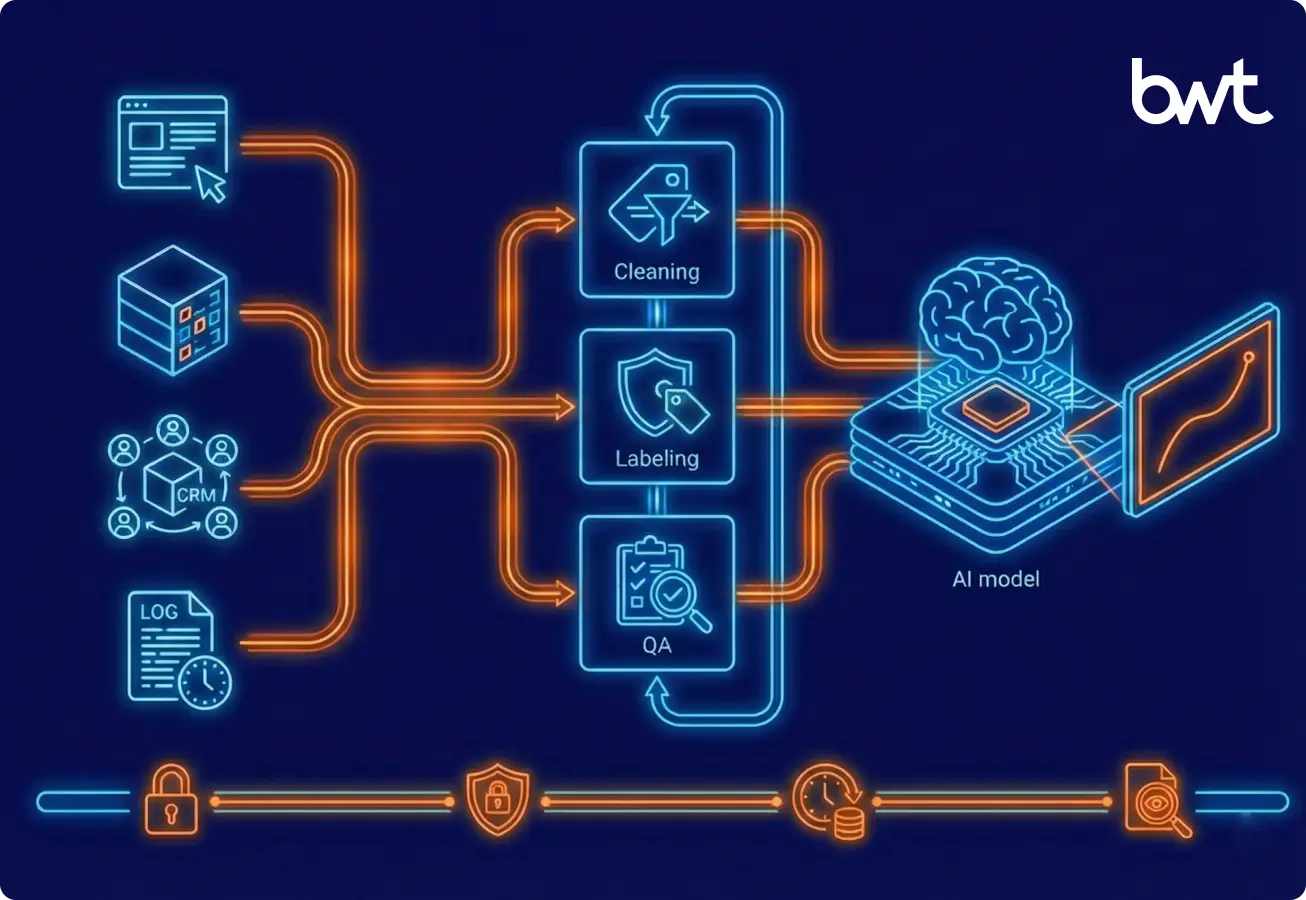

Sourcing and ingestion

We collect structured and unstructured data from the web, enterprise systems, APIs, and third-party providers.

Cleaning, normalisation, and enrichment

We convert raw inputs into consistent records. Teams remove duplicates, unify schemas, enrich metadata, and prepare slices for training, validation, and testing.

Human-guided labeling

We run manual, assisted, and automated annotation workflows. Clear guidelines and staged reviews preserve label quality across large volumes.

Quality and governance

We enforce access control, lineage tracking, redaction, region-based storage, and audit logging. These controls keep datasets compliant and traceable.

Deepening traceability

Lineage allows teams to answer critical operational questions:

- Which exact records influenced a model’s incorrect output?

- What transformation logic produced a specific feature?

- Which version of a dataset was used during training?

- Did masking, filtering, or cleaning steps introduce a gap?

A strong lineage system captures:

- original source,

- timestamps,

- transformations applied,

- versions of scripts and rules,

- annotator IDs,

- quality checks,

- final dataset version used for training.
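
The fields listed above can be kept together as one record per training example. The following is a minimal sketch, assuming a flat schema; the class name, field names, and sample values are illustrative and do not describe any specific lineage tool.

```python
from dataclasses import dataclass, asdict
import hashlib
import json


@dataclass(frozen=True)
class LineageRecord:
    """One lineage entry for a single training record (illustrative schema)."""
    source: str              # original source, e.g. a system or feed name
    collected_at: str        # ISO 8601 timestamp of collection
    transformations: tuple   # ordered names of the steps applied
    rules_version: str       # version of scripts and rules
    annotator_id: str        # who labeled the record
    quality_checks: tuple    # names of the checks the record passed
    dataset_version: str     # final dataset version used for training

    def fingerprint(self) -> str:
        """Stable SHA-256 hash of the entry, usable as an audit key."""
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


# Hypothetical example values.
record = LineageRecord(
    source="support-system/ticket-export",
    collected_at="2025-01-15T00:00:00+00:00",
    transformations=("strip_html", "mask_pii", "dedupe"),
    rules_version="rules-1.4.0",
    annotator_id="annotator-017",
    quality_checks=("schema_valid", "double_label_agreement"),
    dataset_version="support-intents-v3",
)
audit_key = record.fingerprint()
```

Because the record is immutable and hashed from sorted keys, two entries with the same content always produce the same audit key, which makes silent edits to lineage easy to detect.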

Dataset productisation

We turn datasets into managed assets: versioned, documented, refreshable, and owned by clear teams. This prevents drift and avoids regressions after model updates.

Model-alignment support

We prepare domain-specific data for fine-tuning, retrieval systems, and custom models. Our approach helps companies choose the right path based on how their data behaves. Retrieval-augmented generation (RAG) reduces exposure because documents do not enter model weights. The system retrieves relevant passages at inference time, protecting source content during updates, limiting retention, and keeping sensitive material outside fine-tuned checkpoints.

Governance still matters: when teams run fine-tuning or feedback-based training, they must exclude restricted documents from the training set. Hybrid paths combine retrieval with selective fine-tuning when domains require both stability and specialised behaviour.

GroupBWT focuses on the part of the AI stack that carries long-term value: data. Models update fast. Controlled datasets preserve knowledge, stability, and trust.

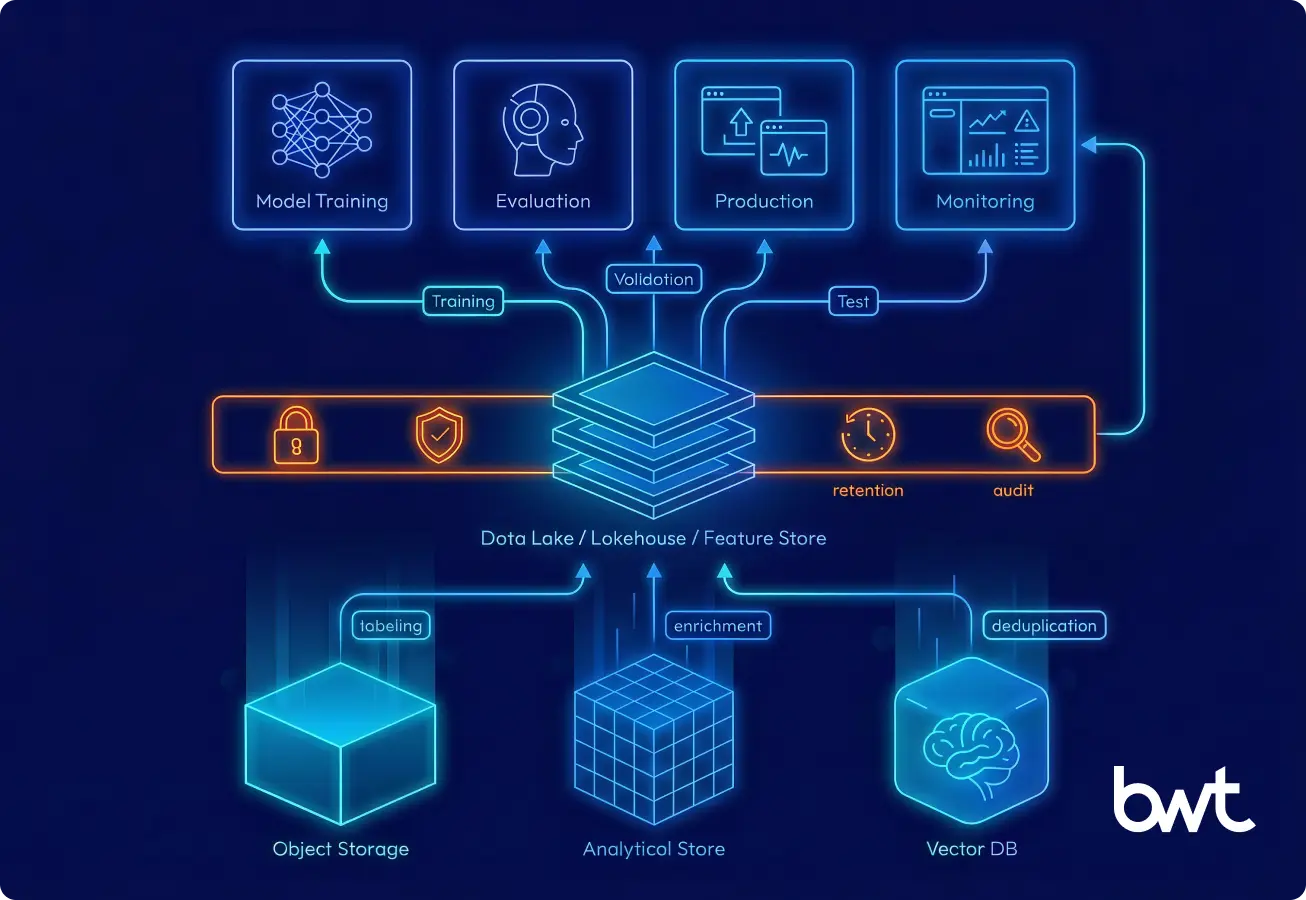

How Training, Validation, and Test Datasets Differ

Each dataset supports a distinct role in the lifecycle.

- The training set teaches patterns.

- The validation set guides adjustments and compares variants.

- The test set measures final performance and guards against overfitting.

This separation provides an honest evaluation instead of optimistic internal scoring.

Table: Dataset Roles

| Dataset | Purpose | Refresh Frequency |
| --- | --- | --- |
| Training | Learning patterns | Regular |
| Validation | Tuning parameters | Regular |
| Test | Final accuracy check | Less frequent |

Clear separation between these datasets supports more precise evaluation and more stable deployments.
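
One way to keep the three sets separate across refreshes is to assign each record to a split by hashing its ID, so a record never moves between sets when the dataset is rebuilt. This is a sketch under assumptions: the 80/10/10 ratio and the `ticket-` ID format are illustrative, not values from this guide.

```python
import hashlib


def assign_split(record_id: str, train: float = 0.8, validation: float = 0.1) -> str:
    """Map a record ID to 'train', 'validation', or 'test' deterministically."""
    digest = hashlib.sha256(record_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # uniform value in [0, 1)
    if bucket < train:
        return "train"
    if bucket < train + validation:
        return "validation"
    return "test"


# Hypothetical record IDs.
splits = {rid: assign_split(rid) for rid in (f"ticket-{i}" for i in range(1000))}
```

A random shuffle would also work for a one-off split, but a hash-based assignment stays stable when new records arrive, which helps prevent test examples from leaking into later training runs.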

Feature Store, Training, and Production: Keeping Features Consistent Across the Pipeline

The best data solutions for AI model training integrate feature stores to ensure that the logic used during development matches exactly what is deployed in production.

During training, the Feature Store provides:

- the same transformations every time,

- consistent entity IDs,

- the same time windows and aggregations,

- repeatable and versioned feature logic.

The model learns on stable, clean, and well-defined features.

During production, the Feature Store computes features with the same rules:

- identical preprocessing steps,

- identical joins and clear, versioned feature definitions,

- identical time windows,

- identical metadata fields.

This creates feature parity. The model sees the same structure it learned from.

No silent drift. No sudden behaviour changes. No hidden errors caused by different scripts in training and production.

A Feature Store makes the entire pipeline reproducible and keeps the model reliable over time.
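
The core idea of feature parity can be shown without any feature store product: one versioned function defines the feature, and both the training path and the serving path call it. The sketch below is a simplification; the feature name, version tag, and seven-day window are hypothetical.

```python
from datetime import datetime, timedelta

FEATURE_VERSION = "orders_7d_v1"  # hypothetical version tag
WINDOW = timedelta(days=7)


def orders_last_7d(order_times: list[datetime], as_of: datetime) -> int:
    """Count orders in the 7 days up to and including `as_of`."""
    start = as_of - WINDOW
    return sum(1 for t in order_times if start < t <= as_of)


def build_training_row(customer_id: str, order_times: list[datetime], label_time: datetime) -> dict:
    # Offline path: compute the feature as of the historical label time.
    return {"customer_id": customer_id, "feature_version": FEATURE_VERSION,
            "orders_7d": orders_last_7d(order_times, label_time)}


def build_serving_row(customer_id: str, order_times: list[datetime], now: datetime) -> dict:
    # Online path: same function and window, evaluated at request time.
    return {"customer_id": customer_id, "feature_version": FEATURE_VERSION,
            "orders_7d": orders_last_7d(order_times, now)}


history = [datetime(2025, 1, 1), datetime(2025, 1, 5), datetime(2025, 1, 20)]
training_row = build_training_row("c1", history, datetime(2025, 1, 6))
serving_row = build_serving_row("c1", history, datetime(2025, 1, 6))
```

Passing the reference time explicitly also keeps the training path from seeing events that happened after the label time.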

“My strategy is to translate complex business needs into a cloud-native infrastructure that holds when traffic spikes, APIs drift, or new LLM models evolve. I ensure technical certainty from day one.”

— Dmytro Naumenko, CTO

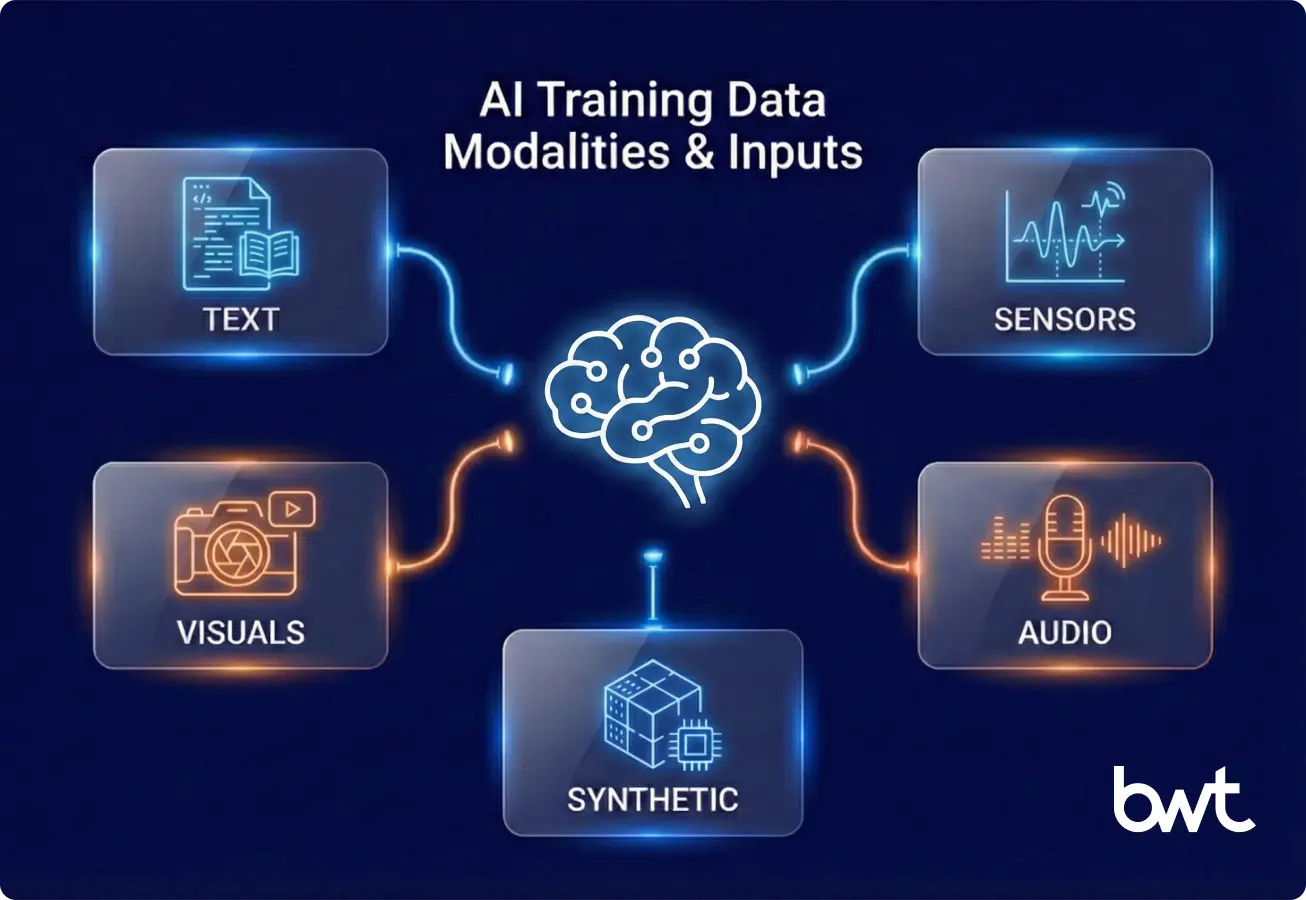

Types of Data Used for AI and Generative AI

AI systems learn from several kinds of information. Each format captures a different slice of reality. Clear structure and detailed context increase reliability and reduce surprises in production.

Overview of Data Types

Table: Data Types and Primary Use Cases

| Data type | Example sources | What models can do with it |
| --- | --- | --- |
| Text | Emails, CRM notes, manuals | Search, routing, classification, summarisation |
| Images | Product photos, diagrams | Object detection, defect checks, visual search |
| Video | CCTV, shop-floor recordings | Motion tracking, action recognition, safety alerts |
| Sensor data | IoT streams, GPS, LiDAR | Mapping, route prediction, anomaly detection |
| Audio | Support calls, voice notes | Speech-to-text, sentiment, and intent extraction |
| Synthetic | Simulated records and scenarios | Edge-case coverage, privacy-safe experimentation |

Why These Data Types Matter for AI

AI models do not learn in the abstract. They learn from concrete traces of how a business operates. Each data type shapes a different capability. Capturing these subtleties takes careful processing: an experienced NLP service provider, for example, can help ensure that raw audio is processed correctly to enable semantic understanding.

Model quality depends less on volume and more on alignment. If training data reflects your products, customers, and workflows, AI assistants, copilots, and generative tools behave in ways that match real operations. If training data comes from unrelated contexts, models respond with plausible but unhelpful output.

Data type decisions also influence risk. Poorly governed text or audio data increases the risk of exposing confidential information. Weak metadata on images and sensor streams limits traceability. A clear view of data types helps leaders decide which datasets can safely feed AI systems and which require extra controls, anonymisation, or synthetic substitutes.

Text, Documents, and Unstructured Enterprise Content

Enterprise text usually represents the largest and most valuable pool of training data. It records decisions, policies, processes, and customer intent across the organisation.

Typical sources include:

- CRM notes and ticket comments.

- Email threads and chat logs.

- Support transcripts and knowledge articles.

- Product descriptions, manuals, and contracts.

This material teaches models how your company speaks, documents work, and frames problems. As a result, it improves:

- Enterprise search.

- Internal assistants and copilots.

- Knowledge retrieval and routing.

- Workflow and ticket automation.

The main problems arise before training begins. Content sits in many tools, formats, and languages.

Teams need to find it, clean it, and control who can use which parts.

Table: Challenges and Solutions for Text Data

| Challenge | Impact on models | Practical solution |
| --- | --- | --- |
| Duplicate notes | Repeated patterns and skewed signals | Deduplication rules and filters |
| Mixed formatting | Parsing errors and lost context | Normalisation templates and schemas |
| Sensitive fields | Legal and compliance exposure | Redaction, masking, and access control |

Image, Video, and Sensor Datasets

Visual and physical-world data help models link digital records to real-world events. Such datasets support product recognition, route optimisation, people-flow analysis, inventory monitoring, and training data for generative models that create or modify images.

Typical inputs:

- Product and catalog photos.

- CCTV and shop-floor video.

- LiDAR point clouds from devices or vehicles.

- GPS tracks and IoT sensor streams.

These datasets support:

- Product and shelf recognition.

- People-flow and traffic analysis.

- Inventory checks and planogram control.

- Training data for image and video generation.

Metadata quality defines how useful these assets become. Accurate timestamps, device identifiers, camera angles, and location markers link every frame or reading to a real event in time and space. Without this context, models struggle to learn meaningful patterns.

Audio and Speech Data

Audio adds information that plain text often misses. Tone, hesitation, and emphasis reveal intent and emotional state.

Sources include:

- Recorded support and sales calls.

- Voice notes from field staff.

- Hotline and IVR recordings.

- Voice commands in products.

Companies use these datasets to:

- Transcribe calls.

- Measure sentiment and stress.

- Detect topics and escalation triggers.

- Extract actions and commitments from conversations.

Audio usually contains names, account details, and other personal identifiers. Many organisations treat it as restricted data. Effective handling requires strict access rules, retention limits, and storage policies that match regional regulations.

Synthetic and Real-World Data: When to Use Each

Most teams rely on a blend of real and synthetic data.

- Real data reflects actual user behaviour, natural language, and real mistakes.

- Synthetic data covers rare situations and protects privacy in sensitive domains.

Teams generate synthetic data when they need to:

- Model scenarios that rarely occur in production, such as severe complaints, rare failures, or fraud patterns.

- Test systems under stress without exposing real customer records.

- Train and validate models where contracts or regulations limit the use of personal data.

Synthetic data expands coverage but never fully replicates real interactions. Language may sound too clean, error patterns may compress, and rare behaviours may become over-represented. If teams rely on synthetic data without regular checks against real samples, they can introduce systematic bias into their models.

Table: Choosing Synthetic vs Real Data

| Scenario | Recommended source | Reason |
| --- | --- | --- |
| Common user flows | Real data | Captures natural variety and mistakes |
| Rare edge cases | Synthetic | Scales unusual situations efficiently |
| Privacy-sensitive work | Synthetic | Limits the exposure of personal fields |
| New or evolving products | Mix | Combines realism with fast iteration |

Data Requirements for Generative AI and Large Language Models

High-performance large language models rely on diverse training data that covers varied tones, instructions, and structural patterns. Each record needs clear ownership, metadata, and definitions. A generative AI development company can help maintain these properties, which keeps generation safe, avoids guesswork, and supports audit needs.

Key requirements include:

- Varied tone and phrasing

- Clear internal instructions

- Diversity across domains

- Strong structural patterns

- Transparent licensing and provenance

- Aligned input-output examples

These conditions support predictable generation and reduce the need for later corrections.

How to Collect Data for AI Training

Data collection shapes the entire AI data pipeline. Correct sources improve accuracy and reduce risk. Non-compliant or low-quality sources lead to long-term exposure and costs.

Web Scraping and Automated Large-Scale Data Extraction

Many teams use web scraping as a data source for AI training when they need product listings, customer reviews, company profiles, price histories, or job posts.

A compliant app and web scraping pipeline:

- Follows robots.txt and similar controls

- Respects local regulations and sector rules

- Records data provenance

- Captures structured fields where possible

- Logs time, URL, and method
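
The robots.txt check and the provenance log from the list above can be sketched with Python's standard `urllib.robotparser`. This is an illustration only: the robots.txt content is inlined rather than fetched, and the crawler name and URLs are hypothetical.

```python
from datetime import datetime, timezone
from urllib.robotparser import RobotFileParser

# Example robots.txt content; a real pipeline would fetch it from the target site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

USER_AGENT = "example-data-bot"  # hypothetical crawler name


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a rule set."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def plan_fetch(parser: RobotFileParser, url: str, method: str = "GET") -> dict:
    """Return a provenance entry recording time, URL, method, and permission."""
    return {
        "url": url,
        "method": method,
        "allowed": parser.can_fetch(USER_AGENT, url),
        "checked_at": datetime.now(timezone.utc).isoformat(),
    }


rules = load_rules(ROBOTS_TXT)
allowed_entry = plan_fetch(rules, "https://example.com/products/1")
blocked_entry = plan_fetch(rules, "https://example.com/private/report")
```

Recording the decision even for blocked URLs gives auditors evidence that the pipeline checked permissions before collecting anything. robots.txt is only one control; local regulations and site terms still apply.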

Scraping support from top data extraction service companies helps with research, enrichment, competitive analysis, and training datasets that depend on live market signals.

Advanced methods, such as ChatGPT web scraping, can further enhance this process by intelligently parsing complex, unstructured web content into training-ready formats.

Utilizing automated data scraping AI tools ensures that these live signals are captured continuously, keeping the model’s knowledge base fresh and relevant.

Table: Scraping Use Cases

| Use Case | Example | Value for AI |
| --- | --- | --- |
| Pricing | Retail sites | Train pricing and promo models |
| Text | News, reviews | Sentiment and topic analysis |
| Product data | Catalogues | Product match and classification |

Enterprise Data Ingestion: PDFs, Email, CRM, Customer Logs

Enterprise data reflects actual work. PDFs, CRM notes, billing records, support tickets, analytics logs, and policy documents carry domain-specific rules and decision logic.

Ingestion converts these formats into structured training data for AI models. That process often includes:

- Extracting text from PDFs and images

- Parsing email threads and headers

- Resolving entities such as customers and products

- Structuring logs and events

- Attaching metadata such as owner, region, and product line

These steps create controlled datasets that remain grounded in the business.

Public Datasets, APIs, and Open-Source Sources

Public datasets support prototypes, benchmarking, and discovery. Teams use them to run early experiments before internal datasets set the main direction.

APIs for news, finance, weather, open government records, and web text provide breadth. Over time, internal data for AI training becomes increasingly important because it encodes company-specific context and decision-making.

Most public datasets require extensive normalisation before they support downstream AI training. Schemas differ across sources, identifiers conflict, and metadata often arrives incomplete.

This is the stage where GroupBWT’s ingestion and unification pipelines operate: we convert broad, messy sources into structured, traceable slices that link to enterprise systems and training workflows.

Human-Generated and Crowdsourced Data

Human annotation adds clarity in areas where models hesitate. Typical tasks include classification, ranking, entity recognition, summarisation, and evaluation of model outputs.

These activities provide precise training data for AI. Human labelers design examples and corrections that raw logs cannot offer on their own.

Clear instructions and examples reduce label noise and preserve long-term model quality.

Data Acquisition from Third-Party Providers

Third-party providers deliver structured datasets that are costly to collect in-house. Examples include firmographics, product feeds, historical reviews, and complex graph datasets.

When evaluating data solutions for AI model training, teams verify coverage, quality, freshness, and licensing before committing to a provider and integrating the data into training pipelines.

Ensuring Compliance and Permissions During Data Collection for AI Training

Compliance defines which data you may use and under which conditions. Data must follow laws, terms of service, contracts, and internal policies.

Strong practices include:

- Documented legal basis for collection and use

- Separation of sensitive fields

- Regular audits and access logs

- Clear user notices where required

- Region-based storage when laws demand it

High-profile GDPR cases show that public visibility does not automatically grant a right to collect or reuse content for AI training.

Table: Compliance Controls

| Control | Purpose | Example |
| --- | --- | --- |
| Consent tracking | Permission evidence | Email logs |
| Redaction | Remove identifiers | Names, emails |
| Access rules | Restrict data views | Role-based access |
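
As an illustration of the redaction control, the sketch below masks email addresses and phone-like numbers with regular expressions. The patterns are deliberately simple assumptions: they do not detect personal names, and the phone pattern can also match other long digit sequences, so production redaction usually combines rules like these with entity recognition and manual review.

```python
import re

# Simplified patterns, for illustration only.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


# Hypothetical sample message.
sample = "Contact jane.doe@example.com or +1 (555) 010-9999."
redacted = redact(sample)
```

Using typed placeholders instead of deleting the text keeps the sentence structure intact, so the model still learns that a contact detail appeared at that position.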

Preparing and Labeling Data for AI Models

Preparation converts raw content into usable datasets. Labeling defines meaning. Together, these steps outline how to prepare data for training AI and lay the foundation for model behaviour. If you are starting from scratch, understanding the technical steps of how to make a dataset will help you avoid common pitfalls in structure and formatting.

Data Cleaning, Normalization, Deduplication

Data cleaning removes noise. Normalization enforces a standard structure. Deduplication keeps slices balanced.

Teams also standardise formats, add identifiers, and track every change with version control. These actions set the foundation for reliable data used for AI training.

Table: Before vs After

| Step | Before | After |
| --- | --- | --- |
| Cleaning | HTML noise | Plain text |
| Normalisation | Mixed date formats | Unified schema |
| Deduplication | Duplicate records | Single record |
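
The three steps in the table can be sketched with the standard library alone. The accepted date formats and the record fields (`text`, `date`) are assumptions made for this example; a real pipeline would define them per source, especially where day-first and month-first dates can be confused.

```python
import html
import re
from datetime import datetime

TAG = re.compile(r"<[^>]+>")
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")  # assumed input formats


def clean_text(raw: str) -> str:
    """Strip HTML tags, decode entities, and collapse whitespace."""
    no_tags = TAG.sub(" ", raw)
    return " ".join(html.unescape(no_tags).split())


def normalise_date(value: str) -> str:
    """Convert a date in any assumed format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognised date format: {value!r}")


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record for each (lower-cased text, date) pair."""
    seen, unique = set(), []
    for rec in records:
        key = (rec["text"].lower(), rec["date"])
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


# Hypothetical raw records: the same note captured twice in different formats.
raw = [
    {"text": "<p>Refund &amp; return</p>", "date": "15/01/2025"},
    {"text": "refund & return", "date": "2025-01-15"},
]
prepared = deduplicate(
    [{"text": clean_text(r["text"]), "date": normalise_date(r["date"])} for r in raw]
)
```

Order matters here: deduplication runs after cleaning and normalisation, because the two raw records only become recognisable as duplicates once their formats match.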

Annotation Approaches: Manual, Assisted, Automated

Teams usually follow three approaches:

- Manual labeling, where humans control every label and example

- Assisted labeling, where a model suggests labels and humans refine

- Automated labeling, where systems label large volumes with audits on samples

Each path shapes speed, cost, and consistency of data labeling for AI model training.

How to Set Labeling Guidelines for Consistency

Labeling guidelines align annotators and maintain the coherence of the training data. Good guidelines define:

- Labels and their meanings

- Positive and negative examples

- Borderline cases and how to treat them

- Rules for ambiguous data

- Revision and escalation policies

Clear standards ensure data used for AI training is consistent across large teams and long projects.

To prevent model drift and ensure consistent behavior, the labeling of training data for AI must follow precise guidelines that define positive examples and edge cases.

Human-in-the-Loop Training Pipelines

Human-in-the-loop (HITL) pipelines connect user feedback with future training.

A typical loop works as follows:

- Users interact with the model and edit outputs.

- Systems log corrections and decisions.

- Annotators review samples and create structured labels.

- Datasets are updated with new patterns and rules.

- The next model version learns from this refined set.

This practice aligns with the main steps for training AI models using human-labeled data.

Quality Control and Multi-Stage Validation Workflows

Quality control protects long-term accuracy. Mature teams combine sampling, double labeling, disagreement analysis, anomaly detection, and slice-level reviews.

These steps move QA into the pipeline instead of treating it as a final gate.
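
Double labeling and disagreement analysis can be quantified with Cohen's kappa, which measures agreement between two annotators after correcting for chance. The sketch below is one possible check, not a description of any particular QA tool; the labels and ticket IDs are hypothetical.

```python
from collections import Counter


def cohen_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set.")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


def disagreements(ids: list[str], labels_a: list[str], labels_b: list[str]) -> list[str]:
    """Return the IDs where the two annotators chose different labels."""
    return [i for i, a, b in zip(ids, labels_a, labels_b) if a != b]


annotator_a = ["billing", "billing", "login", "login"]
annotator_b = ["billing", "login", "login", "login"]
kappa = cohen_kappa(annotator_a, annotator_b)
to_review = disagreements(["t1", "t2", "t3", "t4"], annotator_a, annotator_b)
```

In this example the annotators agree on three of four tickets, but half of that agreement is expected by chance, so kappa is 0.5 and ticket `t2` goes to review.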

Reducing Data Bias and Balancing Datasets

Bias appears when some segments dominate, while others remain underrepresented. Teams monitor distributions, enrich missing slices, and adjust weighting.

These habits align with best practices for training AI on proprietary data and support fair and stable decisions.
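
One common way to adjust weighting is to weight each class inversely to its frequency, so under-represented segments count more during training. The sketch below uses the formula n_samples / (n_classes * class_count), the same heuristic scikit-learn documents for `class_weight="balanced"`; the label names are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(labels: list[str]) -> dict[str, float]:
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {label: n / (k * count) for label, count in counts.items()}


# Hypothetical label set: fraud cases are rare compared with billing cases.
labels = ["billing"] * 8 + ["fraud"] * 2
weights = inverse_frequency_weights(labels)
```

With these labels, `billing` receives a weight of 0.625 and `fraud` a weight of 2.5. Weighting changes how much each example counts; it does not add information, so very small slices still need enrichment with new examples.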

Data Infrastructure for AI Training

Reliable AI systems need a predictable data environment. Data flows must stay searchable, traceable, and secure. This section describes how teams design storage and governance structures to support long-term AI work.

Choosing the Right Storage: Object Storage, Vector Databases, Hybrid Solutions

Storage strategy defines how teams access and reuse data for AI.

- Object storage holds raw documents, PDFs, images, and logs.

- Analytical stores retain structured tables for reporting and model preparation.

- Vector databases store embeddings for semantic search and retrieval-based systems.

A hybrid approach often works best because it balances cost, performance, and discoverability. Teams can move records between stages without breaking lineage.

Table: Storage Options and Purpose

| Storage Type | What It Stores | When It Helps |
| --- | --- | --- |
| Object Storage | Raw files | Early ingestion, archival |
| Analytical Store | Structured tables | Cleaning, preparation |
| Vector DB | Embeddings | Retrieval and semantic tasks |
| Hybrid | Mix of all | Flexible pipelines |

Data Lakes, Data Lakehouse, and Feature Stores

Each layer plays a specific role in AI training data.

- The data lake serves as a repository for raw data.

- The lakehouse adds governance, schema, and repeatable analytics.

- The feature store holds curated, ready-to-use features for model training and inference.

Clear separation simplifies versioning and updates. When new data arrives, teams update each layer in a controlled way.

Security, Governance, and Compliance Requirements

Security controls access. Governance protects integrity. Together, they form the base of a controlled data environment.

Key practices include:

- Identity and role management

- Encryption in transit and at rest

- Regional data zones where they are required

- Retention and deletion policies

- Lineage records and change history

- Data catalogues and ownership records

These controls support legal reviews, customer questions, and internal risk checks. They also shorten approval cycles when teams need to use specific data.

Table: Core Governance Controls

| Area | Purpose | Example |
| --- | --- | --- |
| Access | Limit exposure | Role-based permissions |
| Retention | Manage lifecycle | Time-based deletion |
| Lineage | Trace decisions | Dataset version logs |
| Quality | Assure consistency | Validation scripts |

Scalability Considerations for Large Training Datasets

Growing datasets stress compute, storage, and scheduling resources. Planning needs to cover:

- Dataset volume and growth rate

- Training frequency and retraining policies

- Parallel workloads and project load

- Artifact versioning and registry strategy

- Network throughput and data movement costs

A scalable pipeline prevents bottlenecks when new projects start and keeps AI training data expansion predictable. Establishing a solid big data pipeline architecture early on allows teams to handle these increasing volumes without refactoring their entire ingestion system.

How a Raw Support Log Becomes a Training Dataset for a Chatbot

A support log enters the pipeline as unstructured text. It contains timestamps, customer messages, agent notes, metadata, and sometimes attachments. In its raw form, it cannot be used for training.

- Ingestion pulls the log from the support system through an API. The pipeline normalises timestamps, extracts text from attachments, removes HTML noise, and stores the results as structured JSON.

- Cleaning and normalisation fix formatting issues, remove duplicates, unify date and text formats, and mask personal data such as names and email addresses. After this step, each support ticket becomes a clean, standardised record.

- Semantic structuring splits the conversation into message turns, identifies intent candidates, extracts sentiment, and adds metadata such as product type or language. This prepares the ticket for labeling.

- Labeling assigns the correct intent, issue type, recommended response, and policy flags. Assisted labeling speeds up the easy cases, while human annotators handle complex ones.

- Quality checks validate the intent-response pairs, detect conflicts, analyse edge cases, and record complete lineage: who labeled it, which rules were applied, and which version of the processing pipeline was used.

- Dataset splitting creates training, validation, and test datasets.

- Model training uses these structured examples to teach the chatbot how to answer. Evaluation checks accuracy, answer quality, policy compliance, escalation rate, and hallucination risks. This structured approach is critical when deploying AI for industries like healthcare or finance, where the margin for error in chatbot responses is virtually zero.

- Production monitoring tracks new phrasing, unusual patterns, user edits, and misclassifications. These signals return to the pipeline, generating new examples, creating a continuous improvement loop.

This example shows how a noisy support log becomes high-quality training material through structure, cleaning, labeling, and governance.

Training Proprietary AI Models with Internal Data

Internal data reflects the language, rules, and decisions unique to an organisation. When teams manage this data in a structured way, it becomes a core source of advantage.

How to Prepare Organisation-Specific Data for AI Models

Preparation follows several steps that align content with real tasks:

- Discover systems, owners, and data flows.

- Classify records by sensitivity and use case.

- Exclude restricted or unsafe fields.

- Clean and normalise formats.

- Add task-specific labels or slices.

- Register datasets in catalogues with clear ownership.

These steps generate training data that aligns with actual workflows and policies. Each dataset becomes a product with an owner, quality rules, and refresh cycles.

Handling Confidential, Regulated, or Restricted Data

Some internal content requires strict controls.

Teams:

- Isolate workloads in protected zones

- Assign permissions based on role and purpose

- Redact identifiers and sensitive attributes

- Log exports, joins, and access

- Enforce region-specific restrictions

These protections build trust with legal, compliance, and security teams and align data used for AI training with industry rules and partner contracts.

Privacy-Preserving Techniques: RAG, Masking, Synthetic Data

Privacy-preserving techniques reduce exposure while maintaining performance.

- Retrieval-augmented generation (RAG) pulls relevant text without adding sensitive documents to the model weights.

- Masking replaces names, identifiers, and attributes in training data.

- Synthetic data simulates behaviour when the direct use of real data poses a high risk.

A careful combination of these methods increases flexibility and reduces risk.
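
The retrieval step in RAG can be illustrated with a toy example: documents stay in a store, the most relevant one is selected at query time, and only that passage is placed in the prompt. The word-count "embedding" below is a stand-in assumption for a trained embedding model and a vector database, and the documents are invented for the example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: dict[str, str], top_k: int = 1) -> list[str]:
    """Return the IDs of the documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(documents[d])), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, documents: dict[str, str]) -> str:
    """Place only the retrieved passages in the prompt; nothing enters model weights."""
    context = "\n".join(documents[d] for d in retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical policy snippets.
docs = {
    "refunds": "Refunds are issued within 14 days of a return request.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}
best = retrieve("How long do refunds take?", docs)
prompt = build_prompt("How long do refunds take?", docs)
```

Because the documents live outside the model, removing or updating a record in the store changes what the system can retrieve without retraining.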

When to Fine-Tune, When to Use RAG, and When to Train from Scratch

The choice depends on task complexity, internal skills, and the availability of AI training data.

- Fine-tuning fits when a base model already understands the domain and needs alignment.

- RAG fits when knowledge changes often or must remain local.

- Training from scratch is appropriate when the domain differs significantly from public language or when the data is highly specialised and abundant.

Each path influences storage, refresh cycles, and governance demands.

Best Practices for Building AI Training Pipelines

A sound pipeline enables continuous updates rather than single projects. This improves quality, reduces manual intervention, and keeps models aligned with operations.

Workflow Automation

Automation schedules and coordinates key stages:

- Regular ingestion

- Cleaning and enrichment

- Labeling and review

- Publishing of curated datasets

- Model training and evaluation

Teams reduce surprises and data-entry errors during training by placing these tasks under orchestration.

Continuous Data Evaluation and Refresh Cycles

Data ages at different speeds. Teams define refresh rhythms by type:

- Daily for pricing, alerts, and fast-changing events

- Weekly for support tickets, behaviour logs, and traffic data

- Monthly or quarterly for policy documents, manuals, and reference data

These cycles keep training data aligned with current conditions rather than historic states.
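
Refresh rhythms like these can be written down as configuration and checked automatically. This is a minimal sketch: the category names are illustrative, and the 30-day interval stands in for "monthly".

```python
from datetime import datetime, timedelta

# Intervals mirroring the rhythms listed above; category names are illustrative.
REFRESH_INTERVALS = {
    "pricing": timedelta(days=1),
    "support_tickets": timedelta(weeks=1),
    "policy_documents": timedelta(days=30),
}


def is_stale(data_type: str, last_refreshed: datetime, now: datetime) -> bool:
    """True when a dataset is older than the interval defined for its type."""
    return now - last_refreshed > REFRESH_INTERVALS[data_type]


now = datetime(2025, 3, 10)
pricing_stale = is_stale("pricing", datetime(2025, 3, 8), now)
policy_stale = is_stale("policy_documents", datetime(2025, 3, 1), now)
```

An orchestrator could run a check like this on a schedule and trigger ingestion only for the datasets that are stale.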

Monitoring Dataset Drift and Quality Degradation

Drift appears when new inputs diverge from the patterns that the model learned. Teams track:

- Feature distributions over time

- Label distributions across slices

- User edits and error rates

- Segment-level performance metrics

When drift grows, teams extend datasets, adjust prompts or policies, or retrain models.

Table: Drift Signals and Responses

| Drift Signal | Example | Response |
| --- | --- | --- |
| Feature shift | New phrasing | Refresh dataset |
| Label imbalance | More edge cases | Rebalance slices |
| User corrections | Frequent edits | Expand training set |
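
A shift in a categorical distribution, such as labels or intents, can be tracked with the Population Stability Index (PSI). The sketch below is one possible drift score among several; the category names are hypothetical, both samples are assumed non-empty, and any alert threshold would need tuning per dataset.

```python
import math
from collections import Counter


def distribution(values: list[str], categories: list[str], eps: float = 1e-6) -> dict[str, float]:
    """Share of each category, floored at eps so the logarithm stays defined."""
    counts = Counter(values)
    total = len(values)
    return {c: max(counts[c] / total, eps) for c in categories}


def population_stability_index(reference: list[str], current: list[str]) -> float:
    """PSI = sum((cur - ref) * ln(cur / ref)); 0 means identical shares."""
    categories = sorted(set(reference) | set(current))
    ref = distribution(reference, categories)
    cur = distribution(current, categories)
    return sum((cur[c] - ref[c]) * math.log(cur[c] / ref[c]) for c in categories)


# Hypothetical intent labels at training time versus the latest production window.
training_window = ["billing"] * 70 + ["login"] * 30
production_window = ["billing"] * 40 + ["login"] * 60
psi = population_stability_index(training_window, production_window)
```

Every term in the sum is non-negative, so the score grows as the two distributions move apart, and comparing it across weekly windows shows whether drift is accelerating.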

Using Feedback Loops to Improve Model Performance

Every interaction produces new information. Edits, approvals, rejections, and comments feed back into future datasets.

Feedback loops improve:

- Clarity of instructions

- Alignment with policy and tone

- Safety and guardrail strength

- Precision for high-value tasks

These loops also reduce support issues and raise trust in AI systems.

How Training Data Impacts AI Performance

Model outputs track the quality of data. Datasets shape accuracy, speed, and stability.

Accuracy, Precision, Recall, and Model Stability

Performance metrics depend on:

- Coverage across scenarios

- Label clarity and consistency

- Structure and metadata quality

- Variety of contexts and edge cases

Clear datasets reduce variance and keep responses consistent.
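
For reference, accuracy, precision, and recall for a single class can be computed directly from true and predicted labels. The function below is a plain-Python sketch; the `escalate` and `resolve` labels are hypothetical.

```python
def classification_metrics(y_true: list[str], y_pred: list[str], positive: str) -> dict[str, float]:
    """Accuracy, precision, and recall for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


y_true = ["escalate", "escalate", "resolve", "resolve", "resolve"]
y_pred = ["escalate", "resolve", "resolve", "escalate", "resolve"]
metrics = classification_metrics(y_true, y_pred, positive="escalate")
```

Here accuracy is 0.6 while precision and recall for `escalate` are both 0.5, which shows why a rare but important class should be measured on its own rather than through overall accuracy.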

The Price of Poor-Quality Data

Weak data leads to:

- Incorrect results in production

- Long review cycles and incident analysis

- Policy conflicts and compliance exposure

- Hidden costs in engineering and operations

Teams usually resolve these issues by repairing data rather than tuning models in isolation. Repeated failures often signal gaps or skew in the dataset.

Real-World Improvements from High-Quality Datasets

Organisations frequently see measurable gains after focused data work. Common outcomes include:

- Higher product match rates

- Better support responses and summaries

- Faster routing and triage

- Fewer manual corrections and escalations

Table: Improvements from Better Datasets

| Area | Before | After |
| --- | --- | --- |
| Product Matching | Inconsistent | Automated decisions |
| Routing | Manual checks | Automated decisions |
| Support | Frequent edits | Clear summaries |

“Data surrounds us, but only those who see the hidden patterns and trends can outperform competitors. We use analytics to feel market changes sooner, see a little further, and act confidently, not reactively.”

— Olesia Holovko, CMO

Comprehensive data and analytics services are the engine behind this confidence, transforming raw market signals into clear strategic direction.

When to Work With AI Data Providers

Some projects require scale, geographic reach, or specialised expertise. External data providers help when internal teams lack capacity or domain coverage. Benchmarking your needs against the capabilities of the top data science companies of 2025 can clarify whether to build these capabilities in-house or outsource them.

What a Professional Data Provider Can Offer

Providers typically offer:

- Structured open-web and third-party records

- Global data collection and local coverage

- Dedicated annotation teams

- Multi-stage quality assurance

- Compliance documentation and audit support

These services help teams build large-scale AI training datasets and accelerate delivery.

Pricing Models for Large AI Datasets

Common pricing models include:

- Per record or row

- Per entity or profile

- Per token or character

- Subscription for ongoing feeds

- One-time projects for specific scopes

Cost depends on volume, complexity, refresh rate, and geographic reach. Internal leaders track which projects spend the most on AI data and why.
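
A quick way to compare these models is to put one workload through each of them. The sketch below is only that: every rate, volume, and refresh count is a made-up placeholder, and real provider quotes will differ.

```python
def per_record_cost(records, rate_per_record):
    """Total cost when the provider charges per delivered record."""
    return records * rate_per_record


def per_token_cost(tokens, rate_per_1k_tokens):
    """Total cost when the provider charges per thousand tokens."""
    return tokens / 1000 * rate_per_1k_tokens


def subscription_cost(months, monthly_fee):
    """Total cost of an ongoing feed billed monthly."""
    return months * monthly_fee


# Hypothetical workload: 500,000 records refreshed quarterly, about 150 tokens each.
records_per_year = 500_000 * 4
tokens_per_year = records_per_year * 150

# Hypothetical rates, for illustration only.
annual_cost = {
    "per_record": per_record_cost(records_per_year, rate_per_record=0.01),
    "per_token": per_token_cost(tokens_per_year, rate_per_1k_tokens=0.05),
    "subscription": subscription_cost(months=12, monthly_fee=1500),
}
cheapest = min(annual_cost, key=annual_cost.get)
```

Changing the refresh count or the average record length shifts which model comes out cheapest, which is why volume and refresh rate are worth estimating before negotiating a contract.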

Build vs Buy: Deciding What to Outsource

Teams build in-house when a dataset provides a core advantage or carries high risk. Teams buy when external sources can supply safe, broad coverage faster than internal efforts.

This “buy” strategy is often the most viable path for leaner organisations, where specialised AI consulting for small businesses provides solid data infrastructure without enterprise-level overhead.

Both paths still require clear ownership, quality controls, and governance for the combined data used for AI training.

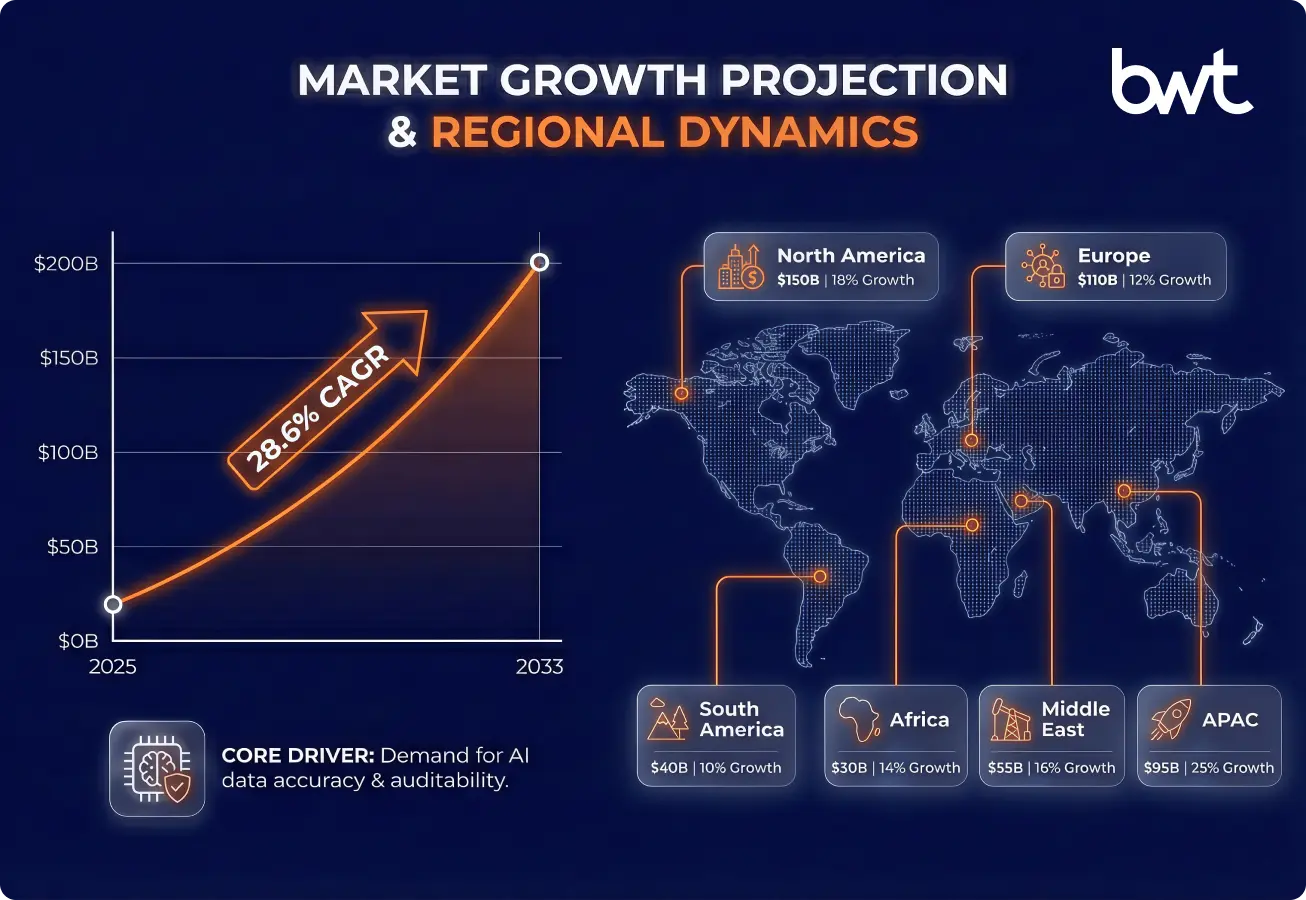

Global AI Training Data Market 2025–2033

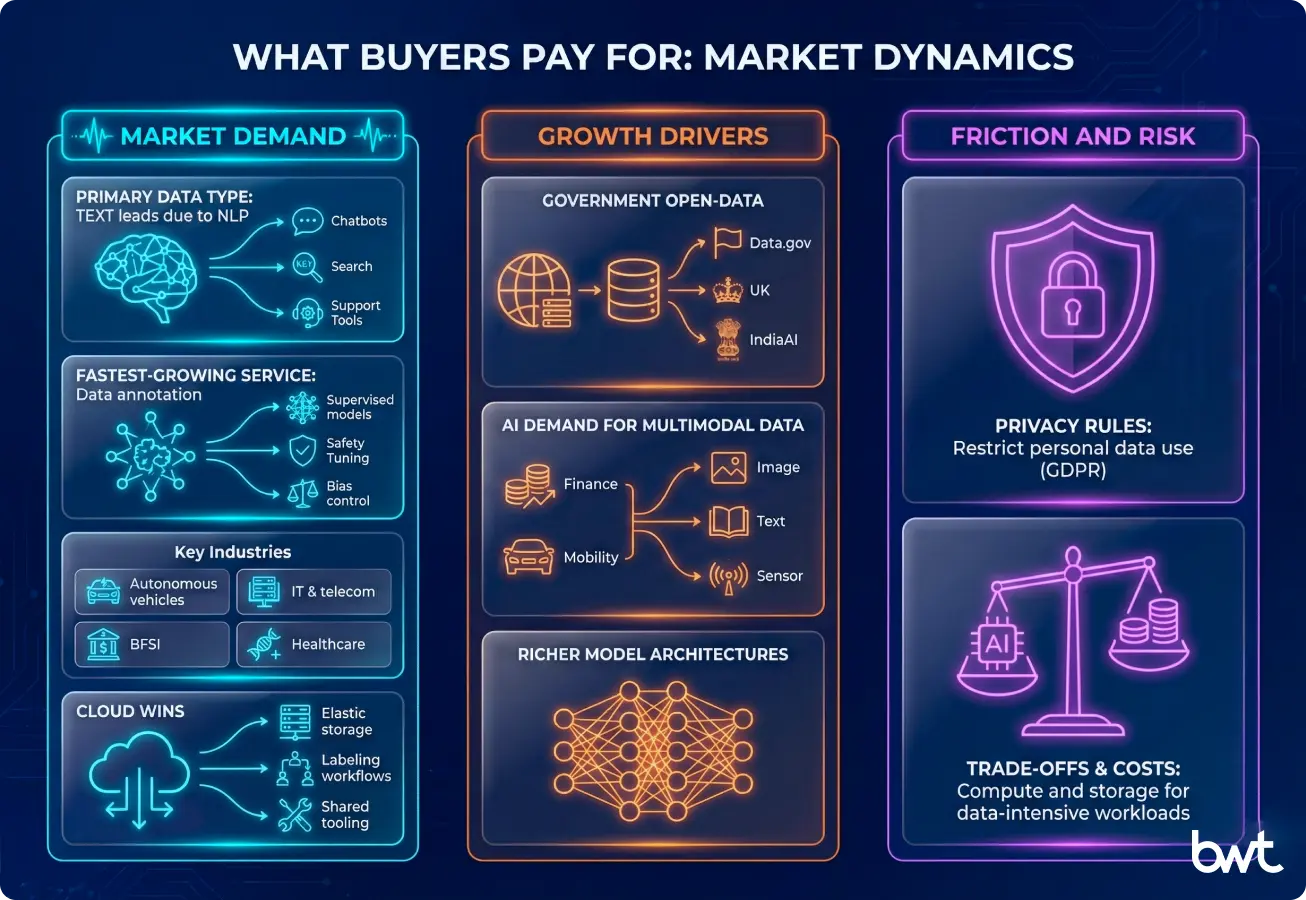

According to Cognitive Market Research, the global AI Training Dataset Market is projected to reach USD 2,962.4 million in 2025 and to expand at a compound annual growth rate (CAGR) of 28.60% from 2025 to 2033.
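
As a rough sanity check, the two quoted figures can be compounded forward. This sketch assumes eight full annual compounding periods between 2025 and 2033 and a constant growth rate; the result is derived arithmetic, not a number taken from the cited report, whose own 2033 forecast may differ.

```python
def project_value(base_value, cagr, years):
    """Compound a base value forward at a constant annual growth rate."""
    return base_value * (1 + cagr) ** years


base_2025_musd = 2962.4  # USD million, as quoted above
cagr = 0.286             # 28.60% per year, as quoted above
years = 2033 - 2025      # assumption: eight full compounding periods

implied_2033_musd = project_value(base_2025_musd, cagr, years)
growth_multiple = implied_2033_musd / base_2025_musd
```

Under these assumptions the market would grow to roughly 7.5 times its 2025 size, on the order of USD 22 billion by 2033.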

The market’s growth shifts responsibility from model tuning to controlled data operations. Companies invest in ingestion, lineage, cleaning, and dataset productisation because these layers determine accuracy, safety, and long-term stability. GroupBWT AI capabilities include the pipelines, governance rules, and data assets that allow organisations to operate in a market defined by scale, compliance, and multimodal datasets.

The market is growing at a 28.6% CAGR as companies move from ad hoc data collection to managed, compliant training datasets. For companies building real AI pipelines, this creates pressure to structure internal and external data sources, the same work GroupBWT does when building end-to-end data foundations for model training.

Text remains the core modality, while video and audio datasets grow alongside autonomous systems, smart cities, and edge AI.

This guide explains how to treat AI training data as a managed product. It shows which data matters, how to collect it safely, prepare it, govern it, and update it as operations evolve.

High-quality training data supports safe deployment, predictable behaviour, and stable long-term performance. Models evolve quickly. Data remains the durable source of truth, carrying knowledge across tools and versions.

When teams treat data for AI as infrastructure, AI becomes easier to update, govern, and trust. Each new project then builds on a foundation that leaders have already tested, structured, and aligned with the business.

Clean ingestion, unified schemas, redaction rules, versioned datasets, and traceable transformations become the core capabilities that sustain high-performance AI systems. These are the capabilities that GroupBWT provides when building end-to-end data foundations for model training and evaluation.

FAQ

How much data does an AI model need?

Small models for narrow tasks can perform with thousands of examples. Large models and complex systems rely on millions or billions of tokens. The key factor is coverage. The model needs exposure to the scenarios in which it will act.

How is training data useful for AI?

Training data gives an AI model its operational knowledge. The model learns patterns, relations, boundaries, and signals from labelled or structured examples. High-coverage, high-quality datasets determine how accurately the model predicts, reasons, detects, or generates outputs. Poor data creates flawed models, while complete, clean, and representative data enables stable behaviour across real tasks.

How do I prepare data for AI training?

Teams prepare data through a controlled sequence:

- Define the task and the exact outputs the model must produce.

- Collect source data with clear scope, coverage, and licensing.

- Filter sensitive fields and remove noise, duplicates, and corrupted items.

- Normalize formats into consistent schemas.

- Label examples with precise guidelines, edge-case rules, and quality checks.

- Split sets into training, validation, and test partitions.

- Track provenance so each field has an audit trail for future updates.

This sequence gives teams reliable, compliant, and high-yield training data that improves model accuracy and reduces downstream errors.
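
Several of these steps (filtering, normalising, deduplicating, splitting, and keeping provenance) can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the record fields, the single e-mail redaction pattern, and the 80/10/10 split are hypothetical, and production pipelines need far broader sensitive-data handling.

```python
import hashlib
import re

# Crude pattern for one kind of sensitive value; real redaction rules are much broader.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def normalise(record):
    """Map a raw record onto one schema and redact e-mail addresses."""
    text = " ".join(str(record.get("text", "")).split())  # trim and collapse whitespace
    text = EMAIL.sub("[EMAIL]", text)
    return {"text": text, "label": record.get("label"), "source": record.get("source", "unknown")}


def deduplicate(records):
    """Drop empty records and duplicates whose text matches after lowercasing."""
    seen, unique = set(), []
    for r in records:
        key = r["text"].lower()
        if r["text"] and key not in seen:
            seen.add(key)
            unique.append(r)
    return unique


def assign_split(record, val_pct=10, test_pct=10):
    """Assign a split from a hash of the text so that reruns give the same result."""
    bucket = int(hashlib.sha256(record["text"].encode("utf-8")).hexdigest(), 16) % 100
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "validation"
    return "train"


raw = [
    {"text": "  Reset my   password ", "label": "account", "source": "tickets"},
    {"text": "reset my password", "label": "account", "source": "chat"},
    {"text": "Email me at jane@example.com", "label": "contact", "source": "chat"},
    {"text": "", "label": None, "source": "tickets"},
]
prepared = deduplicate([normalise(r) for r in raw])
for r in prepared:
    r["split"] = assign_split(r)
```

Hash-based splitting keeps a given example in the same partition across dataset versions, which avoids leaking test items into training when the data is refreshed. The `source` field stands in for a fuller provenance record.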

Can I use synthetic data only?

Synthetic data is helpful for rare scenarios and privacy-sensitive domains. Most production systems combine synthetic and real-world data to keep language natural and behaviour realistic.

How do I label data quickly at scale?

Automation handles simple records. Human reviewers focus on complex and ambiguous cases. Clear guidelines, sampling, and audits keep labels consistent across the training set.
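
One way to implement this division of labour is confidence-based routing with a sampled audit of the automated labels. The sketch below assumes an upstream automated labeller that emits a confidence score; the 0.9 threshold, the audit rate, and the item fields are hypothetical.

```python
def route_for_labelling(items, auto_threshold=0.9):
    """Split pre-labelled items into auto-accepted and human-review queues."""
    auto, human = [], []
    for item in items:
        if item["confidence"] >= auto_threshold:
            auto.append(item)
        else:
            human.append(item)
    return auto, human


def audit_sample(auto_items, every_nth=2):
    """Pick every n-th auto-accepted item for a human spot check."""
    return auto_items[::every_nth]


# Hypothetical pre-labels from an automated labeller, with confidence scores.
items = [
    {"id": 1, "label": "refund", "confidence": 0.97},
    {"id": 2, "label": "refund", "confidence": 0.55},
    {"id": 3, "label": "shipping", "confidence": 0.93},
    {"id": 4, "label": "other", "confidence": 0.40},
    {"id": 5, "label": "shipping", "confidence": 0.99},
]
auto, human = route_for_labelling(items)
audit = audit_sample(auto)
```

Here items 1, 3, and 5 are accepted automatically, items 2 and 4 go to human reviewers, and items 1 and 5 are also sampled for audit. If audits reveal frequent errors, raising the threshold sends more items to human review.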

How do I train AI on proprietary company data?

Teams inventory their source systems, classify data sensitivity, clean and structure records, and label the key decisions. After that, they choose among retrieval-augmented generation (RAG), fine-tuning, and custom training.