Overview

Most teams do not have a research problem. They have an ownership problem. When nobody owns scope, approvals, and outputs, teams add feeds and dashboards, then argue about what matters.

A market intelligence solution earns adoption when it ships decision artefacts on a schedule and keeps a traceable record for every claim.

Terminology (used in this post):

- Platform = the operating system (ingestion, governance, review, exports, audit trail).

- Solution = the packaged outcome for a specific team or scope (topics, cadence, owners, exports).

- Marketing intelligence solutions = inside-out campaign and channel analytics.

- Competitor intelligence = a narrower outside-in scope; the term is used only when the scope is explicitly competitor-only.

Early context: a market intelligence case study

At a European insurer, a small strategy group started with manual newsletters. As distribution grew, cadence slipped and coverage drifted by region. The fix was not more sources. The fix was a governed briefing pipeline with explicit scope, review, and export logs.

What These Systems Are And What They Are Not

This article explains when market intelligence solutions become a governed product rather than a collection of feeds.

Many tools behave like reading interfaces: they collect content, but they do not enforce scope, review, or exports.

Definition

A governed intelligence platform collects signals, classifies them into a taxonomy, ranks relevance, and produces briefing-ready outputs with traceable sources.

This pattern applies across regulated services (insurance, banking, healthcare), consumer verticals (eCommerce, retail, travel, beauty), and advisory domains (consulting and legal).

Why This Matters For Strategic Decision-Making

Most organisations do not fail because they lack information. They fail because scope, review, and outputs are not owned. When ownership is unclear, teams collect more feeds, then spend meetings debating what counts as relevant.

What breaks first in an unowned model:

- Scope drift: new sources and topics get added without a change log, so coverage becomes inconsistent by region, language, and business unit.

- Review gaps: critical items move forward without a clear review state, reviewer identity, or escalation path.

- Export inconsistency: stakeholders receive different formats and levels of detail, so the briefing cannot be used as a decision input.

- Accountability gaps: there is no single owner for thresholds, cadence, and final artefacts, so decisions revert to opinions.

A governed market intelligence program exists to remove these failure modes by making scope, review, and outputs operationally provable.

You Must Align Market Intelligence With The EU AI Act Before 2026

EU AI Act alignment changes what you log, what you store, and who approves outputs. This becomes a procurement question early.

Key dates to design for (as of December 2025)

- 2 Feb 2025: prohibited practices and AI literacy obligations start to apply.

- 2 Aug 2025: obligations for general-purpose AI (GPAI) start to apply.

- 2 Aug 2026: key obligations for high-risk systems are scheduled to start applying, with transition provisions for some categories.

What this practically means for your platform

“Compliance is not a document you add at the end. It is a set of system properties you can inspect, like who approved a brief, which model version generated a draft, and which export ID left the platform. If those fields are missing or inconsistent, reliability work will not save you in procurement.”

– Dmytro Naumenko | CTO | Cloud Architecture and Delivery

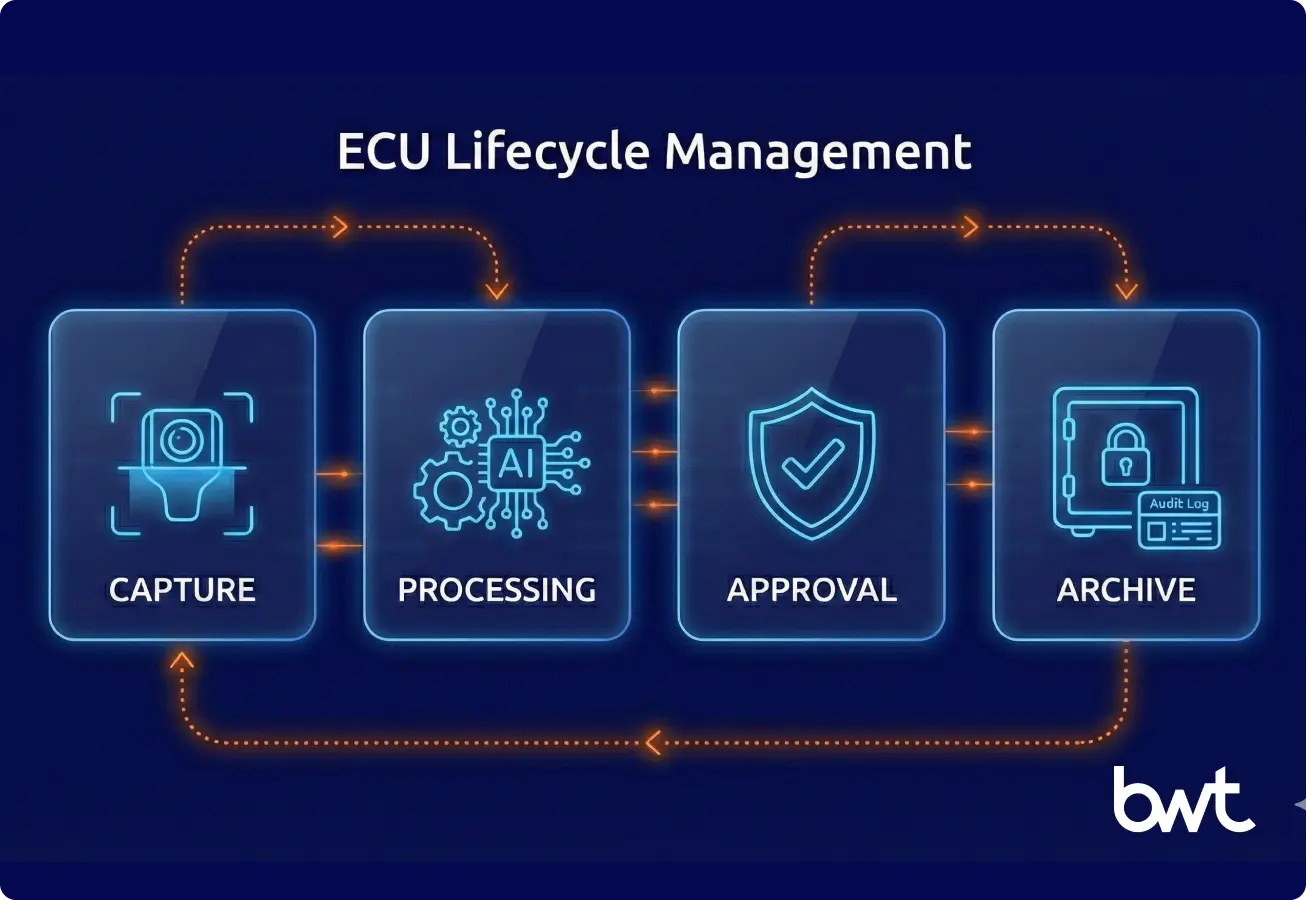

- Traceability of each insight: keep source URL, capture time, processing steps, model version, reviewer, and export ID (audit trail).

- Scoped data sources: manage allowlists and denylists per business unit and geography, with change logs.

- Explicit ownership: assign named owners for topics, thresholds, and briefing cadence.

- UAT and Test Book: document edge cases, acceptance criteria, and sign-offs, then retain results for reviews.
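To make the traceability bullet concrete, here is a minimal sketch of an audit record carrying the fields listed above, plus a check that reports which fields are still empty. The names `AuditRecord` and `missing_fields` are hypothetical, not a specific platform's schema.

```python
from dataclasses import dataclass, field, fields
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AuditRecord:
    """Audit trail for one insight (hypothetical schema mirroring the list above)."""
    source_url: str
    capture_time: datetime
    model_version: str
    reviewer: Optional[str] = None
    export_id: Optional[str] = None
    processing_steps: list[str] = field(default_factory=list)


def missing_fields(record: AuditRecord) -> list[str]:
    """Return the names of audit fields that are unset or empty."""
    return [f.name for f in fields(record) if not getattr(record, f.name)]


record = AuditRecord(
    source_url="https://example.com/press-release",
    capture_time=datetime(2025, 12, 1, 9, 30, tzinfo=timezone.utc),
    model_version="summariser-v3",
    processing_steps=["capture", "normalize", "rank", "draft"],
)
print(missing_fields(record))  # ['reviewer', 'export_id']
```

In a governed flow, a non-empty result would block the export step instead of letting the brief ship with gaps.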

Governance controls buyers should see in outputs

| Control area | What must be visible in outputs | Who owns it |
| --- | --- | --- |
| Traceability | Source link, capture time, processing steps, model version, reviewer, export ID | Platform owner + reviewer lead |
| Scope control | Approved sources list, exclusions, geo rules, and version history of changes | Topic owner + governance lead |
| Review integrity | Review required on critical topics, review timestamps, and escalation path | Research/comms owner |
| Export integrity | Stable templates, export IDs, delivery logs, retention policy | Comms/strategy owner |
| Testing discipline | Test cases, edge-case outcomes, sign-off record, and regression history | QA/UAT owner |

Market Vs Marketing Intelligence: Outside-In Vs Inside-Out

Marketing intelligence solutions are the inside-out layer for performance management.

Market intelligence (outside-in)

- Primary focus: competitors, markets, regulators, partners

- Outputs: briefs, research packs, decision logs

- Primary owners: strategy, research, product marketing

Marketing intelligence (inside-out)

- Primary focus: campaigns, channels, creatives, funnels

- Outputs: attribution, performance insights, budget guidance

- Primary owners: marketing ops, analytics, growth

Marketing intelligence solutions drive higher campaign ROI when they connect external market moves to internal performance decisions without mixing governance boundaries.

Keep the outside-in governance model separate, so scope changes and approvals remain traceable.

The Evolution Toward Modern Intelligence Platforms

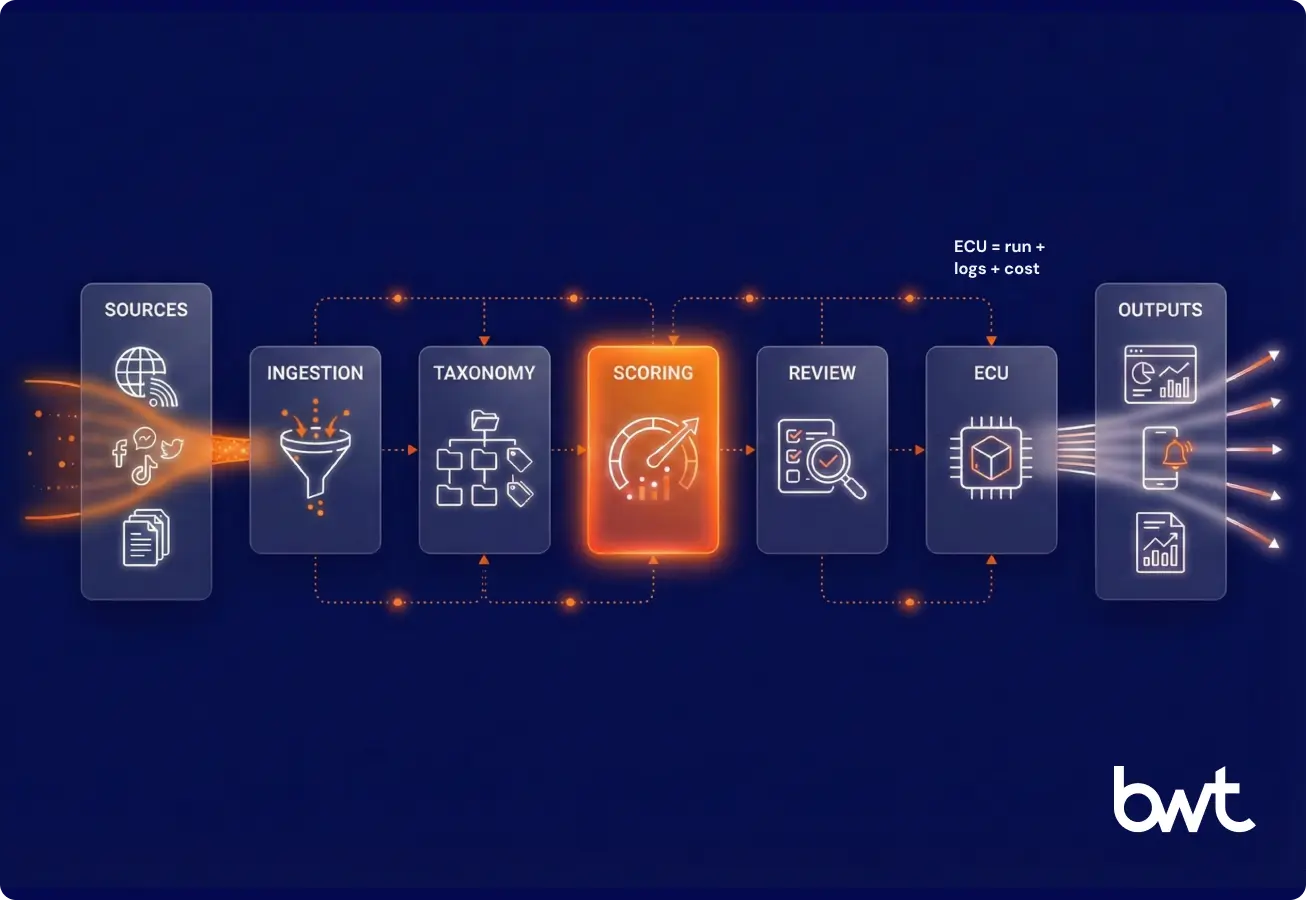

A modern system consolidates ingestion, taxonomy, scoring, review, and exports into one auditable product. You can apply it once across regulated services and consumer verticals without rewriting the operating model for each team.

From fragmented sources to unified ingestion

Make ingestion modular and logged. Keep scope explicit and versioned. If your implementation needs pipelines and reliability work, start with data engineering to standardize ingestion, observability, and delivery SLAs.

“If ingestion is not versioned, you do not have scope control. We treat every source allowlist, fetch rule, and parser change like a release, with a changelog, a rollback path, and a regression sample. That is how you keep the same brief quality when targets change, blocks shift, and volume grows.”

– Alex Yudin | Head of Data Engineering, Web Scraping

Keyword, topic, and entity monitoring

A practical taxonomy has three layers: keywords (terms), topics (owned themes), and entities (competitors, partners, regulators). Business owners should be able to update the configuration while the platform logs changes.
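A minimal sketch of "business owners update the configuration while the platform logs changes": every edit to any of the three layers bumps a version and appends an attributed, timestamped log entry. `Taxonomy` and `change()` are hypothetical names, and a real system would persist the log rather than keep it in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

LAYERS = ("keywords", "topics", "entities")


@dataclass
class ChangeLogEntry:
    version: int
    layer: str
    action: str
    value: str
    changed_by: str
    changed_at: datetime


@dataclass
class Taxonomy:
    """Three-layer taxonomy where every edit is versioned and logged (hypothetical)."""
    keywords: set[str] = field(default_factory=set)
    topics: set[str] = field(default_factory=set)
    entities: set[str] = field(default_factory=set)
    version: int = 0
    log: list[ChangeLogEntry] = field(default_factory=list)

    def change(self, layer: str, action: str, value: str, changed_by: str) -> None:
        if layer not in LAYERS:
            raise ValueError(f"unknown layer: {layer}")
        target = getattr(self, layer)
        if action == "add":
            target.add(value)
        elif action == "remove":
            target.discard(value)
        else:
            raise ValueError(f"unknown action: {action}")
        # Only successful edits reach this point, so the log matches the config.
        self.version += 1
        self.log.append(ChangeLogEntry(self.version, layer, action, value,
                                       changed_by, datetime.now(timezone.utc)))


taxonomy = Taxonomy()
taxonomy.change("topics", "add", "pricing moves", changed_by="topic.owner@example.com")
taxonomy.change("entities", "add", "Competitor A", changed_by="topic.owner@example.com")
for entry in taxonomy.log:
    print(entry.version, entry.layer, entry.action, entry.value, entry.changed_by)
```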

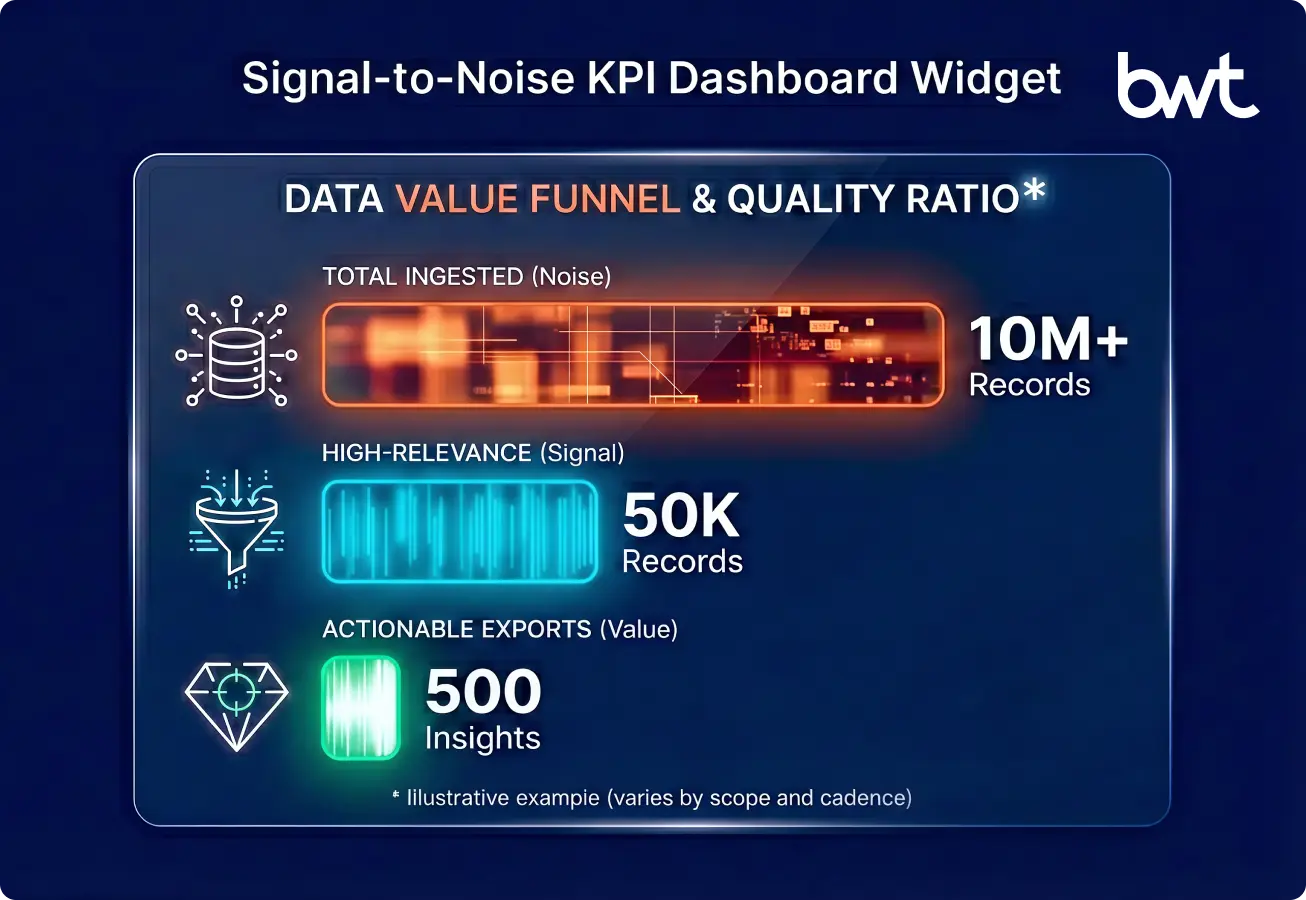

Automated relevance scoring (signal vs noise filtering)

Scoring makes daily monitoring sustainable by combining ranking models, human feedback, and tiered thresholds.
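The sketch below shows one way those three pieces could fit together. It assumes a ranking model already produces a 0..1 score, nudges that score with net reviewer votes, and compares the result against a threshold per topic tier. The tier values, the feedback weight, and the `overrides` dict (standing in for a proper override log with reviewer and reason) are all illustrative assumptions.

```python
from dataclasses import dataclass

# Hypothetical thresholds per topic tier; calibrate them from sampled reviews.
TIER_THRESHOLDS = {"critical": 0.4, "standard": 0.6, "low": 0.8}


@dataclass
class Item:
    item_id: str
    topic_tier: str
    model_score: float       # 0..1 output of a ranking model (assumed)
    feedback_votes: int = 0  # net reviewer up/down votes (assumed)


def relevance(item: Item, feedback_weight: float = 0.05) -> float:
    """Blend the model score with human feedback, clamped to [0, 1]."""
    score = item.model_score + feedback_weight * item.feedback_votes
    return max(0.0, min(1.0, score))


def route(item: Item, overrides: dict[str, str]) -> str:
    """Return 'brief' or 'archive'; a recorded reviewer override beats the threshold."""
    if item.item_id in overrides:
        return overrides[item.item_id]
    return "brief" if relevance(item) >= TIER_THRESHOLDS[item.topic_tier] else "archive"


items = [
    Item("a", "critical", 0.45),
    Item("b", "standard", 0.55, feedback_votes=2),
    Item("c", "standard", 0.50),
    Item("d", "low", 0.85),
]
overrides = {"d": "archive"}  # e.g. a reviewer judged item d a duplicate
print({i.item_id: route(i, overrides) for i in items})
# {'a': 'brief', 'b': 'brief', 'c': 'archive', 'd': 'archive'}
```

A lower threshold on critical topics surfaces more items for review, which is the trade-off you usually want where a miss costs more than noise.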

Export Chain And ECU: How We Productise Intelligence

Export Chain is a pipeline from captured content to briefing outputs with logs and review states. One complete run is an ECU (Export Chain Unit).

This design gives predictable units for billing and capacity planning, rather than a vague “API calls” metric. It also makes it easier to compare the pricing and features of enterprise market intelligence solutions against throughput and governance controls.

Export Chain steps, owners, and controls

- Capture: fetch approved sources and store snapshots. Owner: data engineering. Controls: scope lists and retention rules.

- Normalize: deduplicate, clean, classify. Owner: data and ML. Controls: sampling and Test Book cases.

- Rank: score relevance by topic. Owner: topic owners. Controls: threshold tuning and override logs.

- Draft: generate summaries for review. Owner: research and comms. Controls: mandatory review on critical topics.

- Export: produce PDF, slides, and tables. Owner: comms and strategy. Controls: templates, export IDs, audit trail.
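The steps above can be sketched as a small state machine where one complete run is one ECU. This is a hypothetical model rather than a reference implementation. Steps must run in order, each step is timestamped, export is blocked on critical topics until a reviewer is recorded, and a successful export gets an ID. The `EXP-` prefix and ID format are assumptions.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

STEPS = ("capture", "normalize", "rank", "draft", "export")


@dataclass
class ExportChainRun:
    """One complete pass through all steps counts as one ECU (hypothetical model)."""
    topic: str
    critical: bool
    reviewer: Optional[str] = None
    export_id: Optional[str] = None
    step_log: list[tuple[str, datetime]] = field(default_factory=list)

    @property
    def is_complete(self) -> bool:
        return len(self.step_log) == len(STEPS)

    def run_step(self, step: str) -> None:
        if self.is_complete:
            raise RuntimeError("run already complete")
        expected = STEPS[len(self.step_log)]
        if step != expected:
            raise RuntimeError(f"expected step {expected!r}, got {step!r}")
        if step == "export":
            # Mandatory review gate on critical topics.
            if self.critical and not self.reviewer:
                raise PermissionError("critical topic: review required before export")
            self.export_id = f"EXP-{uuid.uuid4().hex[:8]}"
        self.step_log.append((step, datetime.now(timezone.utc)))


run = ExportChainRun(topic="competitor pricing", critical=True)
for step in STEPS[:-1]:
    run.run_step(step)
try:
    run.run_step("export")
except PermissionError as err:
    print("blocked:", err)
run.reviewer = "research.lead@example.com"
run.run_step("export")
print(run.is_complete, run.export_id)
```

Counting only completed runs as ECUs keeps billing tied to finished, reviewed outputs rather than to partial work.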

ECU cost estimator

- ECUs per month = (topics × cadence, in runs per topic per month) + ad-hoc runs

- Cost per ECU = (platform ops + review time + storage) / ECUs

- Cost per stakeholder = total monthly cost / active consumers
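The three formulas above translate directly into code. This sketch assumes "cadence" means runs per topic per month, and the inputs are made-up figures in an unspecified currency, meant to show the arithmetic rather than serve as benchmarks.

```python
def ecus_per_month(topics: int, runs_per_topic: int, ad_hoc_runs: int) -> int:
    """ECUs per month = (topics x cadence) + ad-hoc runs."""
    return topics * runs_per_topic + ad_hoc_runs


def cost_per_ecu(platform_ops: float, review_time: float, storage: float, ecus: int) -> float:
    """Cost per ECU = (platform ops + review time + storage) / ECUs."""
    return (platform_ops + review_time + storage) / ecus


def cost_per_stakeholder(total_monthly_cost: float, active_consumers: int) -> float:
    """Cost per stakeholder = total monthly cost / active consumers."""
    return total_monthly_cost / active_consumers


# Illustrative inputs: 12 topics on a weekly cadence (~4 runs/month) plus 6 ad-hoc runs.
ecus = ecus_per_month(topics=12, runs_per_topic=4, ad_hoc_runs=6)
total = 4000 + 2700 + 300  # platform ops + review time + storage
print(ecus, round(cost_per_ecu(4000, 2700, 300, ecus), 2), cost_per_stakeholder(total, 40))
# 54 129.63 175.0
```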

AI-Powered Intelligence: Turning Raw Data Into Insights

AI becomes valuable when it produces consistent, reviewable briefs, not more text.

NLP for content understanding and entity extraction

NLP converts content into structure: entities, events, and relationships that remain consistent across sources and languages. For 2025–2026, multilingual coverage is feasible, but errors remain: entity ambiguity, near-duplicate rewrites, and topic drift. Manual taxonomy governance and review sampling remain required, even when models improve.
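One of the error classes named above, near-duplicate rewrites, can be caught with a simple lexical baseline before any model is involved. This sketch uses word shingles and Jaccard similarity, a standard technique swapped in here for illustration (the article does not prescribe a method). The 0.5 threshold is an assumption to tune against sampled reviews, and shingling catches lightly edited copies, not deep paraphrases.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Lower-cased word k-grams ("shingles") of a text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a: set, b: set) -> float:
    """Share of shingles the two texts have in common."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def is_near_duplicate(text_a: str, text_b: str, threshold: float = 0.5) -> bool:
    return jaccard(shingles(text_a), shingles(text_b)) >= threshold


original = "Competitor A raises prices on its premium home insurance plans in Germany"
rewrite = "Competitor A raises prices on its premium home insurance plans in Germany, effective March"
unrelated = "Regulator publishes draft guidance on AI model documentation"
print(is_near_duplicate(original, rewrite), is_near_duplicate(original, unrelated))
# True False
```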

If you need production support for entity extraction and taxonomy alignment, consider an NLP services company.

AI-generated summaries and executive briefs

If you want briefs inside a conversational UI, a governed chatbot development layer can enforce role access, approvals, and export logging inside the same workflow.

Key Features That Separate A Platform From A Feed Reader

Procurement buys controls, outputs, and predictable costs. This is where market intelligence software solutions often fail: they demo dashboards, but they cannot answer audit questions about scope, approvals, and exports.

A market intelligence solution should be judged on governance outcomes: can it prove scope, review, and exports for every briefing cycle, without exceptions?

Contrarian standard (for buyer clarity)

Many products sold as “intelligence” are expensive feed readers. If they cannot show Export Chain-level logs and ECU-level costs, they add risk instead of reducing it.

Seven questions that expose a weak platform in a demo

- Can you show the audit trail fields for one insight (source, capture time, processing steps, model version, reviewer, export ID)?

- Can a business owner change scope (sources, keywords, entities) without a ticket, and will the change be versioned and logged?

- What is the default review workflow for critical topics, and how do you prove review happened?

- What is your export model (PDF, slides, tables), and can you show stable templates plus export IDs?

- How do you prove coverage (what was included, excluded, and why) across geographies and languages?

- What are your controls for retention, data residency, and access by role?

- Can you map cost to throughput (runs, topics, cadence) instead of only reporting requests or seats?

Demo claims vs proof: what to ask for and what counts as a pass

Use this table during vendor demos to convert broad promises into concrete evidence. It helps procurement, strategy, and platform owners validate auditability, scope control, review discipline, export integrity, and cost transparency.

| DEMO CLAIM | WHAT TO ASK FOR | PASS CRITERIA |
| --- | --- | --- |
| “We are compliant-ready” | Show one item’s full audit trail fields end to end | All fields present and tied to an export ID |
| “Business users can manage it” | Change scope as a non-technical user and show the change log | Change is versioned, attributed, and timestamped |
| “We have governance” | Demonstrate mandatory review on a critical topic | Review state is enforced, not optional |
| “We cover everything” | Show a coverage report by geo and language with exclusions | Coverage gaps are explicit and explainable |
| “Exports are easy” | Generate PDF and deck with stable templates and IDs | Exports are consistent, repeatable, and logged |
| “Costs are predictable” | Map throughput to runs, cadence, and review effort | Cost model ties to units of work, not vague usage |
| “Dashboards prove value” | Show usage tied to approvals and on-time exports | Metrics reflect decisions and delivery, not clicks |

Source management and ingestion

Support allowlists and denylists, rate limits, data residency, and immutable logs. When scale and retention matter, align ingestion with solutions for big data so retention, throughput, and audit trails remain stable under load.
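A minimal sketch of two of these controls, assuming scope rules keyed by business unit and geography, plus a hash-chained, append-only log. The log is tamper-evident (any edit to a past entry breaks verification) rather than truly immutable, which would also need write-once storage. Rate limits and data residency are out of scope here, and `SCOPE`, `in_scope`, and `AppendOnlyLog` are hypothetical names.

```python
import hashlib
import json
from urllib.parse import urlparse

# Hypothetical scope rules per (business unit, geography).
SCOPE = {
    ("retail", "DE"): {"allow": {"example-news.de", "regulator.example.eu"},
                       "deny": {"spam.example.com"}},
}


def in_scope(url: str, unit: str, geo: str) -> bool:
    """Fail closed: no rules means not in scope; the denylist wins over the allowlist."""
    host = urlparse(url).hostname or ""
    rules = SCOPE.get((unit, geo))
    if rules is None or host in rules["deny"]:
        return False
    return host in rules["allow"]


class AppendOnlyLog:
    """Each entry's hash covers the previous hash, so edits to history are detectable."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(prev: str, event: dict) -> str:
        payload = json.dumps(event, sort_keys=True)
        return hashlib.sha256((prev + payload).encode()).hexdigest()

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        digest = self._digest(prev, event)
        self.entries.append({"event": event, "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev = "0" * 64
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True


log = AppendOnlyLog()
for url in ["https://example-news.de/article/1", "https://spam.example.com/x"]:
    log.append({"url": url, "unit": "retail", "geo": "DE",
                "in_scope": in_scope(url, "retail", "DE")})
print(log.verify())  # True
```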

Dashboards and usage KPIs

Track decisions and usage: approvals by tier, brief consumption by role, export timeliness, coverage gaps, and ECU usage. The goal is quality visibility, not busy dashboards.

Exports that match leadership habits

Exports should fit cadence: weekly PDF brief, monthly deck, structured rows into BI or CRM. To productize exports, you typically need a disciplined big data implementation rather than one-off scripts.

“When a weekly PDF and a monthly deck arrive on time, with the same template and an export ID that ties back to sources, the organisation stops debating what happened and starts deciding what to do. That is the difference between content volume and decision utility.”

– Olesia Holovko | CMO | B2B Marketing, Analytics & Growth

Business Benefits You Can Measure

Well-run market intelligence solutions create value through delivery quality and measurable decision support, not through content volume.

Measure benefits using delivery, governance, and cost signals:

- On-time brief delivery: % of weekly/monthly exports shipped on schedule, with stable templates and export IDs.

- Review compliance on critical topics: % of critical items that have a recorded reviewer, timestamp, and outcome before export.

- Coverage completeness: % of required sources/regions/languages captured per cycle versus the coverage contract (with explicit exclusions).

- Signal precision: ratio of high-relevance items to total ingested items per topic tier (precision improves as scoring and thresholds mature).

- Decision throughput: number of briefs that generate documented actions, owner assignments, or follow-up research requests.

- Cost predictability with ECUs: cost per ECU, ECUs per month by topic tier, and cost per stakeholder based on active consumers.

- Operational stability: export rework rate (how often a brief must be corrected), and exception rate (how often the cycle runs outside the defined workflow).
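Several of these metrics are simple ratios over per-cycle records. The sketch below assumes a hypothetical `BriefCycle` record per scheduled brief and computes on-time delivery, review compliance, rework rate, and signal precision as defined above. The sample data is invented to show the arithmetic.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class BriefCycle:
    """One scheduled briefing cycle (hypothetical record shape)."""
    due: date
    shipped: Optional[date]   # None if the export never shipped
    critical_items: int
    critical_reviewed: int    # critical items with reviewer, timestamp, and outcome
    reworked: bool            # brief had to be corrected after export


def on_time_rate(cycles: list[BriefCycle]) -> float:
    return sum(c.shipped is not None and c.shipped <= c.due for c in cycles) / len(cycles)


def review_compliance(cycles: list[BriefCycle]) -> float:
    total = sum(c.critical_items for c in cycles)
    return sum(c.critical_reviewed for c in cycles) / total if total else 1.0


def rework_rate(cycles: list[BriefCycle]) -> float:
    return sum(c.reworked for c in cycles) / len(cycles)


def signal_precision(high_relevance: int, ingested: int) -> float:
    return high_relevance / ingested if ingested else 0.0


cycles = [
    BriefCycle(date(2025, 12, 1), date(2025, 12, 1), 5, 5, False),
    BriefCycle(date(2025, 12, 8), date(2025, 12, 9), 3, 2, True),   # late, one unreviewed item
    BriefCycle(date(2025, 12, 15), date(2025, 12, 15), 4, 4, False),
    BriefCycle(date(2025, 12, 22), None, 0, 0, False),              # never shipped
]
print(on_time_rate(cycles), round(review_compliance(cycles), 3),
      rework_rate(cycles), signal_precision(120, 2400))
# 0.5 0.917 0.25 0.05
```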

These metrics let leadership evaluate the program like an operating system: predictable cadence, controlled scope, enforced review, and repeatable exports.

Use Cases With Clear Terminology

Use cases clarify when you need full market intelligence versus narrow competitor intelligence.

Teams ask for competitor and market intelligence solutions when they want one brief that covers competitor moves and market shifts with a single scope definition. Use the term “competitor intelligence” only when the scope is explicitly competitor-focused, not as a synonym for the whole program.

Product marketing and positioning

This is where artificial intelligence solutions for digital marketing are useful, as long as outputs remain attributable and reviewable.

M&A scouting and partnership evaluation

Traceable shortlists and risk summaries work best when evidence links and approval states are enforced.

Market entry and geographic expansion

Comparable briefs reduce internal friction when each region uses the same scope, template, and export cadence.

How To Build Or Implement A Market Intelligence Solution

Implementation is operating design plus platform design.

- Define the taxonomy: pick 5–10 topics per business unit, assign owners, build keyword and entity lists, tier the topics, and draft a Test Book outline.

- Configure ingestion sources: write a coverage contract (must-include sources, exclusions, languages, geographies). Version it monthly.

- Tune scoring and thresholds: start narrow, sample review, log overrides, calibrate monthly.

- Design reporting outputs: pick two exports (weekly brief, monthly pack), then integrate into email, shared drives, meeting decks, and BI.
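The coverage contract from the second step can be checked mechanically each cycle. This sketch assumes coverage items are encoded as strings such as `geo:DE` or `lang:de`, and that the contract version is a month label. It reports completeness against required items, lists what is missing, and flags excluded items that were captured anyway, a common sign of scope drift. `CoverageContract` and `coverage_report` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoverageContract:
    """Versioned coverage contract (hypothetical shape)."""
    version: str
    must_include: frozenset[str]
    exclusions: frozenset[str] = frozenset()


def coverage_report(contract: CoverageContract, captured: set[str]) -> dict:
    required = contract.must_include - contract.exclusions
    missing = required - captured
    completeness = (len(required) - len(missing)) / len(required) if required else 1.0
    return {
        "version": contract.version,
        "completeness": completeness,
        "missing": sorted(missing),
        "excluded": sorted(contract.exclusions),
        "captured_but_excluded": sorted(captured & contract.exclusions),
    }


contract = CoverageContract(
    version="2025-12",
    must_include=frozenset({"geo:DE", "geo:AT", "lang:de", "source:regulator.example.eu"}),
    exclusions=frozenset({"geo:CH"}),
)
captured = {"geo:DE", "lang:de", "source:regulator.example.eu", "geo:CH"}
print(coverage_report(contract, captured))
```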

Manual vs build vs buy vs hybrid

- Manual: fits fewer stakeholders and low cadence; the risk is that output stays fragile and inconsistent.

- Build: fits strong engineering and governance maturity; the risk is that it becomes a science project.

- Buy: fits a need for an admin UI and fast go-live; the risk is vendor limits on ingestion and exports.

- Hybrid: fits a need for custom ingestion plus a packaged UI; the risk is integration complexity.

Example Product Journey

Manual feeds → curated internal newsletter: demand grows, cadence slips, coverage becomes inconsistent, trust drops.

Alerts-based monitoring → automated aggregation: discovery improves, noise increases, and teams cannot prove what was missed.

Signal scoring → ranked insights: unified ingestion, owned topics, and thresholds make production repeatable.

AI summarization → executive-ready reports: exports have stable templates, IDs, and logs.

This is where a competitor and market intelligence requirement appears: leaders want one brief with one scope definition.

What Changes By 2026

- Agent-based monitoring: agents can probe “what changed since last brief” inside the approved scope.

- Automated strategic recommendations: recommendations must cite evidence, assumptions, and alternatives to pass governance review.

- Personalized intelligence feeds: role views work when topics are owned and exports have stable templates, IDs, and logs.

Most organisations are still moving from manual digests and alerts to governed exports. Treat agent scenarios as a next step, after scope, ownership, exports, and auditability are stable.

If you plan to operationalize that layer, start with a generative AI development company that treats approval and traceability as product features.

Next Steps

If you are comparing leading market intelligence solutions for large enterprises, start with one operational test: can business owners change scope and still produce traceable exports without IT tickets?

For vendor benchmarking context, see top data science companies in the USA and use that shortlist to pressure-test governance claims during demos.

If stakeholders ask for market intelligence data solutions, define whether they want ingestion pipelines, a governed briefing product, or both.

If you are selecting market intelligence solutions, evaluate the Export Chain first, then validate review capacity, and then validate export delivery discipline.

FAQ

What is a market intelligence platform?

A market intelligence platform is a governed system that turns external and internal signals into traceable executive briefs on a schedule.

How is market intelligence different from marketing intelligence?

Market intelligence is outside-in monitoring of markets and competitors, while marketing intelligence is inside-out analytics of your campaigns and channels.

What is the core output that earns adoption?

Adoption follows when the platform ships a briefing artefact on a schedule, with sources, reviewers, and export IDs recorded.

What should procurement validate first?

Procurement should validate scope controls, audit trail fields, review workflow, and export templates before evaluating dashboards.

When does a team move beyond manual digests?

Move beyond manual digests when cadence slips, scope drifts, and review and export steps cannot be proven end-to-end.