Insurance data extraction turns insurance PDFs, web pages, emails, and reports into structured, reviewable outputs (JSON/CSV/PDF) with traceability and governance. If your team still builds weekly updates by copy-paste, the bottleneck is building a controlled workflow that scales from 30 stakeholders to 2,000+ without losing consistency. This is where an extraction program either scales or stalls.

A contrarian view: OCR accuracy is rarely the KPI that drives adoption. In regulated environments, the KPI that matters is “why this item was included” — provenance, a scoring rubric owned by the business, and an audit trail that can be replayed. For broader delivery patterns, see GroupBWT data extraction services.

Glossary (terms used in this guide)

- Insurance document extraction means converting insurance-related content into structured fields plus metadata (source, date, ecosystem, confidence).

- Data extraction insurance workflow is the end-to-end pipeline: discovery → capture → parse → classify → score → review → export → audit.

- RAG (Retrieval-Augmented Generation) means generating text only after retrieving approved documents, so every output stays bound to its sources.

Governance comes first: the six capabilities that must work together

“Scraping systems, for example, don’t fail because the code is bad. They fail because the architecture doesn’t account for how platforms change.”

— Alex Yudin, Head of Data Engineering and Web Scraping Lead, GroupBWT

A scalable program needs six capabilities that work together:

- Capture: ingest PDFs, web pages, emails, newsletters, scans.

- Recognition: parse text (direct parsing plus OCR where needed).

- Understanding: classify, extract entities, and map fields to a schema.

- Relevance scoring: rank signal vs noise using a rubric your business owns.

- Validation and governance: logs, approvals, traceability, change control.

- Delivery: export to PDF/CSV, push via API, or route into BI/knowledge tools.

Executives tend to trust outputs that combine (1) a shortlist, (2) a weekly digest, and (3) explicit source links.

Example: executive shortlist fields (minimum viable)

| Field | Purpose |
|---|---|
| item_title | scan-friendly headline |
| ecosystem_tag | Mobility / Welfare / Property, or your taxonomy |
| source_url | provenance link |
| publish_date | recency context |
| why_included | rubric-based reason (one line) |
| confidence_score | operational quality signal |
| reviewer_status | selected / rejected / follow-up |
| snapshot_hash | replayable version id |
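A minimal Python sketch of one shortlist row follows. It assumes that a SHA-256 digest of the captured bytes serves as `snapshot_hash` and that `confidence_score` is normalised to the range 0 to 1. Neither is a requirement of the table. The `make_item` factory and the sample values are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

# Allowed values mirror the reviewer_status column in the table.
ALLOWED_STATUSES = {"selected", "rejected", "follow-up"}


@dataclass(frozen=True)
class ShortlistItem:
    item_title: str
    ecosystem_tag: str
    source_url: str
    publish_date: str        # ISO 8601 date string, e.g. "2025-03-14"
    why_included: str
    confidence_score: float  # assumption: normalised to 0.0-1.0
    reviewer_status: str
    snapshot_hash: str


def snapshot_hash(raw_content: bytes) -> str:
    """SHA-256 of the exact captured bytes, so the item can be replayed later."""
    return hashlib.sha256(raw_content).hexdigest()


def make_item(raw_content: bytes, **fields) -> ShortlistItem:
    """Hypothetical factory: validate fields, then bind the item to its snapshot."""
    if fields["reviewer_status"] not in ALLOWED_STATUSES:
        raise ValueError(f"unknown reviewer_status: {fields['reviewer_status']!r}")
    if not 0.0 <= fields["confidence_score"] <= 1.0:
        raise ValueError("confidence_score must be between 0 and 1")
    return ShortlistItem(snapshot_hash=snapshot_hash(raw_content), **fields)


captured = b"<html>example regulator page</html>"
item = make_item(
    captured,
    item_title="Example: regulator updates motor claims guidance",
    ecosystem_tag="Mobility",
    source_url="https://example.com/circular",
    publish_date="2025-03-14",
    why_included="Regulatory impact 5/5: direct requirement",
    confidence_score=0.92,
    reviewer_status="selected",
)
print(json.dumps(asdict(item), indent=2))
```

Because the hash is computed from the bytes that were actually captured, a reviewer can later confirm that an exported row still points at the same version of the source.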

Data formats drive effort: semi-structured vs unstructured inputs

- Semi-structured: recurring report formats, policy schedule PDFs, vendor bulletins.

- Unstructured: news articles, announcements, long narratives, mixed layouts.

The hard part is normalizing, deduplicating, ranking, and explaining relevance with repeatable rules.
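The sketch below shows the first two steps in their simplest repeatable form. Text is normalised into a canonical form: Unicode NFKC, lowercase, and collapsed whitespace. The normalised text is then hashed, and only the first item per hash is kept. This catches exact repeats such as reposts that differ only in casing or spacing. Near-duplicates need a similarity measure, which is not shown here. The `body` and `source_url` keys are assumptions.

```python
import hashlib
import re
import unicodedata


def normalize(text: str) -> str:
    """Canonical form for comparison: NFKC, lowercase, single spaces."""
    text = unicodedata.normalize("NFKC", text).lower()
    return re.sub(r"\s+", " ", text).strip()


def dedupe(items: list[dict]) -> list[dict]:
    """Keep the first item for each normalized body; drop exact repeats."""
    seen: set[str] = set()
    unique = []
    for item in items:
        key = hashlib.sha256(normalize(item["body"]).encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(item)
    return unique


items = [
    {"source_url": "https://example.com/a", "body": "New  Motor Rules\nissued"},
    {"source_url": "https://example.org/b", "body": "new motor rules issued"},
    {"source_url": "https://example.com/c", "body": "Property cat bond update"},
]
print([i["source_url"] for i in dedupe(items)])
# ['https://example.com/a', 'https://example.com/c']
```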

Source selection must be lawful, stable, and defensible

Typical monitoring sources include regulator publications, associations, industry reports (PDFs), competitor press releases and product pages, partner announcements, and internal newsletters that need consistent formatting.

If your scope includes public web monitoring at scale, see AI-driven web scraping for context, and align collection methods with site terms and licensing.
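A collection gate can check the source's recorded rights before any fetch happens. The sketch below assumes each source carries a `license_status` label. The label values are hypothetical and should come from your Source/Rights Owner. It then applies the site's robots.txt through Python's standard `urllib.robotparser`. Robots.txt is only a technical access signal. It is not a license, so the rights check comes first.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rights labels; use whatever your Source/Rights Owner defines.
CLEARED_LICENSE_STATUSES = {"public_permitted", "licensed"}


def may_collect(source: dict, url: str, robots_lines: list[str],
                user_agent: str = "digest-bot") -> tuple[bool, str]:
    """Gate a fetch on the source's recorded rights first, then on robots.txt."""
    status = source.get("license_status")
    if status not in CLEARED_LICENSE_STATUSES:
        return False, f"license_status {status!r} not cleared"
    parser = RobotFileParser()
    parser.parse(robots_lines)  # robots.txt lines already fetched and cached
    if not parser.can_fetch(user_agent, url):
        return False, "disallowed by robots.txt"
    return True, "ok"


robots = ["User-agent: *", "Disallow: /members/"]
source = {"source_id": "reg-001", "license_status": "public_permitted"}
print(may_collect(source, "https://example.com/press/2025-03.html", robots))
print(may_collect(source, "https://example.com/members/report.pdf", robots))
```

Returning a reason string alongside the decision means a skipped source can be logged with the reason it was skipped.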

Claims intake: speed comes from standardizing attachments and routing

Attachments vary (scans, photos, mixed PDFs). The goal is standard intake and low setup time per new attachment pattern.

What “good” looks like (example fields):

- claim_id, date_of_loss, location

- attachment_type, extracted_entities, confidence_score

- routing_tag (for example: glass, bodily injury, property)

- review_required (true/false) plus a reason

Automating insurance claims data extraction helps most when it improves routing and reduces rework rather than chasing perfect automation.

Claims intake workflow (production-friendly)

- Collect inbound items from the approved channel (email box, portal, or API).

- Classify attachment types (photo, scan, PDF form, correspondence).

- Extract candidate fields with confidence signals.

- Apply validation rules (required fields, ranges, cross-field checks).

- Route to a queue: straight-through, reviewer, or specialist team.

- Log every decision: model version, rules version, reviewer action.
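The validation and routing steps above can be sketched as one function that returns a queue and the reasons behind it. Everything specific here is a hypothetical policy chosen for illustration:

- the confidence floor,
- the specialist tags,
- the `reported_date` field used for the cross-field check,
- the rules version label.

```python
from dataclasses import dataclass, field
from datetime import date

REQUIRED_FIELDS = ("claim_id", "date_of_loss", "location", "attachment_type")
SPECIALIST_TAGS = {"bodily injury"}   # hypothetical routing policy
CONFIDENCE_FLOOR = 0.85               # hypothetical threshold; tune per attachment type
RULES_VERSION = "claims-rules-v1"     # hypothetical label, logged with each decision


@dataclass
class RoutingDecision:
    queue: str                        # "straight-through" | "reviewer" | "specialist"
    review_required: bool
    reasons: list[str] = field(default_factory=list)
    rules_version: str = RULES_VERSION


def route_claim(fields: dict, confidence: float) -> RoutingDecision:
    reasons = []
    # Required-field check
    for name in REQUIRED_FIELDS:
        if not fields.get(name):
            reasons.append(f"missing {name}")
    # Cross-field check: a loss cannot be reported before it happened
    loss, reported = fields.get("date_of_loss"), fields.get("reported_date")
    if isinstance(loss, date) and isinstance(reported, date) and loss > reported:
        reasons.append("date_of_loss is after reported_date")
    # Range check on the extractor's confidence signal
    if confidence < CONFIDENCE_FLOOR:
        reasons.append(f"confidence {confidence:.2f} below {CONFIDENCE_FLOOR}")
    if fields.get("routing_tag") in SPECIALIST_TAGS:
        return RoutingDecision("specialist", True, reasons or ["specialist routing_tag"])
    if reasons:
        return RoutingDecision("reviewer", True, reasons)
    return RoutingDecision("straight-through", False)


claim = {
    "claim_id": "C-1001", "date_of_loss": date(2025, 1, 3), "reported_date": date(2025, 1, 5),
    "location": "Lyon", "attachment_type": "pdf_form", "routing_tag": "glass",
}
print(route_claim(claim, confidence=0.93))
print(route_claim({**claim, "location": ""}, confidence=0.70))
```

Each decision carries its reasons and `rules_version`, so the logging step can store them unchanged.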

Policy and renewal analytics: reduce re-keying with a consistent schema

Many servicing tasks still rely on “web + PDF,” not a clean database. If your team needs to extract data from insurance policy documents, start with a stable schema:

- limits, deductibles, insured name, locations, endorsements, effective dates

- version snapshot (hash), source path, extraction timestamp

- schema_version (so changes stay explicit)

This is where extracting insurance data points must include validation: a field that cannot be trusted becomes operational noise.

Policy schedule schema starter (example)

| Field group | Examples | Validation ideas |
|---|---|---|
| Parties | insured name, broker, carrier | normalization, duplicate checks |
| Coverage | limits, deductibles, sub-limits | range checks, currency handling |
| Dates | effective, expiry, endorsement dates | chronology checks |
| Locations | address, site identifiers | standard formatting |
| Endorsements | codes, descriptions | controlled vocabulary mapping |
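Here is a sketch of the Coverage and Dates rows as executable checks. Money values are parsed with `Decimal` to avoid float rounding. The function returns a list of issues rather than raising, so reviewers see every problem at once. The currency list and the rule that a deductible may not exceed the limit are assumptions for illustration.

```python
from datetime import date
from decimal import Decimal, InvalidOperation

KNOWN_CURRENCIES = {"EUR", "GBP", "USD"}  # hypothetical controlled vocabulary


def validate_schedule(s: dict) -> list[str]:
    """Return validation issues; an empty list means the record passes."""
    issues: list[str] = []
    # Coverage: parse money as Decimal, then range-check
    try:
        limit = Decimal(str(s["limit"]))
        deductible = Decimal(str(s["deductible"]))
    except (KeyError, InvalidOperation):
        return ["limit or deductible missing or not numeric"]
    if limit <= 0:
        issues.append("limit must be positive")
    if not 0 <= deductible <= limit:  # assumed rule
        issues.append("deductible must be between 0 and the limit")
    if s.get("currency") not in KNOWN_CURRENCIES:
        issues.append(f"unknown currency {s.get('currency')!r}")
    # Dates: chronology checks
    eff, exp = s.get("effective_date"), s.get("expiry_date")
    if not (isinstance(eff, date) and isinstance(exp, date) and eff < exp):
        issues.append("effective_date must be before expiry_date")
    else:
        for d in s.get("endorsement_dates", []):
            if not eff <= d <= exp:
                issues.append(f"endorsement date {d.isoformat()} outside policy period")
    return issues


schedule = {
    "limit": "1000000", "deductible": "2500", "currency": "EUR",
    "effective_date": date(2025, 1, 1), "expiry_date": date(2025, 12, 31),
    "endorsement_dates": [date(2025, 6, 1)],
}
print(validate_schedule(schedule))
print(validate_schedule({**schedule, "deductible": "5000000",
                         "endorsement_dates": [date(2026, 2, 1)]}))
```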

Life and health: stricter controls for access and retention

Life insurance data extraction often sits closer to sensitive workflows and tighter access constraints. If your scope includes data extraction for life insurance underwriting, enforce:

- role-based access control and least privilege

- retention rules plus deletion workflows

- reviewer and approver logs for every exported digest

- explainability rules for any score used in decision support
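The first and third controls can be sketched together. Access is checked deny-by-default with least-privilege roles, and every decision is logged, whether it was allowed or not. The role names and permission strings are hypothetical. In production these checks usually live in your identity provider and storage layer rather than in application code.

```python
from datetime import datetime, timezone

# Hypothetical role model: each role gets only the actions it needs.
ROLE_PERMISSIONS = {
    "reviewer": {"read_item", "set_reviewer_status"},
    "approver": {"read_item", "approve_export"},
    "reader": {"read_digest"},
}


def authorize(user_id: str, role: str, action: str, audit_log: list[dict]) -> bool:
    """Deny by default (unknown roles get no permissions); log every decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "user_id": user_id,
        "role": role,
        "action": action,
        "allowed": allowed,
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return allowed


log: list[dict] = []
print(authorize("u-17", "reviewer", "approve_export", log))  # reviewers cannot approve
print(authorize("u-42", "approver", "approve_export", log))
```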

Collection at scale is an operations decision

At scale, collection is about operating under drift: gating changes, layout changes, rate limits, and source turnover, plus lawful use and retention controls.
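Layout changes often show up first as fields that quietly stop being extracted. One cheap drift signal tracks how often each expected field is filled per source and raises an alert when that rate falls well below a baseline. The sketch assumes records are plain dicts. The 0.2 drop threshold and the field names are hypothetical.

```python
def fill_rates(records: list[dict], fields: tuple[str, ...]) -> dict[str, float]:
    """Share of records in which each field was extracted with a non-empty value."""
    n = len(records)
    return {f: (sum(1 for r in records if r.get(f)) / n if n else 0.0) for f in fields}


def drift_alerts(baseline: dict[str, float], current: dict[str, float],
                 max_drop: float = 0.2) -> list[str]:
    """Flag fields whose fill rate fell by more than max_drop versus the baseline."""
    alerts = []
    for f, base in baseline.items():
        cur = current.get(f, 0.0)
        if base - cur > max_drop:
            alerts.append(f"{f}: fill rate {base:.0%} -> {cur:.0%}")
    return alerts


baseline = {"title": 1.0, "publish_date": 0.95}  # e.g. recent weeks for one source
this_week = [
    {"title": "A", "publish_date": ""},
    {"title": "B"},
    {"title": "C", "publish_date": "2025-03-14"},
    {"title": "D"},
]
print(drift_alerts(baseline, fill_rates(this_week, ("title", "publish_date"))))
```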

Technologies behind modern extraction must be tied to outputs and controls

OCR + IDP for scans and recurring PDF layouts

OCR is mandatory for scans. IDP adds layout logic, field mapping, and review flows so the same document family stays consistent over time.

NLP for decision-ready notes

NLP supports topic tagging, entity extraction, duplicate clustering, and consistent summarisation (short plus long).

AI data extraction for insurance statements adds the most value when narratives are long, but its outputs must still be standardized and auditable.
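For duplicate clustering, the sketch below groups near-identical headlines. It compares word 3-gram sets (shingles) with Jaccard similarity in a single greedy pass. The 0.5 threshold and k=3 are assumptions to tune. At larger volumes, teams typically swap exact Jaccard for MinHash with locality-sensitive hashing, because this pass compares each text against every cluster.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Word k-grams of the lowercased text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cluster(texts: list[str], threshold: float = 0.5) -> list[list[int]]:
    """Greedy pass: each text joins the first cluster whose representative is similar enough."""
    clusters: list[list[int]] = []
    representatives: list[set] = []
    for i, text in enumerate(texts):
        s = shingles(text)
        for members, rep in zip(clusters, representatives):
            if jaccard(s, rep) >= threshold:
                members.append(i)
                break
        else:
            clusters.append([i])
            representatives.append(s)
    return clusters


headlines = [
    "The regulator published new guidance on motor claims handling today",
    "The regulator published new guidance on motor claims handling this week",
    "Flood losses push property reinsurance rates higher",
]
print(cluster(headlines))  # [[0, 1], [2]]
```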

RPA for legacy destinations

If exports must land in older internal tools, robotic process automation can route outputs without rebuilding downstream systems. Learn more about the benefits of RPA as a service.

Weekly digest workflow: the simplest model that stays governable

A data extraction insurance workflow is easiest to govern when outputs are fixed. Below is a production-grade flow that supports executive distribution:

- Discover: keywords plus curated sources per ecosystem.

- Retrieve: pull pages/PDFs via permitted methods.

- Parse: extract raw text (direct parsing/OCR).

- Summarise: generate a short plus long summary.

- Score: apply a rubric per ecosystem (relevance plus confidence).

- Review: human selection for executive distribution.

- Export: Executive PDF (short) plus Full PDF (long) plus CSV/JSON.

- Log: immutable metadata (who/when/which sources/which version).
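The Export and Log steps can be sketched as one function. It takes only reviewer-selected items and builds three things: an executive (short) body, a full (long) body, and a CSV. It also returns the metadata the Log step needs. PDF rendering is left out because it would need a PDF library. The two bodies are plain strings you would hand to your renderer. The field names `summary_short`, `summary_long`, and `export_id` are hypothetical.

```python
import csv
import io

CSV_FIELDS = ["export_id", "item_title", "ecosystem_tag", "source_url",
              "why_included", "snapshot_hash"]


def build_exports(items: list[dict], export_id: str) -> dict:
    """Split reviewer-selected items into executive and full bodies plus a CSV."""
    selected = [i for i in items if i["reviewer_status"] == "selected"]
    executive = [f"{i['item_title']}: {i['summary_short']} ({i['source_url']})" for i in selected]
    full = [f"{i['item_title']}\n{i['summary_long']}\nSource: {i['source_url']}" for i in selected]

    buf = io.StringIO()
    # extrasaction="ignore" drops keys (e.g. summaries) that are not CSV columns
    writer = csv.DictWriter(buf, fieldnames=CSV_FIELDS, extrasaction="ignore")
    writer.writeheader()
    for i in selected:
        writer.writerow({"export_id": export_id, **i})

    # Metadata for the Log step: which release, how many items, which snapshots
    log_entry = {
        "export_id": export_id,
        "item_count": len(selected),
        "snapshot_hashes": [i["snapshot_hash"] for i in selected],
    }
    return {"executive": executive, "full": full, "csv": buf.getvalue(), "log": log_entry}


items = [
    {"item_title": "Example circular on motor claims", "ecosystem_tag": "Mobility",
     "source_url": "https://example.com/circular", "why_included": "regulatory_impact=5 (high)",
     "summary_short": "New reporting duty.", "summary_long": "Longer summary text.",
     "snapshot_hash": "a" * 64, "reviewer_status": "selected"},
    {"item_title": "Repost of the same circular", "ecosystem_tag": "Mobility",
     "source_url": "https://example.org/repost", "why_included": "evidence_strength=1 (low)",
     "summary_short": "Repost.", "summary_long": "Repost.",
     "snapshot_hash": "b" * 64, "reviewer_status": "rejected"},
]
exports = build_exports(items, export_id="2025-W11")
print(exports["executive"])
print(exports["csv"])
```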

If you need controlled GenAI packaging in software, see generative AI software development.

Scoring rubric example (starter)

| Dimension | 0–2 (low) | 3–4 (medium) | 5 (high) | Notes/examples |
|---|---|---|---|---|
| Regulatory impact | unrelated | indirect | direct requirement | regulator circulars, enforcement updates |
| Commercial relevance | niche | segment-level | ecosystem-wide | product constraints, pricing rules |
| Novelty | repeated | partially new | new signal | first appearance, new clause |
| Evidence strength | unclear | acceptable | strong | primary source vs repost |
| Actionability | none | some | clear action | update checklist, notify owners |
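The rubric can be turned into a score and a one-line `why_included` reason. The sketch below assumes each dimension is rated as an integer from 0 to 5. It combines the ratings with per-ecosystem weights, which are hypothetical values the business would own and version. The result is normalised to a 0 to 1 relevance value. The explanation names the two dimensions that contributed most.

```python
DIMENSIONS = ("regulatory_impact", "commercial_relevance", "novelty",
              "evidence_strength", "actionability")
EQUAL_WEIGHTS = {d: 1.0 for d in DIMENSIONS}
# Hypothetical per-ecosystem weights; the business owns and versions these.
WEIGHTS = {
    "Mobility": {"regulatory_impact": 2.0, "commercial_relevance": 1.5, "novelty": 1.0,
                 "evidence_strength": 1.0, "actionability": 1.5},
}


def band(score: int) -> str:
    """Map a 0-5 dimension score to the rubric's low/medium/high bands."""
    return "low" if score <= 2 else "medium" if score <= 4 else "high"


def score_item(scores: dict[str, int], ecosystem: str) -> tuple[float, str]:
    """Return (relevance in 0..1, one-line why_included) for one item."""
    for d in DIMENSIONS:
        if not isinstance(scores.get(d), int) or not 0 <= scores[d] <= 5:
            raise ValueError(f"{d} must be an integer from 0 to 5")
    w = WEIGHTS.get(ecosystem, EQUAL_WEIGHTS)
    total = sum(w[d] * scores[d] for d in DIMENSIONS)
    relevance = total / (5 * sum(w.values()))
    # Explain the score with the two largest weighted contributions
    top = sorted(DIMENSIONS, key=lambda d: w[d] * scores[d], reverse=True)[:2]
    why = "; ".join(f"{d}={scores[d]} ({band(scores[d])})" for d in top)
    return round(relevance, 3), why


scores = {"regulatory_impact": 5, "commercial_relevance": 3, "novelty": 4,
          "evidence_strength": 5, "actionability": 2}
print(score_item(scores, "Mobility"))
# (0.757, 'regulatory_impact=5 (high); evidence_strength=5 (high)')
```

Keeping the weights in versioned data rather than in prompts makes the "change control for prompts and scoring rules" requirement easier to satisfy.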

Compliance and security controls (minimum set)

“Even teams with strong in-house tools face failures… because the expertise to catch these shifts in real time is missing.”

— Oleg Boyko, COO, GroupBWT

You must be able to answer: what data is stored, where it is hosted, who can access it, why an item was included, and whether the exact version can be replayed.

Recommended controls:

- retention plus deletion policies

- access levels and reviewer/approver roles

- change control for prompts and scoring rules

- export logs with replayable snapshots
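One way to make export logs tamper-evident is a hash chain, in which each entry's hash also covers the previous entry's hash. The sketch below uses only the standard library. It detects edits, reordering, and removals from the middle of the log. It does not prevent them, and it cannot detect truncation of the newest entries. That is why write-once storage is still assumed. The record fields are illustrative.

```python
import hashlib
import json

GENESIS = "0" * 64


def _digest(prev_hash: str, record: dict) -> str:
    # Canonical JSON (sorted keys, compact) so the same record always hashes the same
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], record: dict) -> dict:
    """Append a record whose hash covers the previous entry's hash."""
    prev = log[-1]["entry_hash"] if log else GENESIS
    entry = {"record": record, "prev_hash": prev, "entry_hash": _digest(prev, record)}
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute the chain; an edited, reordered, or mid-log removed entry breaks it."""
    prev = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev or entry["entry_hash"] != _digest(prev, entry["record"]):
            return False
        prev = entry["entry_hash"]
    return True


export_log: list[dict] = []
append_entry(export_log, {"export_id": "2025-W11", "rubric_version": "r3", "items": 14})
append_entry(export_log, {"export_id": "2025-W12", "rubric_version": "r3", "items": 11})
print(verify(export_log))                # True
export_log[0]["record"]["items"] = 15    # simulate a silent edit
print(verify(export_log))                # False
```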

A practical hosting question to answer early: where artifacts and logs live, and whether access is enforced through your identity provider. Even when inputs are public, the digest output and scoring notes can become internal work product. Treat storage, access, and retention as part of the design, not as an afterthought.

A validation mindset is covered in big data software testing.

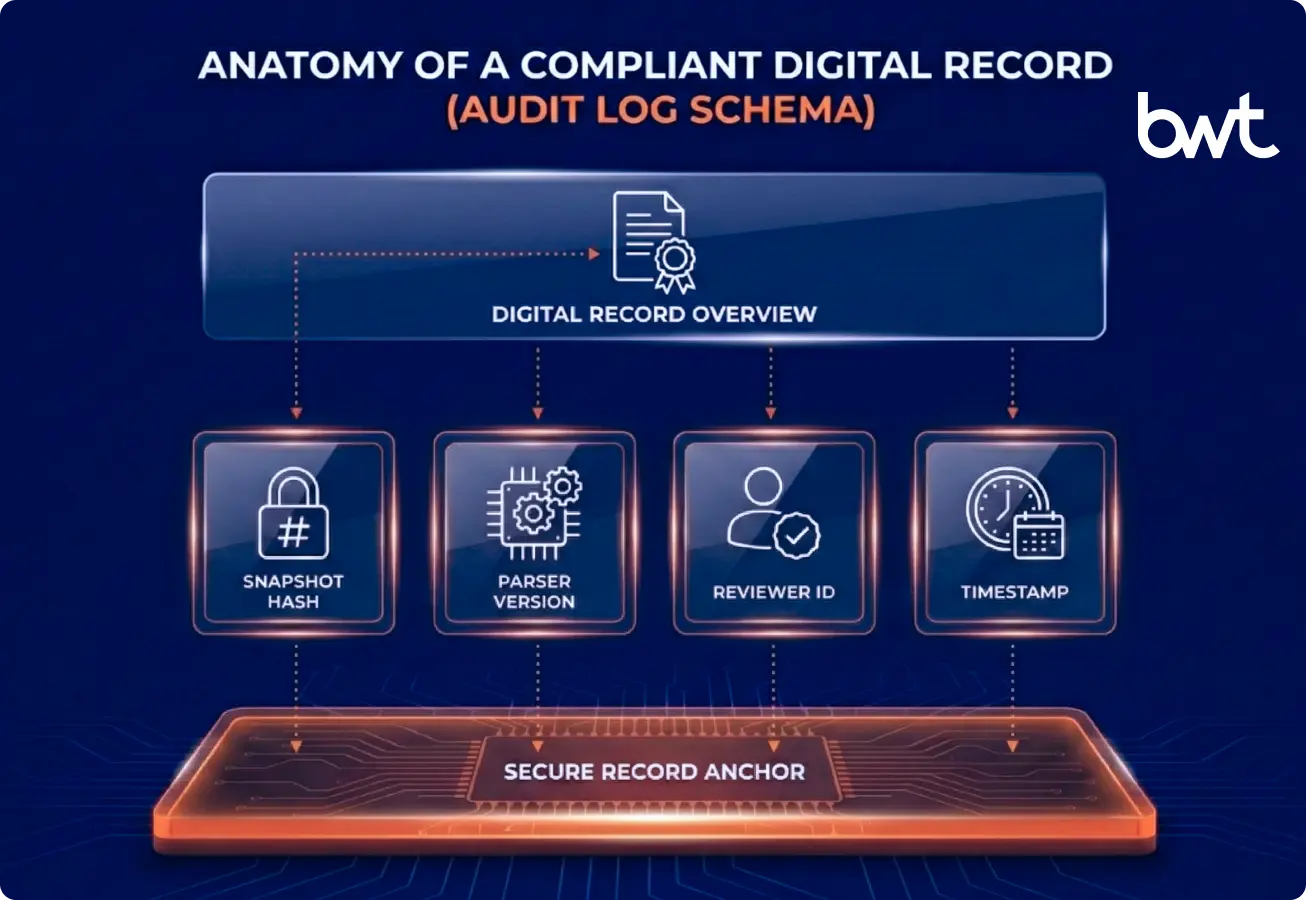

Governance log schema (minimum viable)

- source_id, source_url, license_status

- snapshot_hash, snapshot_timestamp

- parser_version (or extraction method id)

- rubric_version and prompt_version (if GenAI is used)

- confidence_score and review_required_reason

- reviewer_id, approver_id, decision_timestamp

- export_id (ties items to a digest release)

- retention_policy_id and deletion_due_date
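The schema maps directly onto a frozen record type. In the sketch below, `deletion_due_date` is derived from `retention_policy_id` through a policy table. The policy IDs and day counts are hypothetical. Deriving the date keeps retention rules in one place. If you need past deletion dates to stay fixed when a policy changes, compute and store the date at write time instead.

```python
from dataclasses import dataclass
from datetime import date, datetime, timedelta, timezone
from typing import Optional

# Hypothetical retention policies, keyed by retention_policy_id
RETENTION_DAYS = {"public-90d": 90, "internal-365d": 365}


@dataclass(frozen=True)
class GovernanceLogEntry:
    source_id: str
    source_url: str
    license_status: str
    snapshot_hash: str
    snapshot_timestamp: datetime
    parser_version: str
    rubric_version: str
    prompt_version: Optional[str]          # None when GenAI is not used
    confidence_score: float
    review_required_reason: Optional[str]
    reviewer_id: str
    approver_id: str
    decision_timestamp: datetime
    export_id: str
    retention_policy_id: str

    @property
    def deletion_due_date(self) -> date:
        """Snapshot time plus the policy's retention period."""
        days = RETENTION_DAYS[self.retention_policy_id]
        return (self.snapshot_timestamp + timedelta(days=days)).date()


def due_for_deletion(entries: list[GovernanceLogEntry], today: date) -> list[GovernanceLogEntry]:
    return [e for e in entries if e.deletion_due_date <= today]


entry = GovernanceLogEntry(
    source_id="reg-001", source_url="https://example.com/circular",
    license_status="public_permitted", snapshot_hash="0" * 64,
    snapshot_timestamp=datetime(2025, 1, 1, 9, 30, tzinfo=timezone.utc),
    parser_version="pdf-parser-2", rubric_version="r3", prompt_version=None,
    confidence_score=0.91, review_required_reason=None,
    reviewer_id="u-17", approver_id="u-42",
    decision_timestamp=datetime(2025, 1, 2, 14, 0, tzinfo=timezone.utc),
    export_id="2025-W01", retention_policy_id="public-90d",
)
print(entry.deletion_due_date)  # 2025-04-01
```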

ROI: measure time saved, acceptance rate, and rework

“Only the one who outperforms competitors is the one who sees patterns and hidden trends based on historical data.”

— Olesia Holovko, CMO, GroupBWT

Monthly savings ≈ (hours/week saved × blended hourly cost × 4.3) − monthly platform cost.

Worked example:

- Baseline: 10 hours/week → After workflow: 4 hours/week

- Hours saved: 6 hours/week; blended hourly cost: 60; monthly platform cost: 900

- Monthly savings ≈ (6 × 60 × 4.3) − 900 ≈ 648
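The formula is easy to keep next to pilot data as a small function. The break-even helper is an addition that follows from the same formula. It returns the weekly hours that must be saved before savings reach zero.

```python
WEEKS_PER_MONTH = 4.3  # the approximation used in the formula above


def monthly_savings(hours_saved_per_week: float, blended_hourly_cost: float,
                    monthly_platform_cost: float) -> float:
    return hours_saved_per_week * blended_hourly_cost * WEEKS_PER_MONTH - monthly_platform_cost


def break_even_hours(blended_hourly_cost: float, monthly_platform_cost: float) -> float:
    """Weekly hours that must be saved before the platform pays for itself."""
    return monthly_platform_cost / (blended_hourly_cost * WEEKS_PER_MONTH)


print(round(monthly_savings(10 - 4, 60, 900), 2))  # 648.0, matching the worked example
print(round(break_even_hours(60, 900), 2))         # 3.49 hours/week
```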

Pilot metrics (track weekly)

| Metric | What it measures | Why it matters |
|---|---|---|
| Time-to-digest | weekly production time | shows operational load |
| Acceptance rate | % items kept after review | shows relevance and trust |
| Duplicate reduction | duplicates removed by clustering | cuts noise |
| Coverage gaps | missing topics/sources | validates discovery |
| Export reliability | failed/partial exports | protects stakeholder trust |
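Here is a sketch of computing the five metrics from one week's raw counts. All input field names are hypothetical. Duplicate reduction is expressed as a share of collected items, and export reliability as a failure rate. Both are choices that could reasonably be made differently.

```python
def pilot_metrics(week: dict) -> dict:
    """Turn one week's raw counts (hypothetical field names) into pilot metrics."""
    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0

    return {
        "time_to_digest_hours": week["production_hours"],
        "acceptance_rate": ratio(week["items_kept"], week["items_reviewed"]),
        "duplicate_reduction": ratio(week["duplicates_removed"], week["items_collected"]),
        "coverage_gaps": sorted(set(week["expected_topics"]) - set(week["covered_topics"])),
        "export_failure_rate": ratio(week["failed_exports"], week["export_attempts"]),
    }


week_1 = {
    "production_hours": 4.5, "items_collected": 80, "duplicates_removed": 16,
    "items_reviewed": 50, "items_kept": 20,
    "expected_topics": ["ev charging", "flood", "telematics"],
    "covered_topics": ["flood", "telematics"],
    "export_attempts": 3, "failed_exports": 0,
}
print(pilot_metrics(week_1))
```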

Build vs buy: decide with a scorecard

Use a scorecard aligned to monitoring realities: relevance quality (not only OCR accuracy), business-owned configurability, audit trail, export formats, reliability under drift, rights controls, and integration needs. Designing the scorecard around data extraction insurance workloads makes teams compare controls, not demos.

Build vs buy scorecard (example)

| Criterion | What to verify | Evidence to request |
|---|---|---|
| Configurability | edit rubrics, sources, taxonomy | admin UI, change logs |
| Audit trail | replayable snapshots and decisions | sample logs, schema |
| Output formats | exec vs full, CSV/JSON, API | example files, API docs |
| Drift handling | monitoring, fallbacks, alerting | incident examples |
| Rights model | retention, deletion, license_status | policy mapping |
| Integration | storage, BI, identity provider | SSO and connectors |
| Cost predictability | fixed fee vs usage spikes | billing model |
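A weighted version of the scorecard forces weights to be agreed on before any demo. It also rejects an option that leaves a criterion unrated. The weights, the 0 to 5 rating scale, and the convention that 0 means "no evidence provided" are assumptions. The build and buy ratings below are illustrative, not measured.

```python
# Hypothetical weights (1-3); agree them before any vendor demo.
CRITERIA_WEIGHTS = {
    "configurability": 3, "audit_trail": 3, "output_formats": 1, "drift_handling": 2,
    "rights_model": 3, "integration": 2, "cost_predictability": 1,
}


def weighted_score(ratings: dict[str, int]) -> float:
    """Ratings run 0-5 per criterion; by assumption, 0 means no evidence was provided."""
    missing = CRITERIA_WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"unrated criteria: {sorted(missing)}")
    total_weight = sum(CRITERIA_WEIGHTS.values())
    return sum(w * ratings[c] for c, w in CRITERIA_WEIGHTS.items()) / total_weight


build = {"configurability": 5, "audit_trail": 4, "output_formats": 4, "drift_handling": 2,
         "rights_model": 4, "integration": 5, "cost_predictability": 3}
buy = {"configurability": 3, "audit_trail": 2, "output_formats": 5, "drift_handling": 4,
       "rights_model": 2, "integration": 3, "cost_predictability": 5}
print(f"build {weighted_score(build):.2f} vs buy {weighted_score(buy):.2f}")
```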

Selecting the best AI tools for data extraction in insurance should be treated as risk-managed procurement because auditability matters as much as model quality. If you prototype before productionizing, scope discipline helps; see MVP Development company.

Real-world pattern (Insurance, 2025): scaling a digest from 30 to 2,000+ recipients

Client profile: European composite insurer, innovation unit. Goal: scale a digest into a repeatable communication product with consistent relevance logic.

What changed:

- business-owned keyword/source management

- ecosystem segmentation (Mobility/Welfare/Property)

- AI scoring plus consistent summaries (short plus long)

- human approval before export

- executive vs full PDF outputs with traceability metadata

- operational dashboard (limits, drift signals, coverage)

- fixed monthly fee bundling search/proxy/AI usage into one predictable number

Summary: ship a “safe” workflow first, then expand

Ship-Safe checklist:

- rights per source, with retention rules

- versioned documents (hash/snapshot)

- traceable summaries linked to citations

- quality gates and human review paths

- monitoring for drift and coverage

- incident plan with rollback steps

Pilot (3–4 weekly cycles):

- Pick one ecosystem.

- Define 20–40 keywords plus 20–60 sources with business ownership.

- Configure two outputs: Executive PDF (short) plus Full PDF (long).

- Run weekly for 3–4 cycles; tune rubric and prompts under change control.

FAQ

Can we scrape paywalled sources if we don’t redistribute the text?

Not safely by default—paywall access doesn’t grant automated extraction rights; you need explicit license terms or a permitted access method.

What’s the safest alternative to scraping?

Contracted APIs/data feeds or publisher licensing, with documented allowed use and retention rules.

What schema do we need so weekly outputs are comparable?

Source → Document (versioned) → Extracted Item (typed claim + citation spans) → Entity/Topic tags → Weekly Brief with approval metadata.
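That chain can be sketched as plain dataclasses plus one release check. Field names beyond the five entities named in the answer are hypothetical. So is the rule that every briefed item must cite its own document.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Source:
    source_id: str
    url: str
    license_status: str


@dataclass(frozen=True)
class Document:            # one versioned capture of a Source
    doc_id: str
    source_id: str
    snapshot_hash: str
    version: int


@dataclass(frozen=True)
class CitationSpan:        # character offsets into the Document snapshot
    doc_id: str
    start: int
    end: int


@dataclass
class ExtractedItem:
    item_id: str
    doc_id: str
    claim_type: str        # hypothetical, e.g. "regulatory_change"
    claim_text: str
    citations: list[CitationSpan]
    tags: list[str] = field(default_factory=list)  # entity/topic tags


@dataclass
class WeeklyBrief:
    week_start: date
    item_ids: list[str]
    approved_by: Optional[str] = None
    approved_on: Optional[date] = None


def unreleasable_items(brief: WeeklyBrief, items: dict[str, ExtractedItem]) -> list[str]:
    """IDs that block release: unknown items, or items without a citation into their own document."""
    blocked = []
    for item_id in brief.item_ids:
        item = items.get(item_id)
        if item is None or not any(c.doc_id == item.doc_id for c in item.citations):
            blocked.append(item_id)
    return blocked


items = {
    "i-1": ExtractedItem("i-1", "d-1", "regulatory_change", "Quarterly reporting required",
                         [CitationSpan("d-1", 10, 40)], ["Mobility"]),
    "i-2": ExtractedItem("i-2", "d-2", "product_launch", "New telematics product", [], ["Mobility"]),
}
brief = WeeklyBrief(date(2025, 3, 10), ["i-1", "i-2", "i-9"])
print(unreleasable_items(brief, items))  # ['i-2', 'i-9']
```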

How do we stop hallucinations before execs see them?

Enforce grounding and entailment checks, require immutable citations, and route low-confidence/high-impact items to human review.
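The immutable-citation and routing parts of that answer can be sketched with the standard library. Each cited quote must appear at its stated offsets in a stored snapshot whose SHA-256 still matches. Items that fail, fall below a confidence floor, or carry high impact go to human review. The thresholds and dict keys are hypothetical. Entailment (whether the quote actually supports the summary) needs a separate NLI model or a reviewer and is not shown.

```python
import hashlib


def check_grounding(item: dict, snapshots: dict[str, str]) -> list[str]:
    """Lexical grounding: every cited quote must sit at its offsets in an unaltered snapshot."""
    if not item["citations"]:
        return ["no citations"]
    problems = []
    for c in item["citations"]:
        text = snapshots.get(c["snapshot_hash"])
        if text is None:
            problems.append(f"snapshot {c['snapshot_hash'][:12]} not found")
        elif hashlib.sha256(text.encode("utf-8")).hexdigest() != c["snapshot_hash"]:
            problems.append("stored snapshot does not match its hash")
        elif text[c["start"]:c["end"]] != c["quote"]:
            problems.append(f"quote not found at {c['start']}:{c['end']}")
    return problems


def needs_human_review(item: dict, problems: list[str],
                       confidence_floor: float = 0.8, high_impact: int = 4) -> bool:
    """Hypothetical thresholds: failed grounding, low confidence, or high impact go to people."""
    return bool(problems) or item["confidence"] < confidence_floor or item["impact"] >= high_impact


snapshot = "Example circular: insurers must file quarterly claims reports from 2026."
h = hashlib.sha256(snapshot.encode("utf-8")).hexdigest()
quote = "quarterly claims reports"
start = snapshot.index(quote)
item = {
    "summary": "Insurers will need to file claims reports every quarter.",
    "confidence": 0.9,
    "impact": 5,
    "citations": [{"snapshot_hash": h, "start": start, "end": start + len(quote), "quote": quote}],
}
problems = check_grounding(item, {h: snapshot})
print(problems, needs_human_review(item, problems))  # prints: [] True
```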

Who owns this operationally?

A Product Owner plus Source/Rights Owner, Taxonomy Owner, Model Owner, Reviewers, Approvers, and SRE—otherwise drift is guaranteed.