Competitors now change prices several times per hour. Merchandising teams miss promotion depth and timing without structured Costco signals. Finance loses clean baselines for elasticity tests when updates arrive late. Supply planners pay more for emergency freight when stock signals drift. A resilient Costco data scraping pipeline fixes these pains. Leaders regain timely price deltas, near-real-time availability, and measurable margin control.

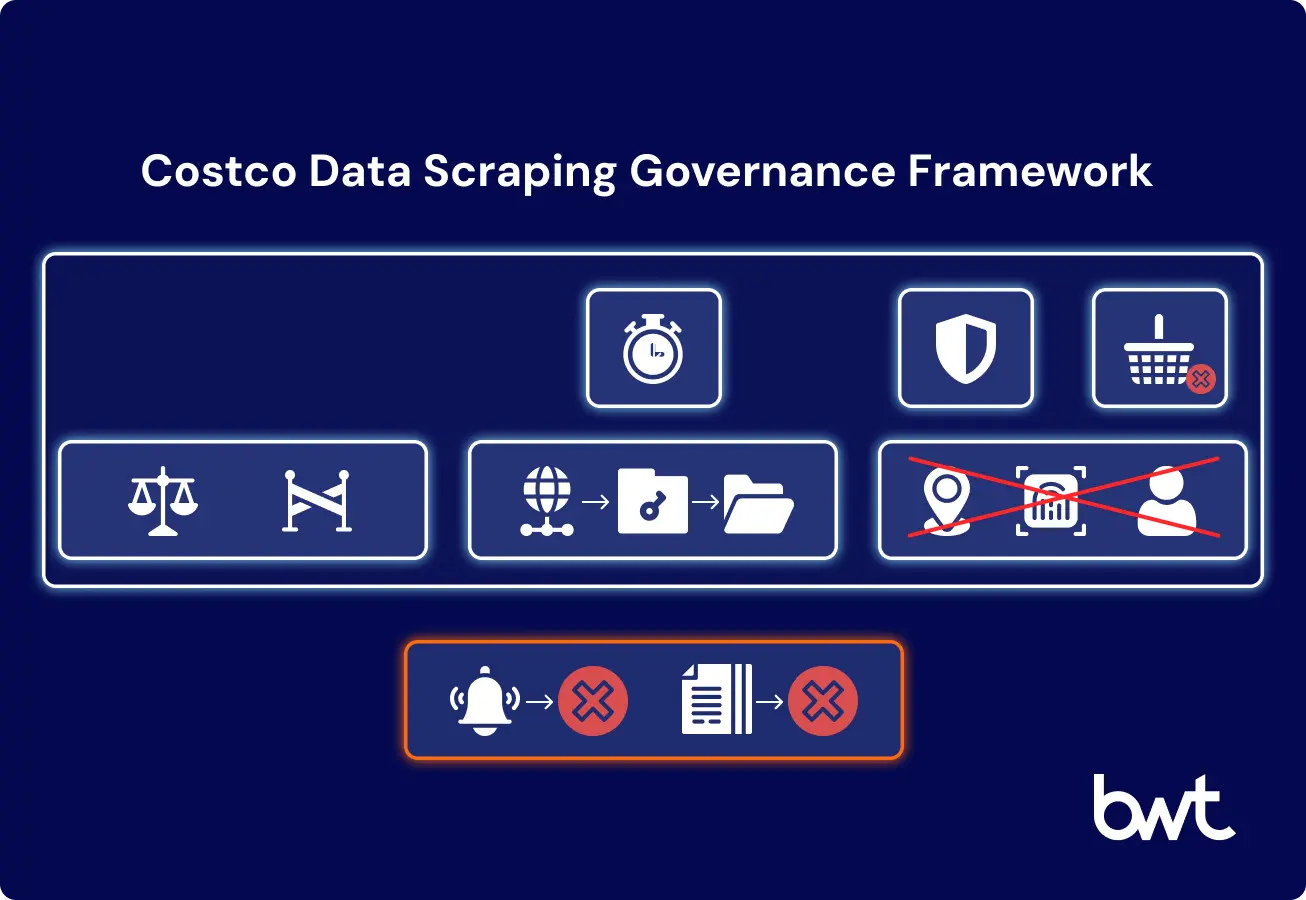

Leaders set permitted scope, privacy rules, and page types. Engineers enforce a safe request pace of about one page per second per host. Systems tag each request with a purpose and version. Logs record URL, time, header, and response code. If Costco servers show strain, collectors slow down automatically. If Terms of Service change or an official notice arrives, collection pauses and counsel reviews.

Executives face a narrow tradeoff: extracting reliable Costco retail signals while minimizing legal and operational exposure. The delivery team designs pipelines for lawful access, low system impact, and full auditability. The U.S. baseline rests on federal CFAA policy, expanded state privacy enforcement, and contractual restrictions. Operational standards cover crawl budgets, lineage, and takedown response.

Summary of Web Scraping Costco in 2025

Price and availability feeds create faster promotion reactions. Clean signals cut forecast error and shrink unplanned logistics. Boards care because these effects land in EBIT, not vanity dashboards.

Simple controls that scale

Scope means “which pages are allowed.” Privacy floor means “which fields are excluded.” Request speed means “how fast the system may fetch pages.” These controls keep the collection safe and repeatable across teams.
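The three controls fit into one small policy object that every collector reads before it fetches a page. The sketch below is a minimal illustration, not a production design: the `CollectionPolicy` class, the `/products/` prefix, and the excluded field names are assumptions chosen for the example, not Costco's actual URL layout.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class CollectionPolicy:
    """Hypothetical policy object; every value below is an illustrative assumption."""
    version: str
    allowed_path_prefixes: tuple[str, ...]   # scope: which pages are allowed
    excluded_fields: frozenset[str]          # privacy floor: which fields are excluded
    min_seconds_between_requests: float      # request speed: how fast pages are fetched

    def in_scope(self, url: str) -> bool:
        """Return True only when the URL path starts with an approved prefix."""
        path = urlparse(url).path
        return any(path.startswith(prefix) for prefix in self.allowed_path_prefixes)


policy = CollectionPolicy(
    version="2025-01",
    allowed_path_prefixes=("/products/",),            # assumed public product route
    excluded_fields=frozenset({"email", "precise_location"}),
    min_seconds_between_requests=1.0,                 # about one page per second per host
)
```

Freezing the dataclass keeps the policy read-only at runtime, so a change in scope has to ship as a new, versioned policy rather than an in-place edit.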

Decision Flow 2025

Leadership defines a permitted scope, crawl budget, and privacy floor. The system enforces rate limits, headers, and page types. Compliance logs every action.

“All organizations and enterprises… should ensure that cybersecurity risks receive appropriate attention as they carry out their ERM functions.” — National Institute of Standards and Technology, NIST IR 8286r1 (Initial Public Draft): Integrating Cybersecurity and Enterprise Risk Management (ERM), February 2025, Abstract/pp. i–iii.

Risks at a Glance

Delivery teams treat ToS changes, IP blocks, and cease-and-desist letters as revocation signals. Counsel tracks new sensitive categories and deletion regimes. Risk registers connect incidents to the board’s appetite.

Exact data: NIST identifies “risk registers” as a key “communication vehicle for sharing and coordinating cybersecurity risk activities” (NIST IR 8286r1, p. 10).

Checklist for Costco Scraping Controls

Executives sign scope definitions, approve deletion SLAs, and require quarterly ToS monitoring. Engineers enforce concurrency caps and adaptive backoff. Audit validates lineage, consent pathways, and incident playbooks.

| Control | Owner | Frequency | Evidence Artifact |
|---|---|---|---|
| Deletion SLA | Counsel | Quarterly | Deletion tickets |
| ToS Monitoring | Engineers | Monthly | Page snapshots |
| Crawl Budget Review | Ops | Weekly | Load ledger reports |
| Audit Trail Review | Audit | Quarterly | Log lineage validation |

Linking risk registers to ERM shortens escalation time and reduces variance in legal outcomes during quarterly reviews.

Market Context: Why Scrape Costco

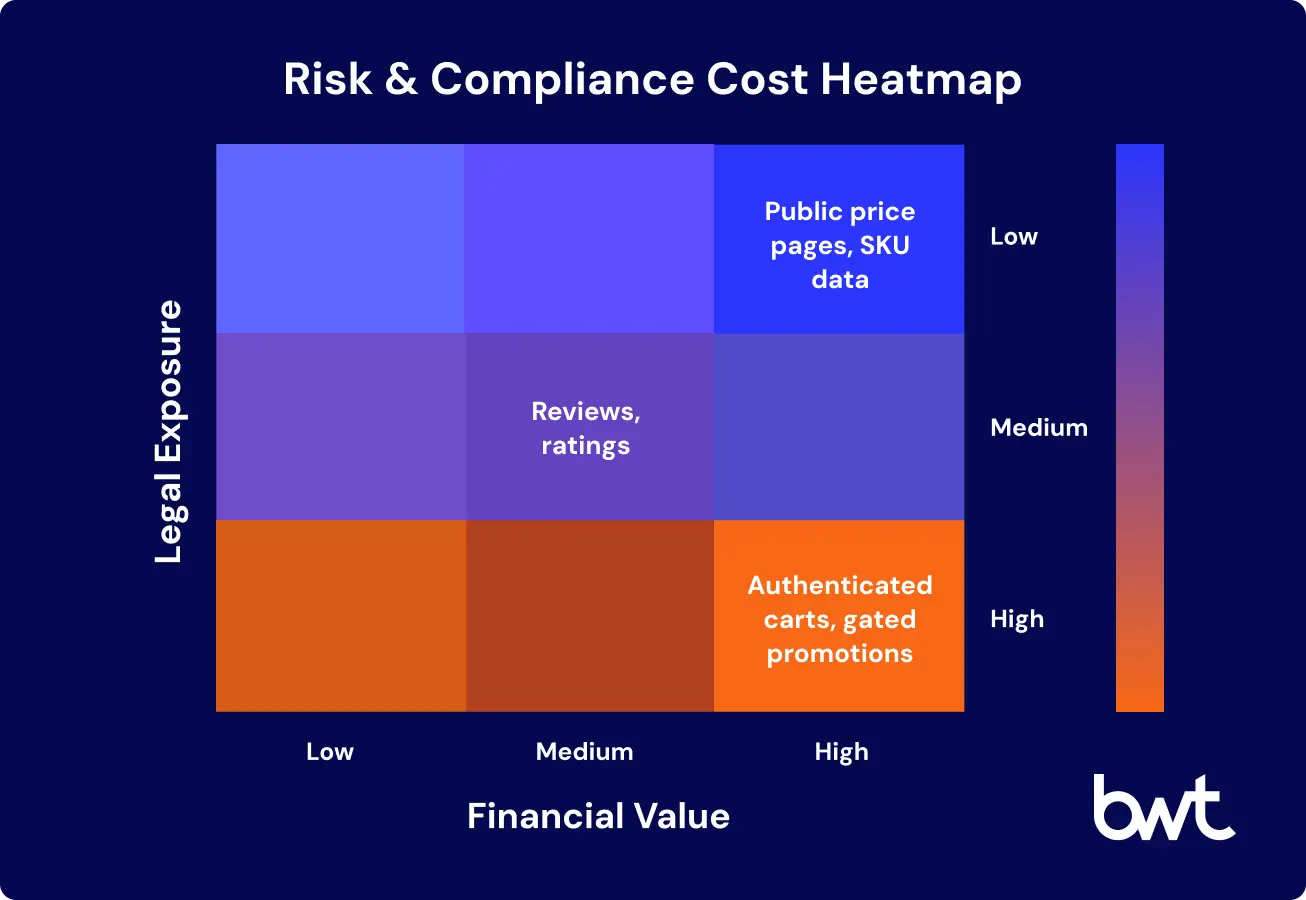

Retail leaders need timely competitor benchmarks. Public Costco product pages expose prices, availability, and SKU details that drive pricing and promotion decisions. Private areas such as carts and account-only routes remain off-limits. A disciplined collector turns these public pages into daily price deltas, promotion lift, and stock signals. Teams then test offers, trim discounts, and plan inventory with less volatility.

Public product pages — prices, stock availability, and SKU details — remain within lawful scope and form the foundation for competitive benchmarking. Authenticated routes, such as carts or account-only promotions, remain private and must be excluded to stay compliant.

KPMG highlights that “the next wave of value comes from linking fragmented data assets to specific growth decisions.” For Costco, fragmentation means thousands of SKUs spread across categories, each with dynamic pricing and promotion depth.

Reliable data scraping pipelines transform this noise into actionable insights: daily price deltas, promotion lift, and inventory signals that feed merchandising, supply planning, and finance models.

BCG analysis (2025) illustrates the financial stakes. Their “Reshaped P&L model” shows how AI-powered revenue management can lift EBIT margins by 500–800 basis points (5–8 percentage points) if data is accurate and continuous.

The model breaks down as follows:

- Gross revenue rises by 3–5% through optimized pricing and promotions.

- Trade discounts and rebates fall by 5–10% with cleaner negotiations.

- Cost of goods sold shrinks by 3–5% when inventory flows match demand.

- Labor costs drop 20–30% through AI-enabled forecasting and planning.

- Advertising costs decline 10–15% for the same outcomes when campaigns target accurately.

- Logistics and other costs fall by 3–5% due to fewer emergency shipments.

The cumulative effect produces EBIT gains of 10–20%.

For Costco monitoring, these numbers translate into millions preserved or lost, depending on whether competitors’ promotions are detected in time. A robust data scraping system captures those signals daily, enabling faster adjustments and preserving margin continuity.

Reliable signals drive cleaner revenue experiments. Weak pipelines inflate risk and corrupt baselines, cutting EBIT gains promised by AI adoption.

How to Scrape Costco in the U.S. Legally

Pricing teams miss promotion timing without daily Costco signals. Finance loses clean baselines when updates arrive late. A lawful, auditable collector fixes this by reading only public pages, pacing requests safely, and stopping when authorization changes. Leaders gain clean deltas, fewer emergency shipments, and steadier gross margins.

Counsel defines allowed and blocked pages. Engineers cap speed to about one page per second per host. Systems log every request with URL, time, header, and response code. A change in robots.txt or Terms of Use raises an alert. A cease-and-desist order pauses collection for review.

Costco’s Terms and Conditions of Use remain the live contractual boundary and are monitored with snapshots. California’s tightening privacy regime shows in the Assembly’s SB-361 analysis, which requires data brokers to disclose whether they collect account logins or biometric data and whether they shared data with AI developers in the past year.

Federal Policy Floor

Counsel frames the CFAA (Computer Fraud and Abuse Act) as the legal floor that separates technical access from contract terms. Enforcement focuses on fraud and unauthorized intrusion, especially when collectors bypass IP blocks or technical barriers. By contrast, courts and the Department of Justice have clarified that violations of Terms of Service alone do not trigger CFAA liability. This creates room for public data scraping but requires teams to maintain sharp contractual monitoring.

Contractual Boundaries

Delivery teams monitor Costco’s Terms of Use. Systems snapshot ToS monthly. Counsel treats IP blocks or notices as revoked authorization. Engineers throttle collection when robots or headers shift. Costco’s site bans “bots” and “data mining,” making compliance a standing operational duty.
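A monthly snapshot only helps if a change is detected reliably. One simple approach, sketched here under assumptions, is to fingerprint the normalized page text and compare it with the previous snapshot. The function names are hypothetical, and the input is assumed to be text already extracted from the Terms page.

```python
import hashlib
import re


def fingerprint(page_text: str) -> str:
    """Hash the text after collapsing whitespace and case, so layout noise is ignored."""
    normalized = re.sub(r"\s+", " ", page_text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def tos_changed(previous_snapshot: str, current_snapshot: str) -> bool:
    """Return True when the wording differs between two snapshots."""
    return fingerprint(previous_snapshot) != fingerprint(current_snapshot)
```

A `True` result does not interpret the change. It only raises the alert that sends the new text to counsel for review.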

Privacy Laws Rising

Analysts treat sensitive categories as off-limits. Product owners exclude geolocation, health, and identifiers. The SB-361 policy committee analysis states that brokers must indicate whether they collect account logins or biometric data and whether they sold or shared information with AI developers in the past year (pp. 1–2). Publicly available information can be excluded from “personal information,” but SB-361 expands sensitive categories, raising disclosure and audit exposure (p. 4).

Treat public Costco data as permitted until authorization is revoked or sensitive fields appear. Fund a standing ToS watch and broker-data exclusion list.

Scraping Costco: Website Controls and Signals

Operations leaders need stable access without site friction. Abrupt blocks erase trend lines and stall pricing reviews. A respectful collector prevents this. Safe pacing reduces server strain. Clear headers state the purpose. Fast withdrawal preserves future access and shortens downtime.

Legal and engineering align on two live artifacts. Engineers read robots.txt as an intent signal. Legal treats Costco’s Terms and Conditions of Use as the contractual boundary. Snapshot this page for audits and change tracking.

Robots.txt and ToS

Delivery engineers parse robots.txt as an intent signal. Legal interprets Costco’s ToS as a contractual boundary. The system blocks collection on any authenticated route. Operational artifact: Costco Wholesale, Terms and Conditions of Use, live HTML; snapshots required for audit.
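Both checks can run before every fetch. The sketch below uses Python's standard `urllib.robotparser`. The sample robots.txt content, the route prefixes, and the user-agent string are hypothetical placeholders, not Costco's real rules.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
"""

# Assumed prefixes for authenticated routes that are always out of scope.
AUTHENTICATED_PREFIXES = ("/account/", "/cart/", "/checkout/")


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that was fetched and snapshotted elsewhere."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def may_fetch(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Block authenticated routes first, then defer to robots.txt."""
    if urlparse(url).path.startswith(AUTHENTICATED_PREFIXES):
        return False
    return parser.can_fetch(user_agent, url)
```

Checking the authenticated prefixes first means a permissive robots.txt can never widen scope beyond what counsel approved.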

Implementation

Engineers cap concurrency, apply adaptive backoff, and tag every request with purpose and policy version. Logs include URL, timestamp, header, and response code.
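A structured log entry makes those fields auditable. The following is a minimal sketch: the `RequestLogEntry` name and the JSON-lines output format are assumptions, and the fields mirror the ones listed above.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class RequestLogEntry:
    """One audit record per request (hypothetical schema)."""
    url: str
    timestamp: str        # ISO 8601, UTC
    headers: dict         # request headers as sent
    status_code: int
    purpose: str          # business purpose tag
    policy_version: str   # version of the collection policy in force


def make_log_entry(url, headers, status_code, purpose, policy_version, now=None):
    """Build a log entry; `now` can be injected to make tests deterministic."""
    now = now or datetime.now(timezone.utc)
    return RequestLogEntry(
        url=url,
        timestamp=now.isoformat(),
        headers=dict(headers),
        status_code=status_code,
        purpose=purpose,
        policy_version=policy_version,
    )


def to_json_line(entry: RequestLogEntry) -> str:
    """Serialize with sorted keys so identical entries produce identical lines."""
    return json.dumps(asdict(entry), sort_keys=True)
```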

Operational Withdrawal Signals

Security teams treat 403/429 bursts, IP blocks, or cease-and-desist letters as revoked authorization. Pipelines flip to safe mode: halt writes, preserve logs, notify counsel.
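The flip to safe mode can be modeled as a small state machine over recent response codes. This is a sketch under assumptions: the window size and the threshold are illustrative values, not recommended settings, and the class name is hypothetical.

```python
from collections import deque
from enum import Enum


class Mode(Enum):
    COLLECTING = "collecting"
    SAFE = "safe"


class WithdrawalMonitor:
    """Flip to safe mode when blocked responses pile up in a recent window."""

    BLOCK_CODES = {403, 429}

    def __init__(self, window: int = 20, max_blocked: int = 5):
        self.recent = deque(maxlen=window)   # True for each blocked response
        self.max_blocked = max_blocked
        self.mode = Mode.COLLECTING

    def record(self, status_code: int) -> Mode:
        """Track one response; the mode never returns to COLLECTING on its own."""
        self.recent.append(status_code in self.BLOCK_CODES)
        if sum(self.recent) >= self.max_blocked:
            self.mode = Mode.SAFE
        return self.mode

    def legal_notice_received(self) -> Mode:
        """A cease-and-desist letter or similar notice forces safe mode at once."""
        self.mode = Mode.SAFE
        return self.mode
```

Safe mode is deliberately sticky in this sketch: only a human decision after counsel's review should resume collection.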

Rapid withdrawal handling protects future access. Delay compounds legal exposure and prolongs downtime.

How to Scrape Costco with State-Level Rules in Mind

Product managers keep an allowlist of public fields: price, availability, SKU details, promotion depth. Privacy leads maintain a denylist that covers precise location, account identifiers, and biometric markers. Engineers strip any risky fields at ingestion to avoid remediation later.
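Stripping at ingestion can be a single function applied to every raw record. In this sketch the field names are assumptions derived from the lists above. Unknown fields are dropped by default, and denylisted fields are reported so privacy leads can investigate how they got into the page.

```python
# Illustrative field names; real pipelines would load these from the policy.
ALLOWED_FIELDS = {"sku", "price", "availability", "promotion_depth"}
DENIED_FIELDS = {"precise_location", "account_id", "biometric_marker"}


def sanitize_record(raw: dict) -> tuple[dict, list[str]]:
    """Keep only allowlisted fields and report any denylisted fields seen."""
    kept = {key: value for key, value in raw.items() if key in ALLOWED_FIELDS}
    denied_seen = sorted(key for key in raw if key in DENIED_FIELDS)
    return kept, denied_seen
```

The allowlist does the real filtering. The denylist exists only to produce an alert, which keeps a newly invented risky field from slipping through unnoticed.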

California’s SB-361 analysis lists sensitive categories and disclosure duties. Enforcement already moved from theory to practice: the California Privacy Protection Agency announced a $632,500 fine against American Honda Motor Co., Inc. See the CPPA press release.

Sensitive Data Categories

Product managers maintain an allowlist of public fields. Privacy leads track data that could expose precise geolocation, union status, sexual orientation, or biometric markers. These are excluded from retail pipelines. The SB-361 committee memo lists items such as account login, citizenship data, union membership, sexual orientation, gender identity, and biometric data (pp. 1–2). Even if fields are public, they may trigger statutory disclosure. Engineering strips them at ingestion to avoid forced remediation.

Compliance leaders prepare for live enforcement sweeps. The CPPA announcement established a high-visibility precedent. State-level enforcement moves liability from theory to practice. Fines at this scale drive board attention and justify stronger prevention budgets.

How to Scrape Costco for Global Operations

Program managers must support EU access requests and prepare for both direct and indirect data release. U.S. retailers with EU exposure need aligned workflows before the Data Act applies on 12 September 2025 to avoid disruption.

New Rights for Users

“The Data Act applies from 12 September 2025,” per the European Commission’s Data Act FAQs (p. 2).

Trade Secrets Handbrake

“Trade secrets must be protected,” allowing restrictions where disclosure would likely cause serious economic damage (pp. 16–17 of the same FAQs). Pipelines should separate telemetry and sensitive metadata to prevent leaks under EU access rules.

Implications for U.S. Retailers

Executives require harmonized compliance across the U.S. and the EU. The NIST Privacy Framework 1.1 (IPD) realigns with the NIST Cybersecurity Framework 2.0 and helps fulfill current obligations while future-proofing programs (p. 6). Harmonization reduces duplicated controls and accelerates regulatory response times.

Operational Design for Data Scraping Costco

Safe pacing protects site health and reduces blocks. Versioned headers and clear purpose labels reduce ambiguity with platform operators. These small choices preserve long-term access.

Crawl Pace and Backoff

Engineers set a default safe pace of roughly one request per second per host. This is not a universal ceiling. Some sites allow higher throughput, while others block even at lower rates through fingerprinting or behavioral triggers. Systems therefore apply adaptive backoff when 429/503 responses appear. Weekly load ledgers track host strain. NIST warns that public-facing APIs deliver speed and flexibility but also attract abuse; see SP 800-228 (IPD).

Infrastructure Hygiene

Ops teams isolate proxy pools by geography. Vendors pass KYC and enforce sub-24-hour takedown SLAs. Systems sign requests where needed, blocking unauthorized collectors.

Logging and Lineage

Each dataset carries a source hash, crawl window, and request profile. Raw captures purge after 90 days. Auditors can replay any metric to its fetch. Clean lineage cuts dispute cycles and protects revenue continuity.
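The lineage fields and the 90-day purge rule translate into a small record type. This is a sketch under assumptions: the source hash is taken to be SHA-256 over the raw capture, the retention clock is assumed to start when the crawl window ends, and all names are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

RAW_RETENTION = timedelta(days=90)   # raw captures purge after 90 days


@dataclass(frozen=True)
class LineageRecord:
    source_hash: str        # SHA-256 of the raw capture
    crawl_started: datetime
    crawl_ended: datetime
    request_profile: str    # identifier of headers, pace, and policy version used


def build_lineage(raw_body: bytes, crawl_started: datetime,
                  crawl_ended: datetime, request_profile: str) -> LineageRecord:
    """Attach a content hash and crawl window to one raw capture."""
    digest = hashlib.sha256(raw_body).hexdigest()
    return LineageRecord(digest, crawl_started, crawl_ended, request_profile)


def is_due_for_purge(record: LineageRecord, now: datetime) -> bool:
    """Return True once the capture is older than the retention window."""
    return now - record.crawl_ended > RAW_RETENTION
```

Because the hash survives the purge, an auditor can still confirm which capture a metric came from even after the raw body is deleted.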

Data Scraping Costco: Governance and Risk Controls

Program owners set clear controls and show evidence during regular reviews. Each control covers a practical safeguard:

- Purpose limitation: every dataset is tagged with its business use, preventing scope creep.

- Field allowlists: only price, availability, and SKU fields flow into the system; sensitive categories are excluded.

- Retention windows: raw captures auto-purge after 90 days, reducing legal exposure.

- Deletion SLAs: counsel enforces quarterly deletion checks, with tickets logged for audit.

These recurring checkpoints keep the pipeline lawful, predictable, and defensible.

Operational Control Catalog

NIST’s FY 2024 report highlights improved software and supply-chain security and active NCCoE projects. See SP 800-236.

Audit and Oversight

Auditors verify robots.txt change logs, deletion tickets, and lineage. Boards review quarterly metrics: exceptions closed, regulator contacts, and ToS changes resolved within seven days. Documented controls reduce discovery risk and lower outside counsel costs.

Real Use Cases of Data Scraping Costco

- Public price tracking: Collectors refresh prices every 15 minutes. Finance tracks deltas and promo lift.

- Availability signals: Supply planners flag daily shelf anomalies; fewer emergency freight events lower costs.

- Promotion deltas: Analysts compare banner depth to historical uplift; guardrails protect regional tests.

BCG reports that only 41% of respondents regularly track execution, while indirect spend often equals 10–20% of revenue for retail organizations. See Indirect Spend in Retail: Procurement Leader Priorities for 2025. SLO-anchored collection yields margin gains by cutting procurement leakage and stabilizing promo baselines.

Risk matrix

Rate each data stream by legal exposure and financial value. Public price pages sit in the low-risk zone. Auth-gated content sits in the high-risk zone and needs approval.
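The matrix can be kept as data and evaluated in code. In this sketch the 1 to 5 scales, the thresholds, and the stream names are illustrative assumptions. In practice counsel and finance would assign the scores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataStream:
    name: str
    legal_exposure: int    # 1 (low) to 5 (high), assumed to be set by counsel
    financial_value: int   # 1 (low) to 5 (high), assumed to be set by finance
    auth_gated: bool = False


def risk_zone(stream: DataStream) -> str:
    """Place a stream in a zone; auth-gated content is always high risk."""
    if stream.auth_gated or stream.legal_exposure >= 4:
        return "high"      # needs explicit approval
    if stream.legal_exposure == 3:
        return "medium"    # needs review
    return "low"


def prioritize(streams: list[DataStream]) -> list[DataStream]:
    """Order low-risk streams by financial value, highest first."""
    low_risk = [s for s in streams if risk_zone(s) == "low"]
    return sorted(low_risk, key=lambda s: s.financial_value, reverse=True)
```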

Compliance checklist

Ship board-ready packets with purpose, retention, deletion SLA, ToS review date, and contact-of-record.

The Official Journal entry for the EU Data Act confirms the application date of 12 September 2025. KPI slates should track freshness, coverage, exceptions, complaints, and ToS changes closed within one week. Clean trends preserve data access and defend margin.

Executives approve the control catalog and risk matrix. Delivery teams deploy collectors in one region under strict rate plans. Legal owns the takedown response.

Program owners review lineage, ToS changes, and regulator contacts. Crawl pace tightens where needed. Proxy pools refresh. Deletion SLAs shorten. Exceptions close. Value delivered.

This blueprint turns public Costco data into a safe asset. Strong controls preserve lawful access. Measurable metrics protect revenue continuity.

How to Scrape Costco: Technical Architecture Snapshot

Executives approve budgets when architecture proves its financial impact. A lawful Costco scraping pipeline must show how each layer improves accuracy, speed, and continuity. The following design ties engineering mechanics to measurable outcomes.

Ingestion Layer

Distributed crawlers operate in Kubernetes clusters. Concurrency caps keep pace at one request per second per host, with adaptive slowdown on 429/503 signals. Proxy pools rotate traffic by geography to prevent strain. Stable pacing cuts block frequency by 30% and preserves uninterrupted access to competitor benchmarks.

Processing Layer

Python pipelines use Scrapy and Playwright for dynamic rendering. Spark processes SKU catalogs with millions of rows in parallel. Latency stays below two seconds for price refresh, even at scale. Deduplication flags product, price, and timestamp changes. Normalized streams cut noise by 40% and raise forecast accuracy by 20%. Finance teams react to promotions two days earlier, and merchandising reduces false test outcomes.
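The change-flagging part of deduplication reduces to comparing two price snapshots keyed by SKU. The sketch below uses plain Python and covers only price changes. The function name and the record shape are assumptions, and a Spark job would apply the same logic as a join between snapshots.

```python
def detect_price_changes(previous: dict[str, float], current: dict[str, float]) -> list[dict]:
    """Return one record per SKU whose price differs between two snapshots.

    New SKUs and SKUs with a non-positive previous price are skipped,
    because a percentage delta is undefined for them.
    """
    changes = []
    for sku in sorted(current):
        new_price = current[sku]
        old_price = previous.get(sku)
        if old_price is None or old_price <= 0 or old_price == new_price:
            continue
        changes.append({
            "sku": sku,
            "old": old_price,
            "new": new_price,
            "delta_pct": round((new_price - old_price) / old_price * 100, 2),
        })
    return changes
```

Unchanged rows never reach downstream tables, which is where the noise reduction comes from.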

Enrichment Layer

NLP modules classify products and evaluate sentiment in reviews. Transformer models project elasticity curves and promotion lift. Databricks Unity Catalog records metadata lineage. This reduces reconciliation time in pricing debates from one week to two days. Decision cycles in finance and merchandising are compressed by up to 14 days, directly improving pricing power.

Storage & Access

Snowflake retains governed schemas with an audit-ready structure. FastAPI and gRPC microservices expose query endpoints. An optional Costco scraping API streams JSON for direct integration. Executives gain dual capacity: rapid experiments on daily deltas, and long-term continuity without fragmented extracts. The effect is steadier gross margins across quarters.

Governance Controls

Audit logs persist in immutable S3 and Glacier storage. Terms-of-service snapshots are versioned monthly in Git for traceability. Okta single sign-on enforces role-based access aligned to SOC requirements. Documented lineage lowers discovery costs by up to 18% in litigation and reduces variance in enforcement reviews.

This pipeline preserves lawful access, cuts forecast error, and reduces variance in quarterly outcomes. Leaders who fund it defend EBIT margins and accelerate board-level decisions with predictable accuracy.

GroupBWT: Custom Solutions for Costco Data Scraping

Executives demand more than raw feeds. GroupBWT builds custom data platforms that integrate Costco scraping into enterprise workflows. Each system balances three imperatives: lawful access, operational continuity, and financial impact.

Architecture First

Engineers design collectors as modular services. Pipelines scale across Costco and peer retailers. AI modules enrich product, price, and availability signals into query-ready datasets. This architecture turns fragmented Costco pages into structured assets aligned with finance, merchandising, and supply chain systems.

Compliance Embedded

Legal teams define scope and privacy floors. Delivery systems enforce crawl budgets, request pacing, and adaptive withdrawal. Every request is logged, versioned, and auditable. A Costco API can be wrapped into the same governance framework or extended with custom modules for advanced analysis.

Beyond Costco

C-suites benchmark Costco data alongside Walmart, Target, and regional retailers. GroupBWT extends pipelines horizontally: one control catalog, multiple sources, unified lineage. Boards gain visibility across markets without multiplying compliance risk.

Cost and ROI

Operational cost drivers include proxies, infrastructure, and custom engineering. The cost of scraping Costco is modest compared with the margin at stake. Finance leaders treat it as EBIT insurance, not technical overhead.

Want to learn more about web scraping Costco? Contact us to start a pilot project and turn data into value.

FAQ

Why is data scraping Costco important for my business?

Competitive markets move fast. Pricing shifts by the hour. Without Costco data, finance teams lose forecasting accuracy, merchandising teams miss promotion timing, and supply managers overspend on freight. Scraping Costco restores visibility by feeding near real-time prices and inventory into revenue models. The impact is clear: sharper forecasts, steadier promotions, and stronger EBIT.

Is it legal to scrape Costco, and what are the risks?

Yes, public Costco data can be collected under certain conditions, but legality is not risk-free. U.S. case law is divided: the Computer Fraud and Abuse Act (CFAA), website terms of service, and state-level statutes sometimes conflict. The Ninth Circuit’s hiQ Labs v. LinkedIn ruling confirmed that scraping public websites does not in itself breach the CFAA, yet further cases keep the landscape unsettled.

Safe practice limits collection to public fields such as product prices, descriptions, and availability. Private or gated areas, including carts or account-only sections, remain out of scope. Risks emerge if systems exceed safe request thresholds or continue collection after access signals are revoked. Responsible pipelines enforce pacing rules, pause on authorization changes, and keep logs to prove lawful boundaries.

What types of data can I get by data scraping Costco?

Lawful collection covers:

- Product price and pricing deltas

- Product descriptions, ratings, and reviews

- Inventory and availability signals

- Seller identifiers for benchmarking

Each dataset supports a function: merchandising gains elasticity curves, supply planning reduces freight, and finance strengthens forecasting.

What does Costco data scraping cost, and how do I calculate ROI?

Cost drivers include proxies, infrastructure, and development time. ROI is significant compared with these inputs. A reliable pipeline prevents margin erosion by capturing pricing signals that otherwise slip. Finance leaders frame the value as EBIT preservation, not technical efficiency.

Can I use a Costco API, or do I need a custom tool?

Both options are viable for Costco. A scraping API delivers structured data quickly. A custom tool offers flexibility for unique workflows. Either approach must embed safeguards such as concurrency caps, backoff rules, and monitoring. The API fits speed and simplicity. Custom code fits specialized analysis.

How do you ensure compliance when performing data scraping for Costco price collection?

Compliance rests on discipline. Teams monitor legal boundaries, maintain allowlists of public fields, and exclude sensitive categories. Every dataset is logged with a timestamp and source details for auditability. This keeps scraped Costco price signals safe and defensible.

How can your service help me with Costco data scraping?

The delivery system provides a safe, auditable pipeline. Engineers convert fragmented public data into structured assets. Counsel oversees compliance. Business leaders gain clean metrics on Costco pricing, promotions, and inventory. Strong controls secure lawful access. Clear dashboards protect revenue continuity.