The Client Story

A top-tier food delivery company operating in the UK and EU relies heavily on external marketplace data to power real-time pricing, restaurant visibility, and operational planning. Their primary data vendor performs daily scraping across 1,200 geo-zones.

Despite the daily cadence, the client noticed data-integrity gaps such as outdated listings, missing restaurants, and incorrect delivery windows. These blind spots created business risks, including revenue leakage, incorrect offers, and degraded customer experiences.

To reduce dependency on a single data source, the client sought a parallel validation system — a secondary scraper to run twice per month, benchmarking key fields across all locations under tight SLAs.

| Industry: | Food Delivery |
|---|---|
| Cooperation: | since 2024 |
| Location: | UK |

“This wasn’t about replacing our vendor. We needed a second view — something to catch what the main pipeline might miss.” — Senior Data Analyst, Global Delivery Platform

“We needed timing precision, not promises. Two-hour sessions. Location-specific. Nothing less.” — Data Operations Lead, Global Delivery Platform

Building a QA-First Backup Pipeline

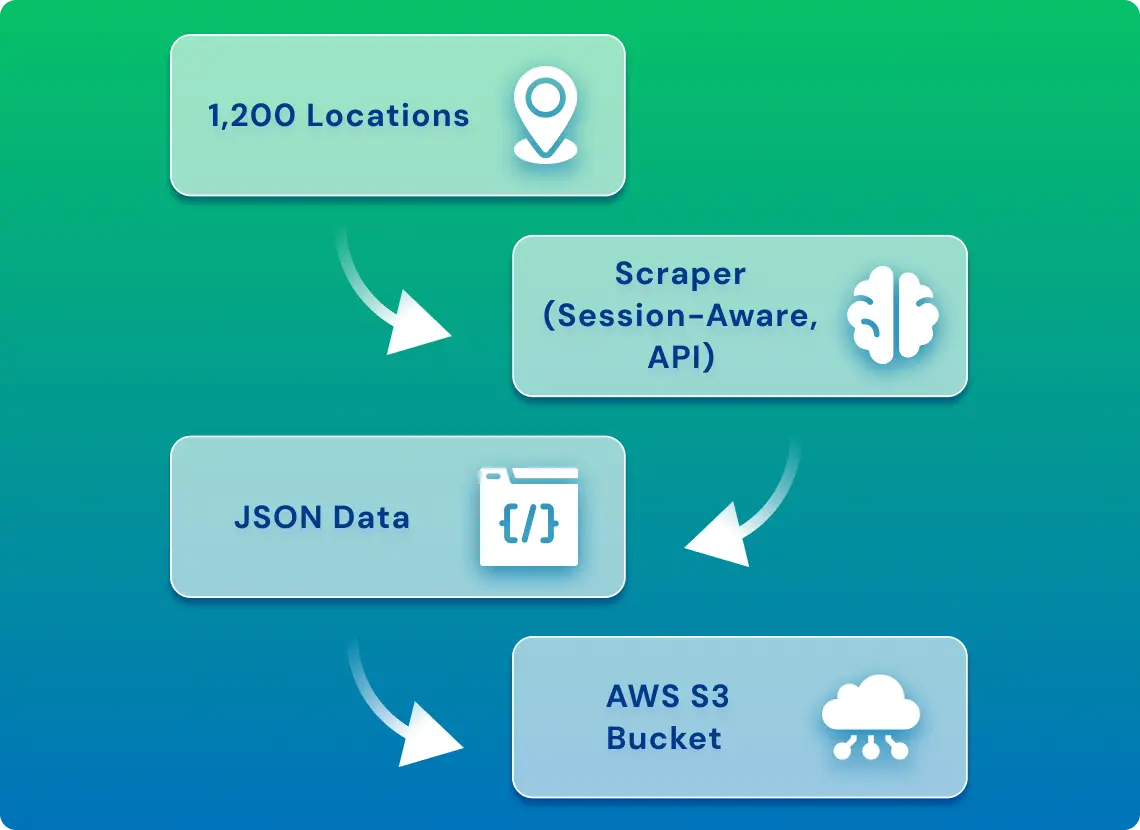

The project scope required a resilient, scalable pipeline that could:

- Scrape data for 1,200 specific locations

- Execute during peak delivery hours (weekday lunch and evening windows)

- Complete each session in under 2 hours

- Export JSON files to a designated cloud bucket (AWS S3)
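
To put the two-hour limit in perspective, the arithmetic below is a rough sketch of the time budget. The 1,200 locations and the 2-hour limit come from the scope above; the per-location request count and the average request latency passed to `min_concurrency` are illustrative assumptions, not measured values.

```python
import math

LOCATIONS = 1_200             # from the project scope
SESSION_BUDGET_S = 2 * 3600   # 2-hour limit from the project scope


def seconds_per_location(locations: int = LOCATIONS,
                         budget_s: int = SESSION_BUDGET_S) -> float:
    """Average time available per location if locations ran one at a time."""
    return budget_s / locations


def min_concurrency(requests_per_location: int,
                    avg_request_s: float,
                    locations: int = LOCATIONS,
                    budget_s: int = SESSION_BUDGET_S) -> int:
    """Smallest number of parallel workers that fits the budget.

    Ignores retries, proxy failures, and startup overhead, so a real
    deployment would need headroom on top of this figure.
    """
    total_work_s = locations * requests_per_location * avg_request_s
    return math.ceil(total_work_s / budget_s)


# Sequential budget: 7,200 s / 1,200 locations = 6 s per location.
print(seconds_per_location())            # 6.0
# Hypothetical load: 150 requests per location at 1 s each.
print(min_concurrency(150, 1.0))         # 25
```

Under these assumed numbers a sequential crawl would have only 6 seconds per location, which is why the scope calls for a pipeline that runs many locations in parallel.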

Each record included:

- Restaurant name and ID

- Listing URL

- Delivery fees

- Promo flags

- Estimated delivery time (min/max/avg/full string)

- Rating

- Operational status (e.g., closed, limited service)

- Zone code and geo-coordinates

- Timestamp of scrape
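
As an illustration of the record layout, the sketch below models one listing and derives the min/max/avg delivery estimate from the displayed string. All field names, types, and the accepted string formats (for example `20-35 min`) are assumptions made for this example; the source lists only which fields were captured.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class DeliveryEstimate:
    min_minutes: int
    max_minutes: int
    avg_minutes: float
    full_string: str  # original text, kept so the parse can be audited


@dataclass
class ListingRecord:
    # Hypothetical names and types; only the field list comes from the source.
    restaurant_id: str
    restaurant_name: str
    listing_url: str
    delivery_fee: Optional[float]
    promo_flags: list
    delivery_estimate: Optional[DeliveryEstimate]
    rating: Optional[float]
    operational_status: str
    zone_code: str
    latitude: float
    longitude: float
    scraped_at: str  # ISO 8601 timestamp of the scrape


# Matches "20-35", "20 – 35" (en dash), or "20 to 35".
_RANGE_RE = re.compile(r"(\d+)\s*(?:-|\u2013|to)\s*(\d+)")
_SINGLE_RE = re.compile(r"(\d+)")


def parse_delivery_estimate(text: str) -> Optional[DeliveryEstimate]:
    """Parse a delivery-time string; return None if it contains no number."""
    match = _RANGE_RE.search(text)
    if match:
        low, high = sorted((int(match.group(1)), int(match.group(2))))
    else:
        match = _SINGLE_RE.search(text)
        if match is None:
            return None
        low = high = int(match.group(1))
    return DeliveryEstimate(low, high, (low + high) / 2, text)
```

Keeping the full string next to the parsed values means a reviewer can always check the numbers against what the marketplace actually displayed.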

Fast, Duplicate-Free Marketplace Scraper

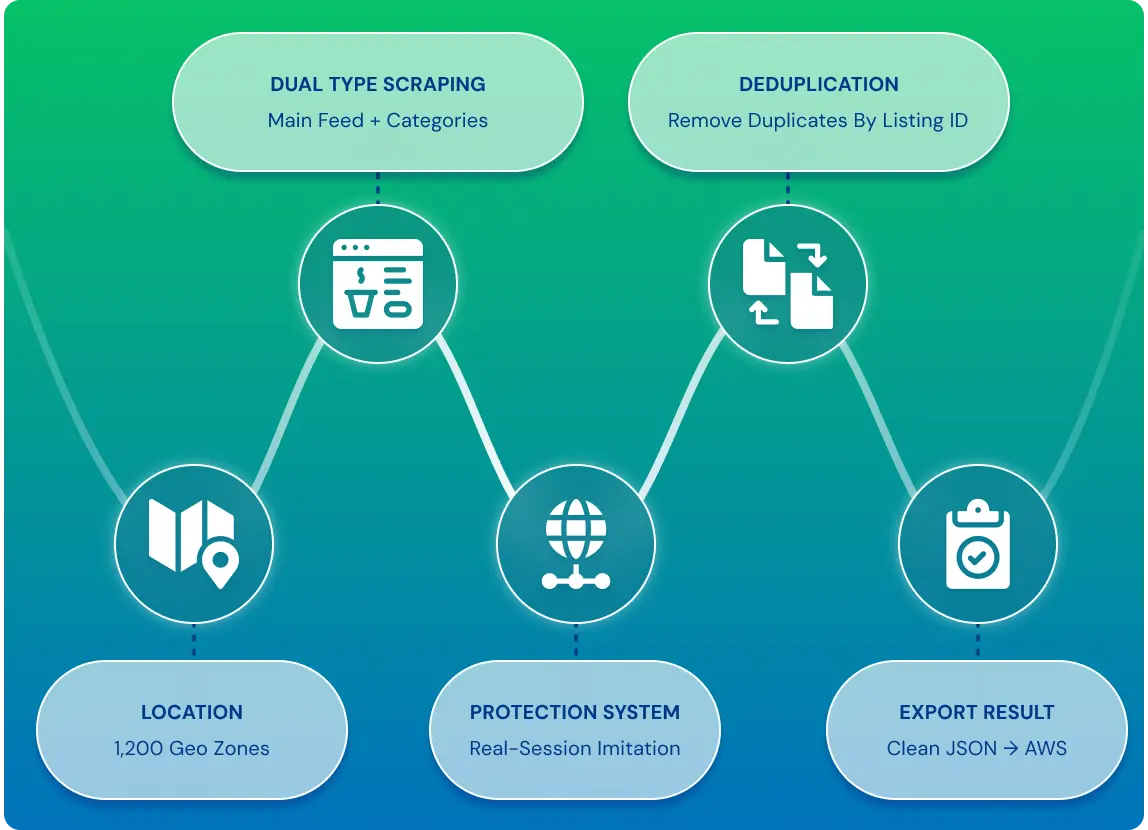

The pipeline targeted two page types per location:

- The main feed for discovery results

- Category pages (e.g., local cuisines, seasonal offers), depending on the region

To ensure scalability and data accuracy:

- Session-specific cookies and headers locked each session's content to its target geo-zone

- Calling direct API endpoints avoided the complexity of parsing the DOM

- Pagination support covered roughly 150 categories per location

- Requests were routed through rotating proxies, with cached tokens, to avoid rate limits

- A Scrapy + AWS Lambda stack enabled concurrent execution at scale

- Records deduplicated by listing ID, removing duplicate restaurants across feeds and delivering clean, normalized outputs
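
The deduplication step can be sketched as follows. This is a minimal illustration, not the production code: the `listing_id` and `source` keys are hypothetical names, and keeping the first record seen per ID is an assumed tie-breaking rule.

```python
from typing import Dict, Iterable, List


def dedupe_by_listing_id(records: Iterable[dict]) -> List[dict]:
    """Keep the first record per listing ID and note every feed it appeared in."""
    merged: Dict[str, dict] = {}
    for rec in records:
        key = rec["listing_id"]
        if key not in merged:
            kept = dict(rec)                    # copy so the input is not mutated
            kept["sources"] = [kept.pop("source")]
            merged[key] = kept
        elif rec["source"] not in merged[key]["sources"]:
            merged[key]["sources"].append(rec["source"])
    return list(merged.values())


raw = [
    {"listing_id": "r1", "name": "Pasta Place", "source": "main"},
    {"listing_id": "r1", "name": "Pasta Place", "source": "category"},
    {"listing_id": "r2", "name": "Curry Corner", "source": "main"},
]
clean = dedupe_by_listing_id(raw)
print(len(clean))            # 2
print(clean[0]["sources"])   # ['main', 'category']
```

Recording the feeds in a `sources` list keeps the origin of each entry traceable even after duplicates are collapsed.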

System simulation tests confirmed feasibility across central test zones in London and Paris, validating rate limits, payload size, and proxy health.

We built the system to behave like a human user (geo-specific and session-aware) but to scale like a machine.

Verified Data With Fast Turnaround

To demonstrate viability, a dry run was conducted on two sample locations. The test:

- Captured listings from both main and category feeds

- Logged progress in real time

- Exported records in structured JSON

- Included a source field to trace the origin of each entry

(Deduplication was excluded from the demo but implemented in production.)

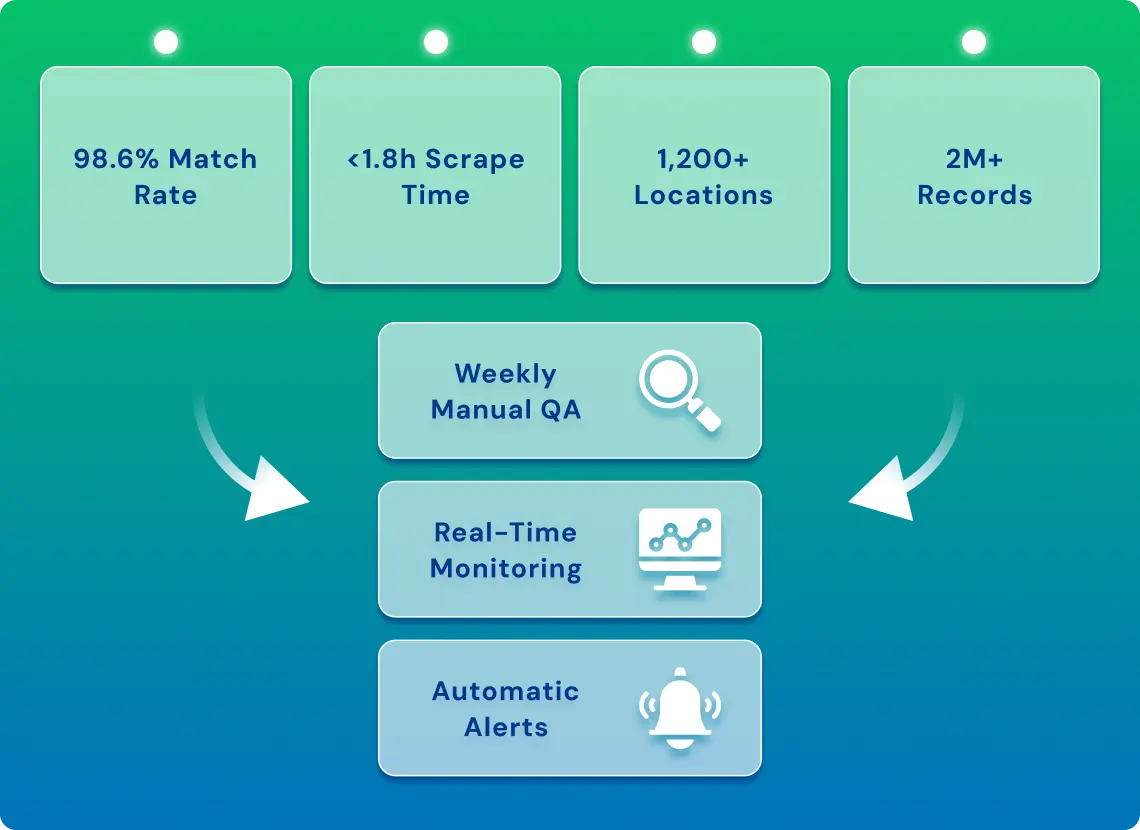

Compliance, QA & Monitoring:

- Session-level metadata tagging included timestamps, IP, request headers, endpoint schema, and jurisdiction flags — ensuring full auditability.

- Manual QA validation was conducted weekly on randomized samples to detect DOM drift or anomalies.

- Live monitoring tracked extraction success rate, field completeness, HTTP response codes, and schema conformity.

- Any anomalies triggered Grafana + Prometheus alerts, with Slack notifications for immediate triage.
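
One of the monitored metrics, field completeness, could be computed along these lines. The field names and the 95% alert threshold are assumptions for illustration; the source does not state which fields or thresholds were used, and the alerting hookup to Grafana, Prometheus, and Slack is out of scope here.

```python
from typing import Dict, Iterable, List

# Hypothetical field names chosen for the example.
REQUIRED_FIELDS = ("restaurant_id", "listing_url", "delivery_fee", "rating")


def field_completeness(records: List[dict],
                       fields: Iterable[str] = REQUIRED_FIELDS) -> Dict[str, float]:
    """Share of records carrying a non-empty value for each field."""
    fields = tuple(fields)
    total = len(records)
    if total == 0:
        return {name: 0.0 for name in fields}
    return {
        # None and "" count as missing; a value of 0 (e.g. free delivery) does not.
        name: sum(1 for rec in records if rec.get(name) not in (None, "")) / total
        for name in fields
    }


def fields_below_threshold(completeness: Dict[str, float],
                           threshold: float = 0.95) -> List[str]:
    """Fields whose completeness falls under the (assumed) alert threshold."""
    return sorted(name for name, share in completeness.items() if share < threshold)


sample = [
    {"restaurant_id": "r1", "listing_url": "https://example.com/r1",
     "delivery_fee": 0, "rating": 4.5},
    {"restaurant_id": "r2", "listing_url": "",
     "delivery_fee": 1.5, "rating": None},
]
report = field_completeness(sample)
print(report["listing_url"])             # 0.5
print(fields_below_threshold(report))    # ['listing_url', 'rating']
```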

In full-scale pilot sessions:

- 98.6% match rate against the existing vendor’s dataset

- <1.8 hours total scrape time across all zones

- 2M validated records delivered per session

- Each record is tagged with session metadata for auditing
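
The source does not say how the match rate was calculated. One plausible definition, sketched below under that caveat, is the share of vendor listings whose compared fields all agree with the backup feed. The dictionary layout (listing ID mapped to a record) and the field names are assumptions for this example.

```python
from typing import Dict, Iterable, List, Tuple


def match_rate(backup: Dict[str, dict],
               vendor: Dict[str, dict],
               fields: Iterable[str]) -> Tuple[float, List[str]]:
    """Return (rate, mismatched_ids) for vendor listings checked against backup.

    A vendor listing counts as mismatched when it is missing from the
    backup feed or when any compared field differs.
    """
    fields = tuple(fields)
    if not vendor:
        return 0.0, []
    mismatched = []
    for listing_id, vendor_rec in vendor.items():
        backup_rec = backup.get(listing_id)
        if backup_rec is None or any(
            backup_rec.get(name) != vendor_rec.get(name) for name in fields
        ):
            mismatched.append(listing_id)
    return 1 - len(mismatched) / len(vendor), sorted(mismatched)


vendor_feed = {
    "r1": {"delivery_fee": 1.5, "status": "open"},
    "r2": {"delivery_fee": 2.0, "status": "open"},
    "r3": {"delivery_fee": 0.0, "status": "closed"},
    "r4": {"delivery_fee": 3.0, "status": "open"},
}
backup_feed = {
    "r1": {"delivery_fee": 1.5, "status": "open"},
    "r2": {"delivery_fee": 2.5, "status": "open"},   # fee differs
    "r3": {"delivery_fee": 0.0, "status": "closed"},
    "r4": {"delivery_fee": 3.0, "status": "open"},
}
rate, diffs = match_rate(backup_feed, vendor_feed, ["delivery_fee", "status"])
print(rate)    # 0.75
print(diffs)   # ['r2']
```

Returning the mismatched IDs alongside the rate gives a QA team a concrete list to review rather than a single percentage.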

The client’s QA team now uses the backup feed for benchmarking, anomaly detection, and internal vendor performance reviews.

Need Assurance From Your Marketplace Feeds?

We build vendor-agnostic scraping pipelines that withstand scale, resist failure, and deliver traceable, audit-ready data on time, every time. Let’s talk about making your marketplace data resilient and compliance-ready.
