Executives operate in compressed cycles where information delays convert directly into margin loss. Traditional research models lag behind volatile conditions: tariffs, consumer pullbacks, and sudden regulatory shifts. Web scraping pipelines close that gap. They convert live signals from competitor prices, product listings, and consumer reviews into operational datasets that executives can act on before competitors do.

To listen to the full version of the GroupBWT podcast, head over to our YouTube channel or find us on Spotify.

Executives Face an Unstable Environment

Kate: “It’s like having eyes everywhere: your competitors, your customers, the whole market. It’s all out there just waiting to be understood.”

The host adds: “It’s like having this constant stream of intel, right?”

The OECD Economic Outlook 2025 warns: “The global outlook is becoming increasingly challenging. Substantial increases in trade barriers, tighter financial conditions, weakened business and consumer confidence, and elevated policy uncertainty all pose significant risks to growth.” For executives, this means narrower margins of error. Firms that wait for quarterly data absorb shocks too late, while rivals have already adjusted pricing and capital flows.

The Digital Economy as a Competitive Arena

The World Bank Digital Economy Brief (2025) highlights: “A thriving digital economy fuels job creation, fosters innovation, and accelerates sustainable development… efforts focus on initiatives such as smart industry, smart agriculture, and smart water management, as well as fostering green growth industries.”

These drivers expand both opportunity and risk. For businesses that use web scraping, the digital economy is not a backdrop but a live arena where information asymmetry decides outcomes: who prices correctly, who allocates capital efficiently, and who misreads demand.

From Reactive to Anticipatory Strategy

The host warns: “What good is a mountain of data if you’re just drowning in it?” Kate agrees: “Exactly. And one of the best examples of this is the work GroupBWT delivered for a global hospitality operator.”

The hospitality news intelligence pipeline scraped local news outlets in 14 countries every six hours, tagging sentiment shifts and policy signals. It reduced PR response time by 42% and flagged regulatory changes two days ahead of official notices. That anticipatory edge turned external volatility into measurable continuity.

Traditional surveys and analyst reports arrive after the market shifts. Web scraping for business growth delivers the reverse: retail price changes, consumer review clusters, and regulatory notices gathered in real time. That intelligence supports anticipatory moves: adjusting promotions before sentiment collapses, hedging supply risk before tariffs take effect, or correcting compliance gaps before fines arrive.

Infrastructure, Not Discretionary Tooling

Boards now reframe external data acquisition as core infrastructure. The OECD calls the current climate “uncertain and vulnerable to shocks.” Web scraping pipelines counter this by embedding resilience: shortening signal latency, preserving pricing accuracy, and stabilizing forecasts.

Executives who act gain continuity in volatile conditions. Those who hesitate compound their exposure, losing speed, accuracy, and margin.

How to Improve Your Business Using Web Scraping

Firms no longer compete only on product features. They compete on how fast they read the market and adapt. According to Cropink’s 50+ Digital Transformation Statistics 2025, 81% of leaders now view digital transformation as critical, yet only 16% of organizations sustain performance improvements after making the shift. That gap between ambition and outcome is where competitive intelligence pipelines decide winners.

Host: “OK. We’ve been throwing around the term web scraping a few times now, so just to be sure we’re all on the same page, what are we actually talking about here?”

Kate: “So, in a nutshell, web scraping is like having an army of digital assistants, right? They comb through the web, pull out the exact data you need, and then organize it in a way that you can actually use.”

This “army” shifts advantage from size to speed. Smaller firms with fewer than 100 employees are 2.7 times more likely to succeed at digital transformation than firms with over 50,000 employees. Why? They operate on fresher signals and adapt before competitors recalibrate. Web scraping pipelines replicate that agility for larger enterprises by automating the discovery of competitor pricing, customer complaints, or regulatory changes at scale.

Turning Raw Competitor Data into Action

Host: “OK. That sounds pretty heavy. But give us an example. What kind of data are we talking about here?”

Kate: “Oh, it could be anything. Pricing information on your competitors’ products, customer reviews scattered across a dozen different websites, social media trends, even mentions of your brand in online forums.”

Competitor moves often surface first in marginal channels—an isolated retailer’s promotions, a burst of forum complaints, or a small spike in regional sentiment. Firms relying on surveys or quarterly analyst briefings miss these shifts. That lag compounds into lost pricing power or delayed product launches. With scraping pipelines, leaders cut detection latency from months to hours, protecting revenue against rivals that adjust faster.

Avoiding the Transformation Failure Trap

Host: “Right… Because what good is a mountain of data if you’re just drowning in it, you need a way to sift through it and find the gold nuggets.”

Kate: “Exactly. And one of the best examples of this is the work GroupBWT did with this large manufacturer.”

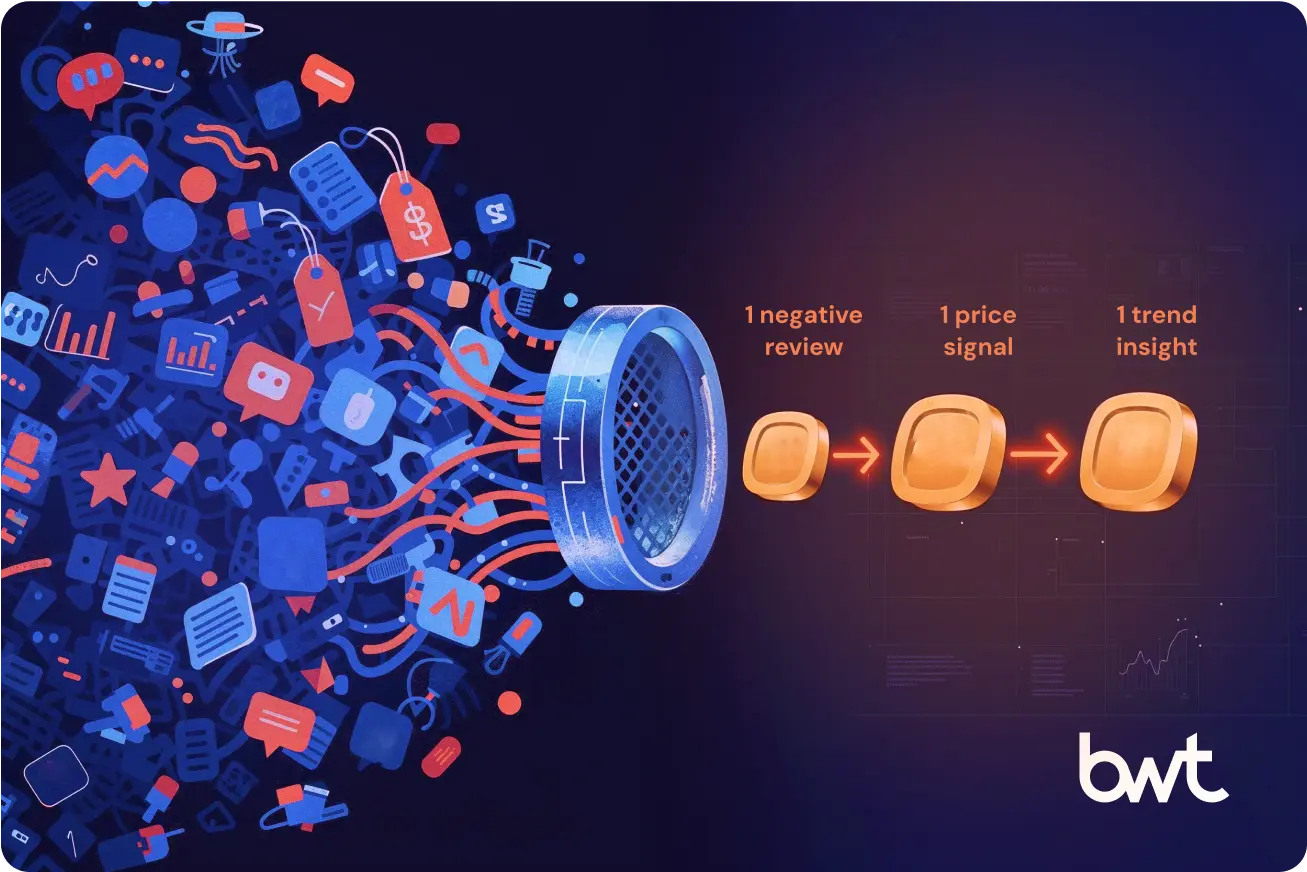

Cropink’s dataset warns that 65% of companies fail to meet digital transformation goals, often due to poor collaboration and skills gaps. Firms that drown in raw feeds repeat the same pattern: expensive systems, no actionable insight. GroupBWT’s manufacturer case shows the alternative. By isolating negative reviews concentrated on a single retailer, the team pinpointed and corrected the issue that was dragging down search visibility and costing sales. The gain was not abstract: it directly protected the channel margin.
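The detection step in the manufacturer case can be sketched as a concentration check: compare each retailer’s share of negative reviews with the share across all retailers. This is a minimal illustration, not GroupBWT’s method; the input shape (retailer, star rating), the two-star cutoff for “negative”, the 2x ratio, and the 20-review minimum are all assumptions chosen for the example.

```python
from collections import defaultdict


def flag_negative_hotspots(reviews, negative_max_stars=2, ratio_threshold=2.0, min_reviews=20):
    """Return {retailer: negative_share} for retailers whose share of negative
    reviews is at least ratio_threshold times the share across all retailers.

    reviews: iterable of (retailer, stars) tuples (hypothetical input shape).
    """
    totals = defaultdict(int)
    negatives = defaultdict(int)
    for retailer, stars in reviews:
        totals[retailer] += 1
        if stars <= negative_max_stars:
            negatives[retailer] += 1

    all_reviews = sum(totals.values())
    if all_reviews == 0:
        return {}
    overall_share = sum(negatives.values()) / all_reviews

    hotspots = {}
    for retailer, count in totals.items():
        if count < min_reviews:
            continue  # too few reviews to judge this channel reliably
        share = negatives[retailer] / count
        if overall_share > 0 and share >= ratio_threshold * overall_share:
            hotspots[retailer] = round(share, 3)
    return hotspots


# Example: one retailer carries most of the 1-star reviews.
reviews = (
    [("RetailerA", 5)] * 40 + [("RetailerA", 1)] * 2
    + [("RetailerB", 1)] * 15 + [("RetailerB", 4)] * 15
    + [("RetailerC", 5)] * 30
)
print(flag_negative_hotspots(reviews))  # {'RetailerB': 0.5}
```

Comparing against the cross-retailer baseline, rather than a fixed star average, is what separates a channel-specific problem from a product-wide one.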

Data as a Competitive Lever

Digital transformation spending is set to hit $3.9 trillion by 2027, growing at 16.1% annually. Capital will not decide outcomes. Data flow and its conversion into operational moves will. Competitors that harvest and structure signals in real time accelerate promotions, mitigate compliance exposure, and close visibility gaps in weeks instead of quarters. Firms that hesitate remain exposed to margin compression and repeated cycles of transformation failure.

Enterprise Applications and ROI Frameworks

Executives need more than signals—they need operational frameworks that turn raw feeds into measurable financial outcomes. Web scraping for business growth serves this purpose: compressing latency, sharpening pricing, and guiding market entry in conditions where small timing errors compound into lasting losses. The following applications illustrate where pipelines shift from exploratory tools into enterprise-grade ROI engines.

Dynamic Pricing and Competitive Intelligence

Host: “What good is a mountain of data if you’re drowning in it?”

Kate: “Exactly. And one of the best examples is the manufacturer case.”

This exchange captures the central risk. Datasets without focus consume resources and obscure decision-making.

GroupBWT’s beauty retailer project demonstrated this precision: scraping competitor inventories exposed the stock gaps that were driving lost sales. Once assortment planning was automated, forecasts hit +85% accuracy, revenue doubled in four months, and margins lifted +47%.
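A stock-gap comparison like the one described can be reduced to set arithmetic over scraped availability. The sketch below assumes own inventory arrives as SKU-to-units counts and each competitor’s scraped listing as a set of in-stock SKUs; the function name and data shapes are hypothetical, and it covers only gap detection, not the forecasting or assortment planning that followed.

```python
def find_stock_gaps(own_stock, competitor_in_stock):
    """Compare own availability with scraped competitor availability.

    own_stock: dict of SKU -> units on hand (hypothetical shape).
    competitor_in_stock: dict of competitor -> set of SKUs shown as in stock.
    Returns (missing, exclusive):
      missing: SKUs at least one competitor sells that we cannot ship today.
      exclusive: SKUs we have in stock that no competitor currently shows.
    """
    seen_at_competitors = set().union(*competitor_in_stock.values())
    ours = {sku for sku, units in own_stock.items() if units > 0}
    missing = sorted(seen_at_competitors - ours)
    exclusive = sorted(ours - seen_at_competitors)
    return missing, exclusive


own = {"lip-01": 5, "lip-02": 0, "eye-07": 12}
competitors = {"CompA": {"lip-01", "lip-02"}, "CompB": {"lip-02", "brow-03"}}
print(find_stock_gaps(own, competitors))  # (['brow-03', 'lip-02'], ['eye-07'])
```

The `missing` list points at lost-sales risk; the `exclusive` list points at items where competitors are out and pricing power may be temporarily higher.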

The Cropink digital transformation study highlights that only 16% of organizations sustain performance improvements after transformation. Failures stem from unfocused projects that produce noise, not action. Web scraping pipelines correct this by aligning data capture directly to competitor pricing shifts, customer review clusters, and regulatory notices. The consequence is precision: price promotions adjusted before rivals respond, supply contracts hedged before tariffs bite, and compliance issues addressed before fines hit. Without these filters, intelligence collapses into data sprawl.

Identify Market Opportunities for Business Growth Using Web Scraping

Host: “One area where it’s impactful is the telecom industry.”

Kate: “They could anticipate where problems might pop up…”

The OECD’s Growth and Economic Well-Being release notes that real household income across the OECD rose only 0.1% in Q1 2025, with declines in the UK and Germany due to inflation pressure (OECD 2025). In environments where consumer spending slows, growth depends on granular targeting. Web scraping feeds provide that granularity: which districts still spend, which services draw complaints, and where coverage shortfalls create entry points. Enterprises that act on these localized gaps allocate capital more efficiently, entering only markets where household budgets sustain uptake. Those that rely on top-down averages misread demand and lose share.

Risk Mitigation and Compliance Monitoring

Host: “It’s not a free-for-all…”

Kate: “Being a good data citizen is critical.”

The point extends beyond ethics. Mismanaged data pipelines invite compliance penalties and erode trust with regulators and customers. LinkedIn’s Data Strategy in 2025: A C-Level Playbook reports that while 95% of companies pursue AI adoption, only 8% reach high data maturity. Gaps occur when governance and compliance are retrofitted rather than designed up front.

Enterprise scraping projects avoid these failures by embedding legal safeguards into the architecture: anonymizing reviews, excluding personal identifiers, logging provenance, and aligning pipelines with GDPR. These safeguards turn compliance from a drag into an insurance policy. Firms with governed feeds shorten legal reviews, launch products faster, and avoid costly retroactive audits. MGM Resorts, cited in LinkedIn’s report, lifted revenue by $2.4 million annually by aligning data strategy with business outcomes under a governed model. The lesson is clear: data citizenship is not an abstract virtue; it is operational protection.
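The safeguards named above (excluding personal identifiers, anonymizing review text, logging provenance) can be illustrated at the record level. This is a simplified sketch under stated assumptions: the field names in `PERSONAL_FIELDS` are hypothetical, and the two regular expressions mask only obvious emails and phone numbers, so they stand in for, rather than replace, a full PII review.

```python
import hashlib
import re
from datetime import datetime, timezone

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

# Hypothetical field names a scraper might capture alongside the review itself.
PERSONAL_FIELDS = {"reviewer_name", "reviewer_id", "email", "profile_url"}


def anonymize_review(raw, source_url, fetched_at=None):
    """Drop personal fields, mask contact details in free text, attach provenance."""
    record = {k: v for k, v in raw.items() if k not in PERSONAL_FIELDS}
    text = EMAIL_RE.sub("[email]", record.get("text", ""))
    text = PHONE_RE.sub("[phone]", text)
    record["text"] = text
    record["provenance"] = {
        "source_url": source_url,
        "fetched_at": fetched_at or datetime.now(timezone.utc).isoformat(),
        # Hash of the cleaned text lets auditors check the record was not altered later.
        "content_sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
    }
    return record


raw = {
    "reviewer_name": "Jane Doe",
    "email": "jane@example.com",
    "stars": 2,
    "text": "Broke in a week, call me at +1 555 123 4567 or jane@example.com",
}
rec = anonymize_review(raw, "https://example.com/p/1", fetched_at="2025-01-01T00:00:00+00:00")
print(rec["text"])  # Broke in a week, call me at [phone] or [email]
```

Stripping identifiers before storage, rather than after, is what lets the stored dataset go to legal review without a retroactive cleanup pass.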

GroupBWT’s compliance-focused project for US retail brands illustrates this. Scraping 4M Walmart products and 20M Amazon reviews, the pipeline enforced MAP (minimum advertised price) compliance in real time, shielding dozens of brands from price erosion and regulatory exposure. The outcome was an operational eControl service now used across the US market.
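At its core, MAP enforcement compares each scraped listing’s advertised price with the brand’s floor for that SKU. A minimal sketch, assuming listings are already parsed into seller, SKU, and price, and that the MAP table is a plain dictionary; `Listing`, `find_map_violations`, and the `tolerance` parameter are illustrative names, not part of the eControl service.

```python
from decimal import Decimal
from typing import NamedTuple


class Listing(NamedTuple):
    seller: str
    sku: str
    advertised_price: Decimal


def find_map_violations(listings, map_prices, tolerance=Decimal("0.00")):
    """Return (seller, sku, advertised, map, shortfall) for listings priced below MAP.

    map_prices: dict of SKU -> minimum advertised price set by the brand (hypothetical input).
    tolerance: allowed slack below MAP before a listing is flagged.
    """
    violations = []
    for item in listings:
        floor = map_prices.get(item.sku)
        if floor is None:
            continue  # SKU not covered by a MAP policy
        shortfall = floor - item.advertised_price
        if shortfall > tolerance:
            violations.append((item.seller, item.sku, item.advertised_price, floor, shortfall))
    # Largest undercuts first, so enforcement teams see the worst cases at the top.
    return sorted(violations, key=lambda v: v[4], reverse=True)


listings = [
    Listing("SellerA", "SKU-1", Decimal("24.99")),
    Listing("SellerB", "SKU-1", Decimal("29.99")),
    Listing("SellerC", "SKU-2", Decimal("9.50")),
    Listing("SellerD", "SKU-9", Decimal("1.00")),
]
map_prices = {"SKU-1": Decimal("29.99"), "SKU-2": Decimal("12.00")}
result = find_map_violations(listings, map_prices)
print([(v[0], v[1], str(v[4])) for v in result])
# [('SellerA', 'SKU-1', '5.00'), ('SellerC', 'SKU-2', '2.50')]
```

`Decimal` is used instead of `float` so that a listing priced exactly at MAP is never flagged because of binary rounding.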

Customer Intelligence and Personalization Engines

Host: “They discovered a cluster of negativity on one retailer’s site.”

Businesses that use web scraping understand that customer signals are distributed unevenly. A localized complaint set can distort brand visibility if unaddressed. GroupBWT’s pharmaceutical case shows the same pattern at scale: review and forum sentiment scraped across Amazon, Walmart, and CVS. NLP clustering boosted brand partnership opportunities by +15% in Q1 while eliminating manual research cycles. What looked anecdotal became quantified market intelligence for executives making entry decisions.

Host: “One little data point hidden in plain sight can have a huge impact.”

The Northern Light 2025 report on competitive intelligence in pharma shows how industries with high R&D stakes treat customer and competitor signals as mission-critical. Global pharma firms now rely on centralized intelligence engines to aggregate news, licensing data, and clinical commentary. These platforms cut waste, reduce duplicated research, and accelerate licensing decisions worth billions. The same mechanics apply across sectors: once review clusters, product mentions, or competitor promotions are centralized and scored, personalization becomes precise. Offers target the right cohort, channel spend concentrates on receptive segments, and customer churn risk falls.

Customer intelligence engines supported by scraping are not optional add-ons. They protect revenue by preventing mispricing, wasted campaigns, and reputational drift. Without them, enterprises repeat what pharma once faced: fragmented intelligence, duplicated spend, and delayed reaction.

Enterprise ROI from web scraping emerges in three domains: competitive speed, targeted entry, and risk insulation. Competitive speed secures pricing power and preserves margin. Targeted entry aligns investment with consumer capacity and complaint geography. Risk insulation hardens compliance, reducing regulatory exposure and accelerating approvals. Together, these outcomes establish scraping pipelines as enterprise infrastructure.

Boards that treat external data as discretionary tooling face transformation failure rates approaching 70%. Boards that fund governed scraping pipelines convert volatility into continuity, protecting growth against cycles of inflation, consumer retrenchment, and regulatory flux.

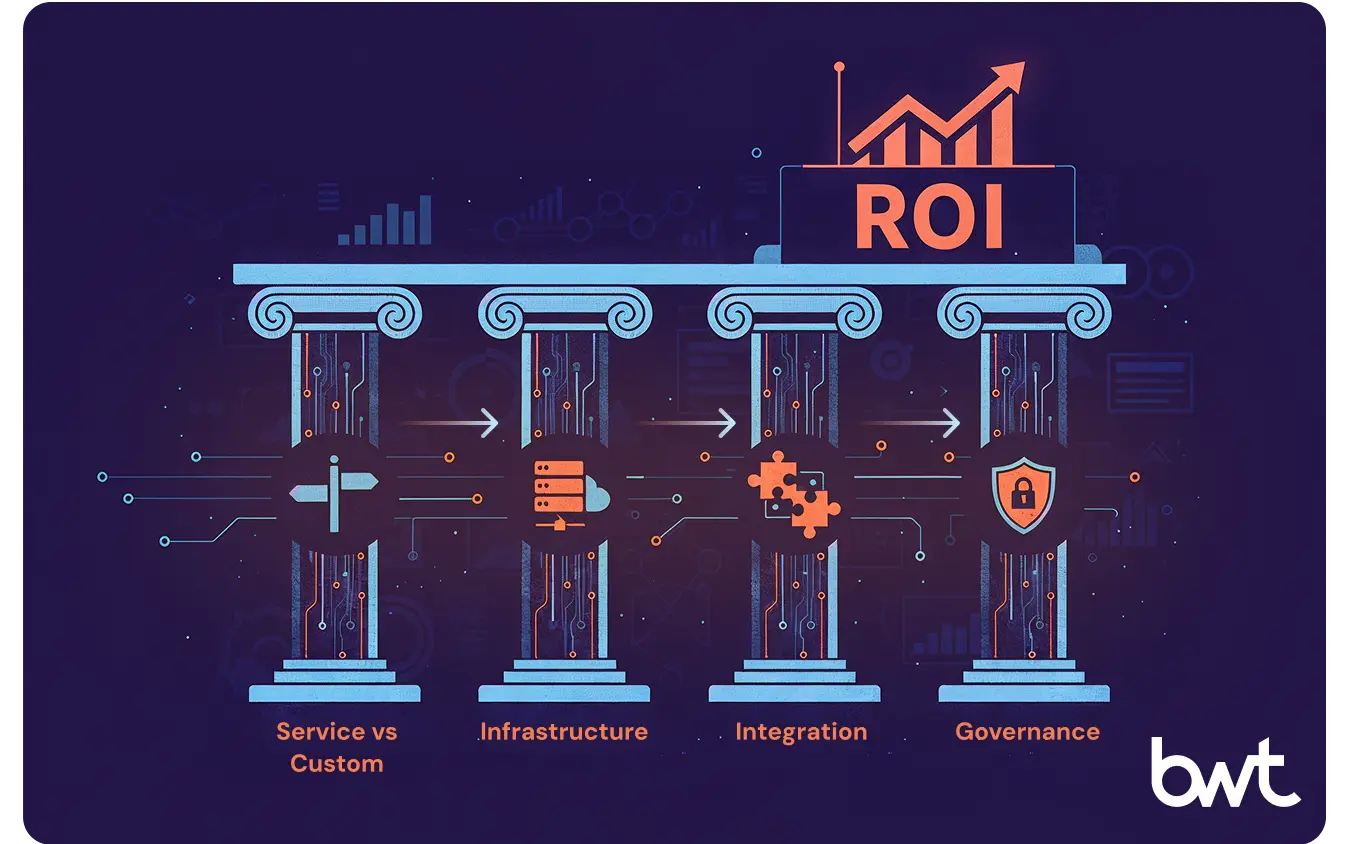

Implementation Architecture and Options

Enterprises deciding on web scraping for business growth confront structural choices that determine speed, cost, and resilience. The architecture must balance flexibility with compliance, while integrating directly into decision flows. Pipelines that fail to align with strategy become noise; those that embed governance and enterprise hooks create measurable ROI.

Service vs Custom Solutions: Web Scraping for Business Growth

Host: “Ordering takeout vs hiring a personal chef.”

Kate: “Custom solution = data powerhouse.”

Executives evaluate two clear paths. Service models resemble takeout: fast, low-commitment, and cost-effective for general needs. Providers deliver curated feeds (pricing snapshots, review summaries, or competitive benchmarks) that require minimal internal resources. These fit companies that are testing digital channels or validating early hypotheses.

Custom builds are different. They operate as data powerhouses defined by enterprise rules: frequency, granularity, integration depth, and compliance thresholds. In this model, GroupBWT’s engineers design scrapers aligned with each client’s KPIs, automating competitive tracking across thousands of domains or products. The trade-off is higher upfront investment in exchange for long-term margin protection.

Boards decide by weighing immediacy against resilience. Service pipelines answer “what is happening” at a surface level. Custom pipelines answer “why it matters,” embedding feeds into pricing, supply, and compliance engines. The wrong choice stalls projects in proof-of-concept limbo; the right one embeds data into corporate muscle.

Technical Infrastructure for Scale

Infrastructure defines whether scrapers survive or collapse under load. At small scale, a crawler can deliver results from dozens of sites. At enterprise scale, millions of requests per session require orchestration: proxy rotation, geo-targeting, session warming, and adaptive parsing to counter frequent layout changes.
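Two of the orchestration techniques above, proxy rotation with geo-targeting and adaptive parsing, can be sketched without committing to a particular HTTP library. In this illustration the transport is injected as a `fetch(url, proxy)` callable, proxies are grouped by region and rotated round-robin, and parsing falls back through a list of extractors when a layout changes. All names, proxy labels, and CSS class names are hypothetical; session warming is not shown.

```python
import itertools
import re
from typing import Callable, Dict, List


class ProxyPool:
    """Round-robin proxy rotation, grouped by target region for geo-targeting."""

    def __init__(self, proxies_by_region: Dict[str, List[str]]):
        self._cycles = {
            region: itertools.cycle(proxies)
            for region, proxies in proxies_by_region.items()
            if proxies
        }

    def next_proxy(self, region: str) -> str:
        if region not in self._cycles:
            raise KeyError(f"no proxies configured for region {region!r}")
        return next(self._cycles[region])


def fetch_with_rotation(url: str, region: str, pool: ProxyPool,
                        fetch: Callable[[str, str], str], max_attempts: int = 3) -> str:
    """Retry through successive proxies from the region's pool; return the first body fetched.

    fetch(url, proxy) is injected, so the transport (an HTTP client or a headless
    browser) can be swapped without touching the rotation logic.
    """
    errors = []
    for _ in range(max_attempts):
        proxy = pool.next_proxy(region)
        try:
            return fetch(url, proxy)
        except Exception as exc:  # blocked, rate-limited, or timed out
            errors.append(f"{proxy}: {exc!r}")
    raise RuntimeError(f"all {max_attempts} attempts failed for {url}: {errors}")


def parse_with_fallbacks(html: str, extractors):
    """Adaptive parsing: try extractors in order so a layout change falls through to the next one."""
    for extract in extractors:
        value = extract(html)
        if value is not None:
            return value
    return None


def old_layout(html):
    m = re.search(r'class="price">([\d.]+)<', html)
    return m.group(1) if m else None


def new_layout(html):
    m = re.search(r'class="price-v2">([\d.]+)<', html)
    return m.group(1) if m else None


# Usage with a fake transport: the first German proxy is "blocked".
def fake_fetch(url, proxy):
    if proxy == "de-proxy-1":
        raise ConnectionError("403 blocked")
    return '<span class="price-v2">19.99</span>'


pool = ProxyPool({"de": ["de-proxy-1", "de-proxy-2"]})
html = fetch_with_rotation("https://shop.example/item/1", "de", pool, fake_fetch)
print(parse_with_fallbacks(html, [old_layout, new_layout]))  # 19.99
```

Injecting the transport keeps the rotation and fallback logic testable offline; the real client, timeouts, and proxy credentials would plug in behind `fetch`.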

GroupBWT’s delivery teams configure distributed clusters that balance speed and accuracy. Systems log every request for audit, handle retries without duplication, and normalize outputs into schemas consumable by BI tools. Without this rigor, scrapers drift, data corrupts, and downstream analytics fail compliance review.
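Retry handling without duplication usually rests on deterministic record keys, and schema normalization on a single target type. The sketch below assumes a hypothetical `PriceRecord` schema, keys each write by a hash of the scrape run ID plus the normalized record so that a retried request cannot insert a second copy, and appends every attempt to an in-memory audit log. A real deployment would likely persist both in a database; this only shows the logic.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from decimal import Decimal


@dataclass(frozen=True)
class PriceRecord:
    """Target schema handed to BI tools (hypothetical field set)."""
    source: str
    sku: str
    price: str     # fixed two-decimal string, avoids float rounding downstream
    currency: str


def normalize(raw: dict, source: str) -> PriceRecord:
    """Map differently named site fields onto one schema.

    Assumes '.' is the decimal separator and ',' only appears as a thousands separator.
    """
    sku = str(raw.get("sku") or raw.get("product_id") or raw["id"]).strip().upper()
    price_text = str(raw.get("price") or raw["amount"]).replace(",", "").strip()
    price = Decimal(price_text.lstrip("$€£"))
    currency = str(raw.get("currency") or "USD").upper()
    return PriceRecord(source, sku, f"{price:.2f}", currency)


class IdempotentSink:
    """Stores each (run, record) pair once and logs every write attempt for audit."""

    def __init__(self):
        self.records = {}
        self.audit_log = []

    def write(self, record: PriceRecord, run_id: str, request_id: str) -> bool:
        # Deterministic key: a retried request within the same run maps to the same key.
        payload = json.dumps({"run_id": run_id, **asdict(record)}, sort_keys=True)
        key = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        stored = key not in self.records
        if stored:
            self.records[key] = record
        self.audit_log.append(
            {"request_id": request_id, "run_id": run_id, "key": key, "stored": stored}
        )
        return stored


sink = IdempotentSink()
rec = normalize({"product_id": " ab-1 ", "price": "$1,299.5"}, source="shop-a")
print(rec)  # PriceRecord(source='shop-a', sku='AB-1', price='1299.50', currency='USD')
print(sink.write(rec, run_id="run-1", request_id="req-1"))        # True
print(sink.write(rec, run_id="run-1", request_id="req-1-retry"))  # False: retry, no duplicate
```

Including the run ID in the key keeps daily snapshots distinct while still collapsing retries inside a single run; the audit log records both attempts either way.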

Executives gain resilience through redundancy. Parallel pipelines benchmark results to expose anomalies, ensuring continuity even if a primary feed fails. This approach transforms infrastructure from experimental tooling into governed supply lines, securing decision-grade data in volatile conditions.
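Benchmarking parallel pipelines can be as simple as cross-checking two independently scraped feeds for coverage gaps and price disagreements. A minimal sketch, assuming each feed is a SKU-to-price dictionary and that a 2% relative difference is the (hypothetical) alert threshold:

```python
from decimal import Decimal


def compare_feeds(primary, secondary, rel_tolerance=Decimal("0.02")):
    """Cross-check two independently scraped price feeds (SKU -> Decimal price).

    Reports SKUs missing from either feed and SKUs whose prices disagree by more
    than rel_tolerance, measured relative to the secondary feed's price.
    """
    missing_in_primary = sorted(secondary.keys() - primary.keys())
    missing_in_secondary = sorted(primary.keys() - secondary.keys())
    mismatches = []
    for sku in sorted(primary.keys() & secondary.keys()):
        a, b = primary[sku], secondary[sku]
        reference = max(abs(b), Decimal("0.01"))  # avoid dividing by zero
        if abs(a - b) / reference > rel_tolerance:
            mismatches.append(sku)
    return {
        "missing_in_primary": missing_in_primary,
        "missing_in_secondary": missing_in_secondary,
        "mismatches": mismatches,
    }


primary = {"A": Decimal("10.00"), "B": Decimal("20.00"), "C": Decimal("5.00")}
secondary = {"A": Decimal("10.10"), "B": Decimal("25.00"), "D": Decimal("7.00")}
print(compare_feeds(primary, secondary))
# {'missing_in_primary': ['D'], 'missing_in_secondary': ['C'], 'mismatches': ['B']}
```

Anomalies surface as SKUs missing from one feed or priced inconsistently across feeds; if the primary feed goes dark entirely, the secondary feed remains available to serve decisions.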

Integration with Enterprise Systems

Host: “It can be as simple as a spreadsheet or API integration.”

Integration determines whether scraped signals become action or rot unused. Small teams may receive exports in spreadsheets to test hypotheses quickly. Enterprises demand direct delivery into ERP, CRM, or pricing engines, ensuring decisions align with current market signals.

Executives exploring how to improve their business using web scraping recognize that disconnected feeds introduce delays that erase the value of collecting the data in the first place. GroupBWT’s engineers deliver connectors (APIs, data warehouses, or dashboards) that drop structured outputs into existing systems. Marketing adjusts campaigns within hours, procurement adjusts contracts before penalties apply, and finance recalibrates pricing models without waiting for quarterly reporting.
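At the simple end of the integration spectrum, the same normalized rows can be delivered as a spreadsheet export or as a JSON payload for an API connector. The sketch uses only Python’s standard `csv` and `json` modules; the payload layout (`dataset`, `generated_at`, `count`, `rows`) is a hypothetical contract, since the actual shape depends on the receiving ERP, CRM, or pricing engine.

```python
import csv
import io
import json


def to_csv(rows, fieldnames):
    """Spreadsheet-friendly export for small teams testing hypotheses."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_api_payload(rows, dataset, generated_at):
    """JSON body a downstream connector could accept (hypothetical contract)."""
    return json.dumps(
        {"dataset": dataset, "generated_at": generated_at, "count": len(rows), "rows": rows},
        sort_keys=True,
    )


rows = [{"sku": "AB-1", "price": "1299.50", "source": "shop-a"}]
print(to_csv(rows, ["sku", "price", "source"]))
print(to_api_payload(rows, "competitor_prices", "2025-01-01T00:00:00Z"))
```

Keeping both outputs fed from one list of rows means a team can start with the spreadsheet and move to the API connector later without changing the upstream pipeline.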

Integration discipline avoids bottlenecks. Without it, raw feeds stack up, teams drown in unused datasets, and investments collapse into cost centers. With it, data becomes operational currency that circulates across business units in real time.

Governance and Compliance

Host: “Reputable providers build compliance right into how they do things—like GDPR.”

GroupBWT’s compliance framework operates as an insurance policy. By embedding GDPR and regional safeguards, pipelines pass audits quickly, accelerate legal reviews, and cut launch delays by weeks. Firms without governed processes face stalled campaigns and regulatory scrutiny.

Executives see compliance not as a constraint but as continuity. Governed pipelines preserve access to markets, reduce retroactive audit costs, and protect brand equity. In volatile conditions, the payoff is simple: uninterrupted data flows, lower legal exposure, and faster approvals for product or campaign launches.

Implementation architecture dictates whether web scraping for business growth remains an experiment or becomes an enterprise asset. Service models provide speed, and custom solutions deliver depth. Infrastructure secures reliability under load. Integration ensures signals flow into daily operations. Governance transforms compliance from liability to a shield. Boards that design pipelines on these principles protect margin, accelerate cycles, and preserve continuity against market volatility.

Host: “Become a data refiner, not just a consumer.”

Enterprises that treat web scraping as a discretionary tool remain reactive, exposed to regulatory shocks and compressed margins. Businesses that partner with a web scraping professional and run scraping as governed infrastructure refine digital exhaust into measurable value: sharper pricing, faster approvals, and resilient continuity.

For boards, the directive is clear.

- Frame pipelines as core infrastructure.

- Apply governance early to accelerate approvals.

- Tie every data stream to margin, churn, or compliance.

- Scale from signals to ROI engines.

The question is no longer why a business might use web scraping to collect data, but how fast leaders can implement governed pipelines. Those who act refine volatility into opportunity. Those who delay stay exposed.

FAQ


Why might a business use web scraping to collect data?

Executives use scraping pipelines to replace lagging research with live signals. Retail price shifts, customer complaints, and policy notices surface in real time, reducing detection latency and protecting margin in volatile markets.


How to improve your business using web scraping without drowning in raw feeds?

Boards demand focus. Scraping without filters floods systems with unusable data. Enterprises integrate structured feeds into pricing, supply, or compliance engines, turning streams into ROI levers instead of overhead.


What risks do businesses that use web scraping face?

Delivery teams face three risks: compliance penalties, operational fragility, and reputational exposure. Governed pipelines address each by anonymizing inputs, logging provenance, and embedding safeguards that accelerate approvals and secure continuity.


How can leaders identify market opportunities for business growth using web scraping?

Executives track granular demand signals missed by averages. Forum complaints, local price deltas, or sentiment clusters reveal underserved zones. Targeted entry based on those signals allocates capital more efficiently.


Do service models and custom pipelines deliver equal value?

Service feeds answer “what happened.” They offer snapshots but lack resilience. Custom pipelines embed into enterprise systems, automate compliance, and scale with strategy. The difference is continuity: resilience under stress.