Most scraping outages are architecture failures that only surface at scale. In our work at GroupBWT building external data platforms, reliability comes from controlled scheduling, identity management, observability, and governance.

Engineering leaders reach for proxy pools and IP rotation when reliability, scale, and geography collide.

This guide explains how to integrate IP rotation into a production-grade web scraping system without turning operations into manual firefighting.

For a broader view of external data acquisition, see how we design web scraping services and data mining solutions as end-to-end platforms, not isolated scripts.

GroupBWT does not sell a standalone proxy product. We architect the complete external data-collection system and operate proxy networks as interchangeable modules inside it.

Engineering leaders need rotating proxies when reliability, scale, and geography collide

This layer becomes a priority once scraping is business-critical and teams cannot tolerate gaps.

Leaders rarely start with vendors; they start with symptoms:

- The product pricing dashboard shows missing data for several days each month.

- Risk or revenue reports skip entire regions because scraping jobs fail.

- Teams spend hours each week restarting crawlers and switching IPs.

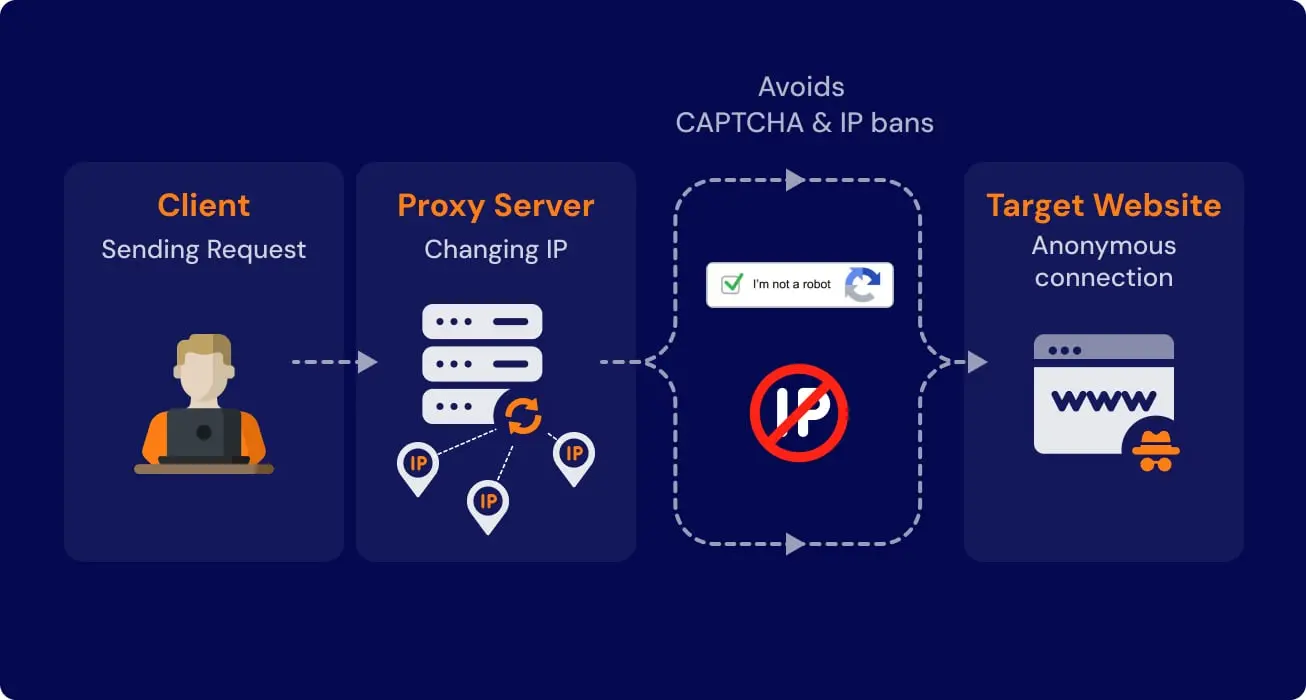

Underneath those symptoms are blocks, CAPTCHAs, geo-restrictions, and throttling. Every HTTP request carries an IP address, and websites use that address to enforce rate limits, apply geo rules, detect automation patterns, and ban unwanted clients.

As scraping grows from a few thousand to hundreds of thousands of requests per day, a single IP or a small static pool fails. Error rates rise, backfill batches expand, and stakeholders lose trust in dashboards and models.

In some environments, rotating proxies across regions is the only viable way to keep coverage consistent.

Rotating Proxies Overview: Reliability, Cost, and Governance

Rotating proxies are a network egress control layer: they route requests through a pool of IPs so your system can sustain coverage across regions, rate limits, and target protection patterns. They do not “solve scraping.” They shift the constraint from “one IP gets limited” to “your team must govern identity, sessions, and routing policy as production infrastructure.”

GroupBWT’s Rotation Triangle: Coverage, Consistency, Governance

Use this triangle to decide whether rotation is an enabler or an ops tax:

- Coverage: Can you keep data flowing across regions and peak periods when targets enforce limits?

- Consistency: Can you keep sessions stable when the journey depends on cookies, carts, or localized content?

- Governance: Can you explain and reproduce “which IP, which region, which policy” for every dataset shipped into dashboards and models?

If you cannot operate all three, rotation increases incident load and stakeholder distrust.

Where rotating proxies fit in a production architecture

A reliable external data flow places rotation in one controlled layer:

- Scheduler/orchestrator (jobs, cadence, concurrency)

- Crawler/browser layer (cookies, journeys, parsing)

- Network egress layer (rotating proxy policy: geo routing, session rules, IP assignment)

- Resilience layer (timeouts, retries, fallbacks tied to signals)

- Ingestion/storage (queues, object storage, lake/warehouse)

- Quality & governance (coverage checks, anomaly detection, lineage)
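A minimal sketch of what "rotation in one controlled layer" can look like, assuming Python 3.10+ and asyncio: crawlers ask a single egress object for a target's policy and a concurrency slot instead of choosing IPs themselves. `TargetPolicy`, `EgressLayer`, their fields, and the `shop.example` domain are hypothetical illustrations, not a GroupBWT or provider API.

```python
import asyncio
from contextlib import asynccontextmanager
from dataclasses import dataclass
from typing import AsyncIterator


@dataclass(frozen=True)
class TargetPolicy:
    """Hypothetical per-target egress policy, owned by the network layer."""
    region: str           # geo routing, e.g. "de"
    sticky_session: bool  # keep one IP per session for cart/login journeys
    max_concurrency: int  # cap on parallel requests to this target


class EgressLayer:
    """Single place where routing policy and per-domain concurrency caps live."""

    def __init__(self, policies: dict[str, TargetPolicy], default: TargetPolicy) -> None:
        self._policies = policies
        self._default = default
        self._semaphores: dict[str, asyncio.Semaphore] = {}

    def policy_for(self, domain: str) -> TargetPolicy:
        return self._policies.get(domain, self._default)

    @asynccontextmanager
    async def slot(self, domain: str) -> AsyncIterator[TargetPolicy]:
        # One semaphore per domain; callers wait here once the cap is reached.
        policy = self.policy_for(domain)
        sem = self._semaphores.get(domain)
        if sem is None:
            sem = self._semaphores[domain] = asyncio.Semaphore(policy.max_concurrency)
        async with sem:
            yield policy


async def demo(n_requests: int = 10) -> int:
    """Fire n requests at one capped target; return the peak number in flight."""
    layer = EgressLayer(
        {"shop.example": TargetPolicy(region="de", sticky_session=True, max_concurrency=2)},
        default=TargetPolicy(region="us", sticky_session=False, max_concurrency=5),
    )
    in_flight = peak = 0

    async def fake_fetch() -> None:
        nonlocal in_flight, peak
        async with layer.slot("shop.example"):
            in_flight += 1
            peak = max(peak, in_flight)
            await asyncio.sleep(0.01)  # stands in for an HTTP call routed per policy
            in_flight -= 1

    await asyncio.gather(*(fake_fetch() for _ in range(n_requests)))
    return peak
```

Running `asyncio.run(demo())` shows that ten concurrent requests never exceed the two-request cap for that target, regardless of how many IPs sit behind the layer.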

Common programs include price and availability monitoring, SERP tracking, marketplace surveillance, and app-like journeys, supported by our web scraping services and mobile app scraping solutions. For implementation guidance, see our web scraping services overview and how to scrape a mobile app.

Risk register (minimum viable governance)

| Risk | Signal | Control | Owner |
| --- | --- | --- | --- |
| Session breakage | Login/cart inconsistency, locale flips | Sticky sessions, per-target session policy | Data Engineering |
| Over-parallelism | Spikes in challenges/errors per domain | Concurrency caps per target | Platform/Infra |
| Retry amplification | Rapid repeat failures after blocks | Capped retries + backoff by signal | Platform/Infra |
| Coverage drift | Sudden drop in unique items/routes/offers | Coverage baselines + alerts | Analytics/QA |
| Audit gaps | “Can’t reproduce where data came from” | IP/region/policy logging + lineage | Security/GRC |
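The "coverage baselines + alerts" control can start very small. A minimal sketch, assuming you already count unique items (or routes, or offers) per run for each target; the median baseline and the 20% drop threshold are illustrative assumptions to tune per target.

```python
from statistics import median


def coverage_drift_alert(history: list[int], today: int, max_drop: float = 0.2) -> bool:
    """Return True when today's unique-item count falls more than max_drop below baseline.

    history: unique items/routes/offers from recent comparable runs
    (same target, same cadence).
    """
    if not history:
        return False  # no baseline yet, so nothing to compare against
    baseline = median(history)  # the median resists one unusually bad or good run
    if baseline == 0:
        return False
    return (baseline - today) / baseline > max_drop
```

Run it per target after each ingestion batch and route `True` results to the owner named in the register.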

2-minute Rotation Fit Check

Answer “yes/no”: (1) pricing/revenue/risk reporting depends on this feed; (2) multi-region reliability is required; (3) one owner exists for egress policy and monitoring.

If (1) and (2) are “yes” and (3) is “no”, treat rotation as an architecture initiative, not a tooling toggle.

If you are designing whole external data flows, align this layer with the big data pipeline architecture so acquisition and consumption share observability.

Decision matrix: when static is enough and when rotation is mandatory

Use three dimensions:

- Traffic volume.

- Target protection level.

- Business impact of missing or wrong data.

Rotating Proxy Decision Matrix

| Traffic, protection, and risk profile | Recommended approach | Notes |
| --- | --- | --- |
| Medium volume or medium protection | Rotating datacenter proxies | Concurrency caps per target |
| High volume, strong protection, or high risk | Residential rotating proxies + device emulation | Tight monitoring, region controls |
| Account-based or login flows | Dedicated rotating proxies with sticky sessions | Continuity per session |

If repeated blocks appear and region gaps persist, the decision is architectural: build a rotating proxy layer with explicit per-target rules.
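The matrix can be encoded as a small, reviewable routing rule. A sketch under stated assumptions: the three-level scale, checking login flows first, and the static-IP fallback for low volume and low protection (implied by this section's title but not a row in the table) are our illustrative choices.

```python
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def recommend_pool(volume: Level, protection: Level, risk: Level, login_flow: bool) -> str:
    """Map one target onto the decision matrix (hypothetical encoding)."""
    if login_flow:
        # Account-based journeys need session continuity before anything else.
        return "dedicated rotating proxies with sticky sessions"
    if Level.HIGH in (volume, protection, risk):
        return "residential rotating proxies + device emulation"
    if Level.MEDIUM in (volume, protection):
        return "rotating datacenter proxies"
    # Assumed fallback: no row in the table covers this case explicitly.
    return "static IPs (revisit if blocks or region gaps appear)"
```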

“We operate across multiple markets and needed pricing visibility that stayed consistent through peak periods. GroupBWT designed a target-by-target approach: different pools where needed, strict concurrency limits, and a modular setup so providers remain replaceable. We moved from unstable feeds to reliable inputs for pricing and promotion decisions.”

— VP Pricing & Growth Analytics, Competitive Intelligence, Beauty and Personal Care, 2025

How to configure proxy rotation safely in production

Programs fail when cadence, concurrency, and retries are unmanaged.

Rotation and session settings are the main levers

Three parameters matter:

- Rotation frequency

  - Per request: a new IP for each call.

  - Per N requests: send a batch of requests through one IP, then move to the next IP in the pool.

  - Time-based: rotate to a new IP after N seconds or minutes.

- Concurrency per target

  - Too many parallel requests to one host still look abnormal, even when each request leaves from a different IP.

- Retries and backoff

  - Immediate retries from a new IP after a detected block can compound failures. Use capped retries per IP and exponential backoff tied to signals.
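The rotation modes and signal-aware backoff can be sketched in a few dozen lines. This assumes a static list of proxy URLs and a crawler that classifies failures into signal labels; `ProxyRotator`, the signal names, and the retry budgets are hypothetical and illustrative, and the retry cap here is per request rather than per IP.

```python
import itertools
import random
import time
from typing import Callable, Optional


class ProxyRotator:
    """Hypothetical rotator implementing the three rotation-frequency modes.

    "per_request": new IP on every call
    "per_n":       reuse one IP for n requests, then move to the next IP
    "time":        reuse one IP until interval_s seconds have elapsed
    """

    def __init__(self, pool: list[str], mode: str = "per_request", n: int = 10,
                 interval_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        if not pool:
            raise ValueError("proxy pool is empty")
        if mode not in {"per_request", "per_n", "time"}:
            raise ValueError(f"unknown rotation mode: {mode}")
        self._cycle = itertools.cycle(pool)
        self.mode, self.n, self.interval_s, self._clock = mode, n, interval_s, clock
        self._current = next(self._cycle)
        self._used = 0
        self._since = clock()

    def next_proxy(self) -> str:
        if self.mode == "per_request":
            rotate = self._used > 0
        elif self.mode == "per_n":
            rotate = self._used >= self.n
        else:
            rotate = self._clock() - self._since >= self.interval_s
        if rotate:
            self._current = next(self._cycle)
            self._used = 0
            self._since = self._clock()
        self._used += 1
        return self._current


# Retry budget and slow-down signals (illustrative values, tune per target).
MAX_ATTEMPTS = {"timeout": 4, "server_error": 3, "rate_limited": 3, "blocked": 1}
SLOW_SIGNALS = {"rate_limited", "blocked"}


def backoff_delay(attempt: int, signal: str, base_s: float = 1.0,
                  cap_s: float = 300.0) -> Optional[float]:
    """Seconds to wait before retry number `attempt` (0-based); None means stop.

    Unknown signals get no retries, and block/rate-limit signals wait longer,
    so a detected block does not trigger a burst of retries from fresh IPs.
    """
    if attempt >= MAX_ATTEMPTS.get(signal, 0):
        return None
    multiplier = 4.0 if signal in SLOW_SIGNALS else 1.0
    delay = min(cap_s, base_s * multiplier * 2 ** attempt)
    # Jitter spreads retries so failures that happened together do not retry together.
    return delay * random.uniform(0.5, 1.0)
```

Injecting `clock` keeps the time-based mode testable without real sleeps.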

Monitoring and metrics close the feedback loop

Baseline metrics:

- Success rate per target (2xx/3xx vs errors).

- 4xx/5xx distribution by endpoint.

- Challenge frequency.

- Latency by region and pool type.

- Cost per successful record.
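The baseline metrics can be computed from one request-level log. A minimal sketch, assuming the crawler records one row per request with the hypothetical `RequestLog` fields below; success follows the 2xx/3xx definition above, and cost per successful record divides total spend (including failed requests) by the records actually delivered.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass
class RequestLog:
    """One request outcome as the crawler might log it (hypothetical schema)."""
    target: str
    region: str
    pool: str          # e.g. "residential" or "datacenter"
    endpoint: str      # path or route template, e.g. "/product"
    status: int        # HTTP status code
    challenged: bool   # crawler detected a CAPTCHA or interstitial
    latency_ms: float
    records: int       # records parsed successfully from the response
    cost: float        # proxy spend attributed to this request


def target_metrics(logs: list[RequestLog], target: str) -> dict:
    rows = [r for r in logs if r.target == target]
    if not rows:
        return {}
    latency: dict[tuple[str, str], list[float]] = defaultdict(list)
    for r in rows:
        latency[(r.region, r.pool)].append(r.latency_ms)
    records = sum(r.records for r in rows)
    # Failed requests still cost money, so divide total spend by successful records.
    spend = sum(r.cost for r in rows)
    return {
        "success_rate": sum(200 <= r.status < 400 for r in rows) / len(rows),
        "error_mix": Counter((r.endpoint, r.status) for r in rows if r.status >= 400),
        "challenge_rate": sum(r.challenged for r in rows) / len(rows),
        "median_latency_ms": {key: median(vals) for key, vals in latency.items()},
        "cost_per_successful_record": spend / records if records else float("inf"),
    }
```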

“We needed regional rate shopping feeds we could trust every morning, not partial coverage that shifted week to week. GroupBWT built a controlled proxy rotation layer with per-domain concurrency rules and clear metrics for success rate, latency, and cost per record. The result was consistent multi-country coverage and fewer manual interventions from our team.”

— Director of Revenue Operations, Rate Shopping & Market Intelligence in OTA (Travel) Scraping, 2025

Proxy network types: assign each type a job

Choose an IP source type based on target behavior and risk.

Residential pools

Residential pools often perform well on guarded consumer journeys. If you need a rotating residential proxy for a narrow region, treat it as a dedicated pool with explicit limits and logs.

Datacenter pools

Datacenter IPs can scale cost-effectively, but some targets fingerprint them quickly.

Mobile pools

Mobile carrier IPs can unlock mobile-first flows when combined with device emulation.

Dedicated and ISP pools

Dedicated pools can support continuity and reputation building over time.

Compliance and governance: access never equals permission

Technical reach does not grant permission. Leaders care about legal boundaries, contractual terms, privacy obligations, and reputational exposure.

Data access boundaries must be explicit

Differentiate:

- Public, unprotected content.

- Authenticated or paywalled content.

- Data that may include personal information.

Before rollout, review site terms and internal legal guidance. Document providers, regions, and policy changes.

Traceability is part of the design

Responsible use includes minimizing unnecessary personal data, separating storage zones, logging access paths and transformations, and documenting routing policy.
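Traceability is easiest when every fetch emits a lineage line at acquisition time. A minimal sketch, assuming an append-only JSON-lines log; the field names, the `policy_version` convention, and the example values (including the documentation-range IP) are hypothetical. Hashing the payload lets the stored raw response be matched to its routing decision without copying potentially personal content into the log.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AcquisitionRecord:
    """Hypothetical lineage entry: which IP, which region, which policy produced a payload."""
    dataset: str
    url: str
    egress_ip: str
    region: str
    provider: str
    policy_version: str   # version of the routing policy in force, e.g. a git tag
    fetched_at: str       # UTC timestamp in ISO 8601
    payload_sha256: str   # ties the stored raw payload to this entry


def lineage_record(dataset: str, url: str, egress_ip: str, region: str,
                   provider: str, policy_version: str, payload: bytes) -> str:
    """Return one JSON line to append to the acquisition log."""
    rec = AcquisitionRecord(
        dataset=dataset, url=url, egress_ip=egress_ip, region=region,
        provider=provider, policy_version=policy_version,
        fetched_at=datetime.now(timezone.utc).isoformat(),
        payload_sha256=hashlib.sha256(payload).hexdigest(),
    )
    return json.dumps(asdict(rec), sort_keys=True)
```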

“Our priority was control: where traffic routes, how policies are documented, and how every data point can be traced back to source and method. GroupBWT treated proxy routing as a governed layer, with logging standards and clear separation between acquisition and storage. We gained a predictable external data feed that met internal security and audit requirements.”

— Head of Risk Analytics, External Signals & Compliance Reporting, 2024

DIY vs managed: where internal efforts usually break

Many teams start with self-hosted servers, small IP pools, and scripts. Breakpoints appear when targets harden, volumes expand, and data becomes business-critical.

Common DIY failure patterns:

- Configuration drift across teams and scripts.

- Maintenance load that pulls engineers away from products.

- Scaling limits in pool size, bandwidth, and regional coverage.

- Weak observability and slow root-cause work.

If you need guidance on how to make rotating proxies in-house, treat the effort as infrastructure engineering: IP sourcing, proxy servers, policy enforcement, encryption support, and central monitoring.

Case study: vehicle rental pricing and fleet planning

A European vehicle rental company needed real-time pricing data across many regions and listing platforms. Earlier scripts and small pools produced frequent blocks, inconsistent coverage, and delayed feeds.

GroupBWT built a collection platform that scraped 18 primary sources, mixed pools by target, tuned per-source policies, and fed normalized outputs into pricing models and dashboards. The program treated the network layer as a controlled module, similar in spirit to a scraping-as-a-service offering.

How GroupBWT selects and manages providers

We use an evidence-based process rather than marketing claims.

- Benchmark first on real targets and real flows: success rate, latency, consistency, and cost per successful record.

- Design for modularity so providers can be mixed or replaced without rewiring every crawler.

- Match strengths to use cases by region, target type, and risk lane.

- Monitor continuously and adjust routing rules as targets change.
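The benchmark step reduces to one comparison per target. A sketch assuming every candidate ran the same target mix in a trial; `TrialResult`, the placeholder provider names, and the 90% minimum success rate are illustrative assumptions, not published benchmark data.

```python
from dataclasses import dataclass


@dataclass
class TrialResult:
    provider: str
    target: str
    requests: int
    successes: int   # requests that returned usable records
    records: int     # successful records delivered
    cost: float      # total trial spend on this target


def rank_providers(results: list[TrialResult], target: str,
                   min_success: float = 0.9) -> list[tuple[str, float, float]]:
    """Rank providers for one target by cost per successful record, cheapest first.

    Providers below min_success are dropped: a cheap pool that misses data
    is not actually cheaper once gaps reach dashboards.
    """
    ranked = []
    for r in results:
        if r.target != target or r.requests == 0 or r.records == 0:
            continue
        success_rate = r.successes / r.requests
        if success_rate < min_success:
            continue
        ranked.append((r.provider, success_rate, r.cost / r.records))
    return sorted(ranked, key=lambda row: row[2])
```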

In provider testing, NetNut can be one candidate in the benchmark set. Evaluate it like any other pool: on per-target success rate and cost per successful record, tested alongside other pools against the same target mix.

Clients see one external data platform; behind it, strategy evolves with targets and business needs. The same platform supports data extraction services and adjacent work such as MVP software development when external data powers new products. For vendor landscape context, review best companies for web data extraction.

Practical takeaway: architecture checklist

Use this checklist to structure work across engineering, product, and legal.

Architecture and decision-making

- Map targets, volumes, and risk into the matrix.

- Define ownership for the network egress layer.

- Decide where a static list is sufficient and where proxy rotation by policy is required.

Configuration and monitoring

- Set cadence patterns per target and measure outcomes.

- Document concurrency caps per domain.

- Implement retries and backoff tied to signals.

- Build dashboards for success rate, error mix, latency, and cost per successful record.

Proxy types and providers

- Choose an IP mix by use case, including USA rotating proxies where regional access is required.

- Identify where cheap rotating proxies are unacceptable because of risk, and where they may be acceptable for low-risk sources.

Compliance and governance

- Classify targets by public/authenticated/personal-data risk.

- Review legal guidance, site terms, and regional data rules.

- Document policy, providers, and logging standards.

For broader constraints and practical trade-offs, see web scraping challenges.

If your metrics show unstable coverage, the next step is architectural: design the network layer so a rotating proxy is a controlled module, not an ad-hoc patch.

FAQ


How to use rotating proxies for web scraping in practice?

You:

- Select a provider that supports the required IP types and regions.

- Configure per-target routing and session policy.

- Limit concurrency per domain.

- Integrate at the HTTP client or browser layer.

- Monitor success, errors, latency, and unit economics.

If you want the best rotating proxies, define “best” as “best for your targets, regions, and cost per record”, then validate through benchmarks.
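For the "integrate at the HTTP client layer" step, a minimal standard-library sketch. It assumes the provider exposes a single gateway URL that handles rotation on its side (check your provider's documentation); `gateway.example`, the port, and the credentials are placeholders, and session stickiness is provider-specific and not shown here.

```python
import urllib.request


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Send both http:// and https:// requests through one proxy endpoint.

    proxy_url is a placeholder such as "http://user:pass@gateway.example:8000";
    urllib reads credentials embedded in the URL for proxy authentication.
    """
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url: str, proxy_url: str, timeout_s: float = 30.0) -> tuple[int, bytes]:
    """Fetch one URL via the proxy; HTTP 4xx/5xx raise urllib.error.HTTPError."""
    opener = build_proxy_opener(proxy_url)
    with opener.open(url, timeout=timeout_s) as resp:
        return resp.status, resp.read()
```

Keeping proxy configuration inside one factory function means crawlers never hard-code endpoints, which is what keeps providers replaceable.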


How do you set up rotation yourself?

If your team is asking how to rotate proxies, start with the target policy (limits, cadence, session rules), then implement rotation in one place and measure outcomes. If you want a minimal mental model, rotate proxies by policy and treat error signals as feedback.

For teams comparing approaches: proxy rotation covers only the network routing step, while the rest of the system is scheduling, parsing, quality checks, and governance. Track results using cost per successful record, not request volume.
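One way to treat error signals as feedback is to let outcomes adjust each target's concurrency limit. The sketch below swaps in an additive-increase/multiplicative-decrease (AIMD) rule, a common congestion-control pattern, as an assumption; `FeedbackLimiter`, the signal names, the window, and the bounds are illustrative.

```python
class FeedbackLimiter:
    """Per-target concurrency limit driven by outcome signals (AIMD-style, assumed rule)."""

    BLOCK_SIGNALS = {"blocked", "rate_limited", "challenge"}

    def __init__(self, start: int = 4, floor: int = 1, ceiling: int = 32,
                 window: int = 50) -> None:
        self.limit = start
        self.floor, self.ceiling, self.window = floor, ceiling, window
        self._ok_streak = 0

    def record(self, signal: str) -> int:
        """Update the limit from one outcome; other signals leave it unchanged."""
        if signal in self.BLOCK_SIGNALS:
            self.limit = max(self.floor, self.limit // 2)  # back off fast on block signals
            self._ok_streak = 0
        elif signal == "ok":
            self._ok_streak += 1
            if self._ok_streak >= self.window:
                self.limit = min(self.ceiling, self.limit + 1)  # probe upward slowly
                self._ok_streak = 0
        return self.limit
```

Halving on blocks and growing by one after a run of successes keeps pressure on a target low by default and only recovers parallelism once the target tolerates it.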


When does web scraping become essential for retail and marketplaces?

Retailers and marketplaces rely on external data to:

- Compare prices and promotions daily.

- Watch stock levels and assortment changes.

- Track reviews and ratings at the product level.

Rotating proxies keep these feeds continuous when targets enforce geo- and rate-limits. This external view complements internal transaction data, supporting more accurate pricing, inventory, and marketing decisions.