If you’re wondering how to make a dataset that doesn’t collapse under compliance review or performance drift, this guide answers exactly that.

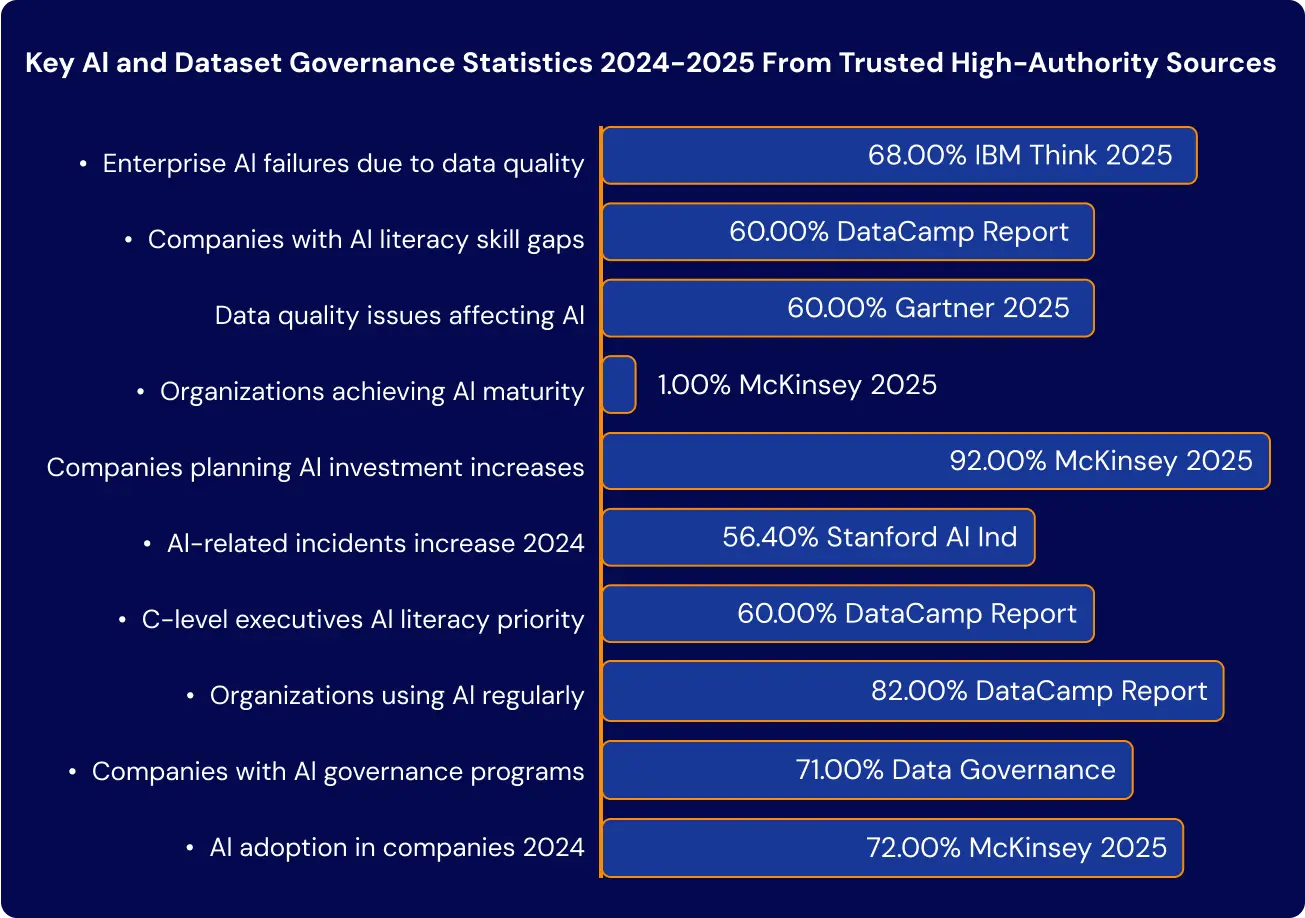

92% of companies plan to scale AI in 2025. But over 68% of failures still trace back to dataset quality, not model performance.

From hidden drift and mislabeled inputs to unverifiable sources, most teams don’t fail at modeling. They fail long before—because their data can’t be trusted, audited, or reused.

This GroupBWT guide is for leaders solving high-stakes problems, where missing a clause, a name, or a demographic slant means legal risk, wrong output, or millions in missed value.

Dataset creation in 2025 is regulated infrastructure, not a file export

If you’re asking how to create a dataset, you’re rarely looking for a Python function. You’re looking for a reliable, auditable, and usable dataset that doesn’t break your model, your product, or your compliance review.

This matters most for leaders operating in high-stakes environments, where missing a clause, a name, or a demographic slant becomes legal risk or wrong output at scale.

The contrarian truth: “creating a dataset” starts before you collect anything

In 2025, “creating a dataset” means more than exporting rows into a CSV.

A dataset breaks in production because it was designed as a file, not as a governed system.

At GroupBWT, we’ve seen the same pattern repeat: most teams can “find data,” but they struggle to trust it, use it, and avoid regret later. From our experience, 90% of enterprise teams underestimate what it takes to build a dataset that survives review—which is why we treat dataset work as a pipeline from source to audit, not a labeling task.

The GroupBWT P3 Dataset Blueprint: Purpose → Provenance → Performance

Use this as the mental model for how to make a dataset that doesn’t collapse under compliance review or performance drift.

- Purpose (what it’s for, and what it must exclude)

Your first dataset decision is scope, not tooling: knowing what to include—and what to exclude.

If the dataset cannot be tied to a single decision it will power, you will over-collect, mislabel, and later fail review.

- Provenance (proof of origin, handling, and transformations)

Production AI runs on proof: proving where it came from, who touched it, and how it’s been transformed.

If you cannot answer these questions quickly, the dataset is not auditable, and audit risk becomes an engineering fire drill.

- Performance (structure, labeling, and drift resistance)

Your model can only learn what your dataset can express: structuring and labeling data in ways your model understands.

Then you harden it against reality: making sure it won’t fail in legal review or trigger model drift two weeks after deployment.

“A dataset is only as good as its resistance to reality. The web changes every second. If your dataset is a snapshot, your model is already decaying. We engineer pipelines that treat data collection as a continuous integration process, automatically flagging schema drift and retraining triggers so your AI doesn’t learn from a world that no longer exists.”

— Alex Yudin, Head of Data Engineering, GroupBWT

The Dataset Creation Brief

Use this brief as an approval gate. If any field is “unknown,” the project is not ready.

- Decision powered: what decision will this data power, and who owns that decision

- Jurisdiction & constraints: regions served, retention limits, consent requirements

- Inclusion/exclusion rules: what you will not collect, and why

- Source list: systems/sites/APIs, plus “proof of access” per source

- Label taxonomy: labels, definitions, edge cases, escalation path

- Quality thresholds: acceptance criteria for accuracy, completeness, and coverage

- Lineage requirements: logs, timestamps, versioning rules, rollback plan

- Drift plan: what is monitored, how often, alert thresholds, retraining trigger

- Audit package: what must be exportable for compliance review

- Sign-offs: Product, Data, Legal/Compliance (named owners)
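The approval gate described above can be sketched in a few lines of Python. This is a minimal illustration, not a GroupBWT tool: the `DatasetBrief` class and its field names are hypothetical and simply mirror the brief's ten entries.

```python
from dataclasses import dataclass, fields


# Hypothetical field names that mirror the brief above; adapt them to your own template.
@dataclass
class DatasetBrief:
    decision_powered: str = "unknown"
    jurisdiction_constraints: str = "unknown"
    inclusion_exclusion_rules: str = "unknown"
    source_list: str = "unknown"
    label_taxonomy: str = "unknown"
    quality_thresholds: str = "unknown"
    lineage_requirements: str = "unknown"
    drift_plan: str = "unknown"
    audit_package: str = "unknown"
    sign_offs: str = "unknown"


def missing_fields(brief: DatasetBrief) -> list[str]:
    """Return the names of fields that are empty or still marked 'unknown'."""
    return [
        f.name
        for f in fields(brief)
        if getattr(brief, f.name).strip().lower() in ("", "unknown")
    ]


def is_ready(brief: DatasetBrief) -> bool:
    """The gate passes only when every field has a real answer."""
    return not missing_fields(brief)


draft = DatasetBrief(
    decision_powered="Flag payment events for manual review; owner: Head of Risk",
    source_list="Six internal payment systems",
)
print(is_ready(draft))  # → False
print(missing_fields(draft))
```

Running a check like this in the project kickoff makes the "not ready" state explicit instead of leaving it to a reviewer's memory.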

Keep reading to see what it takes to create datasets.

Why Datasets Exist (The Global Context & 2025 Shifts)

Enterprise AI is scaling, but most systems still break at the data layer.

McKinsey’s 2025 State of AI found that while 92% of organizations plan to grow their AI investments in 2025, only 1% report true AI maturity. What’s holding them back? Not compute. Not models. The bottleneck is data: missing structure, missing context, missing accountability. And 68% of enterprise AI failures are caused by dataset quality issues, not algorithms.

“We need to stop thinking of datasets as files and start treating them as infrastructure. A static CSV is a liability the moment it’s saved. A governed dataset is a living system—it has versioning, it has lineage, and most importantly, it has an audit trail that proves it wasn’t just ‘found’ but engineered for a specific purpose.”

— Eugene Yushenko, CEO, GroupBWT

Stanford HAI recorded a 56.4% rise in AI incidents, with most traced back to dataset drift, bias, or lack of governance.

This warning has reached the boardroom. 60% of executives now cite AI literacy as a top workforce priority, and Gartner’s 2025 outlook flags dataset governance as a top-five risk in AI implementation.

If your dataset is:

- labeled inconsistently,

- legally ambiguous,

- or sourced from unstable inputs

then even the best model will drift, break, or violate compliance by default.

What’s shifting in 2025 is not just the volume of AI adoption. It’s how companies treat their AI data readiness: from passive input to regulated infrastructure.

Why Can’t You Just Use Kaggle, HuggingFace, or ChatGPT Outputs?

If you’re asking this, you’re not alone—and you’re asking the right question.

Most teams exploring AI don’t start with a blank file. They start with what’s publicly available:

- Datasets from Kaggle

- Pretrained embeddings from HuggingFace

- Outputs generated by ChatGPT or similar LLMs

And those are great… until they’re not.

Pretrained ≠ Production-Ready

Off-the-shelf data can help prototype a model. But production systems break when:

- Your dataset has no traceability

- Your use case requires domain-specific context

- You can’t verify if the data was legally collected

- You’re optimizing for accuracy, fairness, or regulatory scope

Using public datasets may get you to a demo.

But without a custom dataset that matches your context, jurisdiction, and accuracy thresholds, your model becomes a liability.

Real-World Systems Run on Proof

Kaggle datasets are curated for competitions.

HuggingFace models are trained on public web data.

LLMs like ChatGPT can generate synthetic data, but:

- You can’t explain how that data was generated

- You can’t retrain on user-specific edge cases

- You can’t audit or fix hallucinations

In regulated industries, this is unacceptable.

In performance-critical use cases, it’s a silent risk.

If you can’t trace your dataset:

- You can’t monitor drift

- You can’t explain outcomes to regulators

- You can’t improve your model with confidence

Your Dataset Is Your Product

If you want to build a dataset that supports multilingual outputs, regulatory filters, or real-time analytics, the process must start with structural clarity, not file exports. That holds whether you are building:

- A claims-processing AI

- A multilingual chatbot

- A cross-border recommendation engine

The quality, fairness, and stability of your dataset define your model’s success.

It’s not just a support asset, but the foundation.

How to Build a Dataset: Two Starting Points

Most teams come to us from one of two starting points.

Either they have too much messy data and don’t know how to use it,

or they have a high-stakes goal but no datasets at all.

Whichever path you’re on, the outcome is the same:

You need a dataset that works in production and won’t collapse in compliance.

That’s why our web scraping service provider model is often the first step—helping teams extract clean, labeled inputs from dynamic or unstructured sources before they even begin modeling.

Use Case A: You Have Raw Data — but It’s Unusable

A European fintech company came to us with 2.7M payment events across six internal systems.

They had:

- fragmented schemas

- no consistent labels

- no visibility into which records were legally usable

We cleaned, restructured, and labeled every record to match audit-grade standards:

– Schema normalization across sources

– Field-level lineage and consent status

– Versioned snapshots with retraining logic built-in

Result: The company passed its regulatory review on the first attempt and deployed a fraud detection model with 22% higher accuracy on edge cases.

“I’ve seen projects stall for months because legal asked, ‘Where did this row come from?’ and no one could answer. We build provenance into the extraction layer so that every data point carries its own passport—source, timestamp, and consent status—clearing compliance hurdles before they even appear.”

— Oleg Boyko, COO, GroupBWT
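To make the "passport" idea concrete, here is a minimal sketch of a record wrapper that attaches source, timestamp, and consent status at extraction time. The `Provenance` class, the `with_passport` helper, and the consent values are illustrative assumptions, not GroupBWT's actual schema; the content hash is an extra added here so later tampering can be detected.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Provenance:
    source: str          # system, site, or API the record came from
    collected_at: str    # ISO 8601 timestamp in UTC
    consent_status: str  # hypothetical values: "granted", "not_required", "unknown"


def with_passport(
    payload: dict,
    source: str,
    consent_status: str,
    collected_at: Optional[datetime] = None,
) -> dict:
    """Wrap a raw record with its provenance and a hash of its content."""
    timestamp = (collected_at or datetime.now(timezone.utc)).isoformat()
    provenance = Provenance(source, timestamp, consent_status)
    # Hash a canonical JSON form so the same payload always yields the same digest.
    canonical = json.dumps(payload, sort_keys=True).encode("utf-8")
    return {
        "payload": payload,
        "provenance": asdict(provenance),
        "payload_sha256": hashlib.sha256(canonical).hexdigest(),
    }


record = with_passport(
    {"event_id": "e-1", "amount": 42.5},
    source="payments_db",
    consent_status="granted",
)
print(record["provenance"])
```

With this shape, "Where did this row come from?" becomes a field lookup rather than an investigation.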

Use Case B: You Have a Goal — but No Data

A healthtech startup needed to build a multilingual chatbot to handle prescription questions.

But they had no prior data — no logs, no transcripts, no labeled inputs.

We started from zero:

- Scoped the chatbot’s language, legal, and risk requirements

- Collected domain-specific content from public health authorities

- Built a dataset from scratch: cleaned, labeled, and validated for inference stability

Result: Within 6 weeks, they had a production-ready dataset that passed internal QA and external legal review, and the model achieved 92.4% accuracy on launch across 3 languages.

If your data is scattered, unlabeled, or legally uncertain, don’t just clean it—make a dataset that reflects your risk thresholds and output targets.

Whatever your starting point, the result must be the same:

A dataset that performs in production and holds up in an audit.

The 5 Steps to Build a Dataset You Can Trust

Most teams don’t know how to build a dataset that survives both deployment and audit. That’s why we don’t hand you raw files—we engineer governed pipelines.

In 2025, building a dataset means aligning every decision with legal context, data lineage, and audit-ready quality—not just achieving model accuracy.

Projects fail when the dataset is messy, unlabeled, or simply doesn’t fit the job.

We build the entire system behind your dataset, so it’s clean, legal, and usable when it matters most.

Here’s how the process works when you partner with us:

Step 1: We Define What Data You Need


You tell us your goal. We ask the right questions:

- What decision will this data power?

- What region or use case will it serve?

- What laws apply?

What we do:

We plan the entire collection process around your real-world needs, so you’re not over-collecting or missing key signals.

Step 2: We Gather and Clean the Right Inputs

Even if you already have data, chances are it’s scattered, messy, or incomplete.

We help you:

- Merge and clean what you already have

- Spot what’s missing

- Fill in the gaps from reliable sources

You get:

Clean, structured data you can use in your product, model, or dashboard.

Step 3: We Label the Data (With Proof)

Good labels make your model smarter. Bad ones ruin it.

That’s why we label everything with care—and keep track of how it was done.

You get:

- Clear categories and tags

- Version history

- Quality checks and audit logs

So if anyone ever asks, “Where did this come from?”—you’ll have the answer.
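One common way to put a number on label quality is inter-annotator agreement. The sketch below computes Cohen's kappa for two annotators who labeled the same items. It is one possible quality check, chosen here for illustration; the article does not say which metric GroupBWT uses, and the sample labels are made up.

```python
from collections import Counter


def cohens_kappa(labels_a: list, labels_b: list) -> float:
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item list")
    n = len(labels_a)
    # Share of items where the two annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["fraud", "ok", "ok", "fraud", "ok", "ok"]
annotator_2 = ["fraud", "ok", "fraud", "fraud", "ok", "ok"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # → 0.667
```

Storing a score like this next to each label version gives the audit log a measurable quality signal instead of a bare "reviewed" flag.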

Step 4: We Track Every Change

As your system grows, your data evolves. We version every dataset like software, so nothing breaks without you knowing.

You get:

- Snapshots of each dataset version

- Reports on what changed and why

- The ability to roll back or retrain safely
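A minimal sketch of "versioning a dataset like software", assuming each row is a JSON-serializable dict with a unique `id` key (both assumptions for illustration). A content hash serves as the snapshot ID, and a diff reports what changed between two versions.

```python
import hashlib
import json


def snapshot_id(rows: list[dict]) -> str:
    """Content-based ID: the same rows in any order give the same ID."""
    canonical = sorted(json.dumps(row, sort_keys=True) for row in rows)
    digest = hashlib.sha256("\n".join(canonical).encode("utf-8")).hexdigest()
    return digest[:12]


def diff_snapshots(old: list[dict], new: list[dict], key: str = "id") -> dict:
    """Report which row keys were added, removed, or changed between versions."""
    old_by_key = {row[key]: row for row in old}
    new_by_key = {row[key]: row for row in new}
    shared = old_by_key.keys() & new_by_key.keys()
    return {
        "added": sorted(new_by_key.keys() - old_by_key.keys()),
        "removed": sorted(old_by_key.keys() - new_by_key.keys()),
        "changed": sorted(k for k in shared if old_by_key[k] != new_by_key[k]),
    }


v1 = [{"id": 1, "label": "ok"}, {"id": 2, "label": "fraud"}]
v2 = [{"id": 2, "label": "ok"}, {"id": 3, "label": "ok"}]
print(snapshot_id(v1), snapshot_id(v2))
print(diff_snapshots(v1, v2))  # → {'added': [3], 'removed': [1], 'changed': [2]}
```

Keeping old snapshots addressable by ID is what makes rollback and reproducible retraining possible; the "why" behind each change still has to be recorded by a person or a pipeline step.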

Step 5: We Build In Legal and Quality Safeguards

We don’t wait for audits or incidents. We design your pipeline to be ready from day one.

You get:

- Consent tracking

- Bias detection

- Drift monitoring

- Full documentation

So you’re not guessing—you’re covered.

When the data is wrong, everything breaks:

- Models hallucinate

- Products fail in new markets

- Legal teams raise red flags

- Retraining eats up months of the budget

But when it’s done right, your dataset becomes a strength, not a risk.

Build a Dataset That Meets 2025 Standards

In 2025, building a dataset is no longer a technical task—it’s a regulated process.

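The EU AI Act entered into force in August 2024, and its obligations have applied in stages since February 2025. For high-risk AI systems, Article 10 requires high-quality datasets for training, validation, and testing.

Non-compliance with these obligations triggers fines of up to €15 million or 3% of global annual turnover, rising to €35 million or 7% for prohibited practices.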

To comply, organizations must:

- Conduct bias audits across all demographic groups by validating sample balance, measuring representation gaps, and testing for outcome disparities across protected attributes

- Track data lineage from source to deployment by recording ingestion steps, transformation logic, labeling versions, and API access logs with time-stamped identifiers

- Monitor for statistical drift and model degradation by benchmarking outputs against a fixed baseline, flagging anomalies, and retraining on fresh, validated samples

- Maintain transparent, auditable governance processes by documenting roles, decisions, approval checkpoints, and linking every dataset to its legal and operational metadata
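The "representation gaps" part of the first item above can be sketched as follows. This assumes you already have reference shares for each group, for example from census or customer-base figures; the `language` attribute, the shares, and the 5-point tolerance are all hypothetical. It covers only sample balance, not outcome disparities.

```python
from collections import Counter


def representation_gaps(records: list[dict], attribute: str, reference_shares: dict) -> dict:
    """Dataset share minus reference share for each group (negative = under-represented)."""
    if not records:
        raise ValueError("records must not be empty")
    counts = Counter(record[attribute] for record in records)
    total = len(records)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


def underrepresented(gaps: dict, tolerance: float = 0.05) -> list:
    """Groups whose dataset share falls short of the reference by more than the tolerance."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Hypothetical sample: 8 English and 2 French records, no German ones.
sample = [{"language": "en"}] * 8 + [{"language": "fr"}] * 2
reference = {"en": 0.6, "fr": 0.3, "de": 0.1}

gaps = representation_gaps(sample, "language", reference)
print(underrepresented(gaps))  # → ['de', 'fr']
```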

According to the European Commission’s official commentary, this requirement means that every dataset must be “complete, relevant, representative, and free from errors or bias.”

Executive Ownership Defines Governance Success

IBM’s 2025 study on AI governance reports that 28% of companies now assign AI accountability to the CEO—a figure that drops to 17% among enterprises with more than 10,000 employees.

Many governance frameworks fail because no one defines how to build a dataset that meets both operational and regulatory expectations. Instead of delegating to data teams, leadership must embed traceability and quality at the strategic level.

Successful governance frameworks in 2025 are:

- Backed by C-level mandates and budgets that authorize governance protocols, allocate funding for risk controls, and escalate accountability for model outcomes

- Operated by cross-functional teams with legal, data, product, and ethics leaders co-owning decisions, compliance reviews, and risk-response workflows

- Documented across every lifecycle stage by mapping governance checkpoints from data acquisition and model deployment to decommissioning and audit retention

As quoted by IBM:

“The value of AI depends on the quality of data. To realize and trust that value, we need to understand where our data comes from and if it can be used, legally. That’s why the members of the Data & Trust Alliance created a new business practice through cross-industry data provenance standards.”

Saira Jesani, Executive Director, Data & Trust Alliance

Poor Governance Blocks $4.4 Trillion in Value

According to McKinsey’s 2025 AI Report, enterprises stand to unlock $4.4 trillion in productivity gains through AI—but most will miss that potential because their datasets are incomplete, inconsistent, or unverifiable.

Harvard Business Review emphasizes that generative AI has forced a strategic re-evaluation of enterprise data.

What Enterprises Still Get Wrong About Dataset Quality

Too many teams try to make a dataset by copying open benchmarks—only to discover they can’t validate or govern them later.

The 2025 DataCamp AI Literacy Survey shows that while AI adoption is rising, governance and quality gaps persist:

- 80% of companies say they require high-quality data but often lack operational definitions or validation logic

- 74% lack formal data governance frameworks, leaving compliance and quality controls fragmented or undocumented

- 69% have no consistent process to validate training data inputs, increasing the risk of hallucinations, bias drift, and audit failure

Their report defines data accuracy as “the degree to which data reflects real-world values, events, or objects.” If you’re still asking how to make a dataset fit for AI, start by tracing every input back to the moment of extraction.

Before you create datasets for your next use case, define what “quality” means for the model, user, and jurisdiction you’re targeting.

Why Bias Makes Your Dataset Legally Unsafe

Even technically sound AI systems fail when trained on flawed data. The root problem isn’t just fairness—it’s operational fragility. Bias in training data destabilizes systems from ingestion to output, exposing businesses to prediction errors, compliance risks, and retraining loops. And it starts the moment you create datasets without traceability, demographic validation, or labeling standards.

How Dataset Bias Breaks Data Pipelines

Biased data doesn’t sit still—it spreads. It undermines every layer of your AI stack:

- During ingestion: Filtering logic misses regional or multilingual signals.

- During training: Models overfit to dominant inputs, missing edge cases.

- During inference: Outputs skew by gender, geography, or socioeconomics—undermining trust.

These failures don’t just appear in dashboards. They derail launches. We’ve seen personalization systems that reinforce gender stereotypes, pricing engines that misrank inventory, and fraud systems that ignore valid cross-border behavior.

Even advanced LLMs inherit these issues. Web scraping with LLMs amplifies existing dataset errors rather than correcting them. If your pipeline can’t validate what it collects, models will default to dominant logic, suppressing underrepresented signals by design.

Avoid Compliance Failures From Dataset Bias

The regulatory landscape now treats dataset bias as a legal liability. Under frameworks like the EU AI Act and GDPR, organizations must prove:

- How data was collected

- Whether consent was tracked

- How the demographic balance was verified

Poor traceability isn’t a paperwork issue—it breaks compliance by default. If your system can’t show how a dataset was labeled, filtered, and versioned, it can’t be governed. That’s why governed pipelines, not static dumps, are now the standard.

As outlined in our big data pipeline architecture, governed pipelines must include schema control, consent metadata, and regional routing—well before model training begins.

And in GDPR web scraping, we explain how dataset bias intersects with privacy: overcollection or exclusion of protected groups can breach jurisdictional laws, even when the data is public.

Many failures go unnoticed until it’s too late, when hallucinations, drift, or flagged audits appear in production.

If you’ve hit retraining loops, unexplained model regressions, or regulatory warnings, bias is likely the root cause.

What It Costs to Label Data Properly

The global data collection and labeling industry is expected to grow from $3.8B today to $17.1B by 2030 at a ~28% CAGR. High-quality labeled data is no longer a cost center. It’s the backbone of reliable models. Poor labeling translates directly into model failures, compliance gaps, and hidden retraining costs.

Cost & Complexity: Know Your Label Gaps

- Image/video labeling: $X million+ to annotate 100K images with semantic segmentation—high complexity, high stakes.

- Object detection tags: $0.03–$1 per label; costs can balloon when scale meets quality.

- Video annotation: ~800 human hours per footage hour—a major pipeline bottleneck.

(These numbers come from market analysis and reflect rising labor-intensive efforts.)
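For budgeting, the ranges quoted above turn into a rough estimate with simple arithmetic. The helper names and example volumes below are illustrative; only the $0.03–$1 per-label range and the ~800 hours per footage hour come from the figures above.

```python
def label_cost_range(n_labels: int, low: float = 0.03, high: float = 1.00) -> tuple:
    """Lower and upper cost bounds in dollars for a given number of labels."""
    return (round(n_labels * low, 2), round(n_labels * high, 2))


def video_annotation_hours(footage_hours: float, hours_per_footage_hour: float = 800) -> float:
    """Human hours needed to annotate the given amount of footage."""
    return footage_hours * hours_per_footage_hour


print(label_cost_range(100_000))   # → (3000.0, 100000.0)
print(video_annotation_hours(10))  # → 8000
```

The spread between the bounds is the point: at 100,000 labels, the per-label price alone moves the budget by more than 30x, before any rework for quality.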

Modernizing Pipelines: MLOps Best Practices

Google’s MLOps frameworks highlight critical automated steps for dependable data pipelines:

1. Data validation: Detect schema changes and anomalies before they break downstream flows.

2. Drift monitoring: Watch if input data distribution shifts compared to training data.

3. CI/CD for datasets: Treat data like code—automated tests, versioning, and approval gates.

4. Metadata & lineage tracking: Tag every dataset snapshot to ensure reproducibility and audit readiness.
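Step 1 in the list above can be illustrated with a small schema check that runs before rows reach downstream jobs. This is a plain-Python sketch, not Google's tooling; `EXPECTED_SCHEMA` and its field names are hypothetical.

```python
# Hypothetical expected schema: field name -> required Python type.
EXPECTED_SCHEMA = {"event_id": str, "amount": float, "currency": str}


def schema_issues(row: dict, expected: dict = EXPECTED_SCHEMA) -> list[str]:
    """List every way a row deviates from the expected schema."""
    issues = []
    for name, expected_type in expected.items():
        if name not in row:
            issues.append(f"missing field: {name}")
        elif not isinstance(row[name], expected_type):
            issues.append(
                f"wrong type for {name}: expected {expected_type.__name__}, "
                f"got {type(row[name]).__name__}"
            )
    # Fields that appear upstream without warning are a common sign of schema drift.
    for name in sorted(row.keys() - expected.keys()):
        issues.append(f"unexpected field: {name}")
    return issues


good_row = {"event_id": "e-1", "amount": 9.5, "currency": "EUR"}
bad_row = {"event_id": "e-2", "amount": "9.5", "price": 1}

print(schema_issues(good_row))  # → []
print(schema_issues(bad_row))
```

In a CI-style setup, a non-empty issue list would fail the pipeline run, which is the "approval gate" from step 3 applied to data.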

DataRobot and BigQuery ML have built-in drift tools (e.g. ML.VALIDATE_DATA_SKEW) to alert teams when production data drifts from training baselines.
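To show what such a drift check measures, here is a minimal sketch of the Population Stability Index (PSI), one widely used drift statistic. It is not the BigQuery ML or DataRobot implementation. The bin count and the sample data are assumptions; a commonly cited rule of thumb treats PSI above roughly 0.25 as a significant shift, but thresholds should be tuned per feature.

```python
import math


def psi(baseline: list, current: list, bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a training baseline and production values."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width when the baseline is constant

    def shares(values: list) -> list:
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            # Values outside the baseline range are clamped into the edge bins.
            counts[min(max(index, 0), bins - 1)] += 1
        # Floor each share at eps so empty bins do not cause log(0) or division by zero.
        return [max(count / len(values), eps) for count in counts]

    base_shares, cur_shares = shares(baseline), shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base_shares, cur_shares))


training = list(range(100))                # hypothetical training-time feature values
production = [v + 30 for v in training]    # the same feature after a shift

print(psi(training, training))             # → 0.0
print(psi(training, production) > 0.25)    # → True
```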

Why Your Dataset Is Now a Core Business System

CB Insights and other analysts now view datasets as critical infrastructure, just like APIs and servers.

- Scarcity of high-quality crawl data (+20–33%) raises the bar for internal pipelines.

- Governance isn’t optional—it’s enforced by auditors and regulators.

- Organizations that treat datasets as assets gain measurable outcomes:

  - 25% accuracy boost

  - 40% faster compliance prep

  - 90% time saved on retraining cycles

These metrics come from a GroupBWT client for whom we implemented traceable dataset views and pipeline governance protocols.

The real cost of building a dataset isn’t in storage—it’s in ensuring label integrity, jurisdictional compliance, and retraining readiness.

What a Bulletproof Dataset Solves—By Industry

Below are 5 anonymized examples from real GroupBWT projects—each aligned with a specific industry. They show what happens when dataset infrastructure is treated not as a file, but as a system.

Legal Firms: Clause-Level Dataset for AI Contract Review

Client: A global legaltech company building multilingual LLMs for contract analysis

Problem: Generic clause datasets missed minority jurisdiction logic and subtle legal obligations

Fix: We built a labeled dataset of 50K+ multilingual contracts, tagging rare clauses and legal triggers by region

Impact: Accuracy on low-frequency clauses increased by 35%, enabling safe use in regulated markets

eCommerce: Repricing Model Failing on Layout Shifts

Client: A multi-market eCommerce platform monitoring competitor pricing

Problem: Their pricing model broke weekly due to layout and schema changes across storefronts

Fix: We versioned all inputs, mapped DOM shifts, and rebuilt a self-healing scraping pipeline with labeled price fields

Impact: Model uptime hit 99.2% with no retraining for 4+ months

Pharma: Hallucinating Chatbot for Prescription Instructions

Client: A pharmaceutical SaaS platform expanding into multilingual support

Problem: Chatbot outputs were unreliable for dosage guidance across EU languages

Fix: We built a compliant training dataset using public medical guidelines, labeled for age, region, and instruction

Impact: Hallucination rates dropped by 86%, and the model passed QA in 2 regulatory environments

Transportation & Logistics: Inaccurate ETAs for Cross-Border Shipments

Client: A logistics company forecasting delivery ETAs across Europe and LATAM

Problem: Their ML system missed customs delays and returned inaccurate predictions

Fix: We enriched their delivery dataset with labeled customs events, route delays, and seasonal hold patterns

Impact: Cross-border ETA error reduced by 20%, improving SLA performance

Banking & Finance: KYC System Rejecting Valid IDs

Client: A digital bank onboarding clients in multilingual and immigrant-heavy regions

Problem: Their KYC engine falsely rejected edge-case documents and foreign IDs

Fix: We expanded the dataset with labeled images of valid IDs, stamps, and language variants, versioned by country

Impact: Approval accuracy rose 35%, while false rejection rates dropped 68%

Ready to Create Datasets That Last?

Most teams don’t fail on models. They fail on silent errors in unlabeled text, drifted inputs, or undocumented sourcing.

We build a dataset infrastructure that:

- Starts from compliant, purpose-driven sources

- Labels with audit-grade clarity

- Detects drift before it breaks your system

- Documents every step—for legal, ops, and retraining

Book a scoping call to define your next dataset.

FAQ

- How often should production datasets be updated?

That depends on what changes faster: your input streams or your business rules.

If your data reflects real-world behavior (users, markets, documents), you should review it monthly for drift, legal shifts, or model performance drops.

Creating and managing datasets is not a one-off task. It’s an evolving system. We version every release so you can retrain, revert, or redeploy without data debt.

- What if my use case changed after I created the dataset?

This happens more often than teams admit.

A dataset built for classification can’t always support ranking. One optimized for English might fail in French.

That’s why we build traceable, modular pipelines, so you don’t have to rebuild everything from scratch. You can adapt your existing datasets to new goals with minimal relabeling or extension.

- How to create a dataset that scales without breaking governance?

Start by decoupling scale from chaos.

Scaling labeling ≠ hiring more annotators. It means:

- Automating low-risk tags

- Embedding review checkpoints

- Logging every change, every label, every version

We treat data pipelines like CI/CD: versioned, tested, and explainable.

That’s how to create a dataset that grows without losing control.
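The three points in this answer can be combined in one routing function: confident, low-risk predictions are accepted automatically, everything else goes to a human reviewer, and every decision is logged. The label names, the 0.95 threshold, and the in-memory log are hypothetical placeholders.

```python
from datetime import datetime, timezone

# Hypothetical labels that always require a human checkpoint, however confident the model is.
HIGH_RISK_LABELS = {"dosage", "legal_obligation"}


def route_label(item_id: str, label: str, confidence: float,
                change_log: list, threshold: float = 0.95) -> str:
    """Decide whether a machine-suggested label can be auto-accepted, and log the decision."""
    needs_review = label in HIGH_RISK_LABELS or confidence < threshold
    decision = "human_review" if needs_review else "auto_accept"
    change_log.append({
        "item_id": item_id,
        "label": label,
        "confidence": confidence,
        "decision": decision,
        "logged_at": datetime.now(timezone.utc).isoformat(),
    })
    return decision


log = []
print(route_label("doc-1", "greeting", 0.99, log))  # → auto_accept
print(route_label("doc-2", "dosage", 0.99, log))    # → human_review
print(route_label("doc-3", "greeting", 0.60, log))  # → human_review
```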

- What’s the real risk in multilingual or geo-specific datasets?

High. Because translation ≠ context.

A model trained on one region’s data may hallucinate or misclassify elsewhere.

When creating datasets for multilingual inference, we validate labels per locale, track jurisdiction-specific features, and apply demographic audits. Otherwise, outputs break silently, often where it hurts most.

- How to create dataset infrastructure that adapts to compliance shifts?

You don’t hardcode laws. You abstract them.

We build pipelines that store consent metadata, apply jurisdictional filters, and let you reconfigure rules as legal requirements evolve.

Whether it’s GDPR, the EU AI Act, or local data sovereignty laws, compliance becomes a switch, not a rewrite.
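A minimal sketch of "abstracting" rules instead of hardcoding them: the filter logic stays fixed, while per-jurisdiction requirements live in a configuration table that can be edited when requirements change. The region names, rule fields, and values are placeholders and do not reflect any actual legal threshold.

```python
# Hypothetical rule table; in practice this would be loaded from reviewed configuration.
JURISDICTION_RULES = {
    "region_a": {"requires_consent": True, "max_age_days": 365},
    "region_b": {"requires_consent": False, "max_age_days": 730},
}


def is_usable(record: dict, rules: dict = JURISDICTION_RULES) -> bool:
    """Apply the configured rules for the record's jurisdiction."""
    rule = rules.get(record["jurisdiction"])
    if rule is None:
        return False  # no rule defined for this jurisdiction: exclude by default
    if rule["requires_consent"] and record.get("consent_status") != "granted":
        return False
    return record["age_days"] <= rule["max_age_days"]


records = [
    {"jurisdiction": "region_a", "consent_status": "granted", "age_days": 100},
    {"jurisdiction": "region_a", "consent_status": "unknown", "age_days": 100},
    {"jurisdiction": "region_b", "consent_status": "unknown", "age_days": 800},
    {"jurisdiction": "region_c", "consent_status": "granted", "age_days": 1},
]
print([is_usable(r) for r in records])  # → [True, False, False, False]
```

Tightening a retention limit then means changing one value in the table and re-running the filter, with the old and new table versions kept for the audit trail.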