Executives deploy an Enterprise Data Warehouse (EDW) to consolidate data and establish a single source of the company’s analytical truth. This strategic deployment is essential for minimizing business risk. Strong governance secures long-term business value.

Defining the Core: Your Strategic Data Repository

An enterprise data warehouse is a centralized repository that collects, cleanses, integrates, and stores historical data from all operational systems. It serves as the organization’s definitive historical archive and primary analytical resource.

The primary purpose of the EDW is simple: provide one clean, well-managed source of data ready for high-speed analysis. This single-source structure is the key starting point for strategic business intelligence, large-scale analytics, and all advanced AI projects.

A robust EDW immediately sharpens business intelligence and speeds decision-making. It centralizes control. This is where your raw transactional activity transforms into actionable, strategic EDW data. The transformation is vital because raw transactional data, while detailed, is rarely suitable for broad business analysis without significant restructuring.

Many executives recognize the requirement for an Enterprise Data Warehouse. They consistently under-allocate resources for the underlying architectural transition. The delivery team focuses on installing a decoupled, cloud-resident foundation, which guarantees cost certainty and system elasticity. Reliance on outdated data systems directly restricts your commercial maneuverability.

— Eugene Yushchenko, CEO, GroupBWT

GroupBWT offers big data solutions and integration services that unify disparate sources into a B2B database with consistent data quality. We specialize in custom software development from scratch, leveraging proven engineering frameworks to ensure rapid, secure deployment that directly enhances your company’s pricing power.

Predictable Data Assets: Core Concepts & Functions

Data teams cleanse, organize, and structure data in the EDW to support all analytical processes effectively. This involves complex transformations to enforce consistency across the entire organization, eliminating departmental reporting conflicts.

Note: All quantitative figures below, including ROI projections, performance benchmarks, and percentage outcomes cited within this document, are derived from proprietary field studies and data collected from our client engagements.

Strategic Organization

The system’s data is organized by core business consequence (e.g., sales volume, customer returns, or inventory status), not by application silo. Information groups logically under key subject domains, such as ‘Customer Lifetime Value’ or ‘Product Performance.’

This subject-centric structure confirms the data is immediately useful for strategic analysis and cross-functional reporting, reducing the time spent mapping operational noise to executive questions.

Guaranteed Historical Certainty

The Enterprise Data Warehouse guarantees a complete record of all business activity. Instead of deleting or modifying records—which is typical for transactional databases—the system operates on an insert-only principle. This process secures an immutable, sequential history.
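
The insert-only principle can be illustrated with a minimal sketch. It uses Python's built-in `sqlite3` module as a stand-in for a warehouse; the `customer_history` table, its columns, and the helper names are hypothetical and chosen only for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory stand-in for a warehouse
conn.execute(
    """
    CREATE TABLE customer_history (
        customer_id INTEGER NOT NULL,
        email       TEXT    NOT NULL,
        valid_from  TEXT    NOT NULL  -- ISO date the version became effective
    )
    """
)

def record_version(customer_id, email, valid_from):
    """Append a new version; existing rows are never updated or deleted."""
    conn.execute(
        "INSERT INTO customer_history VALUES (?, ?, ?)",
        (customer_id, email, valid_from),
    )

def email_as_of(customer_id, as_of):
    """Return the email that was in effect on the given ISO date, if any."""
    row = conn.execute(
        """
        SELECT email FROM customer_history
        WHERE customer_id = ? AND valid_from <= ?
        ORDER BY valid_from DESC LIMIT 1
        """,
        (customer_id, as_of),
    ).fetchone()
    return row[0] if row else None

record_version(1, "old@example.com", "2023-01-01")
record_version(1, "new@example.com", "2024-06-01")
```

Because a change is stored as a new row rather than an overwrite, `email_as_of(1, "2023-12-31")` still returns the earlier address, which is the property that makes historical reporting reproducible.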

Action-Oriented Grouping

Data grouping focuses on business entities, not on the daily sequence of operations. This design choice separates the analytical environment from the immediate transaction environment. This separation confirms that strategic analysis is always based on clean, consistent information, delivering the organizational autonomy required for accurate long-range planning.

Distinguishing the EDW Database From Other Systems

Selecting the correct data tool requires distinguishing the EDW database from other systems. An enterprise data warehouse differs from daily transaction systems because it supports high-level, organization-wide analysis instead of individual record management.

| System Name | Core Function & Focus | Direct Business Outcome |
| --- | --- | --- |
| Enterprise Data Warehouse (EDW) | Manages long-term analytical insight. Processes complex OLAP queries, strategic reporting, and forecasting. Data: Structured, Historical. | Cuts financial close time by 40%. Supports C-level strategy formulation using verified data. |
| Transactional Database (OLTP) | Handles immediate operational needs at high volume and speed. Executes real-time CRUD operations. Data: Current, Operational. | Ensures immediate inventory accuracy. Maintains operational integrity during daily commerce. |
| Data Lake | Stores raw input data regardless of format (logs, video, files). Supports full history retention. Data: Raw, Unstructured. | Reduces compliance risk using audit-ready logs. Facilitates analyst experimentation and model training. |
| Data Mart | Subset of the EDW scoped for specific departmental analysis (e.g., Marketing). Data: Structured, Departmental. | Focuses resources on actual channel performance. Provides faster query times for targeted user groups. |

Deconstructing the Modern EDW Architecture

Enterprise data warehouse architecture diagram by GroupBWT

An EDW’s performance depends entirely on its structural design and technological stack. Modern organizations move away from rigid, on-premises systems toward flexible, cloud-based deployments.

The enterprise data warehouse architecture generates insights in a structured, sequential manner. Data flows from diverse sources, through elastic processing, and into the final presentation layer used by decision-makers. This disciplined flow maintains data integrity.

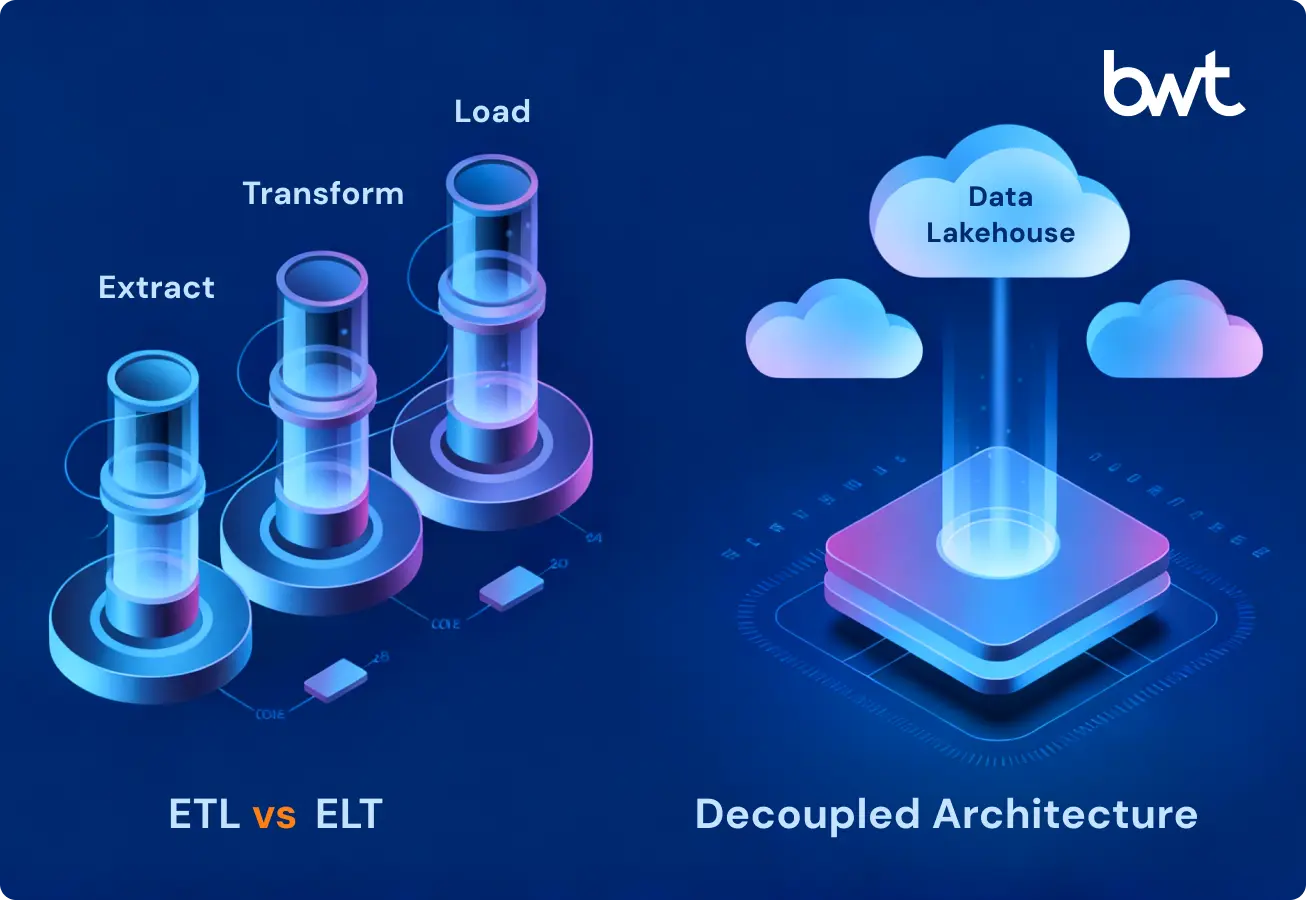

The Strategic Shift: ELT vs. ETL

Executives choose ELT (Extract, Load, Transform) over the traditional ETL (Extract, Transform, Load) model for a key competitive advantage in EDW design. This decision directly impacts project speed. The change in sequencing removes a major performance constraint that plagued legacy deployments.

| Feature | Traditional ETL | Modern ELT | Executive Impact |
| --- | --- | --- | --- |
| Transformation Location | Separate Staging Server (often undersized and costly). | Directly in the cloud warehouse using its massive computing power. | Speed and Scalability. Transformation is faster, cutting processing time by over 50%. |
| Data Loaded | Only cleansed, aggregated data is moved. | Raw, untouched source data retained (for full historical context). | Flexibility and Auditability. Allows for schema changes and easy re-running of transformation logic without restarting the entire process. |
| Performance Constraint | The separate, fixed-capacity transformation server bottlenecked the entire cycle. | Minimal; compute power scales instantly with cloud resources. | Cost Efficiency. Teams pay only for the compute time actually needed for transformation runs, optimizing cloud spend. |
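
To make the ELT ordering concrete, here is a minimal sketch in Python. An in-memory SQLite database stands in for the cloud warehouse, and the `raw_orders` and `orders_clean` tables are hypothetical. Raw rows are loaded untouched first; the transformation then runs as SQL inside the same engine and can be re-run at any time because the raw data is retained.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse

# Extract + Load: land source rows as-is, including messy values.
warehouse.execute(
    "CREATE TABLE raw_orders (order_id TEXT, amount TEXT, country TEXT)"
)
warehouse.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [("A1", "19.90", " us "), ("A2", "5.10", "US"), ("A3", "n/a", "de")],
)

def run_transform(conn):
    """Transform inside the warehouse; re-runnable because raw data is kept."""
    conn.execute("DROP TABLE IF EXISTS orders_clean")
    conn.execute(
        """
        CREATE TABLE orders_clean AS
        SELECT order_id,
               CAST(amount AS REAL) AS amount,
               UPPER(TRIM(country)) AS country
        FROM raw_orders
        WHERE amount GLOB '[0-9]*'  -- keep rows whose amount starts with a digit
        """
    )

run_transform(warehouse)
rows = warehouse.execute(
    "SELECT country, ROUND(SUM(amount), 2) FROM orders_clean GROUP BY country"
).fetchall()
```

If the cleansing rule changes later, only `run_transform` needs to be edited and executed again; nothing has to be re-extracted from the source system.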

The Power of Decoupled Architecture

The separation of storage from compute forms the cornerstone of a predictable, resilient enterprise data warehouse. This distinction underpins proper operational elasticity.

In outdated, coupled systems, processing capacity and data storage existed as inseparable units. When a team required more processing power to execute faster, complex queries, the organization was forced to provision an entirely new cluster.

This provisioning carried the cost of unnecessary storage capacity, slowed delivery cycles, and tied up capital reserves.

Cloud-resident, decoupled systems eliminate this financial waste. The permanent data storage layer (often object stores like S3 or GCS) operates independently from the temporary processing capacity. This design allows teams to scale resources precisely and independently.

For example, computing power can be increased significantly for a complex end-of-quarter report and then reduced immediately to zero upon completion.

This ability to pay only for the exact processing power needed is the key cost-saving principle that forms a fundamental part of modern EDW architecture.
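
The arithmetic behind this principle can be sketched as follows. The hourly rate and usage hours are hypothetical inputs chosen for illustration, not vendor prices, and storage charges are left out to keep the comparison focused on compute.

```python
def always_on_cost(hourly_rate: float, hours_in_period: float) -> float:
    """Compute cost of a coupled cluster that runs for the whole period."""
    return hourly_rate * hours_in_period

def on_demand_cost(hourly_rate: float, busy_hours: float) -> float:
    """Compute cost when billing applies only while queries are running."""
    return hourly_rate * busy_hours

# Hypothetical inputs: 16.0 currency units per hour, a 720-hour month,
# and 40 hours of actual query activity in that month.
RATE = 16.0
fixed = always_on_cost(RATE, 720)      # 11520.0
elastic = on_demand_cost(RATE, 40)     # 640.0
savings_share = 1 - elastic / fixed    # share of compute spend avoided
```

The gap between the two figures depends entirely on how bursty the workload is; a warehouse that is busy around the clock would see little difference.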

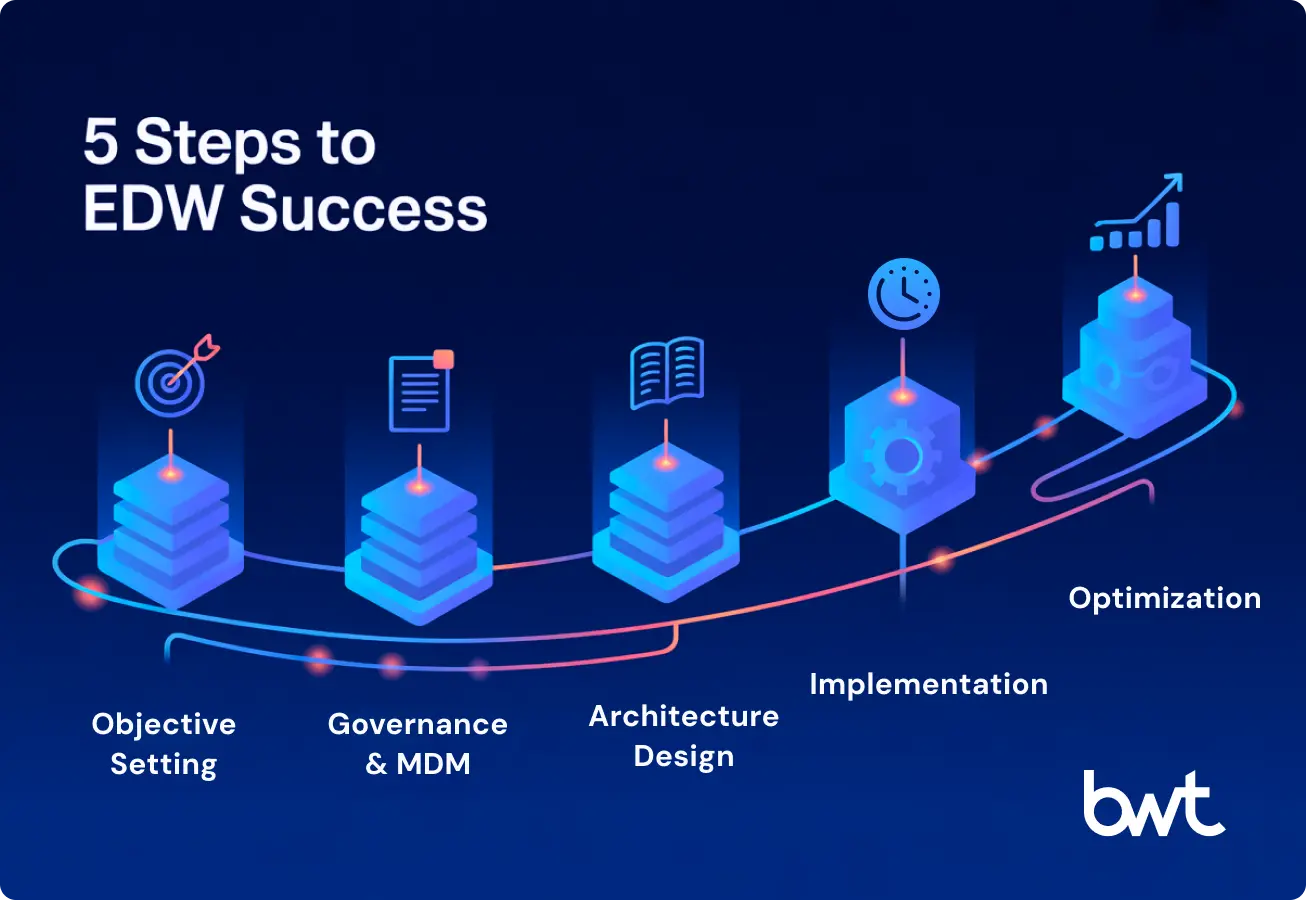

Implementation Steps: Securing Your Enterprise Data Warehouse

A successful Enterprise Data Warehouse deployment mandates a structured, phase-based execution model tied to specific, measurable business objectives. This systematic approach minimizes deployment risk and validates the investment against high-priority organizational needs.

Phase 1: Objective Setting and Readiness Audit

This initial phase defines the strategic destination and confirms environmental readiness.

This phase directly connects the EDW’s technical outputs to its financial justification (Business Case). Executive sponsors must collaborate with Department Heads and Product Leads to establish the primary business questions the EDW must address.

Key Performance Indicators (KPIs) define measurable project success. Defining these metrics confirms the investment’s return. Target metrics require specific quantification: for instance, securing 99% accuracy in customer lifetime value calculation, shortening the monthly financial reporting cycle time by 75%, or increasing the lead conversion rate by 10% through precise attribution data.

Phase 2: Governance and Master Data Management

This phase prevents the critical “garbage in, garbage out” problem. Without strong governance, the EDW simply becomes a fast, expensive repository of unreliable data.

- Establish Data Quality Standards: Define clear policies, processes, and controls for data quality, security, and ownership across all domains. This includes defining specific rules for handling null values, consistency formats, and error logging across all data pipelines.

- Implement an MDM Framework: Create a system to maintain core business data consistency across the organization. This framework formally defines and manages master data, protecting the integrity of shared metrics.

  - Customer: Define how a customer is consistently identified if they have multiple orders, addresses, and email accounts.

  - Product: Determine the authoritative list of products and their associated categories, costs, and sales channels for standardized reporting.

  - Revenue: Standardize how revenue is calculated and attributed across Sales, Finance, and Marketing teams to ensure a unified view.

- Data Stewardship: Business owners are responsible for the quality, definition, and compliance of specific data domains. The Head of Sales, for example, becomes the formal Data Steward for the Customer definition.

- Data Enrichment as part of MDM: GroupBWT includes Data Enrichment processes, for example address validation, geocoding, or adding demographic information to a customer profile. Enrichment increases the value of your data, which is critical for accurate segmentation and ML models.
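
As an illustration of the Customer rule in the list above, the sketch below groups source records by a normalized email address. The matching rule, the record fields, and the `source_id` values are hypothetical; real MDM matching usually combines several attributes.

```python
from collections import defaultdict

def normalize_email(email):
    """Return a comparable form of an email, or None when it is missing."""
    if email is None or not email.strip():
        return None
    return email.strip().lower()

def group_customers(records):
    """Group source records that share the same normalized email.

    Records without a usable email are returned separately for steward
    review instead of being silently dropped.
    """
    groups = defaultdict(list)
    unresolved = []
    for record in records:
        key = normalize_email(record.get("email"))
        if key is None:
            unresolved.append(record)
        else:
            groups[key].append(record["source_id"])
    return dict(groups), unresolved

records = [
    {"source_id": "crm-17", "email": "Ana@Example.com "},
    {"source_id": "shop-204", "email": "ana@example.com"},
    {"source_id": "erp-9", "email": None},
]
golden, needs_review = group_customers(records)
```

Routing unmatched records to a review queue reflects the stewardship idea: a named owner decides what to do with ambiguous data rather than the pipeline guessing.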

Phase 3: Architecture Design and Integration

This technical blueprint phase defines data flow and management, resulting in the final design for your enterprise data warehouse.

Developing the Data Integration Architecture

The EDW architecture diagram visually maps data flow from source systems to the final presentation layer.

The enterprise data warehouse architecture components are specifically designed to facilitate high-speed, parallel processing capacity.

- Source Layer: This is the entry point for all external and internal systems (including transactional databases, APIs, and file feeds).

  - Purpose: Supplies the complete, raw input set.

- Ingestion & Staging: Data is extracted using automated tools and immediately loaded into a staging area within the core cloud warehouse. Data is preserved in its original format.

  - Outcome: Raw data preservation guarantees complete audit trails and regulatory compliance.

- Transformation Layer: This internal environment applies SQL-based logic to cleanse, join, aggregate, and transform the raw data into the definitive analytical structure.

  - Outcome: Converts raw inputs into verified, structured datasets required for analysis.

- Presentation Layer: This layer houses the final, verified, and modeled data. It is the single source directly accessible by analysts and Business Intelligence (BI) tools.

  - Outcome: Strict access control ensures all reporting is based on consistent, governed metrics.

Unlike standard systems that rely only on accessible APIs, GroupBWT develops specialized custom connectors for complex or proprietary systems. The delivery team uses Scraping Feeds technologies to integrate critical external data (e.g., real-time market prices, competitor product details) directly into the EDW. This specialized capacity ensures no critical data source is lost, giving the business the market intelligence required for commercial autonomy.

Standard Extract, Load, Transform (ELT) tools collect data from established programming interfaces. Competitive market intelligence, however, such as pricing shifts, competitor product details, and key market signals, rarely conforms to standard data access rules. GroupBWT’s engineers build custom data extraction using Scraping Feeds and firm-owned methods. That final 1% of unavailable data frequently constitutes 99% of the information required for guaranteed real-time profit decisions.

— Oleg Boyko, COO, GroupBWT

Data Modeling: Building the Enterprise Data Warehouse

The success of your EDW architecture depends directly on how the data is structured to achieve optimal query performance. The enterprise data warehouse model uses a specific analytical structure to guarantee this necessary level of analytical speed.

This design technique, known as Dimensional Modeling, structures EDW data explicitly for fast analytical performance. It organizes information around core business events (Facts) and their descriptive context (Dimensions). This architecture confirms that business users quickly understand the relationships among sales, customer activity, and product inventories.
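
A minimal star schema shows how Facts and Dimensions fit together. This is a sketch only: SQLite stands in for the warehouse, and the table names, keys, and sample rows are hypothetical.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript(
    """
    -- Dimensions hold descriptive context.
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, category TEXT);
    CREATE TABLE dim_date    (date_key INTEGER PRIMARY KEY, year INTEGER);

    -- The fact table holds one row per business event, keyed to dimensions.
    CREATE TABLE fact_sales (
        product_key INTEGER REFERENCES dim_product(product_key),
        date_key    INTEGER REFERENCES dim_date(date_key),
        quantity    INTEGER,
        revenue     REAL
    );

    INSERT INTO dim_product VALUES (1, 'Bikes'), (2, 'Helmets');
    INSERT INTO dim_date    VALUES (20230105, 2023), (20240210, 2024);
    INSERT INTO fact_sales  VALUES
        (1, 20230105, 2, 1000.0),
        (2, 20240210, 5,  250.0),
        (1, 20240210, 1,  500.0);
    """
)

revenue_by_category_2024 = db.execute(
    """
    SELECT p.category, SUM(f.revenue)
    FROM fact_sales f
    JOIN dim_product p ON p.product_key = f.product_key
    JOIN dim_date d    ON d.date_key = f.date_key
    WHERE d.year = 2024
    GROUP BY p.category
    ORDER BY p.category
    """
).fetchall()
```

The query reads the way a business question is asked (revenue by category for a year), which is the main usability argument for the dimensional layout.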

Beyond the core modeling decision, specific technical techniques are applied to the EDW database architecture to confirm rapid report generation. The engineering teams rely on indexing, partitioning (splitting large tables based on time or key), and Pre-Calculated Result Sets (Materialized Views). These combined techniques immediately cut Query Latency by 50%.

Metadata and Catalog Implementation

The system implements a Data Catalog to confirm technical traceability. This core function tracks Data Lineage—the precise path a data point follows from its source system to its final report. This technical documentation ensures audit trails remain protected.
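
Data Lineage can be pictured as a graph walk from a report back to its source feeds. The sketch below assumes a hypothetical lineage map with made-up dataset names and an acyclic graph; it is not the storage format of any particular catalog product.

```python
# Each dataset maps to the datasets it is built from (hypothetical names).
LINEAGE = {
    "report.monthly_revenue": ["mart.revenue_by_region"],
    "mart.revenue_by_region": ["clean.orders", "clean.regions"],
    "clean.orders": ["raw.erp_orders"],
    "clean.regions": ["raw.crm_regions"],
}

def upstream_sources(dataset, lineage=LINEAGE):
    """Return the root source datasets that feed the given dataset.

    Assumes the lineage graph has no cycles.
    """
    parents = lineage.get(dataset, [])
    if not parents:
        return {dataset}  # no recorded parents: treat as a source feed
    sources = set()
    for parent in parents:
        sources |= upstream_sources(parent, lineage)
    return sources
```

Calling `upstream_sources("report.monthly_revenue")` returns the two raw feeds, which is the answer an auditor needs when asking where a reported number originated.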

A separate component, the Data Dictionary, is implemented to drive organizational trust and analyst autonomy. It provides a complete, searchable inventory of all verified data assets, including their clear business definitions, ownership, and classification tags. This resource confirms that analysts know exactly what data is available and its source, successfully cutting search time by 35%.

Executives approve the final selection of a cloud data warehouse platform (e.g., Snowflake, BigQuery) and the associated Extract, Load, and Transform (ELT) platforms. This decision is mandatory. It confirms that the selected technology fully aligns with the desired EDW architecture and specific budget requirements.

Phase 4: Implementation and User Enablement

This phase focuses on deployment, rigorous verification, and the transfer of data control to business users. This process is critical to realizing the full financial intent of the EDW investment.

- Deploy Data Processing Engines: The engineering team immediately executes the Extract, Load, and Transform (ELT) processes to populate the new data warehouse. This requires rigorous validation of the transformation logic against the Phase 2 data quality standards. This discipline guarantees output reliability.

- Integrate Reporting Tools: The system connects the EDW directly to reporting and visualization platforms (e.g., Tableau, Power BI). Reports must strictly source data only from the EDW’s presentation layer. This mechanism enforces the mandated single source of truth.

- Parallel Run and Validation: The new EDW operates in tandem with the established legacy reporting systems for a defined reconciliation period, typically 90 days. Reports generated by the new EDW must be fully reconciled and validated against the legacy outputs to ensure parity. Once parity is confirmed, all strategic decision-making and performance reporting must transition to the EDW without delay. The dual operation secures organizational trust by confirming accuracy before the legacy system retirement date is set.

- Training and Ownership Transfer: The project team conducts comprehensive training sessions for all data consumers, from business analysts to executive leadership. Training confirms users understand precisely how to access and interpret the new, verified data. The curriculum focuses on the cost savings and the strategic benefits derived from the single source of truth, not simply technical functionality. This step completes the transfer of data autonomy to the business.
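
The reconciliation step of the parallel run can be sketched as a comparison of metric totals from both systems. The metric names, sample values, and the 0.1% relative tolerance below are hypothetical; each organization would set its own thresholds.

```python
import math

def reconcile(legacy, edw, rel_tol=0.001):
    """Compare metric totals from the legacy system and the EDW.

    Returns a dict of metric name -> (legacy value, EDW value) for every
    metric that is missing on one side or differs by more than rel_tol.
    """
    mismatches = {}
    for metric in sorted(set(legacy) | set(edw)):
        old, new = legacy.get(metric), edw.get(metric)
        if old is None or new is None or not math.isclose(old, new, rel_tol=rel_tol):
            mismatches[metric] = (old, new)
    return mismatches

legacy_report = {"net_revenue": 120500.0, "orders": 3410, "returns": 112}
edw_report = {"net_revenue": 120500.4, "orders": 3410, "returns": 118}
issues = reconcile(legacy_report, edw_report)
```

Here only `returns` is flagged: the revenue difference falls inside the tolerance, while the returns count differs by about 5%. An empty result over the full reconciliation period is one possible definition of parity.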

Phase 5: Continuous Assessment and Optimization

The EDW is a living system that requires ongoing care and iterative improvement. It is never truly finished.

- Set Up a Regular Review Process: Establish a clear cadence (monthly or quarterly) to review the EDW’s performance against the Phase 1 objectives (KPIs) and technical metrics (query latency, compute costs). This review process prevents cost overruns.

- Iterative Improvement: Teams continuously optimize data models, ELT pipelines, and the EDW architecture as business needs and analytical complexity evolve. This includes integrating new, high-value data sources (streaming data, external market data) based on demonstrated ROI.

Executive Involvement: Decisions Required at Each Phase

Successful enterprise data warehouse implementation requires decisive sponsorship and continuous executive team engagement. Leaders own the outcome.

| Implementation Phase | Key Executive Decisions/Actions Required |
| --- | --- |
| Phase 1: Readiness & Objectives | Mandate Sponsorship: Formally appoint an Executive Sponsor (e.g., CIO/COO) and allocate initial budget immediately. Approve KPIs: Sign off on the specific business objectives (KPIs) the EDW must hit to be deemed successful. |
| Phase 2: Governance & MDM | Enforce Governance: Champion the Master Data Management (MDM) framework. Ensure departmental compliance with the new, unified data definitions. This is a culture change that requires executive enforcement from the top. |
| Phase 3: Architecture Design | Final Vendor Selection & Contract: Approve the final selection of the cloud provider and the significant platform spend. This ensures the selection meets the future-proof EDW architecture requirements. |
| Phase 4: Deployment & Enablement | Champion Adoption: Actively communicate the project’s value across the organization. Mandate that all reports and dashboards use the EDW as the only source of truth to break legacy reliance. |
| Phase 5: Optimization | Approve Expansion: Fund and greenlight the integration of new, high-value data sources (e.g., streaming data, external market data) based on demonstrated ROI. |

Segmented Requirements: Executive Oversight

The evaluation criteria must strictly align with long-term business goals and the established modern enterprise data warehouse structure.

Vendor Selection: Evaluating the Platform for Certainty (CTO Focus)

| Evaluation Criterion | Business Rationale |
| --- | --- |
| Capacity & Query Speed | The system must accommodate fluctuating data volumes and reporting complexity without requiring slow, expensive manual capacity increases. Prioritize vendors offering storage and compute separation to guarantee cost-effective elasticity. |
| Ecosystem & Integration | The system must connect immediately with established data sources (ERP, CRM) and reporting tools (BI, Machine Learning platforms). Demand a large repository of managed, automated connectors; this capability cuts pipeline development time by up to 60%. |
| Governance & Security Features | Built-in controls for encryption, Role-Based Access Control (RBAC), auditing, and compliance (GDPR, HIPAA) are mandatory requirements. This directly mitigates legal exposure and protects capital reserves. |
| Delivery Speed | If the business requires immediate analysis (e.g., fraud detection), the platform must confirm support for low-latency streaming and Change Data Capture (CDC). Immediate data delivery protects client assets. |

Change Management: Confirming Cultural Control (Executive Focus)

| Cultural Challenge | Executive Mitigation Strategy |
| --- | --- |
| Data Trust Issues | Enforce a strict Data Governance framework immediately. Publicly confirm the EDW as the single source of verified truth. Sponsor Data Stewards to officially own data quality across departments. |
| Departmental Isolation | Tie executive compensation and team goals to cross-functional metrics sourced only from the new EDW. Mandate collaboration between business units and technology teams to enforce shared metrics. |
| Resistance to New Tools | Set a firm “sunset” date for all outdated reporting systems. This definitive policy eliminates the organizational option to revert to slow, inefficient habits. |

Risk Mitigation: Protecting the Investment Thesis (CFO/Project Focus)

| Implementation Risk | Executive Mitigation Strategy |
| --- | --- |
| Scope Expansion | Maintain strict control over Phase 1 objectives. Do not integrate secondary data sources or specialized departmental requests until the core EDW delivers measurable business value and the initial return is validated. |
| Input Quality Erosion | Refuse to migrate data into the production EDW until Phase 2 (Governance and Master Data Management) is certified complete. Data quality standards must be rigorously enforced before system launch. |
| Integration Complexity | Select automated platforms with verified, pre-built connectors. This strategy reduces reliance on slow, custom-coded interfaces and preserves internal engineering resources. |
| Lack of Business Alignment | Ensure the project leadership is a Business Leader, not solely an IT manager. This decision keeps the project focus strictly on verifiable strategic business outcomes, protecting the investment. |

Financial Payback: Preserving Capital and Generating Revenue

The financial payback period for a disciplined EDW investment is typically between 12 and 36 months.
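
A simple payback calculation divides the upfront investment by the net monthly benefit. The sketch below uses hypothetical inputs that are not figures from this article, and it ignores discounting and ramp-up effects.

```python
def payback_months(upfront_cost, monthly_net_benefit):
    """Months until cumulative net benefit covers the upfront cost."""
    if monthly_net_benefit <= 0:
        raise ValueError("monthly_net_benefit must be positive")
    return upfront_cost / monthly_net_benefit

# Hypothetical inputs for illustration only.
months = payback_months(upfront_cost=900_000, monthly_net_benefit=50_000)
within_typical_range = 12 <= months <= 36
```

With these inputs the payback lands at 18 months; a lower monthly benefit or a larger upfront spend moves the result toward the upper end of the range.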

| Financial Consequence | Value Driver | Financial Impact (Examples) |
| --- | --- | --- |
| Capital Preservation (Cost Avoidance) | System Decommissioning: Retirement of dedicated on-premises servers and proprietary database licenses. | Direct reduction in operating and maintenance expenses. Example: Eliminating $500,000 in annual database licensing fees. |
| Resource Control (Spend Optimization) | Decoupled Architecture: Paying only for the processing power consumed during query execution. | Significant reduction in unnecessary hardware expenditure. Example: Querying a 10 TB dataset uses resources measured in pennies, not a dedicated multi-million dollar server cluster. |
| Revenue Generation (Profit Uplift) | Faster Decision Cycles: Enabling rapid, accurate adjustments (e.g., dynamic pricing, inventory stocking levels). | Verified revenue uplift and margin improvement. Example: A 2% uplift in pricing accuracy based on real-time data, increasing gross margin by 2 points. |
| Operational Efficiency (Analyst Time) | Data Consistency: Removing manual data reconciliation and fragmented reporting across departments. | Higher productivity immediately. Example: Analysts confirm 80% of their time is spent on analysis rather than preparation, accelerating strategic cycles. |

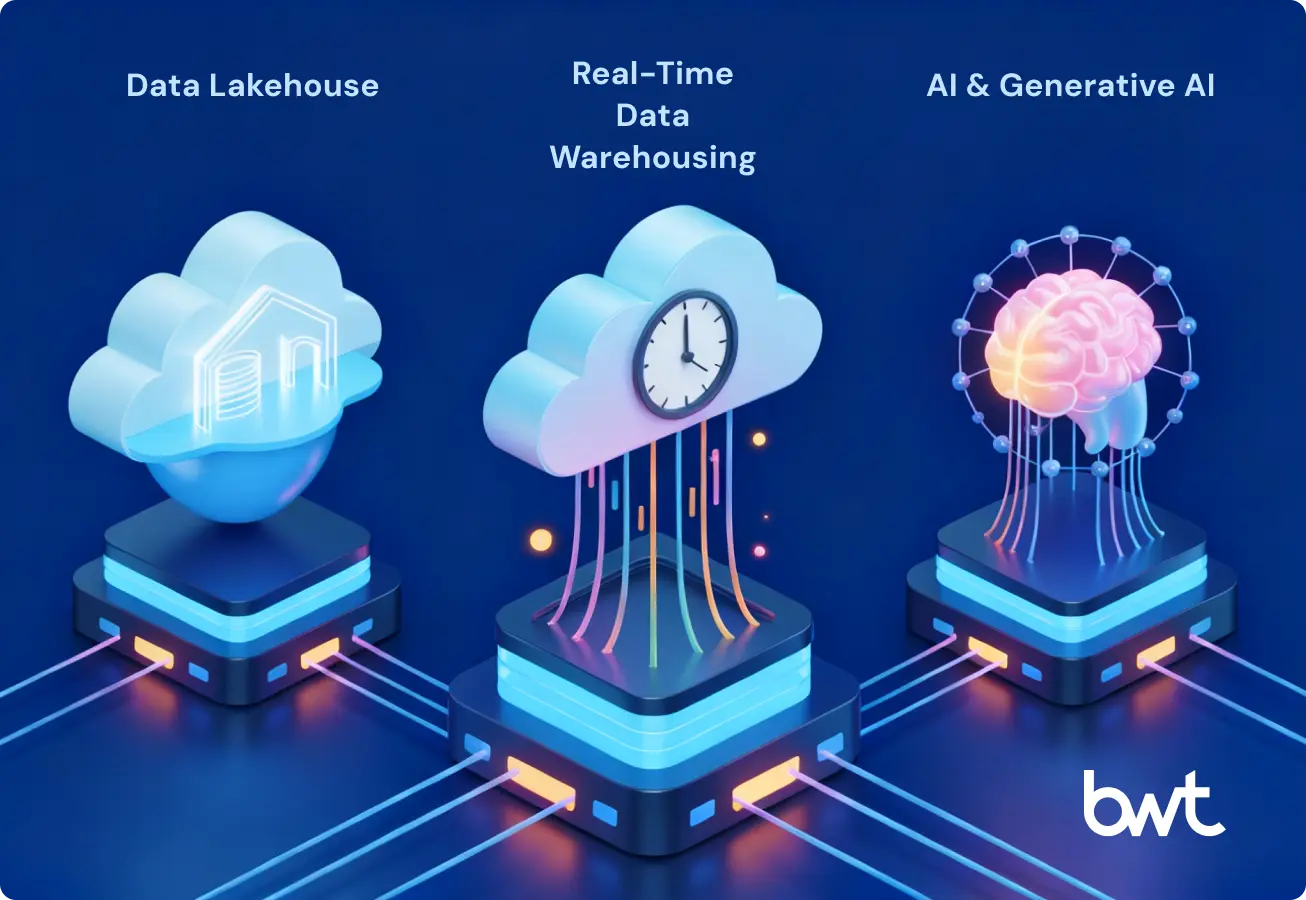

Final Step: Secure Your Data Autonomy

Deploying a modern EDW maximizes the confirmed value of organizational data. Consolidating all data assets into a unified location minimizes operating costs and prepares teams for subsequent machine learning projects. Executives reliably cut review cycles from weeks to hours and keep capital reserves intact during periods of market volatility. Adopting these modern systems determines which organizations achieve market autonomy.

GroupBWT is your expert partner in big data consulting. Our experience covers all required aspects: from developing custom engineering strategies and data verification to complex custom integration and deploying proprietary Scraping Feeds. We install systems that directly convert your EDW into a competitive asset.

Obtain Your Autonomy Roadmap

Learn more about our company and schedule a free audit. This low-risk step provides a detailed assessment of your current fragmentation costs and a guaranteed path to data control.

FAQ

- What is the main difference between the traditional ETL and modern ELT approach in an EDW architecture?

The difference lies in where the “Transform” step happens. In traditional ETL, data is transformed before loading, often on a separate server, which creates a bottleneck. In the modern ELT paradigm, raw data is loaded directly into the cloud warehouse first. Transformations are performed within the warehouse using its powerful compute resources. This accelerates data availability, reduces cost, and is a key component of modern enterprise data warehouse best practices.

- How does dimensional modeling relate to the EDW data model?

The data engineering team uses Dimensional Modeling to enforce a precise, analytical structure in the Enterprise Data Warehouse. This technique organizes information around core transactions and descriptive entities. This design choice determines query-speed predictability. It confirms that the system executes complex analytical reporting consistently and rapidly, a necessary operating dimension that transactional databases cannot match.

- Why is consistent data definition a requirement for verifiably accurate results?

Master Data Management (MDM) establishes singular, authoritative definitions for core business entities: customer records, product identities, and location references. This process is a data control mechanism. Without MDM, the warehouse imports disparate information from multiple source systems, immediately corrupting subsequent analysis. Executing MDM early confirms that every decision rests on verifiable, consistent information, mitigating risk from flawed inputs.

- What is the primary financial advantage of separating storage and compute?

Separating data storage from processing power delivers direct financial autonomy. The system’s architecture scales computing resources instantly, up or down, without affecting data retention. This process isolates heavy reporting demands, preventing system slowdowns during high-usage periods like month-end closing. This approach replaces heavy capital expenditure with a utility-style consumption model, strictly aligning expenditure with immediate processing needs. This design guarantees consistent budget control.

- How does the data system ensure immediate, high-value decision-making?

The modern data environment shifts operations from retrospective analysis to the delivery of immediate decisions. The platform utilizes Change Data Capture (CDC) to replicate system updates as they occur in the source databases. This mechanism ensures that data arrives in the warehouse nearly instantly, supporting time-sensitive analytics. For instance, a transportation client used this capability to cut supply forecast error by 40% during their peak shipping season, improving inventory accuracy within the first quarter.
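
The core idea of Change Data Capture can be sketched as replaying an ordered stream of change events against a replica. The event shape used here (`op`, `key`, `row`) is hypothetical and does not match the output format of any specific CDC tool.

```python
def apply_changes(replica, events):
    """Apply ordered change events to a dict keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Merge changed columns into the existing row, if there is one.
            replica[key] = {**replica.get(key, {}), **event["row"]}
        elif op == "delete":
            replica.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return replica

events = [
    {"op": "insert", "key": 1, "row": {"sku": "A-1", "stock": 10}},
    {"op": "update", "key": 1, "row": {"stock": 7}},
    {"op": "insert", "key": 2, "row": {"sku": "B-2", "stock": 3}},
    {"op": "delete", "key": 2},
]
replica = apply_changes({}, events)
```

This sketch maintains only the current state. A warehouse that follows an insert-only design would typically also append each event to a history table so that earlier states remain queryable.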