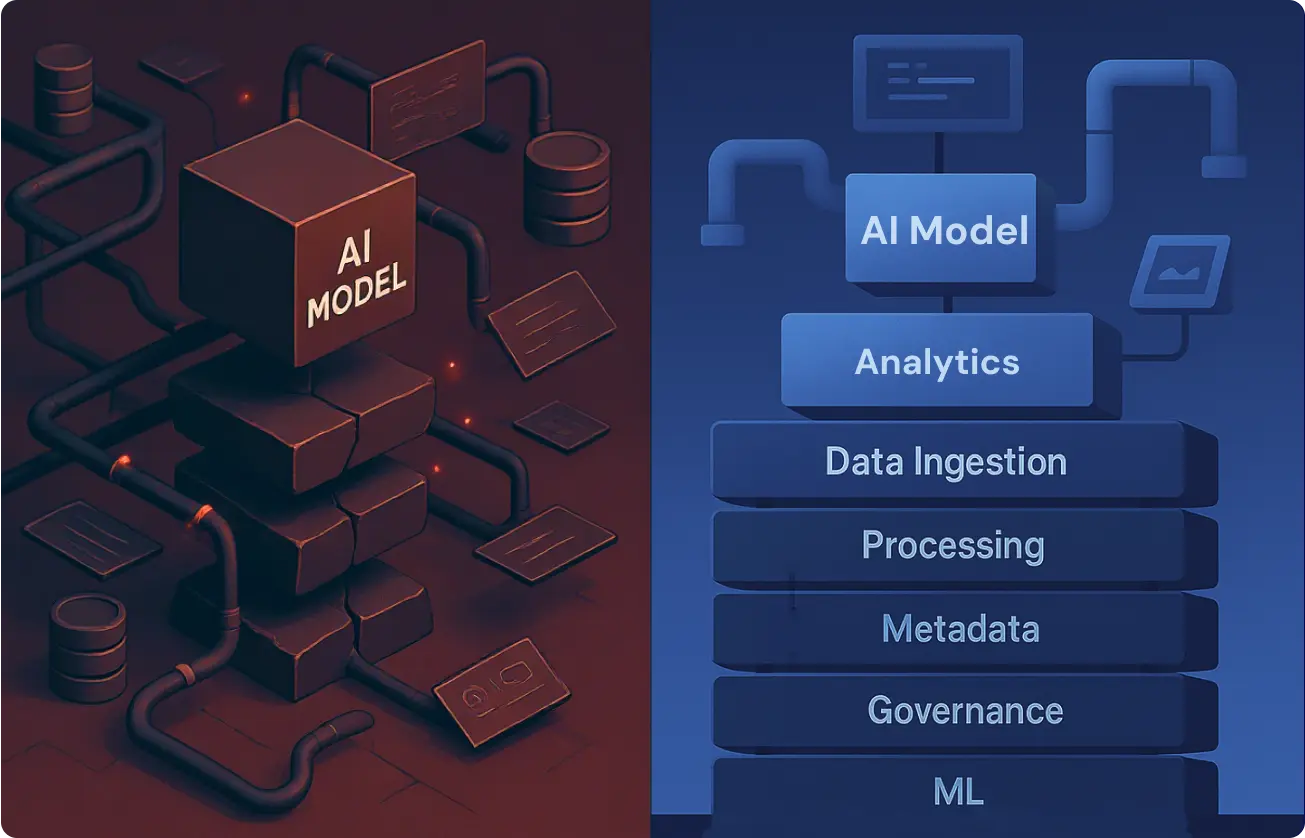

Most big data and knowledge management systems don’t fail at the model level—they fail upstream, in pipelines and infrastructure. When architecture breaks, it breaks silently: insights degrade, compliance risks spike, and costs multiply.

Without governance, orchestration, and metadata control, data becomes a liability. Big data management solutions must align pipelines with outcomes—not just store information but activate it at scale.

By 2027, half of all business decisions will be AI-driven. Yet Gartner warns that 60% of data leaders will fail to manage synthetic data, leading to model breakdowns and regulatory exposure. Early adopters of metadata-first frameworks already report 80% higher accuracy and 60% lower operating costs.

Enterprises that manage big data with infrastructure-first strategies gain execution speed, resilience, and trust. Those that don’t fall behind—silently, then suddenly.

What Is Big Data Management Today?

In 2025, data management is no longer about storing petabytes—it’s about activating structured intelligence from multi-format, fast-moving sources.

The old 3Vs (Volume, Velocity, Variety) have evolved into operational baselines. Today’s systems must manage 5Vs, or they fail silently:

- Volume: Ingest petabyte-scale data across digital exhaust, IoT feeds, logs, and APIs

- Velocity: Stream, batch, and event-based flows with millisecond-level expectations

- Variety: Blend structured (SQL), semi-structured (JSON, XML), and unstructured (PDF, HTML, video) formats

- Veracity: Maintain integrity, traceability, and quality across pipelines

- Value: Extract operational, predictive, or strategic lift—not just reports

But the “V” model isn’t a framework. It’s a failure checklist.

Three Disciplines You Must Separate to Scale

Enterprise data ecosystems collapse when roles blur. Governance without engineering creates bottlenecks. Engineering without governance creates legal exposure.

Mature data organizations separate and orchestrate three disciplines:

- Data Engineering: Builds ingestion pipelines, defines schema flow, owns throughput

- Data Management: Maintains catalogs, quality, deduplication, and metadata versioning

- Data Governance: Applies access control, lineage traceability, and compliance enforcement

Together, these define your data control plane—the logic layer that governs who touches what, when, and why. Without this, you’re not managing data. You’re storing entropy.

From Legacy to Active Infrastructure: A Timeline of Big Data Maturity

| Era | Architecture | Limitation Resolved |
| --- | --- | --- |
| 2000s | SQL + Monolithic Warehouses | Slow, centralized, fixed-schema |
| 2010s | Hadoop + DFS | Scaled batch storage lacked agility |
| 2020s | Lakehouse (Delta, Iceberg, Hudi) | Unified storage + live querying |
| 2025+ | Mesh + Federated Metadata | Domain ownership, real-time access |

Each layer fixed the previous one’s constraint—but created new responsibilities:

- Lakehouses require governance overlays

- Mesh requires metadata automation

- Federated access demands auditability and lineage by default

This isn’t tooling—it’s architecture as risk mitigation. You’re not choosing platforms; you’re choosing the failure modes you’re willing to accept.

Why Big Data Management Solutions Must Be Schema-First

Like LLM scraping, big data today breaks at the structure level. Without a schema-first approach, you get:

- Misaligned joins

- Broken lineage

- Unverifiable outputs

Modern architectures require:

- Metadata-first processing: Schema registry, lineage enforcement, field-level policies

- Orchestrated observability: DAG-based scheduling, retry logic, QA thresholds

- Modular ingestion logic: Plug-and-play sources without full-pipeline redeploys

- Federated governance: Domain-based ownership with global policy enforcement

Need to expose the same data to BI dashboards, AI pipelines, and compliance audits—without duplication or drift?

That’s not a feature list. That’s how you manage big data.
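A schema-first check can be sketched in a few lines. The example below is a minimal illustration, not a production validator: the `Field` type, the `ORDERS_CONTRACT` definition, and the `validate` helper are hypothetical names introduced here, and a real deployment would more likely rely on a schema registry.

```python
from dataclasses import dataclass


class ContractViolation(Exception):
    """Raised when a record does not match the declared contract."""


@dataclass(frozen=True)
class Field:
    name: str
    type_: type
    required: bool = True


# Hypothetical contract for an "orders" dataset (illustrative only).
ORDERS_CONTRACT = (
    Field("order_id", str),
    Field("amount", float),
    Field("coupon", str, required=False),
)


def validate(record: dict, contract: tuple) -> dict:
    """Return the record unchanged if it satisfies the contract, else raise."""
    known = {f.name for f in contract}
    unexpected = set(record) - known
    if unexpected:
        raise ContractViolation(f"unexpected fields: {sorted(unexpected)}")
    for f in contract:
        if f.name not in record:
            if f.required:
                raise ContractViolation(f"missing required field: {f.name}")
            continue
        # Strict type check: an int is not accepted where a float is declared.
        if not isinstance(record[f.name], f.type_):
            raise ContractViolation(
                f"{f.name}: expected {f.type_.__name__}, "
                f"got {type(record[f.name]).__name__}"
            )
    return record
```

Failing hard at the boundary is a deliberate choice in this sketch: a rejected record is visible immediately, while a silently coerced one tends to surface later as a broken join.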

Architecture Is the New Differentiator

In 2025, Forrester named Google Cloud a category leader, not for its toolset, but for embedding automation, governance, and open standards as defaults. These are no longer competitive advantages. They are structural requirements.

“Gen AI is currently at the exciting nexus of demonstrated proof of value, rapid innovation, significant public and private investment, and widespread consumer interest… We can expect a rapid expansion of modular and secure enterprise platforms.”

— Delphine Nain Zurkiya, Senior Partner, Boston

AI systems no longer fail because of weak models. They fail because the data isn’t structured, activated, or governed at the foundation. Execution depends on architecture—not abstraction.

“Companies should concentrate on building capabilities in this domain and prioritize areas of focus to ensure they capture early value and aren’t left behind.”

— Matej Macak, Partner, London

Infrastructure Readiness Is The Overlooked Layer

The McKinsey 2024 outlook confirms the widening execution gap. AI and ML roles are growing, but the infrastructure to support them is underdeveloped.

Applied AI passed 500,000 job listings in 2023. Yet deep learning has a 6× talent surplus. Data analysis shows a 3.7× oversupply. Talent is not the blocker. Architecture is.

MLOps has moved inside the engineering core. Monitoring, lineage, and orchestration are now mandatory, not innovation layers. But DevOps and cloud engineering gaps signal deeper fragility. Teams prototype faster than they can scale.

Even with a 37% drop in software hiring, demand for CI and data engineering remains high. But the talent-to-need ratio for these roles is below 0.5×. Without modular design, versioning, and rollback logic, execution bottlenecks emerge.

Most teams know how to build a model. Few know how to scale it without failure. This is where big data and knowledge management frameworks become decisive.

“Solving for gaps in automated monitoring and life cycle management of deployed AI solutions will ensure the lasting and scalable impact of AI… Industrializing bespoke gen AI solutions will require robust gen AI operational ecosystems.”

— Alex Arutyunyants, Senior Principal Data Engineer, QuantumBlack, AI by McKinsey

Governance is no longer a legal checkbox. It’s an operational control system. Without lineage, metadata enforcement, and versioned accountability, models drift or break.

According to BCG’s 2024 governance model, organizations that treat governance as strategy—not compliance—move faster and scale more reliably. Federated frameworks help manage data across silos while preserving ownership and enforcing structure.

This shift redefines the role of big data management solutions. Without orchestration, governance, and audit-ready metadata, teams can’t meet the pace or scale of modern AI systems.

Why Big Data Fails Without Proper Management

Enterprise systems rarely fail because of hardware or tools—they fail because of structural neglect. When you manage at scale without a unified architecture, governance, or metadata visibility, failure is inevitable.

This is not a tooling problem. It’s an operational breakdown. And it repeats until you fix the foundation of big data management.

Top 6 Failure Patterns Across Enterprises

Most enterprise collapse points aren’t random—they’re structural. In our work helping teams manage data across industries, we’ve seen these same six patterns derail systems repeatedly.

| Failure Pattern | Core Problem |
| --- | --- |
| Data Silos | Isolated systems block integration and interoperability |
| Inconsistent Quality | No QA layer, normalization, or versioning → multiple versions of “truth” |
| Lack of Ownership | No one owns the lifecycle; engineering builds, product breaks, no one maintains |
| No Metadata Visibility | Missing field definitions, no lineage, no traceability |
| Fragile Integration | Hardcoded joins, brittle schemas collapse with small upstream changes |
| No Observability Across Streams | No alerts, silent failures, stale dashboards |

These are not just common. They’re systemic, especially where big data and data management responsibilities are blurred across teams.

High-Cost Outcomes of Mismanagement

The risk of poor structure is exponential. Broken pipelines lead to faulty decisions, rising cloud costs, and legal exposure. In today’s AI-integrated systems, structure is everything, and its absence shows fast.

| Impact | Description |
| --- | --- |
| Wrong Decisions from Bad Data | Misaligned metrics lead to poor strategy, broken forecasts, and blind spots |
| Compliance Breaches | GDPR, HIPAA, PCI: violated not by intent, but by traceability gaps and missing consent logic |
| AI Hallucination Risks | LLMs trained on bad inputs produce false or biased outputs at scale |
| Redundant Storage Cost | Duplicate data across tools and teams drives uncontrolled cloud spend |
| Dev Time Lost in Debugging | Engineers patch collapse instead of building value; pipelines become the cost center |

Every one of these is a downstream failure of upstream neglect. And every one can be solved if you treat big data management as infrastructure, not content.

Core Components of Big Data Management Solutions

To run data at scale, systems must be modular, observable, and enforceable by design. Below are the core components every modern data architecture must include—built not for flexibility, but for control.

Architecture Layer Breakdown

Big data systems are layered. Failure at one tier silently corrupts the next. This breakdown clarifies what each layer must deliver—and what happens if it doesn’t.

| Layer | Function | Failure Risk |
| --- | --- | --- |
| Ingestion | Capture batch and stream data from APIs, logs, systems | Data gaps, delayed pipelines |
| Storage | Persist data in object stores, lakes, or Delta formats | Cost bloat, inaccessible formats |
| Metadata | Track schema, lineage, and semantic definitions | Field drift, broken joins, no rollback |
| Processing | Run compute tasks (Spark, Flink, SQL-on-Hadoop) | Inconsistent logic, partial results |
| Access | Expose trusted data via BI, APIs, notebooks | Analytics on stale, broken, or wrong data |

Each layer must be modular, observable, and aligned to schema logic. Without this stack, you’re building blind.
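One way to keep a failed tier from silently corrupting the next is to run the layers as a dependency graph and skip anything downstream of a failure. The sketch below assumes Python 3.9+ (for `graphlib`). The dependency map and the `run_layers` helper are illustrative assumptions, not a description of any particular orchestrator.

```python
from graphlib import TopologicalSorter

# Each layer lists the layers it depends on (names follow the table above).
LAYERS = {
    "ingestion": set(),
    "storage": {"ingestion"},
    "metadata": {"storage"},
    "processing": {"storage", "metadata"},
    "access": {"processing"},
}


def run_layers(tasks: dict, deps: dict) -> dict:
    """Run tasks in dependency order; skip anything downstream of a failure."""
    status = {}
    for name in TopologicalSorter(deps).static_order():
        if any(status[d] != "ok" for d in deps[name]):
            status[name] = "skipped"  # an upstream layer failed or was skipped
            continue
        try:
            tasks[name]()
            status[name] = "ok"
        except Exception:
            status[name] = "failed"
    return status


def broken_metadata():
    raise RuntimeError("schema registry unreachable")


# Every layer is a no-op here except the metadata layer, which fails.
tasks = {name: (lambda: None) for name in LAYERS}
tasks["metadata"] = broken_metadata
result = run_layers(tasks, LAYERS)
```

In this run the metadata layer fails, so processing and access are marked as skipped instead of executing against unverified schema state.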

Governance and Security by Design

Compliance is not a checkbox—it’s enforced logic across the system. The table below outlines the governance layers that must be embedded—not layered on later.

| Policy Layer | What It Enforces | Tools / Methods |
| --- | --- | --- |
| Data Classification | Tag fields for sensitivity and use case | Tags, domain rules, data maps |
| Access Control | Restrict visibility by role, region, and context | RBAC, ABAC, row- and column-level filters |
| Protection & Auditing | Secure data and log access across pipeline stages | Encryption, masking, audit trails |
| Compliance Alignment | Automate legal and retention logic | GDPR, HIPAA, CCPA policies, consent systems |

Security must be proactive, not reactive. Without enforced access and visibility controls, data becomes both a risk and a liability.
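As a rough illustration of classification and column-level access working together, the sketch below masks any column whose sensitivity tag is not cleared for the caller's role. The tag names, roles, and the `mask_row` helper are hypothetical. Real systems usually enforce this in the query engine or catalog, not in application code.

```python
# Hypothetical sensitivity tags per column (illustrative, not a standard).
COLUMN_TAGS = {"name": "public", "email": "pii", "diagnosis": "phi"}

# Hypothetical role policy: which tags each role may read in clear text.
ROLE_CLEARANCE = {
    "analyst": {"public"},
    "support": {"public", "pii"},
    "clinician": {"public", "pii", "phi"},
}


def mask_row(row: dict, role: str) -> dict:
    """Return a copy of the row with columns the role may not see masked."""
    allowed = ROLE_CLEARANCE.get(role, set())  # unknown roles see nothing
    masked = {}
    for column, value in row.items():
        tag = COLUMN_TAGS.get(column, "restricted")  # untagged: deny by default
        masked[column] = value if tag in allowed else "***"
    return masked
```

The deny-by-default branches are the point of the sketch: an untagged column or an unknown role results in masking, so a gap in classification does not become a gap in protection.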

Metadata Management and Data Catalogs

Metadata isn’t documentation. It’s the system logic that defines how data is structured, discovered, and trusted. Below are the minimum requirements for metadata-driven operations.

| Capability | Purpose | Enables |
| --- | --- | --- |
| Business Glossary | Standardize meaning of terms and fields | Cross-team clarity, semantic validation |
| Schema Versioning | Track field changes over time | Rollbacks, migration control, auditability |
| Self-Service Discovery | Searchable lineage and quality metadata | Analyst independence, reduced ticket load |
| Metadata Tools | System-level metadata orchestration | OpenMetadata, Collibra, Atlan, Alation |

If you don’t define your data at the metadata layer, the rest of the pipeline guesses—and guesses break.
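Schema versioning becomes concrete once two versions can be compared mechanically. The sketch below represents a schema as a plain `{field_name: type_name}` mapping and applies a deliberately simplified compatibility rule (additions are safe, removals and type changes are breaking). Both function names and the rule itself are assumptions for illustration.

```python
def diff_schemas(old: dict, new: dict) -> dict:
    """Compare two schema versions given as {field_name: type_name}."""
    removed = sorted(set(old) - set(new))
    added = sorted(set(new) - set(old))
    retyped = sorted(f for f in set(old) & set(new) if old[f] != new[f])
    return {"added": added, "removed": removed, "retyped": retyped}


def is_backward_compatible(old: dict, new: dict) -> bool:
    """Treat additions as safe; removals and type changes as breaking."""
    changes = diff_schemas(old, new)
    return not changes["removed"] and not changes["retyped"]


v1 = {"order_id": "string", "amount": "double"}
v2 = {"order_id": "string", "amount": "decimal", "currency": "string"}
changes = diff_schemas(v1, v2)
```

Here `changes` reports `currency` as added and `amount` as retyped, so `is_backward_compatible(v1, v2)` returns `False` and the change could be blocked before it reaches downstream consumers.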

The Control Plane: Aligning Governance, Observability, and Activation

In modern data systems, control doesn’t come from dashboards. It comes from orchestration—the logic layer that defines how data is validated, accessed, and used across pipelines. This is the control plane. And without it, big data becomes big debt.

Governance: From Policy to Execution

Governance only matters if it enforces outcomes. The table below outlines how to translate static rules into system-enforced controls.

| Governance Layer | Execution Mechanism | What It Prevents |
| --- | --- | --- |
| Data Ownership | Domain-based access policies, data product boundaries | Blame diffusion, shadow pipelines |
| Lineage Tracking | Field-level source mapping with version history | Inference errors, trust breakdown |
| Compliance Enforcement | Retention logic, masking, audit trails at pipeline edge | Legal risk, breach exposure |
| Schema Contracts | Pre-defined input/output specs with hard fail logic | Silent schema drift, broken joins |

Conclusion:

Governance isn’t documentation. It’s logic that executes—or systems that collapse.
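Field-level lineage, for instance, can be treated as a graph that is walked on demand. The sketch below is a minimal illustration under that assumption. The `LINEAGE` mapping and its field names are invented for the example, and version history is left out for brevity.

```python
# Hypothetical field-level lineage: each field maps to the fields it derives from.
LINEAGE = {
    "dashboard.revenue": ["warehouse.orders.amount", "warehouse.fx.rate"],
    "warehouse.orders.amount": ["raw.orders.amount_cents"],
    "warehouse.fx.rate": ["raw.fx_feed.rate"],
}


def trace_sources(field: str, lineage: dict) -> set:
    """Return the root source fields a field ultimately depends on."""
    roots, stack, seen = set(), [field], set()
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # guards against cycles and repeated parents
        seen.add(current)
        parents = lineage.get(current, [])
        if not parents:
            roots.add(current)  # no recorded parents: treat as a source
        stack.extend(parents)
    return roots


sources = trace_sources("dashboard.revenue", LINEAGE)
```

With a mapping like this, a question such as "which raw feeds does this metric depend on?" becomes a lookup instead of an investigation.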

Observability: Make Pipelines Measurable

If you can’t measure your pipeline at each stage, you can’t trust it. Observability turns assumptions into signals. Below is what must be monitored by default.

| Observability Focus | What to Monitor | Tool Examples |
| --- | --- | --- |
| Freshness | Time since last successful run | Monte Carlo, Databand, Airflow sensors |
| Schema Drift | Changes in field types, names, or order | Soda, Great Expectations |
| Volume Anomalies | Record count deviation from expected range | Datafold, custom checks |
| Dependency Failures | Upstream or downstream job breakage | DAG-based monitoring (Airflow, Dagster) |

Without observability, you don’t run data—you hope it works. Hope doesn’t scale.
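Freshness and volume checks are simple enough to sketch directly. The functions below are illustrative custom checks, not the API of any of the tools named above. The one-hour freshness window and the 50% volume tolerance are arbitrary assumptions.

```python
from datetime import datetime, timedelta, timezone


def is_stale(last_success: datetime, max_age: timedelta, now: datetime) -> bool:
    """True when the last successful run is older than the allowed age."""
    return now - last_success > max_age


def volume_anomaly(row_count: int, history: list, tolerance: float = 0.5) -> bool:
    """True when row_count deviates from the historical mean by more than tolerance."""
    if not history:
        return False  # no baseline yet, nothing to compare against
    baseline = sum(history) / len(history)
    if baseline == 0:
        return row_count != 0
    return abs(row_count - baseline) / baseline > tolerance


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
stale = is_stale(now - timedelta(hours=3), timedelta(hours=1), now)
spike = volume_anomaly(400, [1000, 1100, 900])
```

Both checks take their reference values (`now`, `history`) as arguments so they can be tested deterministically and wired into whichever scheduler a team already runs.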

Activation: Turn Data into Decisions

Data is not useful unless it’s applied. Activation is the final stage of the control plane—where validated, trusted outputs power real systems.

| Activation Layer | Primary Consumers | Design Requirements |
| --- | --- | --- |
| BI + Dashboards | Analysts, Execs | Consistent metrics, semantic layer alignment |
| ML + AI Pipelines | Data science, LLMs | Structured inputs, schema-governed contracts |
| APIs + Microservices | External apps, partners, platforms | Real-time SLA, tokenized access control |
| Internal Apps | Product, support, ops | Row-level security, freshness guarantees |

You don’t manage big data to store it. You manage it to activate it. That only works if governance and observability are aligned upstream.

Best Practices for Big Data Management

Effective data management begins with control, not code. Teams fail when architecture decisions are made after the fact, when pipelines break silently, or when business users can’t access what they need. Below are four practices to structure, scale, and apply your data with confidence.

Strategy First, Tools Second

The system should follow the goal, not the other way around.

- Define clear use cases across departments before selecting tools

- Involve IT, business, and compliance teams early in planning

- Make real-time usage part of the roadmap, not an afterthought

To understand how to manage big data for business, start with the decisions it needs to power, not the software it uses.

Streamlined Ingestion and Integration

Where and how data enters your system determines its quality and speed.

- Load raw data directly, then transform it inside your storage layer to reduce wait times

- Keep systems in sync by capturing every update as it happens, not just scheduled batches

- Detect changes in data format early to prevent processing failures

Many big data management challenges begin here—when teams collect data fast but can’t trust what lands.
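Capturing every update as it happens is commonly implemented as change data capture. The sketch below replays a stream of change events into an in-memory replica. The event shape (`op`, `key`, `row`) is a hypothetical format chosen for the example, not the output of any specific CDC tool.

```python
def apply_change(table: dict, event: dict) -> None:
    """Apply one change event to an in-memory replica keyed by primary key."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        # Upsert: merging into the existing row keeps replays idempotent.
        table[key] = {**table.get(key, {}), **event["row"]}
    elif op == "delete":
        table.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")


def replay(events: list) -> dict:
    """Rebuild the replica state by applying events in order."""
    table = {}
    for event in events:
        apply_change(table, event)
    return table


events = [
    {"op": "insert", "key": 1, "row": {"sku": "A", "qty": 5}},
    {"op": "update", "key": 1, "row": {"qty": 3}},
    {"op": "insert", "key": 2, "row": {"sku": "B", "qty": 1}},
    {"op": "delete", "key": 2},
]
state = replay(events)
```

Rejecting unknown operations instead of ignoring them is the ingestion-side counterpart of detecting format changes early: an unexpected event type stops the replay rather than producing a quietly incomplete replica.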

Self-Service and Democratization

Data only delivers value when people can use it—without waiting on engineering.

- Build shared data layers that let analysts explore data using business terms

- Use distributed systems that give each team control over their own data sets

- Allow no-code tools so non-developers can clean and combine data on their own

Access without structure leads to chaos. Structure without access leads to bottlenecks. The right system gives you both.

Monitor What Matters

If you can’t measure what’s happening in your pipelines, you can’t manage the risk.

- Check how recently each dataset was updated, and whether the volume matches expectations

- Set alerts for missing values, delays, or structure changes

- Build recovery steps that trigger automatically when problems are detected

Without visibility at every stage, your data system becomes a guessing game—and guesswork doesn’t scale.
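Automatic recovery can start as small as a retry wrapper that runs a recovery step between attempts. The sketch below is one possible shape under that assumption. The `run_with_recovery` name, the `recover` callback, and the backoff parameters are illustrative.

```python
import time


def run_with_recovery(task, recover, max_attempts: int = 3, base_delay: float = 0.0):
    """Run task; on failure call recover(error) and retry with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as error:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            recover(error)  # e.g. refresh credentials, clear a partial load
            time.sleep(base_delay * 2 ** (attempt - 1))
```

The final failure is re-raised instead of swallowed, so a task that cannot be recovered still triggers whatever alerting sits above the pipeline.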

Big Data Management Case Studies

Below are two real-world deployments of big data management solutions by GroupBWT: one in retail, the other in pharma. Both solved structural data problems that couldn’t be addressed through tooling alone.

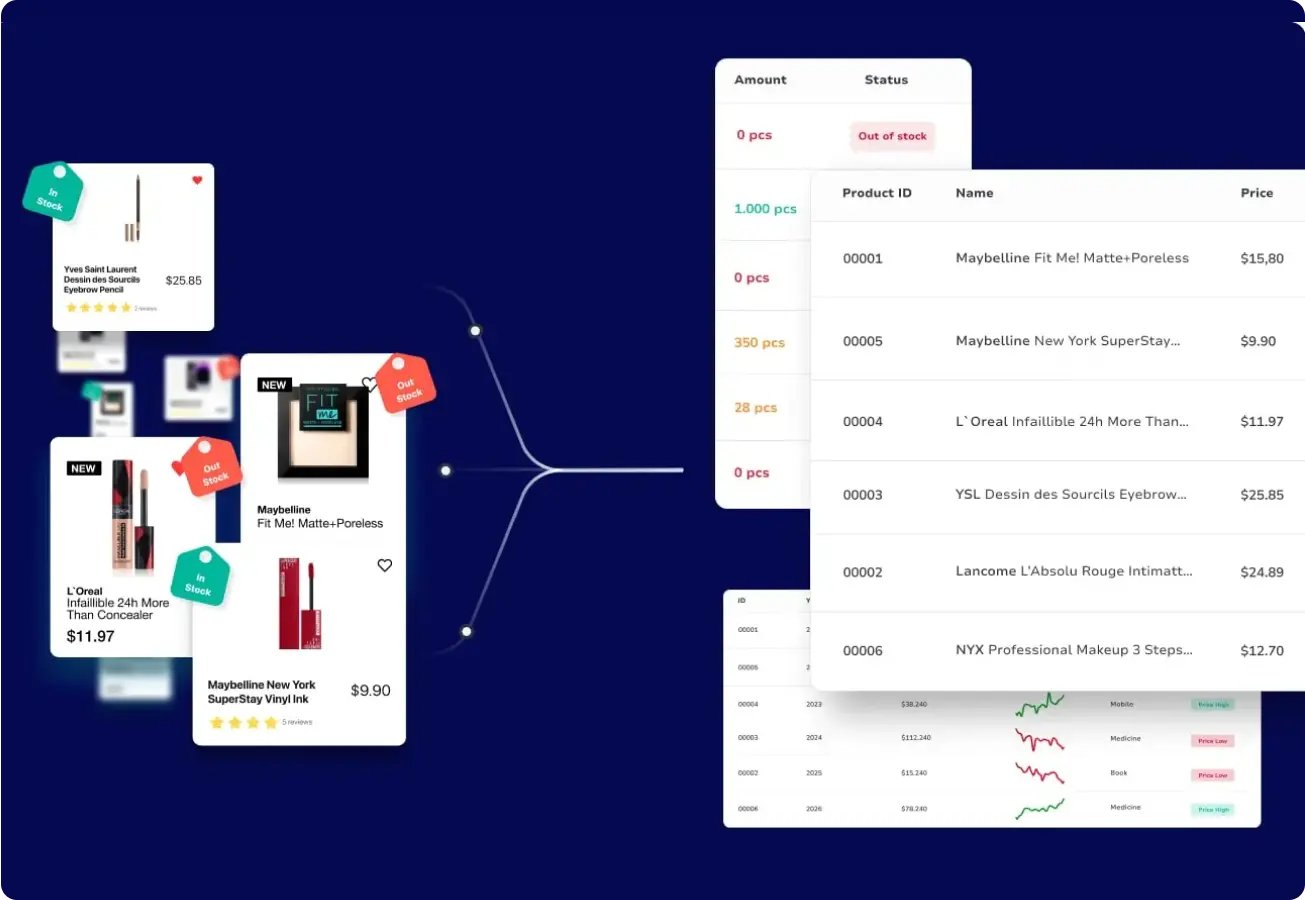

Case 1: Improving Assortment Precision in Beauty Retail

About the Client:

A U.S.-based beauty retailer with thousands of SKUs struggled to predict demand. Fast-selling items went out of stock. Others sat unsold, driving up holding costs.

The Problem:

Manual assortment tracking led to gaps in competitor visibility. Teams couldn’t adapt stock strategy fast enough. Forecasting errors grew, and profits shrank.

Our Solution:

We built a compliant, modular scraping system that delivered:

- Real-time inventory data from competitors by simulating bulk purchases

- A live dashboard that mapped SKU movement and exportable trends

- Proxy-based sourcing and regulated timing to ensure system stability and site-friendly requests

The Outcome:

- +85% forecast accuracy for high-velocity SKUs

- Revenue doubled in 4 months from better stock timing

- Profit margin rose 47% through optimized procurement and markdown reduction

Case 2: Capturing Market Sentiment for Pharma Expansion

About the Client:

A European pharmaceutical leader preparing for a market expansion needed to understand consumer sentiment around drugs and components before launch.

The Problem:

Manual review analysis couldn’t scale. Data from Amazon, Reddit, and pharmacy chains was fragmented. Insight quality was low, and strategic decisions stalled.

Our Solution:

We deployed a centralized data extraction pipeline with:

- Automated review scraping across Amazon, Walmart, CVS, and more

- NLP sentiment analysis on Reddit threads, comments, and third-party content

- A decision layer converting raw feedback into digestible graphs and strategic metrics

The Outcome:

- 15% increase in brand partnership opportunities within 1 quarter

- Go-to-market content aligned with consumer sentiment and unmet needs

- Internal research cycles reduced by weeks, unlocking faster decisions

Why Big Data Management Solutions Define Execution in 2025

Big data management is an execution system. Without structure, orchestration, and control, no team can scale AI, support compliance, or trust its output. Architecture is the difference between insight and incident.

If your pipelines lack visibility, governance, or schema enforcement, you’re not managing data—you’re absorbing risk. GroupBWT helps teams turn scattered systems into real infrastructure: modular, governed, and ready to activate intelligence across business and AI layers.

Want to assess your current architecture or design a future-proof control plane?

Request a technical audit or architecture consultation with GroupBWT.

FAQ

- What are the biggest big data management challenges for modern enterprises?

Most teams don’t fail because they lack tools—they fail because structure breaks upstream. The real big data management problems involve lineage, broken schema, poor versioning, and no QA across ingestion layers. Without clear roles and a schema-first foundation, execution always stalls.

- How can companies implement big data management and governance that scales?

It starts by separating control from access. Teams must embed enforcement at the pipeline layer—retention logic, audit logs, masking, and schema contracts. Scalable big data management and governance means real-time accountability, not policy slides.

- How to manage big data for business when sources, teams, and goals constantly shift?

Treat your data system as infrastructure, not content. To manage big data for business, you need versioned inputs, modular logic, and enforced lineage across domains. Stop patching scripts—build a governed control plane that flexes as your teams grow.

- Why do most data systems break without warning?

Because failure rarely announces itself. Dashboards keep updating even when pipelines stall upstream. Without observability at the control layer, teams don’t see the problem until it’s already in production.

- What’s the real cost of not fixing broken pipelines?

More than cloud bills—it’s lost trust, wasted time, and faulty decisions. When data is wrong and no one knows why, teams stop relying on it. Recovery costs far more than prevention.

- Why doesn’t automation help if the architecture can’t handle the load?

Automation just scales the error if the structure is weak. Without versioning, checkpoints, and QA enforcement, every run deepens the chaos. Speed without stability isn’t efficiency—it’s accelerated decay.

- Why does flexibility without rules cause more harm than good?

Without contracts, access control, or ownership boundaries, “flexible” systems become ungovernable. Everyone can touch everything, so no one can trace anything. At scale, the issue isn’t failure—it’s the lack of control.