If you’re still stitching reports manually or paying per data source, you don’t have a system—you have a budget leak and a decision gap.

According to the Business Research Company’s 2025 report, the global data analytics market is set to reach $94.9 billion this year and climb past $258 billion by 2029, driven by AI adoption, compliance mandates, and a surge in real-time use cases. At the same time, global data volume is projected to hit 182 zettabytes, up from 120 ZB just two years ago.

That’s why companies are replacing brittle scraping scripts and black-box vendor tools with owned big data aggregation solutions that are modular, jurisdiction-aware, and resilient to upstream drift. In this guide, GroupBWT shows how data aggregation frameworks work, where most systems break, where they deliver ROI, and what it takes to build a resilient, modular architecture that scales with your business.

Data Aggregation Overview: Turning Source Noise into Decision-Ready Datasets

Data aggregation is not “joining tables.” In enterprise systems, it is the control layer that turns unstable inputs into a dataset you can defend in dashboards, forecasts, and audits—without forcing analysts to fight messy spreadsheets, inconsistent APIs, and scraping scripts that break without warning.

In practical terms, data aggregation means pulling data from multiple sources, aligning their structure, and transforming it into one clear, unified dataset—whether your input comes from HTML tables, API feeds, PDFs, or CSV dumps. The difference between a report and a system is whether you can repeat the result tomorrow with the same rules and traceability.

“Scraping systems don’t fail because the code is bad; they fail because the architecture treats platform changes as exceptions rather than the rule. We design aggregation loops that anticipate drift, building self-healing logic into the ingestion layer so that your ‘source of truth’ remains stable even when the web gets messy.”

— Alex Yudin, Head of Data Engineering, GroupBWT

The GroupBWT Aggregation Control Loop

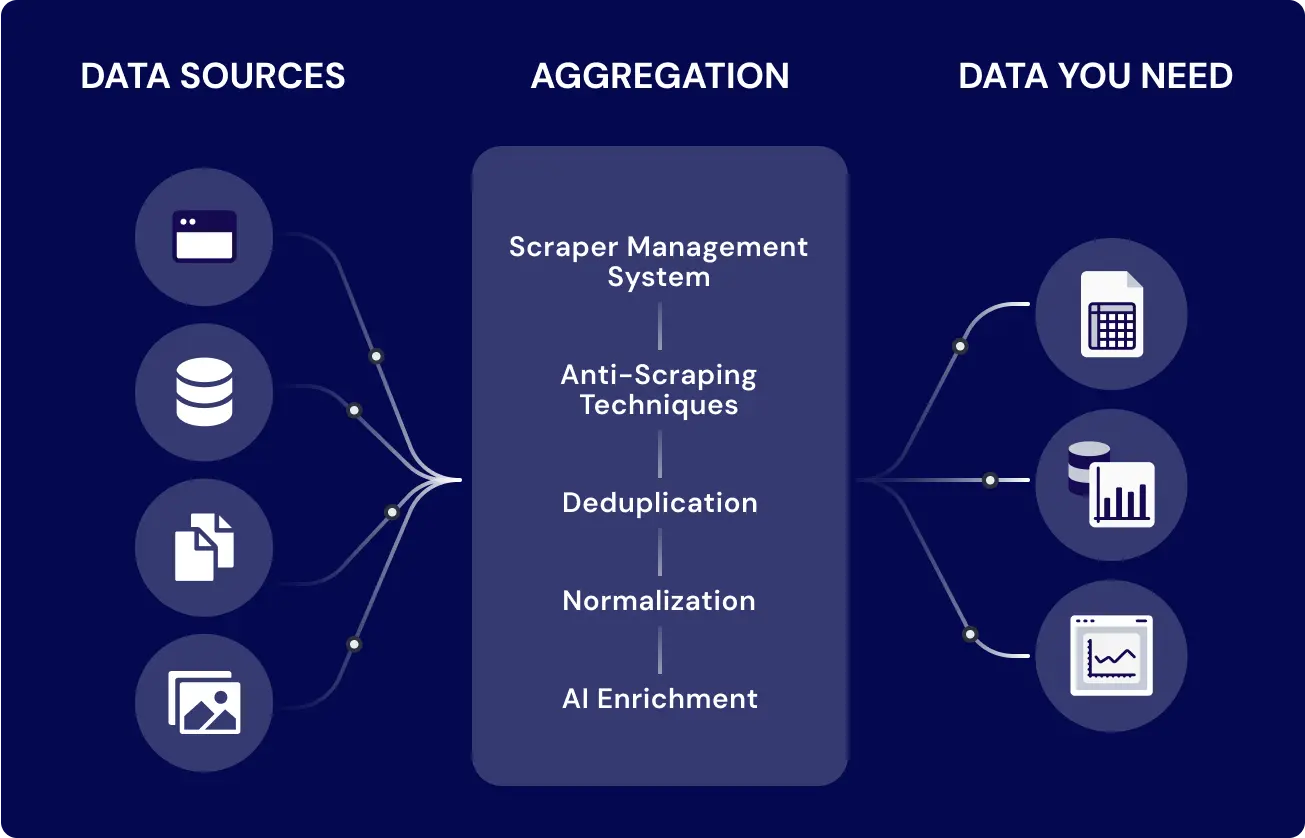

In our custom data aggregation framework, aggregation goes beyond merging rows. We design pipelines as an Aggregation Control Loop with five non-negotiable stages:

- Ingest: handle multi-source data ingestion at scale across formats and endpoints.

- Access: bypass anti-scraping barriers legally and efficiently when sources are dynamic or restricted.

- Reconcile: apply real-time deduplication across feeds so metrics stop drifting by source.

- Normalize: standardize field formats and naming so downstream BI stops carrying cleanup debt.

- Enrich: enrich context via AI-based logic, external links, or classification models so the dataset answers business questions, not only stores values.

Fast self-check: Are you aggregating or patching?

Use this checklist before you pick an approach (historical, scheduled, or real-time):

- Do you have a defined “source of truth” per field (and a rule for conflicts)?

- Can you detect a schema change before it hits dashboards?

- Do you deduplicate across sources using stable identifiers?

- Can you explain every transformation step to BI and compliance stakeholders?

- Can you add a new source without rewriting the pipeline?
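The first checklist item, a defined source of truth per field with a rule for conflicts, can be expressed as a small precedence table. The sketch below is illustrative only: the source names (`api`, `marketplace`, `scraper`), the field names, and the rule "the highest-ranked source with a non-empty value wins" are assumptions, not GroupBWT's actual configuration.

```python
from typing import Any

# Hypothetical precedence table: for each field, sources ranked from most to least trusted.
FIELD_PRECEDENCE: dict[str, list[str]] = {
    "price": ["api", "marketplace", "scraper"],
    "stock": ["marketplace", "api", "scraper"],
    "title": ["scraper", "marketplace", "api"],
}


def reconcile(records_by_source: dict[str, dict[str, Any]]) -> tuple[dict[str, Any], dict[str, str]]:
    """Merge one entity's records from several sources, field by field.

    Returns the merged record and a lineage map (field -> winning source),
    so every value can be traced back to the source it came from.
    """
    merged: dict[str, Any] = {}
    lineage: dict[str, str] = {}
    for field, ranked_sources in FIELD_PRECEDENCE.items():
        for source in ranked_sources:
            value = records_by_source.get(source, {}).get(field)
            if value not in (None, ""):
                merged[field] = value
                lineage[field] = source
                break  # conflict rule: the highest-ranked non-empty value wins
    return merged, lineage
```

If `api` returns `price=None`, the price falls through to `marketplace`, and the lineage map records that choice, which is exactly what BI and compliance stakeholders need to see.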

Next, choose the aggregation type based on freshness, volatility, and operational risk.

Built this way, the control loop produces an owned data aggregation solution that’s accurate, scalable, and able to survive drift and source changes, unlike brittle no-code tools or single-purpose web scraping solutions.

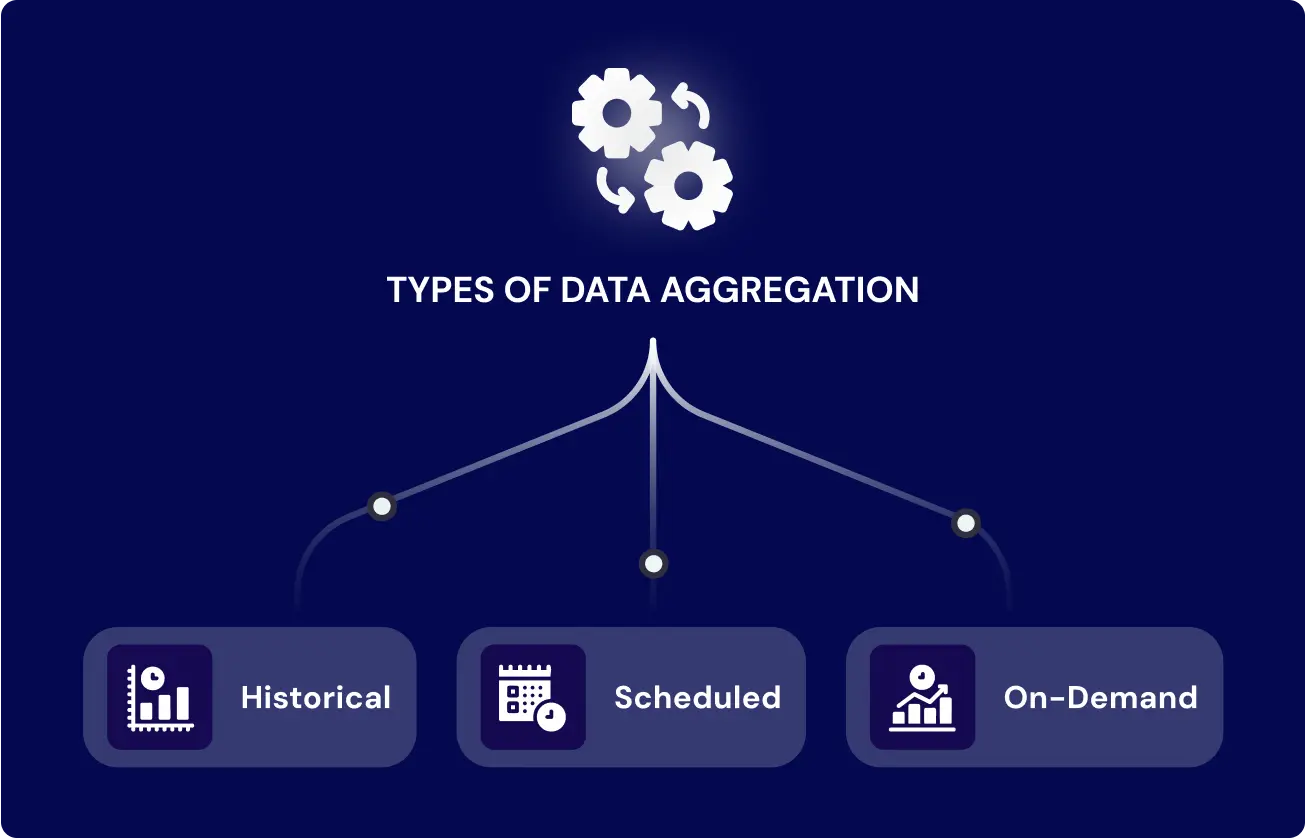

Types of Data Aggregation

Not all data arrives in real time, and not every system needs it to. Depending on the use case, teams may choose from historical, scheduled, or on-demand data collection solutions. Each has tradeoffs in latency, complexity, and completeness.

Here’s how data aggregation frameworks typically handle these three approaches:

Historical Aggregation

Aggregates data that already exists. Typically used for long-term trend analysis, model training, or regulatory auditing.

Example: A retail brand scrapes the past 12 months of public listings across marketplaces to track product lifecycle and pricing trends. This forms a time-series aggregation layer in their BI platform. Our case study on digital analytics for Kimberly-Clark provides a deep dive into this kind of use case.

Scheduled Aggregation

Gathers data at defined intervals—hourly, daily, weekly.

Useful when data sources don’t change frequently or when real-time updates aren’t needed.

Example: A logistics provider pulls route pricing and fuel cost data every night from 8 sources. This builds a stable daily snapshot for BI users by 7 am each morning. We have a specific case study on delivery pricing scraping that demonstrates this capability.

Real-Time (On-Demand) Aggregation

Pulls data live, at query time, or near real-time using streams or event triggers.

Typical use cases:

- Fraud detection systems

- Trading platforms

- Marketplaces where content decays fast (e.g., hotel listings, auctions)

Choosing the Right Approach

Each method has tradeoffs. Historical aggregation offers volume but not freshness. Scheduled pipelines reduce load but can miss spikes that happen between runs. Real-time aggregation delivers the freshest data but adds infrastructure cost and operational risk.

In well-architected custom data aggregation solutions, we often combine all three layers for reliability, cost-efficiency, and speed.
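One way to combine the layers is to route each source by how fresh its consumers need it and whether the use case needs history. The `SourceProfile` fields and the 15-minute cut-off below are hypothetical values chosen for illustration; a real system would tune them per use case and volatility.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    HISTORICAL = "historical"
    SCHEDULED = "scheduled"
    REAL_TIME = "real_time"


@dataclass
class SourceProfile:
    name: str
    max_staleness_minutes: float  # how stale the data may be when consumers read it
    needs_backfill: bool          # does the use case need past data (trends, model training, audits)?


REAL_TIME_THRESHOLD_MINUTES = 15  # hypothetical cut-off; tune per use case


def choose_modes(profile: SourceProfile) -> list[Mode]:
    """Pick which aggregation layers a source should feed (illustrative rule)."""
    modes: list[Mode] = []
    if profile.needs_backfill:
        modes.append(Mode.HISTORICAL)   # backfill past data once, then keep it current
    if profile.max_staleness_minutes <= REAL_TIME_THRESHOLD_MINUTES:
        modes.append(Mode.REAL_TIME)    # fast-decaying content: streams or event triggers
    else:
        modes.append(Mode.SCHEDULED)    # slower-moving data: hourly or nightly snapshots
    return modes
```

Under these assumptions, a nightly courier-pricing feed with a 24-hour freshness budget and a need for history lands in historical plus scheduled, while a hotel-availability feed with a 5-minute budget lands in real time.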

Core Principles of Resilient Aggregation Systems

A working data aggregation framework is a production-grade system built on foundational principles that ensure it scales with the business.

Foundational Principles

These principles ensure the system remains reliable and adaptable:

- Modularity: Each stage—ingestion, transformation, and delivery—must be independently deployable and replaceable. This enables faster iteration, isolated testing, and zero-downtime updates.

- Schema Evolution: The system must support version-controlled schemas so that changes in source data structure don’t immediately break downstream pipelines.

- Observability: Logs, traces, metrics, and alerts are integrated from the start so every failure is immediately visible and actionable.

- Jurisdictional Compliance: Field-level masking and routing by region are embedded in the pipeline to enforce privacy regulations like GDPR and CCPA automatically.

- Scalability: The system must support both vertical and horizontal growth. Whether growth comes from data volume, team usage, or source complexity, it should never require a core rewrite.

- Error Recovery: Fallbacks, retries, and rollback-safe logic guard against upstream volatility. Resilience is built into every pipeline segment by default.

- Security and Access Control: Role-based access control and immutable logs are used to maintain trust boundaries for sensitive data.

- Transparency: Every transformation is testable, versioned, and auditable. This eliminates black-box behavior and ensures data lineage is explainable to business and legal teams.
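Schema evolution and transparency both depend on noticing drift before it reaches dashboards. A minimal sketch, assuming each source has a versioned expected schema stored as a field-to-type map (the `EXPECTED_SCHEMAS` registry and its contents are hypothetical): incoming records are compared against that schema, and missing fields, new fields, and type changes are reported instead of silently passed through.

```python
from typing import Any

# Hypothetical registry: (source, schema version) -> expected field types.
EXPECTED_SCHEMAS: dict[tuple[str, int], dict[str, type]] = {
    ("marketplace_a", 2): {"sku": str, "price": float, "currency": str, "in_stock": bool},
}


def detect_drift(source: str, version: int, record: dict[str, Any]) -> dict[str, list[str]]:
    """Compare one record against its expected schema version and describe any drift."""
    expected = EXPECTED_SCHEMAS[(source, version)]
    missing = sorted(f for f in expected if f not in record)
    unexpected = sorted(f for f in record if f not in expected)
    type_changes = sorted(
        f"{f}: expected {expected[f].__name__}, got {type(record[f]).__name__}"
        for f in expected
        # Nulls are left to validation rules; only present, non-null values are type-checked.
        if f in record and record[f] is not None and not isinstance(record[f], expected[f])
    )
    return {"missing": missing, "unexpected": unexpected, "type_changes": type_changes}


def has_drift(report: dict[str, list[str]]) -> bool:
    return any(report.values())
```

In a pipeline, a non-empty report would block promotion to the next stage and raise an alert; a deliberate upstream change would be handled by registering a new schema version rather than editing the old one.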

How Data Aggregation Frameworks Work

Most people think data integration for enterprises means joining tables from a few APIs. In production systems, that logic breaks fast. Real frameworks must handle unreliable sources, shifting layouts, schema mismatches, and legal constraints—all without breaking the output pipeline.

A custom data aggregation framework solves this with a modular architecture that breaks the job into three distinct layers: ingestion, transformation, and delivery.

Ingestion: Collecting Data from Diverse Sources

Data doesn’t always come from structured APIs. Aggregation frameworks must ingest:

- Dynamic websites with JavaScript rendering

- Static formats like CSV, XLS, and PDF

- Unstructured HTML and metadata

- Internal databases and third-party tools

- REST and GraphQL APIs with custom logic

Instead of hardcoding one parser per source, mature systems rely on reusable connector logic, retry mechanisms, rotating proxies for scraping, and fallback strategies. These keep ingestion resilient even when upstream sources change.
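The retry-and-fallback pattern can be sketched without any scraping library. This is a hedged illustration: connectors are plain zero-argument callables (hypothetical stand-ins for an API client, a headless-browser fetch, or a cached snapshot), and the backoff parameters are arbitrary. Each connector is retried with exponential backoff before the next one in the chain is tried.

```python
import time
from typing import Any, Callable, Sequence

Connector = Callable[[], Any]  # hypothetical: any zero-argument fetch function


class AllConnectorsFailed(Exception):
    """Raised when every connector in the fallback chain has been exhausted."""


def fetch_with_fallback(
    connectors: Sequence[tuple[str, Connector]],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[str, Any]:
    """Try connectors in order; retry each with exponential backoff before falling back.

    Returns (connector_name, payload) from the first connector that succeeds.
    """
    errors: list[str] = []
    for name, connector in connectors:
        for attempt in range(attempts):
            try:
                return name, connector()
            except Exception as exc:  # production code should retry only transient errors
                errors.append(f"{name} attempt {attempt + 1}: {exc!r}")
                if attempt < attempts - 1:
                    sleep(base_delay * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
    raise AllConnectorsFailed("; ".join(errors))
```

Injecting `sleep` keeps the function testable. A production version would typically also add jitter and distinguish permanent errors (such as an HTTP 404) from transient ones, so it does not waste retries on requests that can never succeed.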

Transformation: Cleaning and Preparing the Data

Once ingested, raw data must be turned into something analytics-ready. This involves:

- Cleaning: removing layout noise, junk fields, formatting artifacts

- Deduplication: identifying and eliminating overlapping records

- Normalization: aligning inconsistent formats and naming schemes

- Enrichment: adding classifications, cross-source context, or NLP insights

- Validation: ensuring schema compliance, field presence, and referential integrity

Together, these steps form a structured ETL pipeline, grounded in standard data warehousing concepts, that filters out inconsistencies before they affect downstream analytics.
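The transformation steps can be chained as small, independently testable functions. The sketch below is assumption-laden: the field names (`sku`, `price`, `currency`), the cleaning rules, the case-insensitive SKU dedup key, and the rule-based price band (standing in for a real classification model) are all hypothetical.

```python
import re
from typing import Any, Iterable

Record = dict[str, Any]


def clean(record: Record) -> Record:
    """Collapse whitespace and layout noise in strings; drop empty fields."""
    out: Record = {}
    for key, value in record.items():
        if isinstance(value, str):
            value = re.sub(r"\s+", " ", value).strip()
        if value not in (None, ""):
            out[key] = value
    return out


def normalize(record: Record) -> Record:
    """Align naming and formats: lower-case keys, upper-case currency codes, numeric prices."""
    out = {key.strip().lower(): value for key, value in record.items()}
    if "currency" in out:
        out["currency"] = str(out["currency"]).upper()
    if "price" in out:
        try:
            # Assumes a decimal comma or dot and no thousands separators.
            out["price"] = float(str(out["price"]).replace(",", "."))
        except ValueError:
            pass  # leave the raw value; validate() will reject the record
    return out


def validate(record: Record) -> bool:
    """Check the fields downstream consumers depend on."""
    price = record.get("price")
    return isinstance(record.get("sku"), str) and isinstance(price, float) and price >= 0


def enrich(record: Record) -> Record:
    """Add a derived label (hypothetical rule standing in for a classification model)."""
    return {**record, "price_band": "premium" if record["price"] >= 100 else "standard"}


def transform(records: Iterable[Record]) -> tuple[list[Record], list[Record]]:
    """Clean -> normalize -> validate -> deduplicate -> enrich. Returns (valid, rejected)."""
    seen: set[str] = set()
    valid: list[Record] = []
    rejected: list[Record] = []
    for raw in records:
        record = normalize(clean(raw))
        if not validate(record):
            rejected.append(record)
            continue
        key = record["sku"].upper()  # hypothetical dedup key: case-insensitive SKU
        if key in seen:
            continue  # duplicate of a record already kept
        seen.add(key)
        valid.append(enrich(record))
    return valid, rejected
```

The order is one reasonable choice among several: deduplicating only records that passed validation means a malformed first copy cannot shadow a good later one.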

Delivery: Serving Structured and Compliant Outputs

The final layer turns processed data into usable output. This might mean:

- Loading datasets into BI dashboards (Tableau, Power BI, Metabase)

- Passing records to internal ML systems

- Syncing with CRMs or ERPs

- Exposing JSON or CSV downloads via API

The architecture often relies on an event bus (like RabbitMQ) to decouple modules, allowing for isolated testing and zero-downtime updates.

Why Choose Custom Vs Ready-Made Aggregation

Off-the-shelf aggregation tools can be useful when you have one or two predictable data sources and little is at stake if something breaks. But for enterprise workflows, their limitations quickly become bottlenecks.

Generic Tools Aren’t Built for Drift

Most ready-made platforms rely on static logic: fixed field maps, hardcoded selectors, and limited retry logic. They often fail silently when:

- Source structure changes (new HTML, API versioning)

- Response logic varies by region or language

- More than one feed requires merging or deduplication

And when they fail, they don’t explain why. You’re left debugging outputs without context or traceability.

Custom Systems Adapt by Design

A custom data aggregation framework works differently. It’s built around your infrastructure, sources, and compliance constraints. It doesn’t assume what your data should look like—it confirms it.

In practice, this means:

- Schema mapping is version-controlled and extensible

- Field validation is contextual, not generic

- New sources or endpoints can be added without breaking the pipeline

- QA logic is tied to actual use cases, not placeholder datasets
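Version-controlled mapping that lets new sources plug in without touching the pipeline can be as simple as a registry keyed by source and version. This is a sketch under assumptions: the source names, field names, and registry shape are hypothetical; in practice the mappings would live in a repository and be reviewed like code.

```python
from typing import Any

# Hypothetical mappings: (source, version) -> {source field: canonical field}.
MAPPINGS: dict[tuple[str, str], dict[str, str]] = {
    ("shop_a", "v1"): {"id": "sku", "cost": "price", "cur": "currency"},
    ("shop_a", "v2"): {"productId": "sku", "price": "price", "currencyCode": "currency"},
    ("courier_b", "v1"): {"route": "route_id", "fee_eur": "price"},
}


def register_mapping(source: str, version: str, mapping: dict[str, str]) -> None:
    """Adding a source or version is a new entry; existing versions are never edited."""
    key = (source, version)
    if key in MAPPINGS:
        raise ValueError(f"{source} {version} already registered; publish a new version instead")
    MAPPINGS[key] = dict(mapping)


def to_canonical(source: str, version: str, payload: dict[str, Any]) -> dict[str, Any]:
    """Rename source fields to canonical names; unmapped fields are kept under 'extra'."""
    mapping = MAPPINGS[(source, version)]
    record: dict[str, Any] = {"_source": source, "_schema_version": version}
    extra: dict[str, Any] = {}
    for field, value in payload.items():
        if field in mapping:
            record[mapping[field]] = value
        else:
            extra[field] = value  # kept, not dropped, so nothing disappears silently
    if extra:
        record["extra"] = extra
    return record
```

Because old versions stay in the registry, records collected under `v1` can still be replayed after a source moves to `v2`, and each output carries the schema version it was mapped with.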

You don’t adjust your process to fit the tool. The tool is built around your process.

Lower Maintenance, Faster Change Cycles

When your aggregation logic is purpose-built, you don’t need workarounds or patch layers. You can:

- Ship updates faster

- Track failures with precision

- Scale across markets without creating tool fragmentation

And most importantly, you own the logic. You can test it, tune it, and evolve it as your business evolves.

What Challenges Custom Aggregation Solves

| Problem You Face | How Our Framework Fixes It |
| --- | --- |
| APIs silently fail on layout changes | Schema drift is auto-detected and versioned |
| You pay per feed or row | Connect unlimited sources at no extra cost |
| Compliance is retrofitted late | Jurisdiction-level rules are built into pipelines |
| QA is manual and error-prone | Validators, logs, and alert rules run by default |
| Vendor tools force format constraints | You control schemas, logic, and delivery flow |

Stop patching third-party tools. Start shipping data pipelines that match how you work.

Why Legal-Grade Compliance Needs Custom Architecture

Out-of-the-box tools can’t guarantee auditability. They’re built for extraction, not regulation. But in regulated industries, that’s a risk you can’t afford.

Only an owned data aggregation solution can embed compliance at the source—field by field, record by record.

Our framework embeds compliance into the pipeline at every stage:

- PII detection and masking by field and region

- Role-based access to sensitive fields

- Immutable logs for every query, transform, or export

- Infrastructure routing by jurisdiction (e.g., EU-only data stays in the EU)

- SOC 2- and GDPR-ready design from day one
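Field-level masking and jurisdiction routing can be expressed as a declarative policy applied to each record before storage. A minimal sketch, assuming placeholder policies (which fields are masked per jurisdiction, which region each jurisdiction maps to) and a salted SHA-256 hash as the masking method. The field lists are illustrative, not a legal classification; real policies come from legal review, and under GDPR hashed identifiers are pseudonymized, not anonymized, data.

```python
import hashlib
from typing import Any

# Placeholder policy, not a legal classification: fields masked per jurisdiction.
PII_FIELDS: dict[str, set[str]] = {
    "EU": {"email", "phone", "full_name", "ip_address", "postal_code"},
    "US-CA": {"email", "phone", "full_name", "ip_address"},
}
# Hypothetical routing: jurisdiction -> region where the record may be stored and processed.
STORAGE_REGION: dict[str, str] = {"EU": "eu-central", "US-CA": "us-west"}


def mask_value(value: Any, salt: str) -> str:
    """Salted SHA-256 digest: pseudonymizes the value but keeps it joinable across records."""
    return hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()[:16]


def apply_policy(record: dict[str, Any], jurisdiction: str, salt: str) -> tuple[str, dict[str, Any]]:
    """Mask PII for the record's jurisdiction and return the region it must stay in."""
    if jurisdiction not in STORAGE_REGION:
        # Fail closed: records without a policy are never routed by default.
        raise ValueError(f"no policy for jurisdiction {jurisdiction!r}")
    pii = PII_FIELDS[jurisdiction]
    masked = {
        field: mask_value(value, salt) if field in pii and value is not None else value
        for field, value in record.items()
    }
    return STORAGE_REGION[jurisdiction], masked
```

Failing closed on an unknown jurisdiction is the key design choice: a new market cannot leak data into the wrong region just because nobody wrote its policy yet.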

If you can’t trace where your data came from or how it was processed, regulators will ask why. We give you answers.

These limitations are only the start. Let’s look at what custom data aggregation actually unlocks.

Benefits of Custom Data Aggregation

A custom data aggregation framework delivers far more than flexibility—it builds structural resilience, cost control, and legal confidence at scale.

You Own the Logic—Not the Vendor

In custom setups, your team controls exactly how data is pulled, processed, and delivered. This eliminates black-box behavior and aligns outputs with evolving needs.

It Scales Without Breaking

Unlike rigid platforms, a modular framework doesn’t fall apart when new sources or formats appear. Full-scope big data services require this level of agility.

Fewer Workarounds, Less Firefighting

With custom data aggregation, your system doesn’t collapse every time a website changes or a field goes missing.

Compliance Isn’t an Afterthought

Our framework has helped enterprise clients pass GDPR, CCPA, and SOC 2 audits by embedding PII filtering, field-level lineage, and immutable logging into every pipeline.

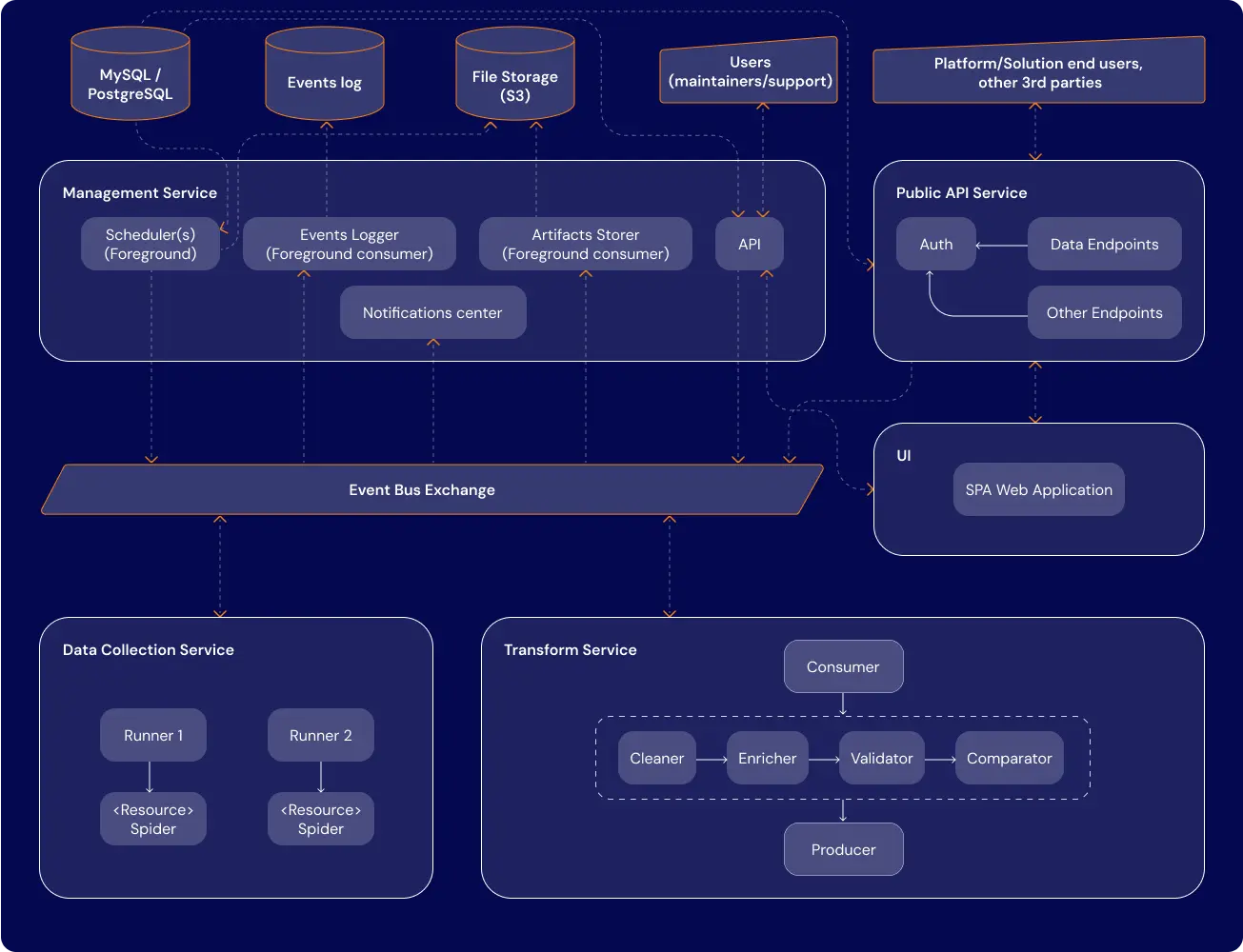

Framework Architecture Overview

The diagram illustrates how our event-driven architecture orchestrates ingestion, transformation, validation, and delivery—modular, scalable, and audit-ready.

Each module is decoupled yet synchronized via the event bus exchange, enabling zero-downtime updates, flexible deployment, and per-component observability.

This isn’t just about ingestion. It’s a system that guarantees:

- Consistency of data across modules

- Adaptability to source-level change

- Legal traceability from source to output

- Configurable performance by volume, speed, or frequency

Below is how we build that.

1. Event Bus: The Routing Core

At the center is RabbitMQ, used to propagate all tasks and events.

- Every module publishes or subscribes via strict contracts

- No hardcoded calls—just schema-based events

- Modules stay decoupled but synchronized

This lets us replace, scale, or isolate modules with zero downstream breakage.
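The contract idea (modules publish and subscribe to named, schema-checked events instead of calling each other) can be shown with an in-memory stand-in. This sketch does not use RabbitMQ or any of its client libraries; the `EventBus` class, the event names, and the required-field contracts are hypothetical, chosen only to show how contracts keep publishers and subscribers decoupled.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]

# Hypothetical contracts: event type -> fields every payload must carry.
CONTRACTS: dict[str, set[str]] = {
    "page.collected": {"source", "url", "fetched_at", "body"},
    "record.validated": {"source", "record_id", "schema_version"},
}


class ContractViolation(Exception):
    pass


class EventBus:
    """In-memory stand-in for a message broker such as RabbitMQ (illustration only)."""

    def __init__(self, contracts: dict[str, set[str]]) -> None:
        self._contracts = contracts
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        if event_type not in self._contracts:
            raise ContractViolation(f"unknown event type {event_type!r}")
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict[str, Any]) -> int:
        """Check the payload against its contract, then deliver it; returns the handler count."""
        required = self._contracts.get(event_type)
        if required is None:
            raise ContractViolation(f"unknown event type {event_type!r}")
        missing = required - set(payload)
        if missing:
            raise ContractViolation(f"{event_type} missing fields: {sorted(missing)}")
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)  # publisher never knows which modules consume the event
        return len(handlers)
```

With a real broker, the same contract check would typically run in the publisher before sending and again in each consumer, so a malformed message is rejected at the boundary rather than deep inside a downstream module.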

2. Modular API System

Each API serves a single purpose:

- Management API: Controls crawls, config, and system ops

- Data API: Serves curated outputs, JSON/CSV formats

- Auth API: Role-based access and token control

- API Gateway: Entry point that hides internal complexity

Result: clean interfaces, permissioned access, and no manual overrides.

3. Collection + Transformation as Services

Ingestion supports HTML, APIs, PDFs, and file dumps. The processing chain includes:

- Cleaning: layout noise, rogue characters, null fields

- Schema Mapping: unify formats, align taxonomies

- Enrichment: NLP models, cross-source lookups, external APIs

- Validation: rule checks, QA snapshots, trace logs

Each stage is containerized and project-configurable.

4. Storage Is Local, Not Centralized

Instead of relying on a single central warehouse, each module manages its own storage, tuned to its role:

- High-speed reads for live dashboards

- Snapshot-ready data for BI

- Segment isolation for sensitive inputs

This reduces latency, increases modularity, and avoids format bottlenecks.

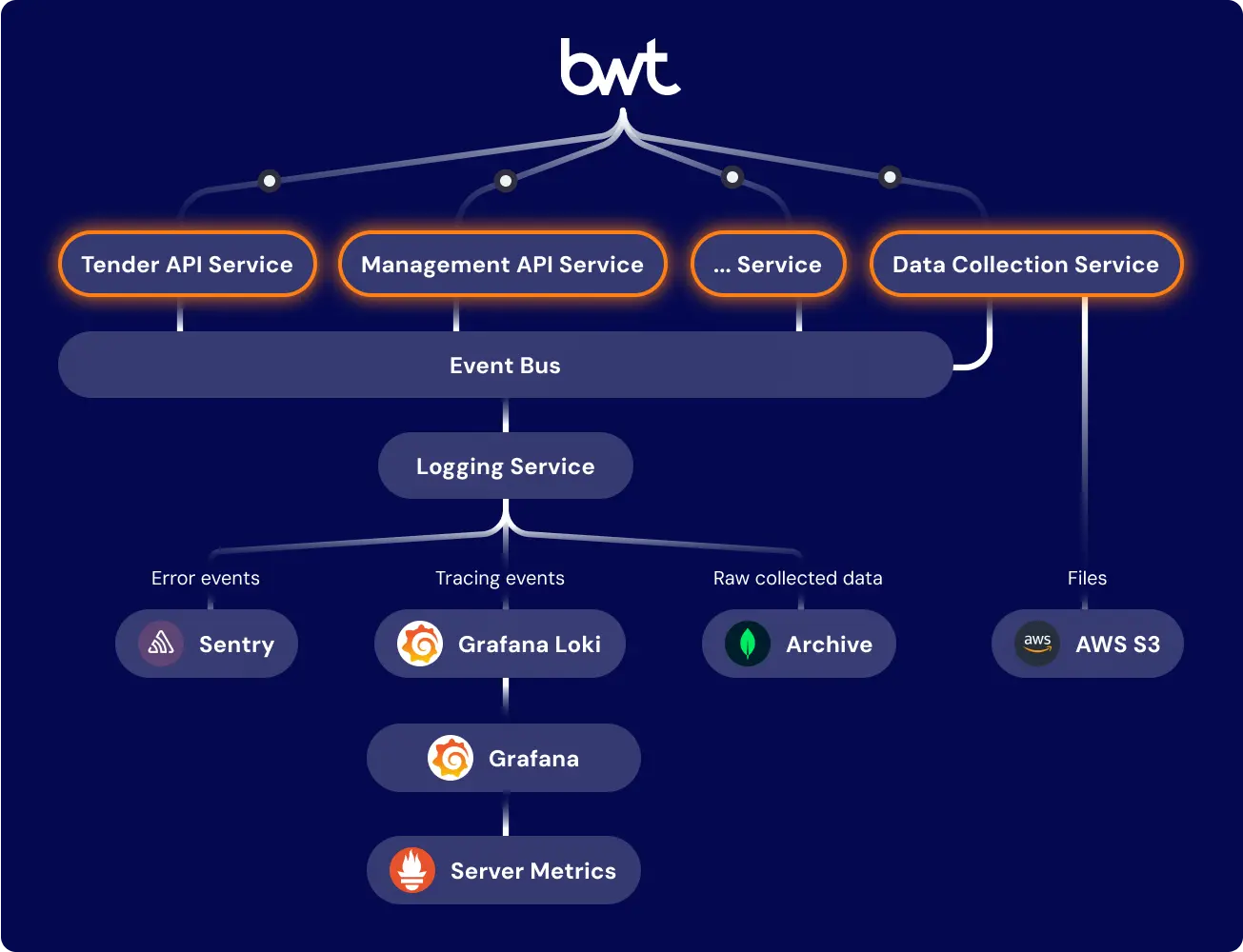

5. Observability Is Built-In

We use Grafana and Loki for logs and dashboards, and Sentry for error capture. Teams can:

- View system metrics in real time

- Monitor collection lag, API usage, and error trends

- Receive alerts tied to source status or schema change

Observability is not an afterthought—it’s a design requirement.
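Alerts tied to source status usually start from a simple freshness check. A sketch, assuming each source reports the timestamp of its last successful collection and has a hypothetical lag budget; it logs one JSON object per breach, a line format that log aggregators such as Loki can ingest and parse. It does not call the Grafana, Loki, or Sentry APIs.

```python
import json
import logging
from datetime import datetime, timedelta
from typing import Any

logger = logging.getLogger("aggregation.observability")

# Hypothetical lag budgets: how long each source may go without a successful collection.
LAG_BUDGET: dict[str, timedelta] = {
    "hotel_feed": timedelta(minutes=5),
    "courier_rates": timedelta(hours=24),
}


def check_collection_lag(last_success: dict[str, datetime], now: datetime) -> list[dict[str, Any]]:
    """Return (and log) one alert per source that is missing or older than its budget."""
    alerts: list[dict[str, Any]] = []
    for source, budget in LAG_BUDGET.items():
        last = last_success.get(source)
        lag = now - last if last is not None else None
        if lag is None or lag > budget:
            alert = {
                "event": "collection_lag_exceeded",
                "source": source,
                "lag_seconds": None if lag is None else int(lag.total_seconds()),
                "budget_seconds": int(budget.total_seconds()),
            }
            logger.warning(json.dumps(alert))  # one JSON object per line for the log pipeline
            alerts.append(alert)
    return alerts
```

A source with no recorded success at all is treated as a breach, so a connector that never ran is as visible as one that stalled.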

6. GitOps for Deployment and CI/CD

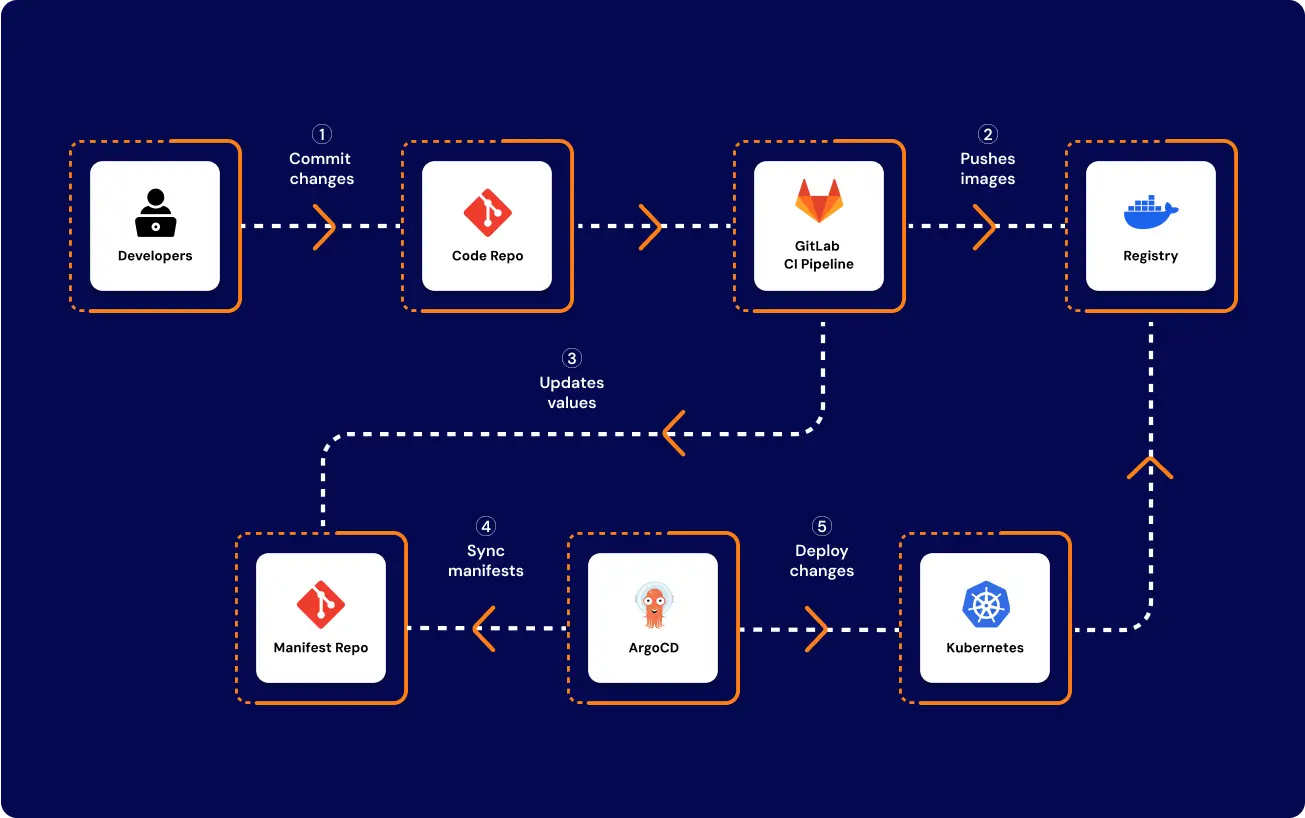

This CI/CD diagram illustrates how GitOps pipelines with ArgoCD and GitLab accelerate deployments, reduce ops workload, and ensure consistency across environments.

Deployment is orchestrated with Terraform, Kubernetes, ArgoCD, and GitLab CI, which together provide:

- Push-to-deploy workflows

- Environment parity (dev/stage/prod)

- Instant rollback and zero-downtime updates

This eliminates manual ops and accelerates delivery.

7. Optional, Not Monolithic

You can trim the system for leaner deployments:

- Disable UI, rely on API only

- Merge the transform and ingestion stages

- Replace Auth with static firewall controls

The core always remains: event flow, validation, and delivery.
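Trimming the system can be modeled as planning a deployment from a per-project configuration in which optional components may be dropped or merged but the core cannot. A sketch under assumptions: the component names and the `plan_components` helper are hypothetical, `auth` stands for the Auth API (which a lean setup replaces with static firewall controls), and each component is just a name rather than a containerized service.

```python
# Hypothetical deployable components.
COMPONENTS = ["ui", "auth", "ingest", "transform", "event_bus", "validate", "deliver"]
CORE_COMPONENTS = {"event_bus", "validate", "deliver"}  # event flow, validation, delivery always remain


def plan_components(disabled: set[str], merge_ingest_transform: bool = False) -> list[str]:
    """Return the components a trimmed deployment runs, refusing to drop the core."""
    unknown = disabled - set(COMPONENTS)
    if unknown:
        raise ValueError(f"unknown components: {sorted(unknown)}")
    blocked = disabled & CORE_COMPONENTS
    if blocked:
        raise ValueError(f"core components cannot be disabled: {sorted(blocked)}")
    plan = [c for c in COMPONENTS if c not in disabled]
    if merge_ingest_transform and "ingest" in plan and "transform" in plan:
        # Run collection and transformation in one service for leaner deployments.
        plan[plan.index("ingest")] = "ingest+transform"
        plan.remove("transform")
    return plan
```

Encoding the core as a guard, rather than as a convention, means a misconfigured project fails at planning time instead of shipping without validation.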

Compliance & Governance by Design

In high-stakes industries, compliance isn’t optional; it’s operational. Whether you rely on AI-driven data scraping or a traditional data extraction services company, governance has to be embedded from the start.

Hidden Risk in Uncontrolled Pipelines

Generic aggregation tools often skip compliance logic entirely. That leads to:

- Personal data leakage across regions

- No visibility into how fields are used or exposed

- No audit trail for transformations or errors

- Delays when responding to legal inquiries or regulators

These oversights create legal risk, not just for the data team but for the business.

Field-Level Controls and Jurisdictional Logic

Our data engineers apply governance policies at the earliest stages of aggregation, not just per source but per record and per field.

We’ve already helped enterprise clients pass GDPR, CCPA, and SOC 2 audits by embedding the following into every pipeline:

- PII filtering and masking by jurisdiction

- Field-level lineage: track where every value came from

- Immutable logging: every query, transform, and export is recorded

- Role-based access control: only authorized apps or users can access sensitive fields

- Policy-based routing: e.g., EU data stored and processed in EU infrastructure only
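Immutable logging is often implemented as an append-only, hash-chained log: each entry stores the hash of the previous one, so any later edit breaks the chain and the log becomes tamper-evident. This is a common technique substituted here for illustration; the source does not say how GroupBWT's logs are made immutable, and the `AuditLog` class and its entry fields are hypothetical. The same log doubles as field-level lineage.

```python
import hashlib
import json
from typing import Any


class AuditLog:
    """Append-only, hash-chained record of queries, transforms, and exports (illustrative)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self._entries: list[dict[str, Any]] = []

    @staticmethod
    def _digest(body: dict[str, Any]) -> str:
        # Canonical JSON (sorted keys) so an unchanged entry always hashes the same way.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, action: str, field: str, source: str, detail: dict[str, Any]) -> str:
        """Record one step and chain it to the previous entry; returns the new hash."""
        prev = self._entries[-1]["hash"] if self._entries else self.GENESIS
        body = {
            "seq": len(self._entries),
            "action": action,
            "field": field,
            "source": source,
            "detail": json.loads(json.dumps(detail)),  # private JSON-safe copy
            "prev": prev,
        }
        entry = {**body, "hash": self._digest(body)}
        self._entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute the chain. False means an entry was edited, reordered, or removed
        from the middle; catching a truncated tail requires storing the latest hash elsewhere."""
        prev = self.GENESIS
        for seq, entry in enumerate(self._entries):
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["seq"] != seq or body["prev"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

    def lineage(self, field: str) -> list[dict[str, Any]]:
        """Every recorded step that touched a field, oldest first."""
        return [e for e in self._entries if e["field"] == field]
```

In production, the entries would go to write-once storage and the latest hash would be anchored outside the log, so an auditor can confirm that nothing was rewritten after the fact.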

Nothing is retrofitted later. Everything is auditable by design.

Passed External Audits Without Rework

This framework has supported live systems across healthcare, finance, and public sectors, where audit-readiness is non-negotiable.

One of GroupBWT’s healthcare clients reduced legal review time by 70% by leveraging our per-jurisdiction trace logs. Another passed a government procurement review without additional documentation.

If compliance feels like an afterthought in your current system, it probably is. This model fixes that.

TCO & ROI: Custom vs Off-the-Shelf

Most prebuilt aggregation platforms promise low setup time. But over time, they cost more in rework, licensing, incident handling, and vendor lock-in. Here’s what enterprise teams pay for.

Hidden Costs Compound Fast

What looks “cheap” in year one often leads to:

- Extra dev hours for every new source

- Third-party plugins for deduplication or cleaning

- Support delays when the platform logic fails

- Annual licensing + usage fees with no ownership

- Downtime and data loss from vendor changes

None of this appears on the pricing page, but it shows up in your monthly backlog and budget reports.

Lower TCO Through Custom Ownership

With a custom framework, you control the logic and the cost. We build once, then you adapt, extend, and reuse.

- No per-source fees: connect new endpoints without paying more

- Lower change cost: update logic without rewriting pipelines

- Fewer QA cycles: validators catch schema drift before it breaks anything

- Zero platform lock-in: move infrastructure as needed

This doesn’t just reduce spend. It shortens time-to-update and lets teams launch faster.

It’s one of the clearest benefits of custom data aggregation—full control over cost, speed, and evolution.

60% Drop in Vendor Spend + Incident Losses

One logistics client replaced three tool licenses with a single internal system, cutting platform costs by $80k and reducing failure incidents by 40%.

Another eCommerce team cut the time to integrate a new marketplace feed from 3 weeks to 2 days, with no downtime and no workarounds.

If your aggregation tool needs workarounds for every change, you’re already paying for it.

See How Teams Across Industries Use Custom Aggregation

GroupBWT has implemented this system across multiple verticals. Web scraping in data science is a key capability we bring to these projects.

eCommerce: SKU Sync Across Channels

Challenge: Product teams couldn’t keep price and stock in sync across 7 marketplaces

What we built: Real-time ingestion with 3-minute deltas, normalized across regions and languages

Result: Pricing logic aligned across 4 product lines, reducing mismatch errors by 88%
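Shipping 3-minute deltas instead of full snapshots means comparing each new collection with the previous one and forwarding only additions, removals, and changed fields. A sketch assuming snapshots keyed by (marketplace, SKU) with hypothetical `price` and `stock` fields; it is not the implementation used for this client.

```python
from typing import Any

Key = tuple[str, str]  # (marketplace, sku)
Snapshot = dict[Key, dict[str, Any]]


def compute_delta(previous: Snapshot, current: Snapshot) -> dict[str, list[Any]]:
    """Compare two collection snapshots and return only what changed."""
    added = sorted(k for k in current if k not in previous)
    removed = sorted(k for k in previous if k not in current)
    changed: list[tuple[Key, dict[str, tuple[Any, Any]]]] = []
    for key in sorted(k for k in current if k in previous):
        old, new = previous[key], current[key]
        diff = {
            field: (old.get(field), new.get(field))  # (before, after)
            for field in sorted(set(old) | set(new))
            if old.get(field) != new.get(field)
        }
        if diff:
            changed.append((key, diff))
    return {"added": added, "removed": removed, "changed": changed}
```

Downstream pricing logic then reacts only to the `changed` entries, which keeps each cycle small even when the full catalog is large.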

Finance: Audit-Ready Market Intelligence

Challenge: Analysts lacked traceable feeds to support pricing and risk models

What we built: Daily aggregation from 5 sources with source-level logging and jurisdictional data masking

Result: Cleared internal audit and reduced false signals in dashboards by 32%

Logistics: Delivery Pricing Benchmark

Challenge: Teams were manually benchmarking delivery fees across regions

What we built: Scheduled overnight aggregation from 8 couriers, with full metadata tagging and fallback logic

Result: 96% success rate on daily jobs, pricing triggers delivered by 7:00 AM

Healthcare: Provider Directory Aggregation

Challenge: CMS compliance required full, deduplicated listings with service taxonomy

What we built: Multi-source ingestion with on-the-fly deduplication and specialty classification

Result: Passed third-party audit and integrated with the provider search app in under 2 weeks

Travel: Hotel Listings for Dynamic Offers

Challenge: Hotel partners updated availability and promos too fast for daily sync

What we built: Real-time aggregation with event triggers and alert thresholds

Result: 5-minute refresh cycle, powering live discount offers with 99.9% accuracy

From e-commerce SKUs to healthcare provider listings, our custom data aggregation framework adapts to data chaos, legal rules, and operational urgency.

Public Procurement: EU Tender Aggregation

Challenge: The client was tracking tenders across multiple national procurement portals, but lacked a unified, real-time view. Fragmented scraping scripts, inconsistent formats, and missed deadlines created legal risks and internal friction.

What we built: We deployed a multi-source aggregation pipeline that ingested from TED, regional portals, and open contracting feeds. The system normalized tender formats, enriched with NLP-based classification (CPV codes, deadlines, buyer type), and applied field-level jurisdictional filters per EU and local laws.

Result: The platform now delivers daily synchronized tender data across 27 countries, with alerts for high-value listings and deadline changes.

This allowed the client to transition from a patchwork of scripts to an owned data aggregation solution with full jurisdictional control.

External Market Signals

We helped a global analytics team create a custom data core of external intelligence to track market signals, replacing manual and brittle processes. Another client used our web scraping for automotive market insights to gain a clear view of their market.

Ready for an Owned Data Aggregation Solution?

Whether you’re stuck with a brittle tool, debating an in-house build, or scaling an overgrown MVP, this framework gives you a path forward. With it, you can:

- Replace a failing vendor without starting from scratch

- Rebuild your stack with reusable modules

- Expand pipelines to meet compliance or performance goals

Audit your current aggregation setup with our engineers. We also offer SLA-backed support teams to operate, monitor, and adapt your pipelines in real time. To learn more about GroupBWT and our offerings, contact us.

FAQ

What makes a data aggregation framework “custom”?

A custom framework is purpose-built around your exact data sources, compliance rules, and operational flows. Unlike generic tools, it doesn’t assume what the data looks like; it confirms and enforces structure as it moves through the pipeline. Each stage (ingestion, transformation, validation, and delivery) is a separate module that can be adjusted independently. This makes the system reliable, explainable, and scalable under real-world constraints.

Which aggregation type fits my use case?

It depends on how fresh, complete, and reactive your data needs to be.

Historical aggregation works when you’re training models, analyzing trends, or migrating legacy data—volume matters, not speed.

Scheduled aggregation suits daily reports, marketplace syncs, or logistics updates—data isn’t urgent, but must stay consistent.

Real-time aggregation is for fraud detection, auctions, or travel listings, where every second of latency costs money or accuracy.

In practice, resilient systems combine all three to balance freshness, load, and cost—custom logic routes data based on priority and volatility.

Why do most off-the-shelf aggregation tools fail at scale?

They rely on rigid schemas, static logic, and fixed field maps that break when websites or APIs change. These tools are fine for simple scrapes, but collapse when you need jurisdictional logic, audit trails, or merging of non-uniform sources. They also lack version control, role-based access, and field-level masking, leaving you exposed to compliance risk. A production-grade system must evolve with your inputs, not break on change.

What is an event-based data-driven application?

An event-based data-driven application is a modular system that reacts to real-time events—like a price change, new record, or user action—by triggering automated workflows. Each event becomes a data packet that flows through ingestion, transformation, validation, and delivery layers.

Unlike linear scripts or batch logic, this architecture ensures asynchronous, decoupled execution, which scales without blocking and adapts without rewrites.

These applications rely on an event bus (e.g., RabbitMQ or Kafka) to coordinate services, maintain consistency, and guarantee message delivery across modules.

The result: data systems that respond to change instantly, stay resilient under pressure, and meet both operational and legal requirements in dynamic environments.

How does your framework ensure compliance with GDPR and similar laws?

Compliance is embedded from the start through field-level masking, per-jurisdiction routing, immutable logs, and permissioned access. Every action is recorded, every export is traceable, and every sensitive field has controls based on geography and role. We don’t “add compliance later”—it’s part of how data flows from source to output. This architecture has already passed audits in finance, healthcare, and public procurement sectors.

What’s the ROI of building a custom aggregation pipeline?

You reduce costs tied to vendor fees, incidents, and manual QA. You gain control over every step—schema evolution, pipeline logic, compliance logic—without waiting on platform support. You also reduce the time to onboard new sources or markets, accelerating launches and BI availability. Clients have seen 60% drops in tool spend and 3x faster update cycles after switching to custom aggregation.