At GroupBWT, we work with pricing teams whose offers are competitive but whose big data breaks before their strategy does.

Most pricing teams don’t lose deals because their offers are weak.

They lose because they react too late, based on stale, partial, or misaligned data.

The challenge isn’t access. Prices are online. Listings are public.

The real issue is that scraping competitor prices isn’t about collecting numbers—it’s about extracting context, resolving taxonomy, and operating inside legal boundaries at scale.

But that’s not how most teams approach it.

Scripts run weekly on 5–10 domains. Classification is shallow, and currencies mismatch. Product bundles get misread.

Outliers are flagged manually—until someone patches the logic again next quarter.

This article reframes competitor price scraping as a systems decision:

Not to gather data, but to stay aligned, adaptive, and legally compliant in dynamic markets.

Because in pricing, latency costs margin. And speed without structure corrupts outcomes.

Why Do Most Competitor Price Scraping Strategies Break at Scale?

The pricing war isn’t lost in reports. It’s lost upstream, when scripts collect mismatched SKUs and misread discount structures, and no one notices until the margin vanishes.

Most teams think they’re tracking the market. In reality, they’re reacting to distorted signals built on logic that hasn’t been reviewed since the last fiscal year.

True strategic oversight requires a comprehensive approach to benchmarking and competitive analysis that uses structured data, not assumptions.

What Happens When Pricing Scripts Don’t Account for Context?

Most teams start with good intentions: a script (a few lines of code, a target site, a scheduler) and a promise to revisit it “if something breaks.”

But here’s what breaks first:

- Products aren’t matched by logic—they’re matched by string.

A red t-shirt with free shipping gets mapped to a different SKU because the title uses “tee.”

- Discount logic is ignored.

The scraper sees $59.99 but misses the “2 for $100” promo, two lines down in a dynamic module.

- Currency conversion goes unchecked.

The pipeline assumes USD. The competitor switched to region-based pricing last month.
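The first failure above, matching by string, is easy to illustrate. The sketch below assumes a hand-maintained synonym map and noise-word list (`SYNONYMS` and `NOISE` are hypothetical) and compares titles as normalized token sets instead of raw strings. It is a minimal illustration of the idea, not the matching logic of any particular system.

```python
import re

# Hypothetical synonym map: collapses vendor-specific wording onto one canonical token.
SYNONYMS = {"tee": "tshirt", "t-shirt": "tshirt", "tshirt": "tshirt"}

# Words that describe the offer, not the product (assumed list).
NOISE = {"free", "shipping", "new", "sale"}


def normalize_title(title: str) -> frozenset[str]:
    """Lowercase, tokenize, map synonyms, and drop offer-related noise words."""
    tokens = re.findall(r"[a-z0-9]+(?:-[a-z0-9]+)*", title.lower())
    canonical = (SYNONYMS.get(tok, tok) for tok in tokens)
    return frozenset(tok for tok in canonical if tok not in NOISE)


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    """Share of tokens the two titles have in common (0.0 to 1.0)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def same_product(title_a: str, title_b: str, threshold: float = 0.8) -> bool:
    """Treat two listings as the same product when their normalized tokens mostly overlap."""
    return jaccard(normalize_title(title_a), normalize_title(title_b)) >= threshold
```

With this, “Red T-Shirt, Free Shipping” and “Red Tee” match, while “Red Tee” and “Blue Tee” do not. Token overlap is deliberately crude: it still cannot tell a bundle from a single item, which is why the article argues for explicit attribute and price-type fields.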

What you’re left with isn’t pricing intelligence. It’s a spreadsheet of assumptions that only look accurate at first glance.

This isn’t a scraping failure. It’s a systems design failure.

Why Weekly Scrapes and One-Time Scripts Quietly Create Pricing Drift

Speed isn’t the problem. False certainty is.

When a system doesn’t break visibly, it’s easy to assume it’s working. But behind that quiet interface, the data starts shifting—and the strategy shifts with it.

Here’s how it plays out:

| System Behavior | Strategic Consequence |
| --- | --- |
| Weekly scraping frequency | Missed flash sales, bundles, and dynamic offers |
| Static HTML selector | Broken logic when page layouts update silently |
| Manual override of outliers | Analyst time wasted on edge cases that should’ve been modeled |
| One-size-fits-all taxonomy | Competitor SKUs misclassified across segments |

These issues don’t surface in one report. They accumulate silently until pricing decisions start contradicting revenue goals.

The fix isn’t to scrape more. It’s to scrape continuously and smartly, with the correct structural logic.

What Fails When Legal and Product Aren’t Aligned on Price Monitoring?

It’s easy to assume that because pricing is public, scraping it is low-risk.

That assumption fails when the legal team is looped in too late, or never. By then, violations aren’t theoretical. They’re logged, flagged, and sometimes escalated.

Common violations teams overlook:

- Ignoring robots.txt directives (or assuming they don’t matter)

- Exceeding crawl limits without proper rotation

- Collecting user-level metadata (e.g., cookie-based location pricing) without filtering

When pricing teams operate in silos, they unintentionally open risk fronts the business wasn’t prepared to defend.

How Do Modern Competitor Price Scraping Systems Work Without Breaking?

Most scraping failures aren’t caused by bad code.

They’re caused by assumptions: that pages don’t change, that categories stay static, and that broken selectors throw errors.

Real systems are built for change.

They don’t scrape harder. They scrape smarter, with memory, governance, and logic designed to bend without breaking.

What Layers Make a Scraping System Resilient?

Every modern pricing intelligence system that holds at scale shares five critical traits:

- Session logic with context memory

Each run tracks its own flow—what was clicked, what loaded, and what failed. There is no guesswork, and there are no reruns.

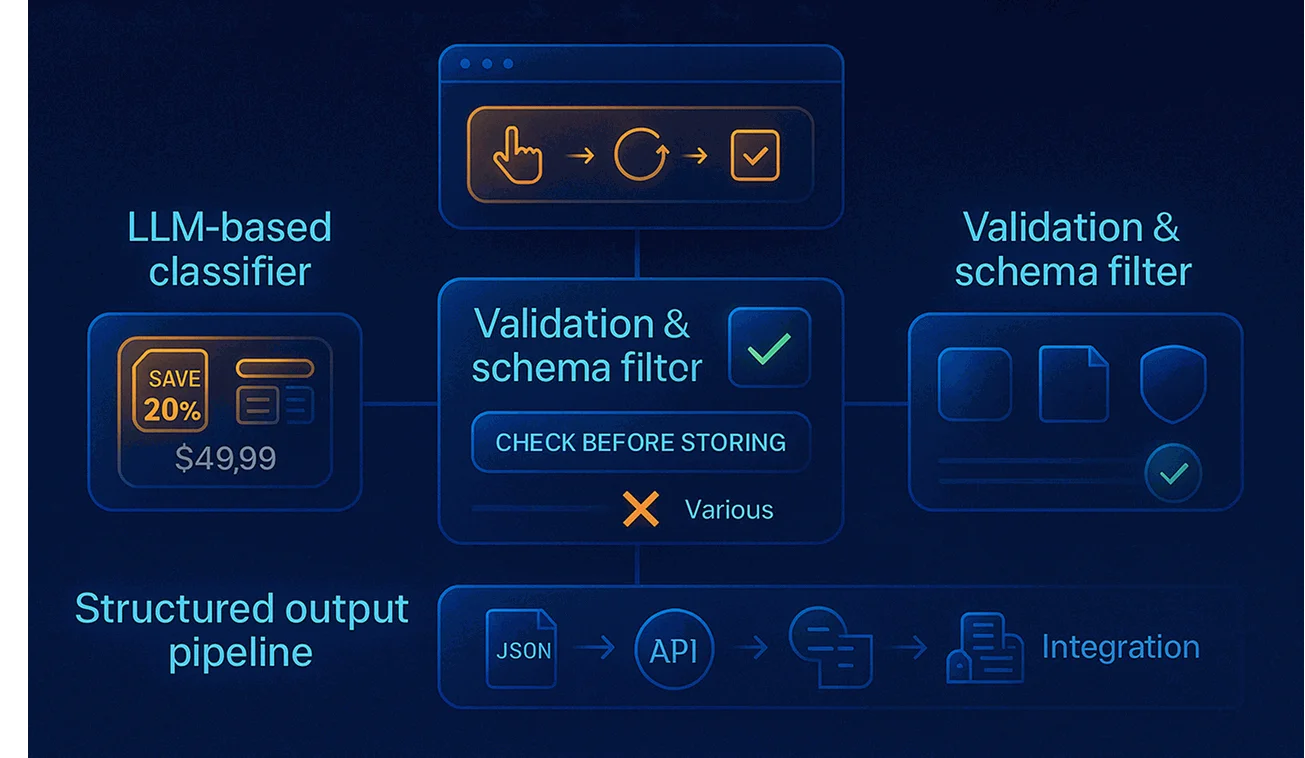

- Classifier trained on pricing logic.

An LLM-based model distinguishes between list prices, dynamic discounts, bundles, and tiered promos.

- Validation layer.

Extracted values are compared against schema rules before they’re stored, not after the dashboard breaks.

- Compliance screening at point-of-capture.

Geo detection, rate limiting, and robots.txt review happen before the first request, not as damage control.

- Structured delivery pipeline.

Outputs don’t land in spreadsheets. They’re delivered as JSON or API feeds, versioned and tracked.

Competitor price scraping isn’t just data collection. It’s pricing observability: a system that adapts as fast as the market moves.
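The validation layer from the list above can be sketched as a rule check that runs before anything is written. This is a minimal example, assuming a hypothetical schema (`REQUIRED_FIELDS`, `ALLOWED_CURRENCIES`, `ALLOWED_PRICE_TYPES`); real field names and limits depend on your own data contract. Invalid records go to a quarantine list instead of silently reaching the dashboard.

```python
from dataclasses import dataclass, field

# Hypothetical schema rules; adapt names and allowed values to your data contract.
REQUIRED_FIELDS = ("sku", "price", "currency", "price_type")
ALLOWED_CURRENCIES = {"USD", "CAD", "EUR", "GBP"}
ALLOWED_PRICE_TYPES = {"list", "discount", "bundle", "tiered"}


@dataclass
class ValidationResult:
    ok: bool
    errors: list[str] = field(default_factory=list)


def validate_record(record: dict) -> ValidationResult:
    """Check one extracted record against schema rules before it is stored."""
    errors = [f"missing field: {name}" for name in REQUIRED_FIELDS
              if record.get(name) in (None, "")]
    price = record.get("price")
    if price is not None:
        # bool is a subclass of int, so exclude it explicitly.
        if not isinstance(price, (int, float)) or isinstance(price, bool):
            errors.append("price is not numeric")
        elif price <= 0:
            errors.append("price must be positive")
    currency = record.get("currency")
    if currency and currency not in ALLOWED_CURRENCIES:
        errors.append(f"unknown currency: {currency}")
    price_type = record.get("price_type")
    if price_type and price_type not in ALLOWED_PRICE_TYPES:
        errors.append(f"unknown price type: {price_type}")
    return ValidationResult(ok=not errors, errors=errors)


def store_if_valid(record: dict, store: list, quarantine: list) -> bool:
    """Route valid records to storage and invalid ones to a quarantine queue for review."""
    result = validate_record(record)
    if result.ok:
        store.append(record)
    else:
        quarantine.append({**record, "errors": result.errors})
    return result.ok
```

Quarantining rather than dropping keeps the failed records auditable, so a spike in one error type (for example, a sudden run of “price is not numeric”) points straight at a changed page layout.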

What Is the Role of LLMs in Competitor Price Scraping?

Large language models aren’t just hype—they’re now functional inside well-architected scrapers. But only when grounded.

Here’s how they’re used properly:

| Function | Role of the LLM | Risk If Missing |
| --- | --- | --- |
| Product name classification | Detects bundles, variants, and promotional phrasing | Mislabeling and mismatches |
| Discount type parsing | Interprets “Buy 2 Get 1” vs “25% off for subscribers” | Flat price capture misses context |
| Regional pricing segmentation | Distinguishes location-based adjustments | Incorrect pricing logic by region |
| Description scoring | Determines category alignment or overlap | Weak cross-site matching logic |

When used correctly, LLMs bridge raw HTML and actual commercial logic in competitor price scraping workflows.

When used incorrectly, they hallucinate, overfit, or misclassify confidently, and no one notices until it’s too late.
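One common way to keep an LLM grounded is to cross-check its label against deterministic evidence in the source text and send disagreements to review. The sketch below uses a few regular expressions as that cross-check for the discount types in the table. The patterns and label names are illustrative assumptions, and the model call itself is left out: its output is passed in as a plain string.

```python
import re

# Illustrative patterns for the promo types discussed above; not exhaustive.
PATTERNS = {
    "buy_x_get_y": re.compile(r"\bbuy\s+(\d+)\s+get\s+(\d+)\b", re.I),
    "multi_buy": re.compile(r"\b(\d+)\s+for\s+\$?(\d+(?:\.\d{2})?)\b", re.I),
    "percent_off": re.compile(r"\b(\d{1,2})\s*%\s*off\b", re.I),
}


def rule_based_labels(promo_text: str) -> set[str]:
    """Return every discount type whose pattern appears in the promo text."""
    return {label for label, pattern in PATTERNS.items() if pattern.search(promo_text)}


def grounded_label(promo_text: str, llm_label: str) -> tuple[str, bool]:
    """Return (label, needs_review) for a label produced by the model.

    The label is accepted without review only when the text contains matching
    evidence, or when the model says "none" and the rules agree.
    """
    evidence = rule_based_labels(promo_text)
    if llm_label == "none":
        return llm_label, bool(evidence)  # flag if the rules found a promo the model missed
    return llm_label, llm_label not in evidence
```

The rules are not meant to replace the model, since they miss anything phrased unusually. Their job is to catch confident misclassifications, such as a “25% off for subscribers” offer labeled as a multi-buy, before they reach pricing decisions.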

What Makes Compliance Structural—Not Just a Disclaimer?

Compliance doesn’t live in a footer. It lives in code.

Scraping systems with legal integrity treat compliance as a constraint, not a guideline.

Key protections embedded inside resilient systems:

- Rate throttling tied to site policy, not system defaults.

- Automated request tagging for audit traceability.

- PII filters that exclude user-level pricing metadata.

- Consent-aware logic for regions like the EU and California.
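The first two protections in this list, policy-tied throttling and request tagging, fit in one small class. This is a minimal sketch, assuming a hypothetical `SITE_POLICY` mapping from domain to minimum seconds between requests. The 5-second and 10-second values are placeholders, not recommendations. The clock and sleep functions are injectable so the timing can be tested without actually waiting.

```python
import time
import uuid
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical per-domain policy: minimum seconds between requests, e.g. taken from a
# site's robots.txt Crawl-delay or from a rate agreed with the site owner.
SITE_POLICY = {"shop.example.com": 5.0}
DEFAULT_MIN_INTERVAL = 10.0  # placeholder fallback when no policy is known for a domain


@dataclass
class PolicyThrottle:
    policy: dict
    clock: Callable[[], float] = time.monotonic
    sleep: Callable[[float], None] = time.sleep
    last_request: dict = field(default_factory=dict, init=False)
    audit_log: list = field(default_factory=list, init=False)

    def before_request(self, domain: str, url: str) -> str:
        """Wait until the domain's policy allows another request, then record an audit entry."""
        interval = self.policy.get(domain, DEFAULT_MIN_INTERVAL)
        last = self.last_request.get(domain)
        if last is not None:
            wait = interval - (self.clock() - last)
            if wait > 0:
                self.sleep(wait)
        now = self.clock()
        self.last_request[domain] = now
        request_id = str(uuid.uuid4())  # tag to attach to the outgoing request and the log
        self.audit_log.append(
            {"request_id": request_id, "domain": domain, "url": url,
             "at": now, "min_interval": interval}
        )
        return request_id
```

Because the interval comes from the policy table rather than a global constant, tightening or loosening the rate for one site is a data change, and every request leaves an ID that can be traced during an audit.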

This is how modern teams scale intelligence without legal friction.

What Business Problems Does Competitor Price Scraping Solve?

Scraping isn’t about collecting numbers—it’s about reclaiming strategic timing.

Executives don’t need another source of noise. They need pricing intelligence that surfaces when it matters, fits their taxonomy, and guides decisions without second-guessing.

This section dispels the illusion that scraped data is tactical. When done right, it becomes infrastructure for margin, positioning, and risk control.

How Does Price Intelligence Protect Profit Margin in Competitive Markets?

Most margin erosion doesn’t come from the initial price.

It comes from holding a price while competitors move past you—quietly, algorithmically, and without signaling it’s happening.

Real-time competitor price scraping allows teams to:

- Adjust bundle pricing before being undercut.

- Identify price compression across product variants.

- Catch promotional cycles before revenue loss compounds.

Instead of responding quarterly, you respond daily. That cadence depends on continuous, high-volume data streams, which is why specialized ecommerce scraping solutions play a critical role in this sector.

And when done with structure, those adjustments aren’t gut calls. They’re system-triggered.

What Happens When Pricing Teams Have to Work Without Confidence?

When price data is scraped without system design, teams compensate with meetings, overrides, and audits.

Symptoms:

- Teams rerun pricing reports weekly “just to check.”

- Revenue leads defer decisions until they get manual confirmation.

- Analysts spend hours reconciling conflicting fields across dashboards.

That’s not analysis. That’s failure recovery disguised as work.

This is where competitor price scraping stops being a feature and becomes a foundation.

A structured flow creates alignment across data, logic, and response time.

What Does Structured Price Intelligence Look Like in Practice?

Here’s what a structured delivery pipeline looks like when scraping systems are built for enterprise use:

→ Raw Extraction Layer

Scrapes product pricing, discount logic, SKU ID, and metadata

→ Classification & Tagging

LLM applies field logic: price type, bundle logic, discount type

→ Validation Filter

Rules engine checks for missing fields, mismatched units, or schema violations

→ Geo & Compliance Enforcement

Applies region-specific logic (e.g., EU price visibility, rate caps)

→ Structured Output Layer

Delivers clean, deduplicated, and aligned data to:

- Dynamic pricing engines

- Competitive dashboards

- Category managers

- Revenue operations teams

This isn’t a report.

It’s pricing architecture that holds its shape, even when competitors change theirs.
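The layered flow above can be sketched as a chain of small generator stages, each doing one job and handing records to the next. This is a minimal illustration with hypothetical field names. The classification step is a trivial keyword rule standing in for the LLM tagging the pipeline describes, and the region allow-list is an assumption.

```python
import json
from typing import Callable, Iterable, Iterator

Record = dict
Stage = Callable[[Iterable[Record]], Iterator[Record]]


def classify(records: Iterable[Record]) -> Iterator[Record]:
    """Classification & tagging. Stand-in keyword rule; the described pipeline uses an LLM here."""
    for r in records:
        tagged = dict(r)
        tagged["price_type"] = "bundle" if "bundle" in tagged.get("title", "").lower() else "list"
        yield tagged


def validate(records: Iterable[Record]) -> Iterator[Record]:
    """Validation filter: drop records with missing or malformed fields."""
    for r in records:
        price = r.get("price")
        if r.get("sku") and r.get("currency") and isinstance(price, (int, float)) and price > 0:
            yield r


def enforce_geo(records: Iterable[Record],
                allowed_regions: frozenset = frozenset({"US", "CA"})) -> Iterator[Record]:
    """Geo & compliance: keep allowed regions only and strip session identifiers."""
    for r in records:
        if r.get("region") in allowed_regions:
            yield {k: v for k, v in r.items() if k != "session_id"}


def dedupe(records: Iterable[Record]) -> Iterator[Record]:
    """Keep the first record per (sku, region, price_type)."""
    seen = set()
    for r in records:
        key = (r["sku"], r["region"], r["price_type"])
        if key not in seen:
            seen.add(key)
            yield r


def run_pipeline(raw: Iterable[Record], stages: list[Stage]) -> str:
    """Chain the stages lazily and serialize the result as JSON for downstream consumers."""
    data: Iterable[Record] = raw
    for stage in stages:
        data = stage(data)
    return json.dumps(list(data), sort_keys=True)
```

Called as `run_pipeline(raw_records, [classify, validate, enforce_geo, dedupe])`, each layer can be tested, replaced, or reordered on its own, which is the practical difference between a pipeline and a single script that does everything in one pass.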

What Breaks When Competitor Price Scraping Is Built on Scripts?

Scripts don’t warn you when they fail.

They just keep running, collecting the wrong prices, missing discounts, and producing “valid” data that derails decisions.

The failure isn’t loud. It’s incremental.

And by the time it shows up in your pricing strategy, the data that caused it is already archived, unaudited, and considered trusted.

This section breaks down what fails when teams rely on scraping methods that don’t evolve with the market.

What Silent Failures Do Scripts Introduce That Dashboards Never Show?

There’s no alert for a mismatched SKU. No red flag for misclassified discount logic.

Scripts don’t break like APIs—they break like logic drift.

Common failure types:

- Field dependency.

The price is pulled from a specific div. That div moves. The scraper still runs.

- Currency or region hardcoding.

A competitor switches from USD to CAD. Your dashboard still shows $89.99: wrong country, wrong margin.

- Session timeout logic ignored.

Dynamic pricing appears only after login, so the scraper misses it entirely. Your system assumes the product is out of stock.
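All three failures share a pattern: missing data quietly turns into a default value. A minimal sketch of the alternative is to make absence explicit and decide what it means using evidence from the page and the previous run. The `Extraction` fields, including `login_wall_seen`, are hypothetical flags that a page parser would have to set.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Extraction:
    sku: str
    price: Optional[float]      # None when the price selector matched nothing
    out_of_stock_marker: bool   # True only if the page explicitly says "out of stock"
    login_wall_seen: bool       # hypothetical flag: the page asked for a login


def assess(current: Extraction, previous: Optional[Extraction]) -> str:
    """Decide what a missing price means instead of defaulting silently."""
    if current.price is not None:
        return "ok"
    if current.login_wall_seen:
        return "needs_session"           # price hidden behind login, not out of stock
    if current.out_of_stock_marker:
        return "out_of_stock"            # explicit evidence on the page
    if previous is not None and previous.price is not None:
        return "suspect_selector_drift"  # a price existed last run; now nothing matches
    return "unknown"
```

Only `"ok"` and `"out_of_stock"` would be written as facts; the other statuses become alerts, so a moved `div` or a new login wall surfaces as a ticket rather than as a plausible-looking number on the dashboard.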

The result? The dashboard populates.

But what it’s showing isn’t reality—it’s residue.

How Manual Cleanup Creates Delays, Rework, and Strategy Decay

When competitor scraping lacks structure, the cost doesn’t appear as a bug.

It shows up in meetings.

- Analysts rebuild dashboards weekly.

- Pricing leads send Slack messages asking, “Are we sure this is right?”

- Revenue operations manually reconcile last quarter’s strategy against scraped data that drifted.

None of this is automation. It’s a set of manual assurance loops caused by scripts pretending to be systems.

Why Tactical Scripts Can’t Support Strategic Pricing Models

A one-time scraper doesn’t adapt. It doesn’t monitor. And it doesn’t correct.

That’s fine—until:

- You add 15 more competitor domains.

- You enter a new market with different pricing logic.

- Your compliance team needs logs for an audit, and your system has none.

This isn’t a tooling problem. It’s a strategic blind spot. For strategic risk modeling, the depth of insight needed is similar to the requirements for big data in the finance industry, compliance, and algorithmic trading.

When pricing depends on brittle methods, competitive agility becomes a guess.

The solution isn’t to hire more analysts.

It’s to build a system that produces pricing data that doesn’t need translation.

How to Monitor Prices of Competitors Without Falling Into Legal or Operational Traps

Most teams know why they need to track the market.

The more complex question is how to monitor competitor prices without introducing fragility, duplication, or compliance risk.

This section breaks down what responsible, scalable tracking looks like—and where most systems fall short.

Why Traditional Monitoring Approaches Don’t Hold Up Under Pressure

When scraping is treated like reporting, not infrastructure, tracking turns into a patchwork:

- Analysts export daily screenshots into spreadsheets.

- Developers run scripts manually to “check if anything changed.”

- Pricing managers flag anomalies after the fact, not before they hit the forecast.

What Does a Real-Time Monitoring Architecture Look Like?

Monitoring competitor prices in a real enterprise system means observing price shifts like a telemetry feed, not a static snapshot.

Key components:

- Scheduled extraction with dynamic frequency controls

- Real-time diffing engines that track price movements by product and category

- Alert layers that notify ops when bundles shift or region-based logic changes

- LLM-backed classification to distinguish price types, not just numeric value

This turns competitor price scraping from an isolated task into a synchronized awareness system across SKUs, currencies, and categories.
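At its simplest, the diffing engine in the list compares two snapshots keyed by SKU and emits only the moves worth alerting on. This is a minimal sketch, assuming both snapshots are already normalized to one currency. The 1% threshold is an arbitrary placeholder. Added and removed listings are always reported, because a vanished SKU is often the first sign of repackaging.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PriceChange:
    sku: str
    old: Optional[float]
    new: Optional[float]
    pct: Optional[float]  # change in percent; None if the SKU appeared, vanished, or old == 0


def diff_snapshots(previous: dict[str, float], current: dict[str, float],
                   min_pct: float = 1.0) -> list[PriceChange]:
    """Compare two snapshots keyed by SKU and return the changes worth alerting on."""
    changes = []
    for sku in sorted(previous.keys() | current.keys()):
        old, new = previous.get(sku), current.get(sku)
        if old is None or new is None:
            changes.append(PriceChange(sku, old, new, None))  # listing added or removed
        elif old != new:
            pct = round((new - old) / old * 100, 2) if old else None
            if pct is None or abs(pct) >= min_pct:
                changes.append(PriceChange(sku, old, new, pct))
    return changes
```

The output list is what an alert layer would consume: each `PriceChange` is small, typed, and easy to route by SKU or category.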

How to Monitor Competitor Prices Online Without Creating Legal Exposure

The line between competitive intelligence and compliance breach is thinner than most teams realize.

To monitor competitor prices online without triggering legal or reputational risk:

- Respect robots.txt directives and crawl-delay rules.

- Avoid scraping pages behind login or dynamic cart walls unless permitted.

- Strip session or user-level metadata before storage.

- Implement geo-detection logic to avoid region-specific violations.
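The first and third items can be enforced in code with Python’s standard library. This sketch parses robots.txt content that has already been fetched (kept offline here for clarity), checks a URL against it, reads the Crawl-delay, and strips session-like query parameters before a URL is stored. The user-agent string and the `SESSION_PARAMS` list are assumptions. Note that `RobotFileParser` only accepts whole-number Crawl-delay values.

```python
from urllib import robotparser
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

USER_AGENT = "example-price-monitor"  # hypothetical crawler name
# Query parameters treated as session or user-level metadata (assumed list; extend per site).
SESSION_PARAMS = {"sessionid", "session", "sid", "token", "userid"}


def load_rules(robots_txt: str) -> robotparser.RobotFileParser:
    """Parse robots.txt content that was fetched earlier."""
    rules = robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def strip_session_params(url: str) -> str:
    """Drop session or user-level query parameters before the URL is stored."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k.lower() not in SESSION_PARAMS]
    return urlunsplit(parts._replace(query=urlencode(kept)))


# Example robots.txt content for a hypothetical competitor site.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 5
Disallow: /cart/
"""

rules = load_rules(ROBOTS_TXT)
```

With these rules, `rules.can_fetch(USER_AGENT, url)` allows product pages but refuses anything under `/cart/`, and `rules.crawl_delay(USER_AGENT)` returns the 5-second delay, which can feed the per-domain throttle policy directly.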

And most critically:

Don’t just scrape. Log, version, and document every logic chain in your monitoring system.

What Does Responsible Competitor Price Tracking Require?


Trusting the data—and acting on it before it’s outdated—is where most systems fail.

To scale price intelligence without risking compliance, delay, or distortion, teams must stop thinking in scripts and start thinking in systems. This systemic thinking is essential not just for pricing but also for HR, as successful approaches to using web scraping for recruitment demonstrate.

That’s what competitor price scraping becomes at scale: not data pulling, but real-time decision architecture.

GroupBWT Case Study: How a Cybersecurity Company Replaced Manual Audits with Real-Time Pricing Logic

The company:

A global cybersecurity vendor offering endpoint protection, antivirus, and privacy tools across international markets.

The challenge:

The team needed to monitor competitors’ prices, bundles, and promotional logic across nine major vendors, each with more than ten active SKUs per region. Previously, this tracking was handled manually through spreadsheets and screenshots. But by the time one cycle of competitive analysis ended, the inputs were already outdated.

The problem:

Manual workflows couldn’t keep pace with:

- Frequent pricing plan adjustments

- Region-specific offers and discounts

- Repackaging of identical products under new names

As a result, pricing decisions lagged behind market movement, leading to missed windows and margin erosion.

The solution:

We engineered a custom competitor price scraping system that:

- Extracted product, variant, and discount logic from all tracked domains

- Captured changes in real time using dynamic scheduling and validation

- Disengagement

The outcome:

- Time-to-decision shortened from 7+ days to under 12 hours

- Bundled promotions were classified accurately and tracked across SKUs

- Regional discrepancies were automatically flagged and normalized

Strategic impact:

Pricing shifted from reactive to adaptive. Instead of manually rechecking dashboards, stakeholders received verified changes directly embedded into their decision flow.

Ready to Stop Scraping Like It’s 2016?

You’re not tracking the market if your pricing logic still depends on weekly scripts, static reports, or post-hoc audits. You’re reacting to it.

At GroupBWT, we don’t build bots.

We engineer price intelligence systems that monitor, classify, adapt, and hold across jurisdictions, SKUs, and market volatility. This resilient architecture is necessary to support strategic outcomes, mirroring the complexity of data warehousing and ETL pipelines.

If your business depends on pricing decisions, it’s time to replace uncertainty with infrastructure.

Contact us to build a competitor price scraping system that works like your business depends on it—because it does.

FAQ

What’s the difference between real-time pricing feeds and scraping-based intelligence?

Real-time feeds push data as events happen. They’re great for your own systems but rarely available for competitor data. Scraping, on the other hand, pulls information from public-facing platforms. When engineered correctly, though, modern scraping systems approximate real-time behavior through adaptive scheduling, change detection, and alerting, without relying on fixed-interval polling or batch jobs.
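Adaptive scheduling can be as simple as a multiplicative back-off. This is a sketch of one possible policy, assuming each run reports whether anything changed: halve the interval after a change, double it while prices sit still, and clamp it between bounds. The 15-minute, 6-hour, and 24-hour values are placeholders.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveInterval:
    """Shorten the polling interval after a detected change; lengthen it while prices are stable."""
    minimum: float = 15 * 60       # placeholder: 15 minutes
    maximum: float = 24 * 60 * 60  # placeholder: 24 hours
    factor: float = 2.0
    current: float = 6 * 60 * 60   # placeholder starting interval: 6 hours

    def next_interval(self, changed: bool) -> float:
        """Return the delay in seconds before the next run for this target."""
        if changed:
            self.current = max(self.minimum, self.current / self.factor)
        else:
            self.current = min(self.maximum, self.current * self.factor)
        return self.current
```

Keeping one `AdaptiveInterval` per domain or category means a competitor in the middle of a promotion gets checked often, while quiet catalogs cost almost nothing to watch.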

Can price intelligence systems handle region-based or currency-specific adjustments automatically?

Yes—if they’re designed for it. Resilient systems include geo-aware logic, currency normalization, and localized rule enforcement. That way, a product priced in CAD for Toronto isn’t mistakenly compared to its USD equivalent in Texas. Anything less invites false deltas and strategy misfires.
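Currency normalization comes down to two rules: convert both sides to one currency before computing a delta, and fail loudly when a rate is missing instead of assuming USD. A minimal sketch follows. The rates in `USD_PER_UNIT` are placeholders, not real market rates; a production system would pull dated rates from a reference source and record which rate was applied. `Decimal` is used to avoid binary floating-point rounding in money arithmetic.

```python
from decimal import ROUND_HALF_UP, Decimal

# Placeholder rates: USD per one unit of each currency. Not real market data.
USD_PER_UNIT = {"USD": Decimal("1"), "CAD": Decimal("0.73"), "EUR": Decimal("1.08")}


def to_usd(amount: str, currency: str) -> Decimal:
    """Convert an amount to USD, refusing to guess when the rate is unknown."""
    rate = USD_PER_UNIT.get(currency)
    if rate is None:
        raise ValueError(f"no rate for currency {currency!r}; refusing to assume USD")
    return (Decimal(amount) * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def price_delta_usd(ours: tuple[str, str], theirs: tuple[str, str]) -> Decimal:
    """Compare two (amount, currency) prices only after both are in USD."""
    return to_usd(*theirs) - to_usd(*ours)
```

With these placeholder rates, a competitor’s 89.99 CAD against our 89.99 USD yields a delta of -24.30 USD rather than the false zero a currency-blind comparison would report.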

What should legal and compliance teams check before collecting competitor data?

A few non-negotiables:

- Has the site’s robots.txt been reviewed and respected?

- Is rate limiting aligned with crawl guidelines?

- Are we capturing user-identifiable pricing content (like logged-in offers)?

- Do logs track every request in case of a review or inquiry?

If the answers aren’t system-enforced, compliance isn’t structural. It’s accidental.

How do companies maintain trust in scraped data over time?

They don’t rely on trust. They implement verification loops.

Modern systems include schema validation, confidence scoring, anomaly detection, and audit history. If a scraper silently fails or drifts, the system catches it before a human has to.
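For the anomaly-detection piece, one robust baseline is the median absolute deviation (MAD): compare a new price with the median of recent history, scaled by how much that history normally varies. This catches extraction errors such as a dropped decimal point turning 49.99 into 4.99. The sketch assumes a per-SKU price history; the threshold `k=5.0` and the five-observation minimum are arbitrary assumptions.

```python
from statistics import median


def is_anomalous(history: list[float], new_price: float,
                 k: float = 5.0, min_history: int = 5) -> bool:
    """Flag a new price far outside recent history, using median absolute deviation (MAD)."""
    if len(history) < min_history:
        return False  # too little history to judge; leave it to the other checks
    center = median(history)
    mad = median([abs(p - center) for p in history])
    if mad == 0:
        return new_price != center  # flat history: any move is worth a second look
    return abs(new_price - center) / mad > k
```

Median-based statistics are used here instead of mean and standard deviation because a single earlier bad scrape in the history would otherwise inflate the baseline and hide the next one.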

What happens when product listings are inconsistent across platforms?

This is where unsupervised scraping fails—and systems thinking wins.

Intelligent scrapers use LLMs to recognize product lineage, bundle variations, and labeling inconsistencies. That means “Nike Air Max 270 – Blue – Size 10” and “Air Max 270 – Navy – Men’s 10 US” won’t be treated as unrelated listings. A real system maps them through logic, not string matching.