The Client Story

A regional insurance platform expanding into new markets faced rising support volumes and strict regulatory demands. Their legacy chatbot couldn’t handle legal queries, missed compliance checks, and lacked system integration. Clients received generic replies to complex questions—on policies, claims, and renewals—while agents fell behind.

They needed more than a chatbot: a compliant, real-time AI system that could interpret legal context, cite documents, automate low-risk tasks, and escalate when needed. GroupBWT built and deployed a modular GPT-based system with audit logs, TTL (time-to-live) retention rules, and full data privacy—by design.

| Industry: | Insurance |
|---|---|
| Cooperation: | 2025 |
| Location: | US / EU |

“We needed automation, but with accountability. A generic chatbot wouldn’t cut it in a regulated environment.” — Director of Customer Experience

“GroupBWT’s system doesn’t just reply—it reads, routes, and remembers. It’s embedded in our operations now.” — VP of Product and Technology

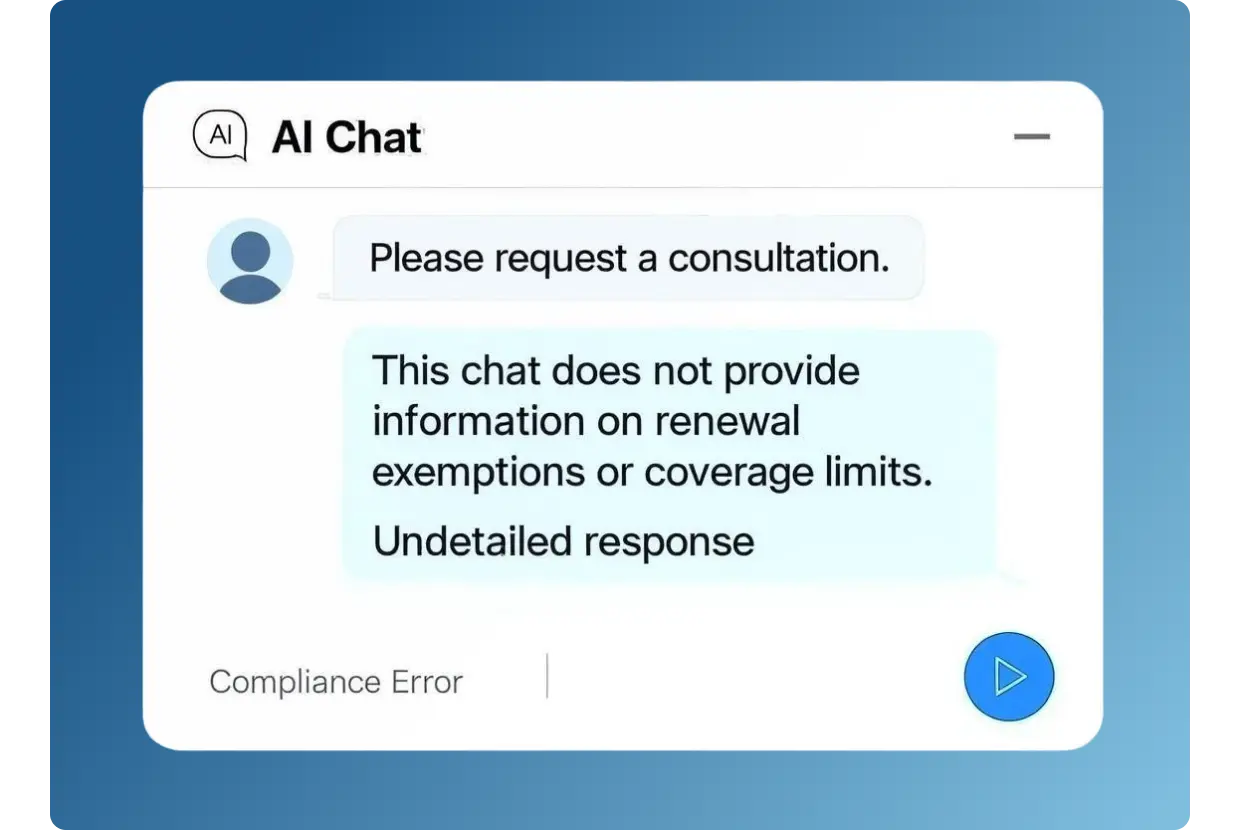

Why 9 of 10 Insurance Chatbots Fail

The regional insurance platform’s expansion into new markets overwhelmed its support systems, revealing critical gaps in compliance, automation, and policy-level reasoning.

Their old chatbot couldn’t:

- Answer real questions about specific claims or policy clauses

- Connect to internal systems to give status updates

- Track or log what it said to users

- Route complex issues to the right teams

The consequence?

- Customers received generic, useless replies

- Legal flagged gaps in compliance logging

- Agents handled the same repetitive tickets manually

- Policyholders dropped off mid-process or churned

This wasn’t a chatbot issue—it was a system architecture failure.

They needed a legally compliant, workflow-aware support system that could:

- Read and route policy questions

- Pull answers from contracts in real time

- Log every answer with timestamps and user ID

- Update when policies changed

- Learn from flagged conversations to improve clause matching, routing, and compliance behavior over time

They hired GroupBWT to build that system.

Rebuilding Insurance Support with Clause-Based AI Chatbot Logic

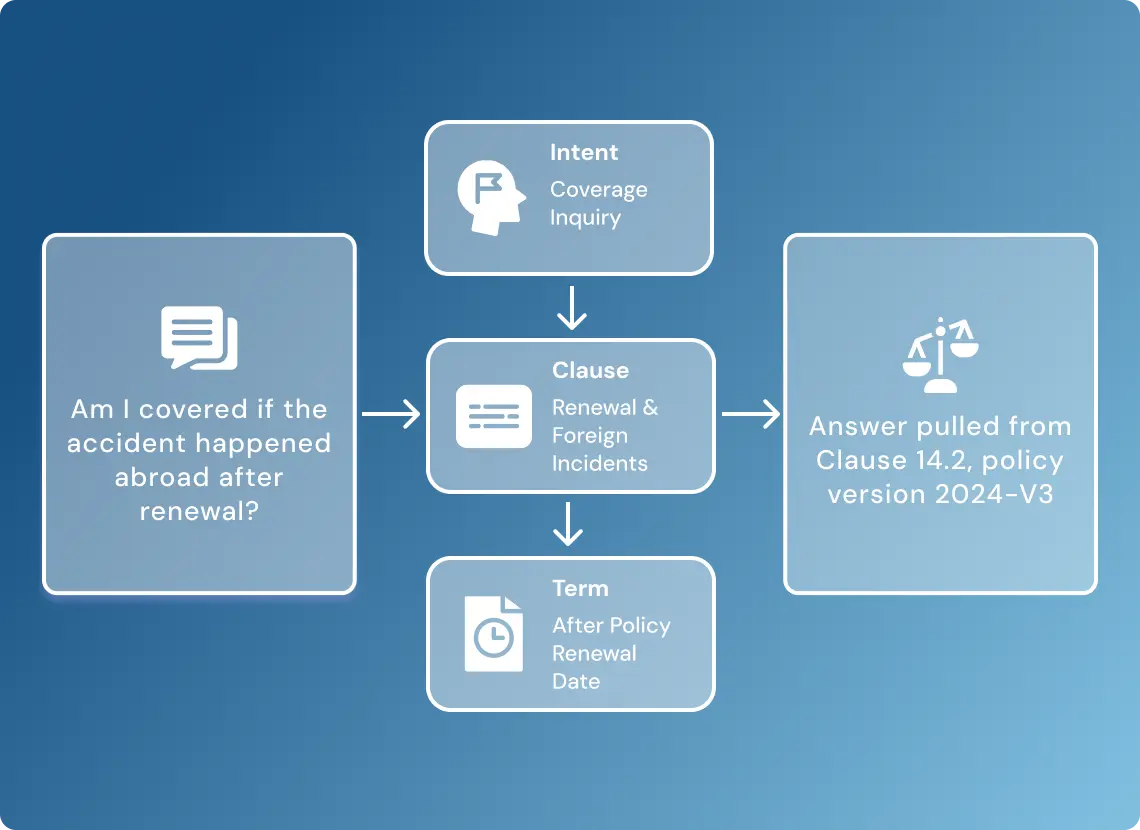

1. Structured Language Processing for Policy Queries

An off-the-shelf chatbot couldn’t process queries involving legal phrasing, date ranges, or exception clauses. GroupBWT engineered a system built on a refined (fine-tuned) large language model that:

- interpreted clause-specific questions across multiple languages

- mapped user queries into structured parameters (intent + clause + term)

- distinguished between general inquiries, exceptions, and regulatory triggers

Each user input was converted into a traceable query path with known fallback and escalation behavior.

“We engineered a dual-layer chatbot that combines legal rules with GPT-powered intent recognition. The system improves through supervised feedback loops: flagged chats feed monthly fine-tuning, and new policies are uploaded as structured updates to the retrieval base.” — Technical Lead, GroupBWT
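A minimal sketch of the query mapping described above, assuming a simple dual-layer design: a deterministic rule layer for regulatory triggers, plus keyword regexes standing in for the GPT-based intent model. The clause IDs (`CL-WATER-EXCL`, `CL-RENEWAL`), trigger words, and the `QueryPath`/`map_query` names are hypothetical and not GroupBWT's implementation. It shows how each input becomes an (intent, clause, term) triple with a fixed route: answer, fallback, or escalate.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical clause patterns; real clause IDs would come from the policy clause library.
CLAUSE_PATTERNS = {
    "CL-WATER-EXCL": re.compile(r"\b(flood|water damage|leak)\b", re.I),
    "CL-RENEWAL": re.compile(r"\b(renew|renewal|expire|expiry)\b", re.I),
}
# Hypothetical phrases that must always reach a human.
REGULATORY_TRIGGERS = re.compile(r"\b(complaint|lawsuit|ombudsman|regulator)\b", re.I)
TERM_PATTERN = re.compile(r"\b(\d+)\s*(day|month|year)s?\b", re.I)

@dataclass(frozen=True)
class QueryPath:
    intent: str            # "general", "clause", or "regulatory"
    clause: Optional[str]  # matched clause ID, if any
    term: Optional[str]    # normalized time term such as "30 day"
    route: str             # "answer", "fallback", or "escalate"

def classify_intent(text: str) -> str:
    """Stand-in for the GPT-based intent model (assumption): 'clause' or 'general'."""
    return "clause" if any(p.search(text) for p in CLAUSE_PATTERNS.values()) else "general"

def map_query(text: str) -> QueryPath:
    # Rule layer first: regulatory triggers override whatever the model predicts.
    intent = "regulatory" if REGULATORY_TRIGGERS.search(text) else classify_intent(text)
    clause = next((cid for cid, p in CLAUSE_PATTERNS.items() if p.search(text)), None)
    m = TERM_PATTERN.search(text)
    term = f"{m.group(1)} {m.group(2).lower()}" if m else None
    if intent == "regulatory":
        route = "escalate"   # known escalation behavior
    elif intent == "clause" and clause is not None:
        route = "answer"     # answerable from the clause library
    else:
        route = "fallback"   # general FAQ or clarification prompt
    return QueryPath(intent, clause, term, route)
```

Keeping the regulatory check outside the model is one way to make escalation deterministic: a misclassification by the intent layer cannot suppress it.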

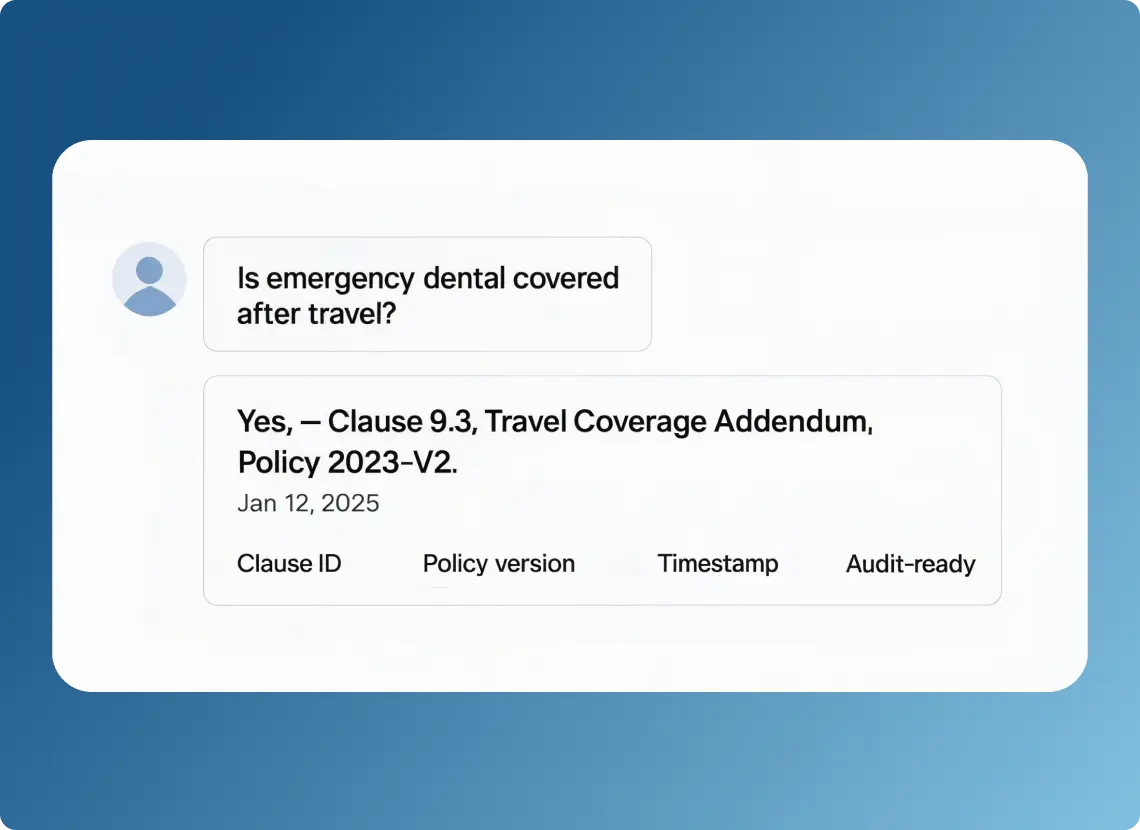

2. Document Retrieval with Version-Linked Responses

To prevent answer drift and maintain compliance accuracy, the system did not generate text freely. Instead, it extracted matching sections from:

- a structured contract knowledge base (policy clause library)

- eligibility and renewal datasets, updated weekly

- user-specific limits and coverage (fetched via claim ID or session context)

Every output cited the clause ID, timestamp, and document version. All answers were verifiable and bound to internal audit policies.
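The retrieval-only behavior above can be sketched as follows, assuming an in-memory clause library; `ClauseLibrary`, `Clause`, and `answer_from_clause` are hypothetical names. New policy wording is uploaded as a new version instead of overwriting the old one, and every answer carries the clause ID, version, and a UTC timestamp. When nothing matches, the function returns `None` so the caller falls back or escalates rather than generating free text.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

@dataclass(frozen=True)
class Clause:
    clause_id: str
    version: str
    text: str

@dataclass(frozen=True)
class CitedAnswer:
    text: str
    clause_id: str
    version: str
    timestamp: str  # ISO 8601, UTC

class ClauseLibrary:
    """Hypothetical in-memory clause library that keeps every version of a clause."""

    def __init__(self) -> None:
        self._versions: dict[str, list[Clause]] = {}

    def upload(self, clause: Clause) -> None:
        # Append rather than overwrite, so earlier answers stay traceable
        # to the exact version they cited.
        self._versions.setdefault(clause.clause_id, []).append(clause)

    def current(self, clause_id: str) -> Optional[Clause]:
        history = self._versions.get(clause_id)
        return history[-1] if history else None

def answer_from_clause(library: ClauseLibrary, clause_id: str) -> Optional[CitedAnswer]:
    """Quote the current clause with its citation; never generate free text.

    Returns None when no clause matches, so the caller must fall back or escalate.
    """
    clause = library.current(clause_id)
    if clause is None:
        return None
    return CitedAnswer(clause.text, clause.clause_id, clause.version,
                       datetime.now(timezone.utc).isoformat())
```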

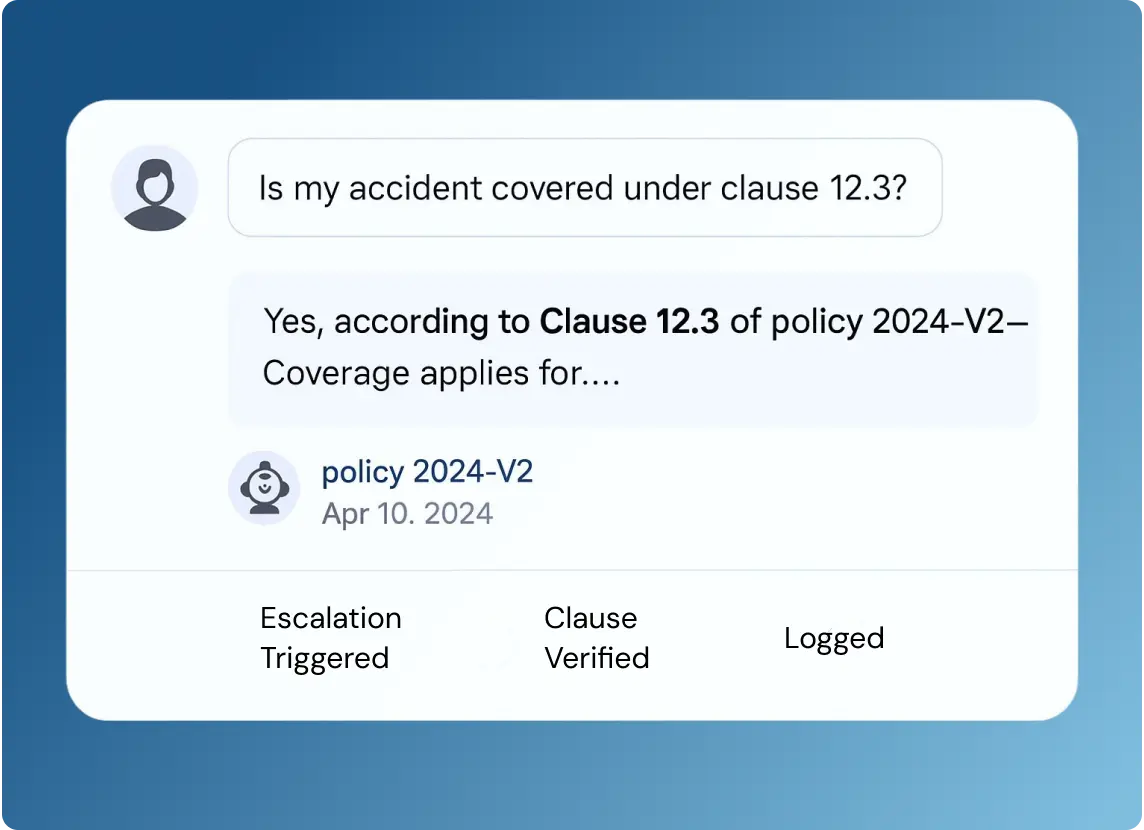

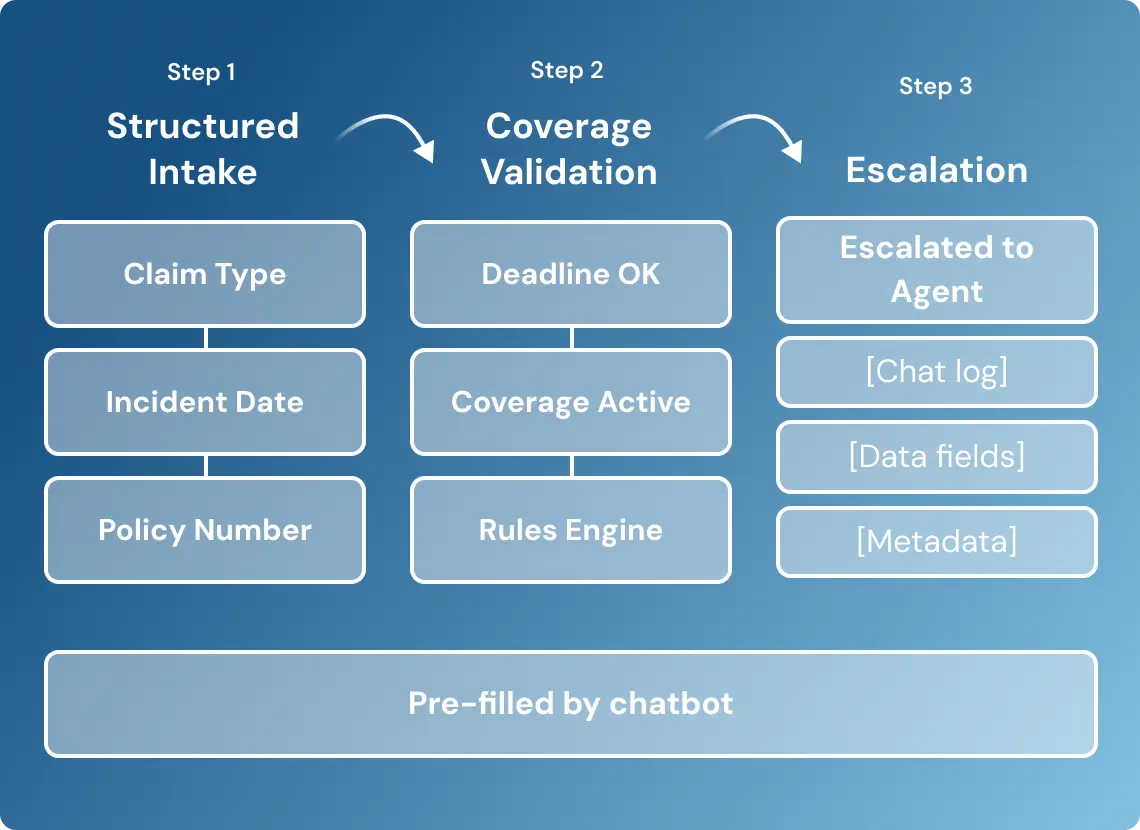

3. Pre-Claim Intake and Escalation Pathways

The chatbot acted as a structured intake layer, not just a responder. It:

- collected all pre-claim inputs using preconfigured forms

- validated policy coverage and deadlines using internal rule sets

- triggered escalation only when user intent or data exceeded predefined bounds

Escalated cases were passed to live agents with full chat logs, extracted data fields, and compliance metadata attached.
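A minimal sketch of the intake rule check, assuming three illustrative bounds: covered perils, a filing deadline in days, and a maximum amount for automated handling. `Policy`, `process_intake`, and `MAX_AUTO_AMOUNT` are hypothetical; the client's actual rule sets are not described in this case study. When any bound is exceeded, the result carries the chat log, extracted form fields, and compliance metadata for the live agent.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Any

# Hypothetical bound: claims above this amount always go to a live agent.
MAX_AUTO_AMOUNT = 5_000

@dataclass
class Policy:
    policy_id: str
    covered_perils: set[str]
    claim_deadline_days: int      # days after the incident allowed for filing

@dataclass
class IntakeResult:
    status: str                   # "accepted" or "escalated"
    reasons: list[str] = field(default_factory=list)
    handoff: dict[str, Any] = field(default_factory=dict)

def process_intake(policy: Policy, form: dict[str, Any], today: date,
                   chat_log: list[str]) -> IntakeResult:
    """Validate a pre-claim form against rule-based bounds; escalate if any fail."""
    reasons = []
    if form["peril"] not in policy.covered_perils:
        reasons.append("peril not covered")
    if (today - form["incident_date"]).days > policy.claim_deadline_days:
        reasons.append("filing deadline passed")
    if form["amount"] > MAX_AUTO_AMOUNT:
        reasons.append("amount above automation bound")
    if not reasons:
        return IntakeResult("accepted")
    # The agent receives full context, not a bare ticket.
    handoff = {
        "chat_log": list(chat_log),
        "fields": dict(form),
        "compliance": {"policy_id": policy.policy_id, "reasons": list(reasons)},
    }
    return IntakeResult("escalated", reasons, handoff)
```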

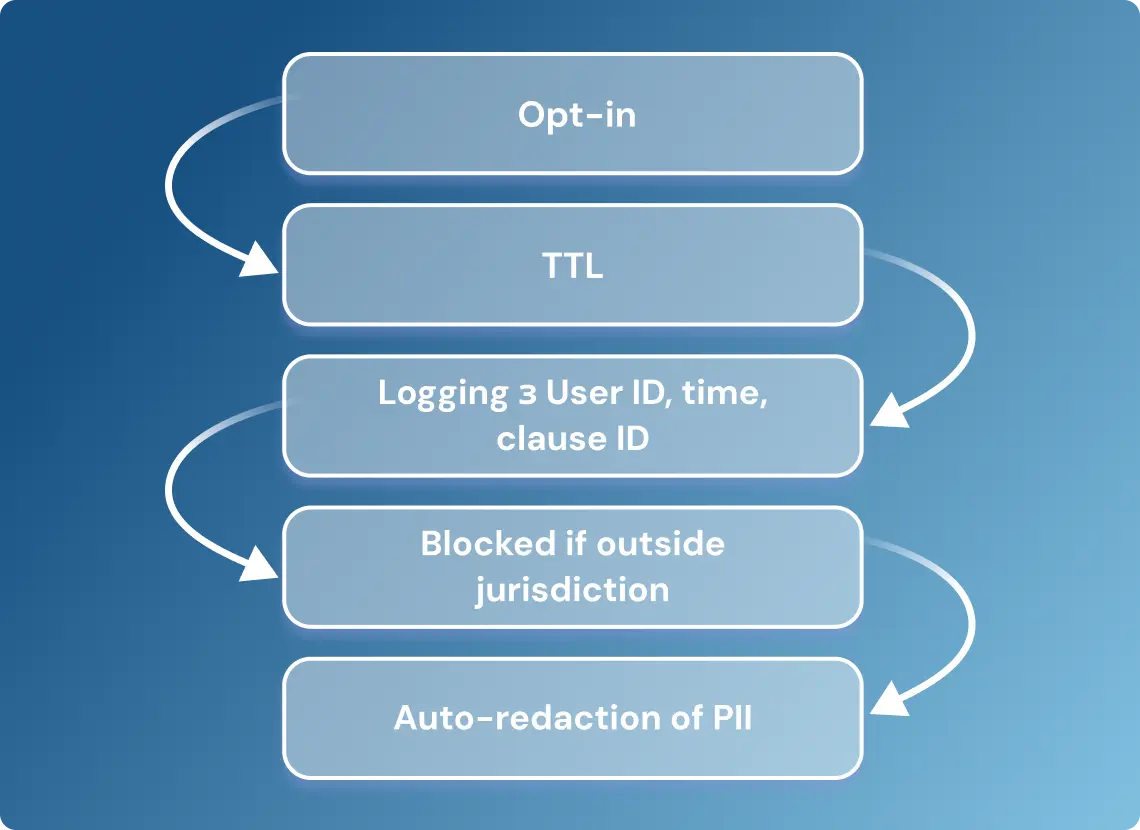

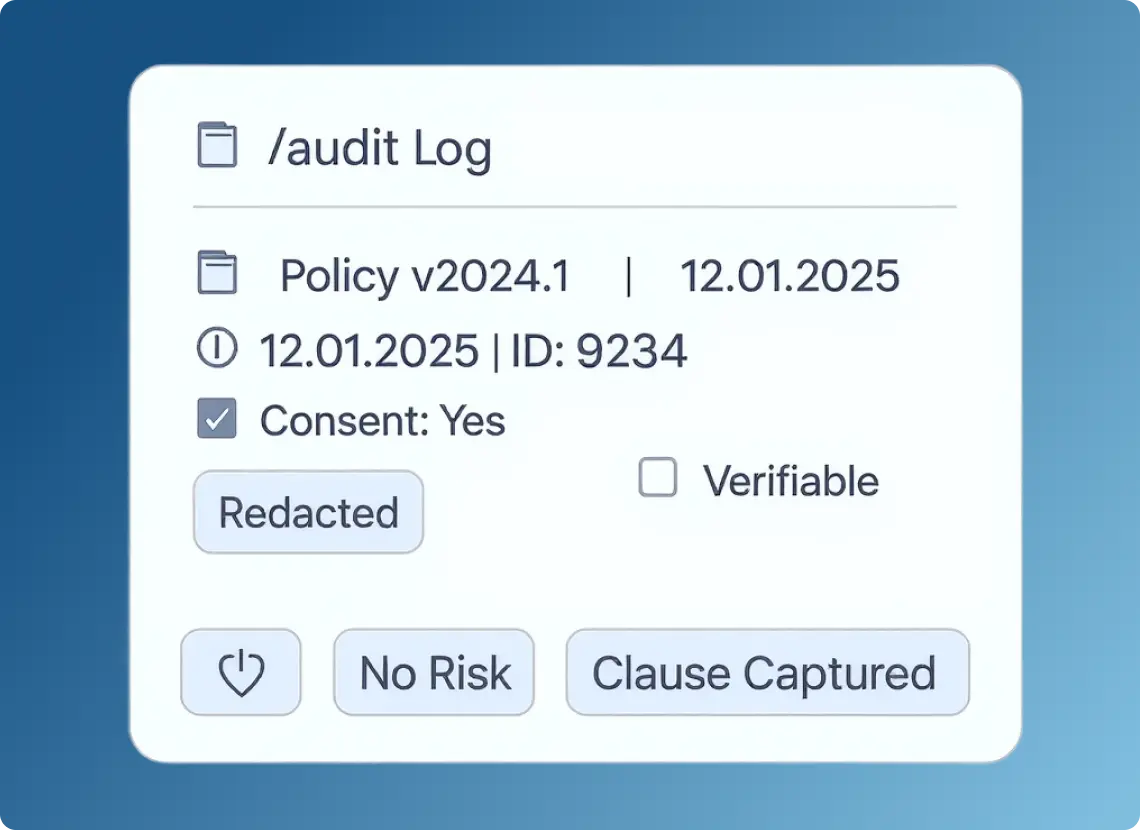

4. Built-In Compliance and Logging Engine

The chatbot’s operation was embedded in a privacy-first framework. Every interaction triggered:

- opt-in logic for data processing with explicit TTL (time-to-live)

- auto-tagging of personal data for redaction and retention

- logging of each answer with source traceability, user ID, and consent status

- blocking of clause-level answers if conditions weren’t met (e.g., jurisdictional limits)
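The per-interaction steps listed above could look roughly like this, assuming regex-based PII tagging and a static clause-to-jurisdiction map; both are deliberately simplified and hypothetical, as are `log_interaction` and `purge_expired`. Without opt-in consent only audit metadata is kept, jurisdiction-restricted clauses are marked as blocked, and each record carries an `expires_at` derived from its TTL.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta

# Simplified, hypothetical PII patterns; real redaction needs much broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}
# Hypothetical map of clause IDs to the jurisdictions where they may be quoted.
CLAUSE_JURISDICTIONS = {"CL-RENEWAL": {"US", "EU"}, "CL-FLOOD-US": {"US"}}

@dataclass(frozen=True)
class LogRecord:
    user_id: str
    consent: bool
    clause_id: str
    blocked: bool                # True if the clause answer was withheld
    stored_text: str             # redacted text, or "" without consent
    pii_tags: tuple[str, ...]
    created_at: datetime
    expires_at: datetime         # TTL: record must be purged after this instant

def redact(text: str) -> tuple[str, tuple[str, ...]]:
    """Replace matched PII with a tag placeholder and report which tags fired."""
    tags = []
    for tag, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            tags.append(tag)
            text = pattern.sub(f"[{tag}]", text)
    return text, tuple(tags)

def log_interaction(user_id: str, consent: bool, jurisdiction: str, clause_id: str,
                    text: str, ttl: timedelta, now: datetime) -> LogRecord:
    # Block the clause-level answer outside its permitted jurisdictions.
    blocked = jurisdiction not in CLAUSE_JURISDICTIONS.get(clause_id, set())
    if consent:
        stored, tags = redact(text)
    else:
        stored, tags = "", ()    # no opt-in: keep only audit metadata
    return LogRecord(user_id, consent, clause_id, blocked, stored, tags,
                     created_at=now, expires_at=now + ttl)

def purge_expired(records: list[LogRecord], now: datetime) -> list[LogRecord]:
    """Drop every record whose TTL has elapsed."""
    return [r for r in records if r.expires_at > now]
```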

The LLM runs in a private, isolated environment, and no user data is shared with external providers. GroupBWT deployed the model on secure infrastructure to meet data residency and cross-border transfer restrictions.

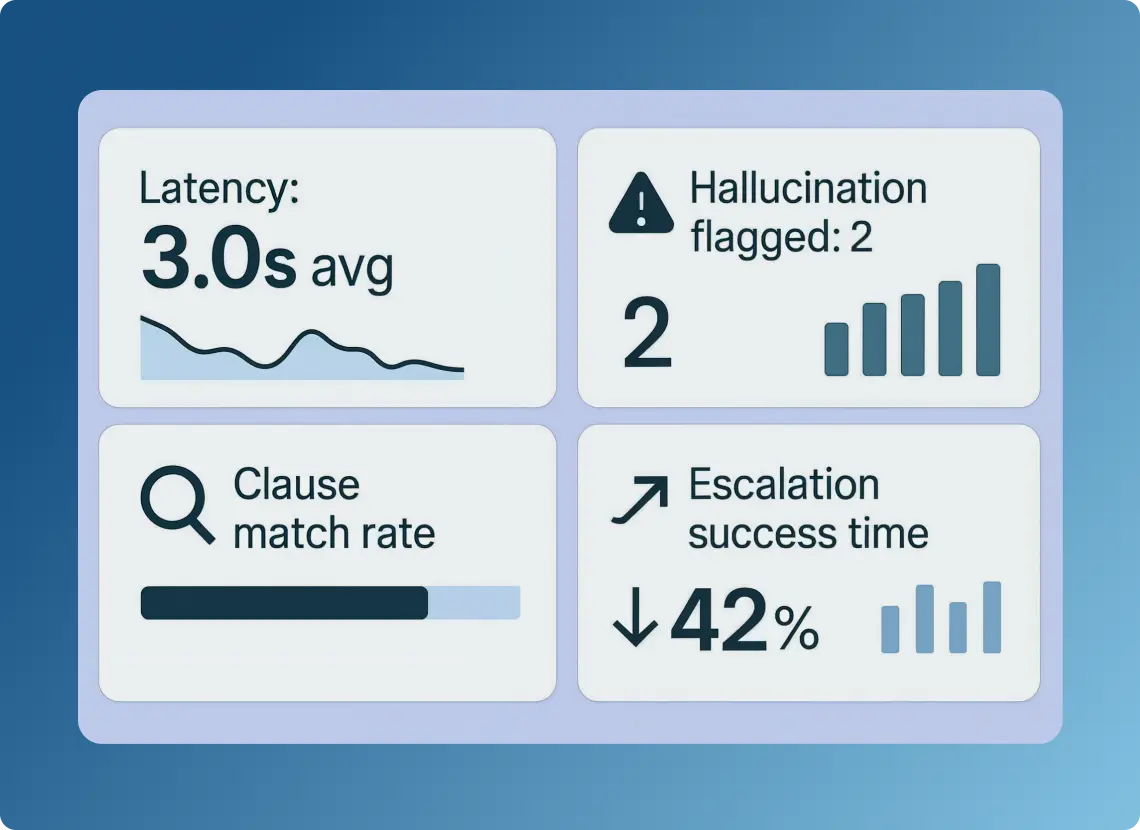

5. Live Monitoring, Clause Coverage, and Behavior Flags

To maintain quality and preempt errors, GroupBWT implemented a monitoring layer that tracked:

- system response latency per message

- hallucination or unverifiable output detection

- missed clause coverage and feedback-triggered flags

- escalation and resolution times

Monitoring metrics were streamed into Power BI dashboards via a secure API and ETL layer, giving real-time visibility and automatically routing feedback into the monthly model retraining cycles.
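A sketch of how per-message events might be rolled up before export to a dashboard, assuming a hypothetical `MessageEvent` schema. A reply that cites no clause and was not escalated serves here as a crude proxy for unverifiable output; real hallucination detection would need more than this. Sessions that users flagged or that produced uncited replies are queued for the monthly retraining set.

```python
import statistics
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class MessageEvent:
    """Hypothetical per-message monitoring event."""
    session_id: str
    latency_s: float
    clause_id: Optional[str]      # None: the reply cited no clause
    escalated: bool
    user_flagged: bool

def summarize(events: list[MessageEvent]) -> dict:
    if not events:
        raise ValueError("no events to summarize")
    latencies = [e.latency_s for e in events]
    # 'inclusive' keeps the percentile inside the observed range;
    # index 18 of the 19 cut points from n=20 is the 95th percentile.
    p95 = (statistics.quantiles(latencies, n=20, method="inclusive")[18]
           if len(latencies) >= 2 else latencies[0])
    # Crude proxy for unverifiable output: an uncited reply that was not escalated.
    uncited = [e for e in events if e.clause_id is None and not e.escalated]
    sessions = {e.session_id for e in events}
    escalated = {e.session_id for e in events if e.escalated}
    retrain = {e.session_id for e in events if e.user_flagged} | {e.session_id for e in uncited}
    return {
        "mean_latency_s": statistics.fmean(latencies),
        "p95_latency_s": p95,
        "unverifiable_replies": len(uncited),
        "escalation_rate": len(escalated) / len(sessions),
        "retraining_queue": sorted(retrain),
    }
```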

Turned Static Scripts into Adaptive Workflows

This system replaced a high-risk support gap with a modular, traceable, clause-based interaction layer that protects both the business and its policyholders.

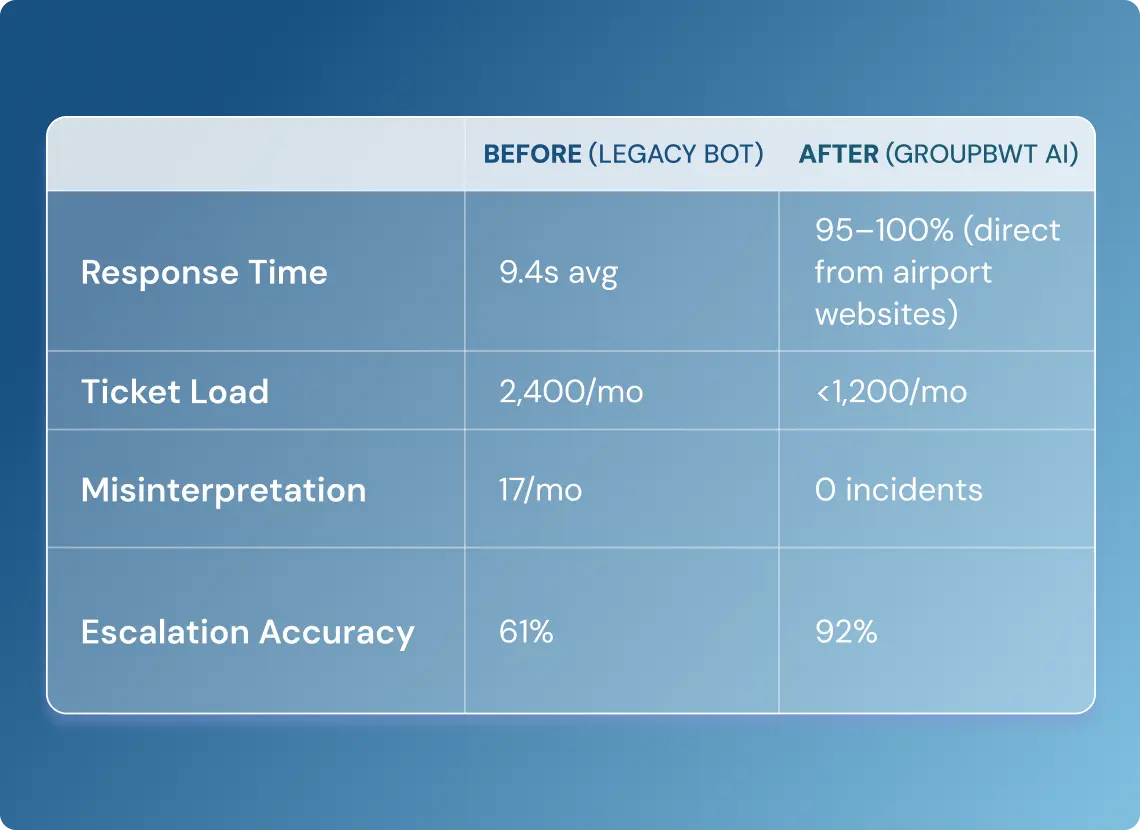

- Response Completion Time Improved by 68%

Average response time per user query decreased from 9.4s to 3.0s, and fewer than 5% of sessions fell back to live agents.

Source: client’s internal LLM routing benchmarks

- 1,200+ Repetitive Tickets Removed Monthly

Clause lookups, policy checks, and eligibility queries were automated, reducing monthly manual workload by over 50%.

Source: client’s pre- and post-automation support logs

- Zero Policy Misinterpretations Logged Post-Launch

All chatbot replies cite version-controlled contract clauses, with no misinterpretation cases recorded after go-live.

Source: internal QA audit reports provided by the client

- Full Legal Audit Traceability Achieved

All interactions are logged with clause IDs, timestamps, and PII redaction, supporting compliance with GDPR, HIPAA, and CCPA.

Source: client’s compliance dashboards and internal audit logs

- 24% Improvement in Model Accuracy from Feedback Loops

Monthly fine-tuning on flagged sessions improved clause matching, intent detection, and escalation routing.

Source: internal model training reports derived from client-side analytics

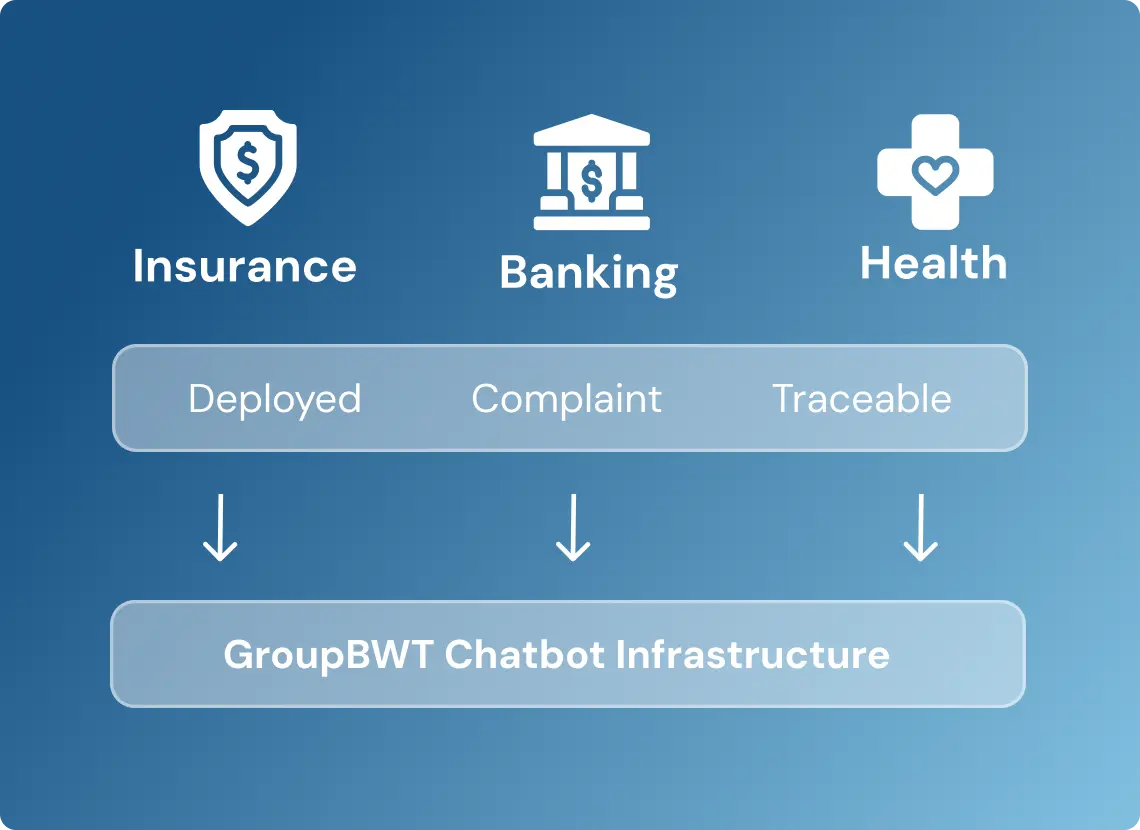

- Scaled Across Two Regulated Verticals in Under 90 Days

The solution is now deployed in both insurance and banking, meeting policy-specific automation and compliance criteria.

Source: enterprise rollout documentation from client deployments

This infrastructure now supports legally grounded, clause-aware automation across insurance and banking: scalable, safe, and built for regulated environments.

What began as a support bottleneck turned into an AI transformation. GroupBWT’s compliance-first chatbot infrastructure gave the client a scalable system that didn’t just answer faster—it answered smarter, safer, and with full legal traceability.

These deployments are fully compliant with EU and US regulations (GDPR, HIPAA, GLBA), with the chatbot operating in secure environments that ensure no data is shared with LLM providers like OpenAI.

Users’ personal information is never exposed, and every session is auditable, consent-tracked, and protected by retention rules. All metrics were validated through internal stakeholder reviews.
