Web scraping is a broad topic, ranging from basic definitions to commercial uses and its potential to shape the direction of data-driven organizations. Web crawling is a related term that often comes up in the same context. Although the two terms are sometimes used interchangeably, it is important to understand the distinction between web crawlers and web scrapers.

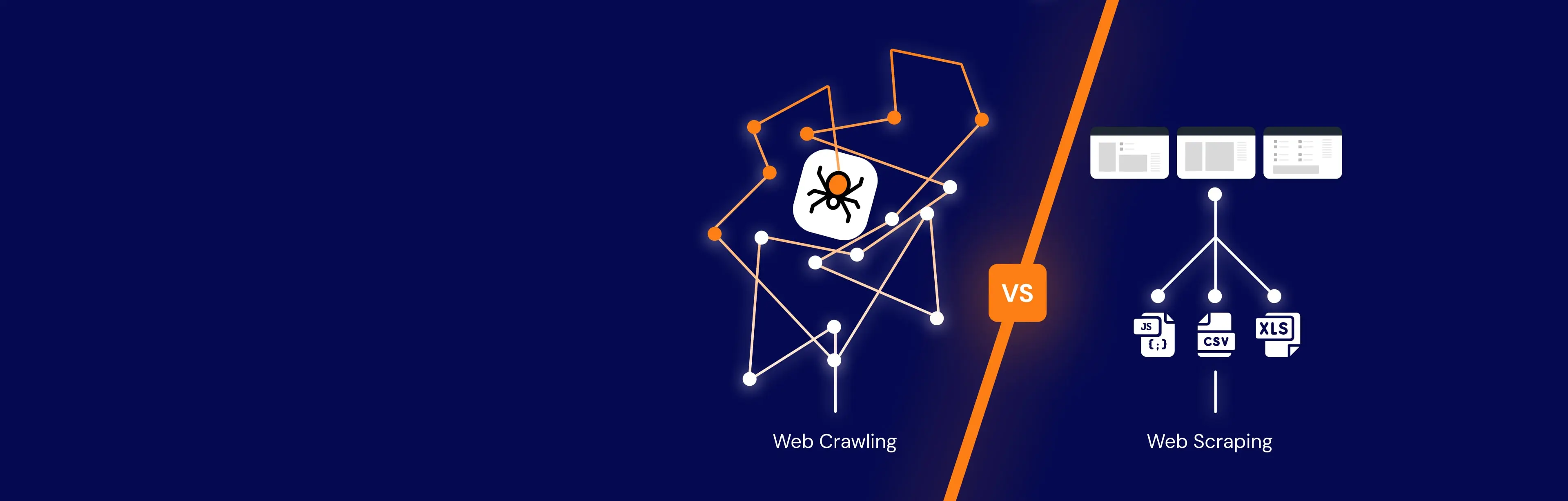

Web crawling collects pages to build indices or collections, and search engines rely on it to index the web. Web scraping, by contrast, involves downloading web pages to extract specific data sets for analysis, such as price information, product details, SEO data, or any other data set of interest.

In short, web crawling finds relevant URLs or links on a website, while web scraping collects specific data from one or more websites. In practice, the two frequently work together. For example, you may use a web scraper to visit the URLs you found through web crawling, download the HTML, and extract the information you want.

Data scraping: what is it?

Data scraping is the practice of obtaining publicly accessible data and importing it into a local file or database for later use. The source data may be stored locally on your machine or be available online. Web scraping is a more specialized form of data scraping that requires an internet connection to access online material. To ensure accuracy and efficiency, it is usually carried out with automated scripts or scraping frameworks.
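As a minimal sketch of the "importing it into a local file or database" step, the example below stores a few records in SQLite using Python's standard library. The records, the `products` table, and the `save_records` helper are hypothetical and stand in for data a scraper has already collected.

```python
import sqlite3

# Hypothetical records, standing in for data a scraper has already collected.
records = [
    {"title": "Blue Widget", "price": 19.99},
    {"title": "Red Widget", "price": 24.50},
]


def save_records(conn, rows):
    """Create the table if it does not exist, then insert every record."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (title TEXT, price REAL)")
    conn.executemany(
        "INSERT INTO products (title, price) VALUES (:title, :price)", rows
    )
    conn.commit()


# ":memory:" keeps the sketch self-contained; pass a file path to persist data.
conn = sqlite3.connect(":memory:")
save_records(conn, records)

stored = conn.execute(
    "SELECT title, price FROM products ORDER BY price"
).fetchall()
print(stored)  # [('Blue Widget', 19.99), ('Red Widget', 24.5)]
```

A database is only one option here; for small jobs, writing the same records to a CSV file would serve equally well.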

Using a unique web scraping methodology, GroupBWT creates customized data platforms that assist companies in gathering, transforming, and turning difficult data into insights that can be put to use. This guarantees the effectiveness and scalability of their data approach.

Web scraping: what is it?

Web scraping is the process of extracting publicly accessible internet data and saving it for analysis. Libraries such as BeautifulSoup or frameworks such as Scrapy are frequently used for this task. More complicated projects that involve sophisticated or high-volume data extraction may call for custom-built solutions, like those provided by GroupBWT.

Web crawling: what is it?

Web crawling, sometimes called data crawling, is the methodical process of traversing the internet to index or gather material. Crawlers, also known as spiders or spider bots, search the web for the information the user requires while also looking for more URLs to crawl. The main parts of a crawler’s architecture are:

- Queue of URLs: A list of pages that still need to be visited and indexed.

- Parsing Logic: Algorithms for examining a web page’s structure.

- Respecting Robots.txt: Observing the rules established by websites to ensure compliance and ethical data gathering.
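The first two components above can be sketched with Python's standard library. This is an illustration under stated assumptions, not a production crawler: the `LinkExtractor` class, the `extract_links` helper, and the sample page are hypothetical, and the page is an inline string rather than a real download. Robots.txt handling is left out of this sketch.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Parsing logic: collect the href of every <a> tag on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page URL, drop #fragments.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                self.links.append(absolute)


def extract_links(base_url, html):
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


# Hypothetical page content; a real crawler would download this.
page = '<a href="/products/1">One</a> <a href="products/2#reviews">Two</a>'

frontier = deque(["http://example.com/"])  # queue of URLs still to visit
current = frontier.popleft()
frontier.extend(extract_links(current, page))
print(list(frontier))
# ['http://example.com/products/1', 'http://example.com/products/2']
```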

Given a target website such as http://example.com, for instance, a crawler will:

- Explore product pages.

- Identify the relevant information, such as pricing, titles, and descriptions.

- Pass this information to the web scraper, which downloads it for further analysis.

Crawling vs. Scraping: Key Distinctions

The following definitions help clarify the difference between web scraping and web crawling:

Web Crawling: The process of navigating through pages and indexing their content. It involves discovering new information and URLs.

Web Scraping: The process of extracting specific information from web pages, often pages that were discovered via crawling.

The two methods are frequently combined. Crawlers locate and collect URLs, whereas scrapers extract only the information that is required. Together, they provide a powerful method for gathering data.

Technical Considerations for Web Scraping and Crawling

Understanding the underlying mechanics can make it easier to select the appropriate tools and tactics for your project.

The Operation of Web Crawlers

Web crawlers traverse websites using graph traversal algorithms such as breadth-first search (BFS) and depth-first search (DFS). They follow the links on a page and download the HTML of each linked page for further analysis. Crawlers follow the rules in a website’s robots.txt file, which specify which parts of the site may be crawled and which are off limits; limiting the request rate in turn helps avoid overwhelming the server. To prevent the same material from being indexed more than once, web crawlers also use deduplication.
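The traversal described above can be sketched as follows. This is a simplified illustration: the `SITE` link graph, the robots.txt rules, the `crawl` function, and the `example-bot` user agent are all hypothetical, and the pages are held in memory rather than fetched. The same loop yields breadth-first order when the frontier is used as a queue and depth-first order when it is used as a stack.

```python
from collections import deque
from urllib.robotparser import RobotFileParser

# Hypothetical site: each URL maps to the links found on that page.
SITE = {
    "http://example.com/": ["http://example.com/a", "http://example.com/b"],
    "http://example.com/a": ["http://example.com/c", "http://example.com/private/x"],
    "http://example.com/b": ["http://example.com/c", "http://example.com/"],
    "http://example.com/c": [],
    "http://example.com/private/x": [],
}

# Hypothetical robots.txt rules, parsed from text instead of fetched.
robots = RobotFileParser()
robots.parse(["User-agent: *", "Disallow: /private/"])


def crawl(start, breadth_first=True, agent="example-bot"):
    """Return URLs in visit order, skipping duplicates and disallowed paths."""
    frontier = deque([start])
    seen = set()  # deduplication: every URL is visited at most once
    order = []
    while frontier:
        # Queue (FIFO) gives BFS; stack (LIFO) gives DFS.
        url = frontier.popleft() if breadth_first else frontier.pop()
        if url in seen:
            continue
        seen.add(url)
        order.append(url)
        for link in SITE.get(url, []):
            if link not in seen and robots.can_fetch(agent, link):
                frontier.append(link)
    return order


print(crawl("http://example.com/"))
# ['http://example.com/', 'http://example.com/a',
#  'http://example.com/b', 'http://example.com/c']
print(crawl("http://example.com/", breadth_first=False))
# ['http://example.com/', 'http://example.com/b',
#  'http://example.com/c', 'http://example.com/a']
```

Note that `/private/x` never appears in either result because the robots.txt rules disallow it, and `/c` appears only once even though two pages link to it.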

The Operation of Web Scrapers

Web scrapers extract the necessary data by parsing HTML. Using libraries such as BeautifulSoup, they look for the specific tags or elements that contain the required information. Scrapy is another powerful framework that automates this process and enables efficient, large-scale data extraction. Custom solutions can further enhance the scraping process by handling intricate page structures or applying more advanced data processing methods.
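To illustrate the idea of locating specific tags, here is a minimal sketch that uses Python's built-in `html.parser` so it runs without extra installation; in practice a library such as BeautifulSoup would usually make this shorter. The `ProductParser` class, the `scrape_product` helper, the CSS class names, and the sample markup are hypothetical.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Capture the text of elements whose class names a wanted field."""

    # Hypothetical mapping from CSS class to output field name.
    FIELDS = {"product-title": "title", "product-price": "price"}

    def __init__(self):
        super().__init__()
        self.record = {}
        self._current = None  # field currently being captured, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for cls in classes:
            if cls in self.FIELDS:
                self._current = self.FIELDS[cls]

    def handle_data(self, data):
        # Store the first non-blank text that follows a matching tag.
        if self._current and data.strip():
            self.record[self._current] = data.strip()
            self._current = None


def scrape_product(html):
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    record = parser.record
    if "price" in record:
        # Convert "$19.99" to a number so it can be analyzed later.
        record["price"] = float(record["price"].lstrip("$"))
    return record


html = """
<div class="product">
  <h1 class="product-title">Blue Widget</h1>
  <span class="product-price">$19.99</span>
</div>
"""
print(scrape_product(html))  # {'title': 'Blue Widget', 'price': 19.99}
```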

Considering the Law and Ethics

Although web crawling and scraping are effective techniques, they carry ethical and legal obligations. Here are several important rules:

- Honor Robots.txt: Make sure you always review and follow the guidelines provided in a website’s robots.txt file.

- Prevent Overloading Servers: To prevent the website from experiencing performance problems, be careful about how often you submit requests.

- Privacy and Compliance: Make sure that your data-gathering procedures comply with regulations such as the GDPR, particularly when handling personal data.

- Permission and Use: To prevent legal issues, abide by a website’s terms of service if they forbid scraping.

By adhering to these guidelines, you can help ensure that your data-gathering operations are ethically and legally sound.
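One common way to avoid overloading a server is to enforce a minimum delay between requests to the same host. The sketch below shows one possible approach; the `Throttle` class and its parameters are hypothetical, and the right delay depends on the target site.

```python
import time
from urllib.parse import urlparse


class Throttle:
    """Enforce a minimum delay between requests to the same host."""

    def __init__(self, min_delay, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay
        self._clock = clock   # injectable so the class can be tested
        self._sleep = sleep
        self._last_request = {}  # host -> time of the previous request

    def wait(self, url):
        """Sleep if needed, record the request time, return seconds slept."""
        host = urlparse(url).netloc
        now = self._clock()
        last = self._last_request.get(host)
        pause = 0.0
        if last is not None:
            pause = max(0.0, self.min_delay - (now - last))
        if pause > 0:
            self._sleep(pause)
        self._last_request[host] = now + pause
        return pause


throttle = Throttle(min_delay=0.1)
for url in ["http://example.com/a", "http://example.com/b"]:
    slept = throttle.wait(url)
    # A real crawler would send the HTTP request for `url` here.
    print(f"{url}: waited {slept:.2f}s")
```

The first request to a host goes out immediately; later requests to the same host are delayed until the minimum interval has passed, while requests to other hosts are unaffected.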

The Benefits of Custom Web Scraping Services

For simple tasks, off-the-shelf web scraping tools can be helpful, but custom-built solutions have several benefits:

- Flexibility and Customization: Custom solutions can be tailored to extract precisely the data you want, even from dynamic or complicated websites.

- Performance Optimization: Because custom scrapers are designed to be quick and effective, gathering large amounts of data takes less time.

- Scalability: Custom platforms may expand with your data demands to handle bigger datasets and more frequent data changes.

- Security and Reliability: A customized setup lets you build in security measures that safeguard your data and reduce the risk of your scraper being detected or blocked by target websites.

With a focus on developing custom web scraping and crawling solutions, GroupBWT makes sure that companies get the data they want at the right time without sacrificing effectiveness or compliance.

Real-World Uses for Web Scraping

In many different business domains, web scraping is crucial. Here are a few real-world examples:

- Pricing and E-commerce Strategy: To improve their pricing strategy, businesses keep an eye on what their rivals are charging. Real-time price tracking and analysis are made possible via web scraping.

- Lead Generation and Market Research: Companies utilize scraping to collect market data, examine trends, and produce leads. Customer sentiment can be discovered, for instance, by scraping customer reviews.

- Product Development: By scraping e-commerce websites for product descriptions, stock levels, and trends, businesses can stay ahead of the competition.

- Brand Monitoring and Public Relations: Web scraping helps track online reputation and identify brand mentions. It can also be used to detect ad fraud or improve ad performance.

- Financial Data Collection: Financial firms use web scraping to obtain current market data for improved risk management and investment strategies.

Conclusion

Developing a successful data-gathering strategy requires an understanding of the distinctions between web crawling and web scraping. Web crawling is about finding and indexing content, while web scraping is about extracting and organizing that content for analysis. When combined, these techniques give organizations the ability to make data-driven choices and stay ahead of the competition.

But not all data solutions are created equal. Compared to generic tools, custom platforms such as those created by GroupBWT offer greater flexibility, performance, and scalability. By investing in a customized strategy, businesses can make sure their data collection is effective and compliant.