In 2025, web scraping has matured from a tactical hack into a strategic, high-stakes operation. It’s no longer just about bypassing CAPTCHAs or rotating proxies—it’s about building resilient, compliant systems that can reliably extract data at scale without violating data security protocols or triggering legal risk.

The regulatory landscape has caught up fast. Today’s scraping strategies must align with frameworks like the General Data Protection Regulation (GDPR), the Digital Services Act (DSA), and the California Consumer Privacy Act (CCPA). Misinterpreting robots.txt is no longer a technical oversight—it’s a compliance violation that could land your company in court. Just ask any team navigating the shifting interpretation of the Computer Fraud and Abuse Act (CFAA) in the U.S.

And while the legal barriers mount, the technical ones haven’t eased. Sites change structure frequently, fingerprinting gets more aggressive, and stealth automation tools must mimic human behavior down to session memory and timing patterns. Scaling your scraper from 10,000 to 10 million pages? That’s no longer a script—it’s a system. And that system is likely powered by microservices, not monolithic codebases.

Challenges in Web Scraping in 2025

Most teams still treat scraping as a technical task—one crawler, one script, one page. But the reality is: when API access is locked and legal compliance is mandatory, that approach breaks fast.

According to Mordor Intelligence, the global web scraping market is now worth $1.03 billion and is projected to reach $2.00 billion by 2030, growing at a 14.2% CAGR. The shift? It’s not just about scraping websites. It’s about building resilient, observable systems that extract market data legally, reliably, and at scale.

The growth is led by sectors like finance, e-commerce, and advertising, where internal teams rely on scraped data for pricing strategy, product monitoring, and AI model training. Financial services alone now account for 30% of total spending. Cloud scraping dominates (68%), and demand for compliance-grade pipelines is replacing basic proxy scripts.

If your current stack is still patching selectors manually or bypassing robots.txt without logging, you’re not just at risk—you’re behind the market curve.

From Scripts to Systems: Why Microservices Now Power Enterprise Scraping

Scraper breakage rates have climbed sharply. In some industries, 10–15% of crawlers now require weekly fixes due to DOM shifts, fingerprinting, or endpoint throttling. Maintenance becomes a full-time operation before scale even begins.

One Reddit user put it simply:

“Bypassing anti-bot mechanisms is the most difficult aspect.” — r/webscraping

That’s only the first hurdle. Now, add regional consent frameworks, legal liability from robots.txt violations, shifting compliance rules (such as GDPR, DSA, and the California Privacy Rights Act), and the growing backlash against AI crawlers.

These aren’t just technical blockers—they’re operational liabilities. Scraping is now a compliance issue, a performance issue, and a trust issue.

This article unpacks every real-world web scraping challenge teams face today. No buzzwords. No recycled lists. Just what’s breaking and how to fix it—with architectural, legal, and operational clarity.

Core Web Scraping Challenges: An Unvarnished Breakdown

Anti-bot Walls: Where Most Scrapers Die First

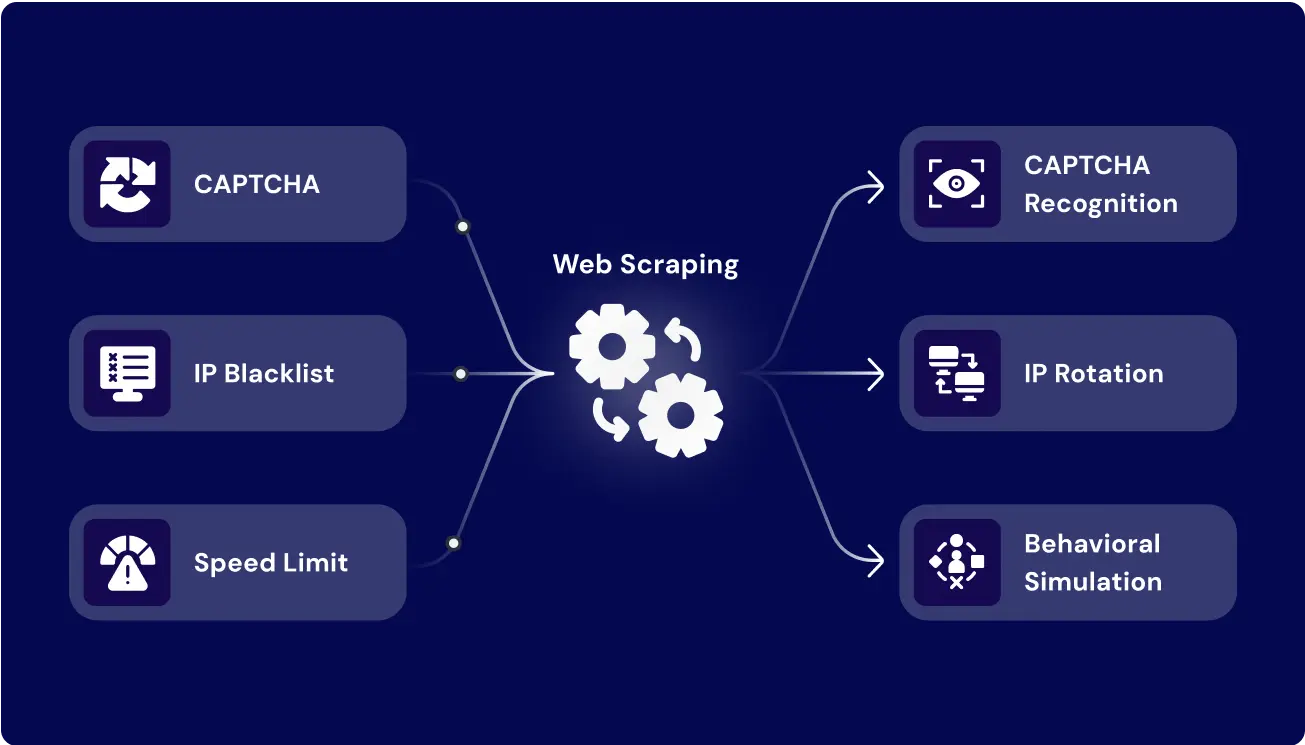

Device (or machine) fingerprinting, behavior analysis, TLS fingerprinting, and header validation: detection methods in 2025 go far beyond IPs and cookies.

CAPTCHAs have evolved, too. Bot defenses now use hidden traps (honeypots), such as links or form fields that human visitors never see but naive bots follow, to quietly detect and block scrapers.

This is now one of the most critical challenges in web scraping: even a well-structured scraper will be flagged before it hits the data layer.

No single trick works forever. But teams that stay resilient use:

- Rotating identities: device fingerprint spoofing, rotating browser versions, user agent obfuscation.

- Stealth headless browsers: Puppeteer or Playwright with custom behaviors mimicking humans.

- Session memory replication: mimicking the behavior of real logged-in users—like remembering clicks or scrolls—to make scrapers look more human and avoid detection.

In some workflows, human-in-the-loop CAPTCHA solving is embedded via outsourced clickstream teams.

When one fintech aggregator switched from static IP proxies to a smart identity layer, their data delivery success jumped from 42% to 93%, even on heavily defended domains.

This web scraping challenge isn’t going away. But treating it as an arms race—and using an evolving defensive stack—keeps your pipeline stable and compliant.
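Whatever defensive stack you run, the pipeline first has to notice that it is being blocked instead of silently storing challenge pages. The sketch below shows one way to classify responses using the signals listed later in this article (403 errors, CAPTCHA walls) and to back off with jitter. The marker strings, function names, and default delays are assumptions for illustration, not values taken from any vendor.

```python
import random

# Assumed markers; real challenge pages differ by vendor and change over time.
CHALLENGE_MARKERS = ("captcha", "verify you are human", "access denied")


def classify_response(status: int, body: str) -> str:
    """Return 'ok', 'throttled', 'blocked', or 'challenge' for one HTTP response."""
    if status == 429:
        return "throttled"
    if status in (401, 403):
        return "blocked"
    text = body.lower()
    if any(marker in text for marker in CHALLENGE_MARKERS):
        return "challenge"  # a 200 response that is really a CAPTCHA wall
    return "ok"


def backoff_seconds(attempt: int, base: float = 2.0, cap: float = 300.0) -> float:
    """Exponential backoff with full jitter: uniform in [0, min(cap, base * 2**attempt)]."""
    return random.uniform(0, min(cap, base * 2 ** attempt))
```

Counting `challenge` and `blocked` results per domain gives an early warning before data delivery rates drop.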

DOM Drift and Dynamic Rendering: The Hidden Breaker

Let’s say you’ve bypassed all anti-bot systems.

Now you face something worse: every site structure shifts slightly… often silently.

- A class name disappears.

- A container becomes a shadow DOM.

- A button now loads content via AJAX.

- Pagination switches from numbered links to infinite scroll.

These invisible shifts break static selectors instantly, causing hours of undetected data loss.

It’s one of the most insidious web scraping challenges in production setups. The system isn’t blocked; it just scrapes nothing useful.

Move beyond hardcoded selectors:

- Contextual scraping: Look for semantic cues, not rigid XPaths.

- Schema monitoring: Track version diffs over time and alert on page structure shifts.

- Self-healing scrapers: Automatically test fallback selectors based on historical patterns.
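A self-healing scraper can be as simple as an ordered list of fallback selectors plus a signal that tells you which one matched. Here is a minimal sketch using only the Python standard library. The `(tag, class)` selector format, the class names, and `PRICE_SELECTORS` are hypothetical; a production setup would more likely use a full CSS or XPath engine.

```python
from html.parser import HTMLParser

VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}  # elements with no end tag


class TextByClass(HTMLParser):
    """Records the first non-empty text found inside each (tag, class) element."""

    def __init__(self):
        super().__init__()
        self.open = []   # stack of (tag, [classes]) for currently open elements
        self.found = {}  # (tag, class) -> first text seen inside that element

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            classes = (dict(attrs).get("class") or "").split()
            self.open.append((tag, classes))

    def handle_endtag(self, tag):
        # Pop back to the matching open tag; ignore stray end tags.
        if any(open_tag == tag for open_tag, _ in self.open):
            while self.open.pop()[0] != tag:
                pass

    def handle_data(self, data):
        text = data.strip()
        if text:
            for tag, classes in self.open:
                for cls in classes:
                    self.found.setdefault((tag, cls), text)


def extract_with_fallbacks(html, selectors):
    """Try selectors in order. Returns (value, index_used), or (None, -1) if none match."""
    parser = TextByClass()
    parser.feed(html)
    for index, selector in enumerate(selectors):
        if selector in parser.found:
            return parser.found[selector], index
    return None, -1


# Hypothetical selector history for a price field, newest layout guess last.
PRICE_SELECTORS = [("span", "price"), ("span", "product-price"), ("div", "amount")]
```

An index greater than 0 means the primary selector has stopped matching, which is worth an alert even though data is still flowing.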

In one high-frequency scraping case for a B2B ecommerce site, implementing version-aware selectors reduced downtime by 73% over two months, even as the frontend deployed 14 hotfixes.

The key: expect DOM drift and build for change. If your logic assumes static HTML, you’ve already lost.
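One way to "expect drift" is to fingerprint the page structure on every crawl and alert when it changes. The sketch below hashes the sequence of tags and class names while ignoring text, so price updates do not trigger alerts but a renamed class does. The function name and the 16-character digest length are arbitrary choices made for this example.

```python
import hashlib
from html.parser import HTMLParser


class _Skeleton(HTMLParser):
    """Collects 'tag.class1.class2' for every start tag, ignoring text content."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_starttag(self, tag, attrs):
        classes = sorted((dict(attrs).get("class") or "").split())
        self.parts.append(".".join([tag] + classes))


def structure_fingerprint(html: str) -> str:
    """Short hash of the page's tag/class skeleton; changes when the layout changes."""
    parser = _Skeleton()
    parser.feed(html)
    digest = hashlib.sha256("|".join(parser.parts).encode("utf-8"))
    return digest.hexdigest()[:16]
```

Storing the fingerprint next to each crawl makes it possible to diff versions over time and tie a drop in field coverage to a specific frontend change.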

Scaling Logic Across Complex Catalogs

It’s one thing to scrape a single product page. But what happens when you have nested categories, dynamic filters, paginated SKUs, JS-loaded reviews, and region-specific layouts?

Scraping 10 pages = proof of concept.

Scraping 10 million pages = architectural problem.

This is a web scraping challenge that almost every data ops lead underestimates. What looks stable in QA collapses under production load. The issues compound:

- Recursive loops from broken pagination.

- Filters that inject new endpoints via JS and change on every scroll.

- Geo-specific content makes selectors non-transferable across locales.

Scalability demands modular logic, not monoliths.

- Hierarchical scraper design: Crawl paths, product extractors, reviews = separated logic.

- Dynamic pagination handling: Timeout-aware infinite scroll scripts + link-based fallbacks.

- Request queuing & throttling: Distributed crawling with concurrency guards.
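Two of these guards fit in a few lines: a visited set that stops recursive pagination loops, and a semaphore that caps concurrent requests. The following is a sketch under stated assumptions. `fetch` is a hypothetical coroutine that returns `(item, next_urls)`, and `PAGES` and `fake_fetch` exist only to simulate a site whose last page links back to the first.

```python
import asyncio


async def crawl(start_url, fetch, max_pages=1000, max_concurrency=5):
    """Breadth-first crawl with a visited set, a page budget, and a concurrency cap."""
    seen = {start_url}
    frontier = [start_url]
    results = {}
    gate = asyncio.Semaphore(max_concurrency)  # concurrency guard

    async def guarded(url):
        async with gate:
            return url, await fetch(url)

    while frontier and len(results) < max_pages:
        batch = frontier[: max_pages - len(results)]  # never exceed the budget
        frontier = []
        fetched = await asyncio.gather(*(guarded(url) for url in batch))
        for url, (item, next_urls) in fetched:
            results[url] = item
            for nxt in next_urls:
                if nxt not in seen:  # breaks loops caused by broken pagination
                    seen.add(nxt)
                    frontier.append(nxt)
    return results


# Simulated site: page 3 links back to page 1.
PAGES = {"/p1": ["/p2"], "/p2": ["/p3"], "/p3": ["/p1"]}


async def fake_fetch(url):
    return f"data for {url}", PAGES[url]


results = asyncio.run(crawl("/p1", fake_fetch))
```

In a distributed setup the `seen` set and the frontier would live in a shared queue or store, but the guard logic stays the same.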

One enterprise team extracting real-time competitor data from 14 marketplaces modularized their logic into 8 job-specific micro-extractors. Result? 14x fewer job failures, even as the site structure changed 9 times in a quarter.

That’s the difference between code and infrastructure.

Most common web scraping challenges aren’t technical—they’re architectural. At scale, logic fragility turns into data chaos.

Data Drift, Duplication, and Sampling Bias

Scraping success isn’t just getting data—it’s getting the right data, at the right time, without duplication or bias.

And here’s the real kicker: bad data still looks good on the surface. You won’t notice until your product recs are off, your ML model skews toward outliers, or your pricing analysis is distorted.

Common issues:

- Duplicated entities across URLs or languages.

- Outdated stock levels that cause incorrect listings.

- Missing fields due to dynamic rendering or geo-blocks.

- Sampling bias—scraping only what loads fastest or from specific regions.

You need verification layers and QA, just like any other data pipeline.

- Deduplication logic: Hash-based entity comparison or canonicalization.

- Verification passes: Multiple timestamps, sources, or test crawls.

- Schema validation: Check for nulls, missing attributes, or suspicious uniformity.
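Hash-based deduplication depends on canonicalizing first, so that the same entity reached through different URLs or casing collapses to one key. Here is a minimal sketch. The tracking-parameter list and the identifying fields (`brand`, `name`, `sku`) are assumptions, and the right choice depends on your catalog.

```python
import hashlib
import unicodedata
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of query parameters that do not change the page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "ref"}


def canonical_url(url: str) -> str:
    """Lowercase scheme/host, drop tracking params, sort the query, strip trailing slash."""
    parts = urlsplit(url)
    query = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in TRACKING_PARAMS)
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), ""))


def entity_key(record: dict, fields=("brand", "name", "sku")) -> str:
    """SHA-256 over normalized identifying fields (hypothetical schema)."""
    normalized = [
        " ".join(unicodedata.normalize("NFKC", str(record.get(f, ""))).casefold().split())
        for f in fields
    ]
    return hashlib.sha256("\x1f".join(normalized).encode("utf-8")).hexdigest()


def deduplicate(records):
    """Keep the first record seen for each entity key."""
    seen, unique = set(), []
    for record in records:
        key = entity_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique
```

Deduplicating across languages usually needs a language-independent identifier such as a SKU or GTIN in `fields`, since translated names will never hash to the same value.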

One marketplace analytics platform reduced downstream model error by 21%, simply by adding a 2-pass scraper and field QA validator to flag anomalies in the source HTML.

These are lessons teams usually learn only after breakage hits production. Build for QA upfront, or prepare to debug in the dark.

Compliance, Consent, and the Robots.txt Problem

Let’s be clear: scraping isn’t illegal. But unauthorized scraping can become a compliance liability—fast.

From hiQ Labs v. LinkedIn in the U.S. to GDPR-based scraping cases in the EU, the legal landscape is shifting. And robots.txt is no longer treated as a polite suggestion.

Risks include:

- Scraping personal data without legal basis (GDPR fines).

- Violating terms of service on B2B platforms.

- Cross-border scraping without jurisdictional routing.

- Robots.txt parsing skipped entirely in legacy pipelines.

Scraping workflows need built-in legal observability:

- Consent-aware routing: Domains flagged by jurisdiction + purpose.

- Logging + audit trail: Every endpoint hit, every domain visited, every schema extracted.

- Legal review guardrails: Stop extraction if flagged by legal tags or new compliance rules.
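In code, legal observability can start as a single gate that every request passes through: it checks policy, then writes an audit record whether the request is allowed or denied. The sketch below is illustrative only. The policy table, the legal-hold list, the domain names, and the record fields are all hypothetical and would come from your legal team, not from code.

```python
import json
import time
from dataclasses import asdict, dataclass

# Hypothetical policy: (jurisdiction, purpose) pairs cleared by legal review.
ALLOWED = {("US", "pricing"), ("EU", "pricing")}
# Hypothetical domains flagged by legal; extraction stops for these.
LEGAL_HOLD = {"jobs.example.de"}


@dataclass
class AuditRecord:
    ts: float
    domain: str
    url: str
    jurisdiction: str
    purpose: str
    decision: str


def authorize(domain, url, jurisdiction, purpose, log):
    """Decide whether a request may run and append one JSON audit line to `log`."""
    if domain in LEGAL_HOLD:
        decision = "deny:legal_hold"
    elif (jurisdiction, purpose) not in ALLOWED:
        decision = "deny:no_policy"
    else:
        decision = "allow"
    record = AuditRecord(time.time(), domain, url, jurisdiction, purpose, decision)
    log.append(json.dumps(asdict(record)))
    return decision == "allow"
```

Here `log` is a plain list; in practice it would be an append-only store so that the trail survives an audit. Denied requests are logged too, because auditors tend to ask what was blocked as well as what was fetched.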

GroupBWT’s compliance layer was deployed in a multi-jurisdictional retail project spanning EU and LATAM markets. With policy-based routing and endpoint logs, the team passed two GDPR audits without penalty—while competitors were shut down mid-campaign.

At this scale, every web scraping challenge is also a legal exposure point. Scraping at scale without observability is no longer an option.

Legal Risk Isn’t Theoretical

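hiQ v. LinkedIn and the Robots.txt Dilemma

In 2019, the Ninth Circuit sided with hiQ, holding that scraping publicly accessible profiles likely did not violate the CFAA. The Supreme Court vacated that decision in 2021, and the Ninth Circuit reaffirmed it in April 2022. But in November 2022, the district court found that hiQ had breached LinkedIn’s User Agreement, and the parties settled soon after. The lesson for 2025: even where scraping public data is not “unauthorized access,” terms of service and signals like robots.txt are increasingly enforced like a contract, and GDPR and the Digital Services Act (DSA) add their own obligations in the EU.

Some jurisdictions treat scraping data behind a login as a violation of terms, even when the same data is publicly visible elsewhere. Others say intent matters: Are you scraping for AI training? Competitive analysis? Bulk resale?

This is no longer a gray area. It’s one of the fastest-evolving challenges in web scraping, and courts are finally catching up.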

Solution

Build scraping pipelines that can:

- Parse robots.txt and flag blocked paths.

- Log user-agent headers and respect opt-out policies.

- Route by jurisdiction, e.g., block scraping if no GDPR basis is present.
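The first of these capabilities needs nothing beyond Python’s standard library. Here is a small sketch using `urllib.robotparser`; the robots.txt content, the `acme-crawler` user agent, and the URLs are made-up examples.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content; in production this is fetched per domain and cached.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5

User-agent: example-bot
Disallow: /
"""


def load_rules(robots_text: str) -> RobotFileParser:
    """Parse robots.txt text into a rule set without making a network call."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def blocked_paths(rules: RobotFileParser, user_agent: str, urls):
    """Return the URLs this user agent is not allowed to fetch."""
    return [url for url in urls if not rules.can_fetch(user_agent, url)]


rules = load_rules(ROBOTS_TXT)
candidates = [
    "https://example.com/products/1",
    "https://example.com/private/report",
]
flagged = blocked_paths(rules, "acme-crawler", candidates)
delay = rules.crawl_delay("acme-crawler")  # seconds requested by the site, or None
```

Flagged URLs can then be written to the audit log and dropped from the queue, and `delay` can feed the throttling layer.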

Outcome

A SaaS analytics firm scraped job boards in 11 countries, but blocked German and French boards due to robots.txt and consent flags. They retained 80% of the data and avoided $250k in potential fines.

Ignoring robots.txt in 2025 isn’t brave—it’s reckless. Treat it as part of your compliance stack.

Cloudflare, AI Crawlers, and the Default Denial Era

In July 2025, Cloudflare began blocking AI crawlers by default for newly onboarded domains. Reports of stealth crawling, including Cloudflare’s public accusations against Perplexity, have triggered industry backlash, and many enterprise platforms now preemptively deny bot traffic, even from legitimate sources.

This affects:

- Public-facing datasets.

- LLM training crawlers.

- Price comparison tools flagged as competitors.

What used to be a gray area is now a blacklist. You can’t “scrape politely” anymore. The stack knows who you are—and bans accordingly.

Compliance-grade scraping setups now require:

- User-agent governance: Registered IDs with opt-in.

- Access request logs: Create a paper trail of scraping sessions.

- CAPTCHA fallback handling: Use human verification services for solving CAPTCHAs when automated methods fail.

A US retailer blocked three major scraping engines within 48 hours of deployment. One B2B pricing intel tool used authenticated proxies, country-specific user agents, and Cloudflare-aware delays to stay under the radar—and maintain uptime.

In the 2025 web scraping landscape, good intent is not enough. Reputation matters. If your crawler is labeled hostile, you may never see the site again.

Solutions for Web Scraping Challenges

Use this reference matrix to match each web scraping challenge your team may face to its signal and solution.

Quick-Reference Table for Fixing Real Breakpoints

| Challenge | Signal | Solution |
| --- | --- | --- |
| IP bans & blocks | 403 errors, CAPTCHA walls | Proxy rotation, headless browsers |
| Dynamic DOM changes | Missing fields, broken selectors | Auto-healing selectors, schema monitoring |
| Infinite scroll issues | Incomplete listings, stuck pagination | Scroll-aware logic, timeout scripts |
| Data duplication | Repeated entries across pages | Entity hashes, deduplication layer |
| Sampling bias | Skewed data, ML model drift | Multi-pass crawlers, diversity filters |
| robots.txt violations | Legal warnings, platform takedowns | Consent routing, rules parsing |
| Cloudflare lockouts | Hard denial, full-site bans | Identity-aware agents, access scheduling |
| Scaling failure | Timeouts, memory overload | Modular scrapers, queue throttling |
| Outdated content | Price/stocks wrong or missing | Timestamp validation, cache bypass |
| Stealth AI bans | Bot blacklisted, visibility loss | Human session simulation, disclosure logic |

Note: These aren’t theory—they’ve been tested across live projects scraping marketplaces, public directories, travel aggregators, and e-commerce platforms.

When Your Scraper Breaks Because the Architecture Can’t Scale

If your scraping pipeline keeps breaking, the root cause might not be your tools—but the architecture behind them. Here’s how to tell when it’s time to rethink your entire system.

If your team is:

- Reactively patching broken selectors every week

- Working without jurisdictional logic

- Scraping more than 500k pages/month without a modular design

- Lacking session logs and legal flags

…then it’s not a scraper problem. It’s a system design flaw.

The real web scraping challenge is building systems that don’t fall apart under scrutiny, scale, or regulation.

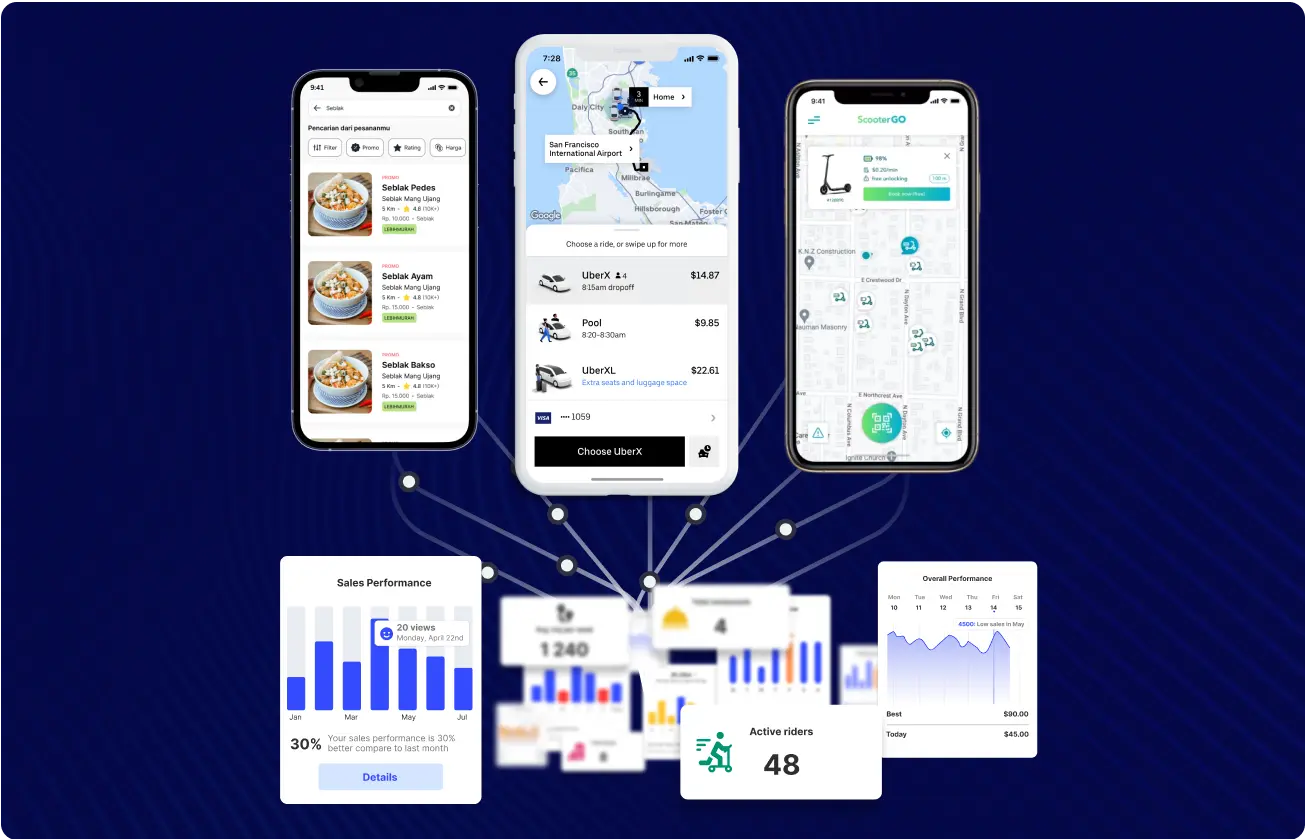

Web Scraping Use Cases: Explore Projects & Solutions

| Related Insight | Why It Matters |
| --- | --- |
| Web Scraping at Scale for Market Insights in Automotive | Scalable crawling logic applied to competitive automotive pricing and supply monitoring across multiple portals. |
| Scraping Delivery Pricing for Competitive Intelligence | Real-time data extraction from logistics and delivery apps to fuel pricing strategies and delivery SLAs. |
| Real-Time Hotel Rate Scraping | Used for dynamic pricing models, hospitality investment insights, and tracking short-term rental competition. |
| Enterprise Data Lake for External Market Signals | Centralizes external data (web + open + partner feeds) into a clean, compliant, and analytics-ready architecture. |
| Consumer Review Insights for Product Strategy | Uses user reviews scraped across channels to inform positioning, feature prioritization, and brand perception. |
| Mortgage Rate Data Extraction | High-frequency rate scraping powers real estate and mortgage marketplaces with fresh, verified financial data. |
| Digital Shelf Analytics | Tracks product presence, pricing, visibility, and competitors across large eCommerce platforms. |
| Micromobility Data Scraping | Live data on scooter/bike availability helps optimize urban logistics and mobility mapping platforms. |
| Real-Time Telecom Research | Gathers B2B telecom offers, device availability, and pricing for use in analytics dashboards and BI tools. |
| Data Extraction for Beauty Industry Benchmarking | Extracts inventory, pricing, and product insights from Sephora/Rossmann/Boots-style platforms. |

If you’re:

- Fighting weekly breakage from DOM drift

- Struggling to stay compliant across jurisdictions

- Losing time to outdated scraping infrastructure

- Scaling past 500k pages with zero QA or logging

Then it’s time to rethink your pipeline—before the next scraper failure costs you trust, compliance, or performance.

Build Scraping Systems That Don’t Break Under Pressure

Let’s talk about how to make your scraping operation resilient, compliant, and future-proof.

FAQ

Is web scraping legal in 2025?

Yes—but with boundaries. In the U.S., scraping publicly available content is often legal unless it’s gated behind authentication. However, intent and usage now matter more than ever.

In the EU, scraping must comply with GDPR, the Digital Services Act, and jurisdictional routing logic. Even publicly visible personal data can trigger liability if processed unlawfully.

Best practice: Treat robots.txt as a consent signal. Build legal observability into your pipelines.

How can I avoid getting blocked by Cloudflare or bot defense platforms?

Bot defense platforms such as Cloudflare, Akamai, and AWS WAF Bot Control now block scrapers based on TLS fingerprinting, behavioral signals, and bot reputation, not just IPs.

What works in 2025:

- Stealth headless browsers (e.g., Playwright with device spoofing)

- Session replication to mimic real users

- CAPTCHA fallback with human-in-the-loop solutions

- Country-specific IP pools + identity-aware traffic shaping

Bottom line: Scraping is now an identity game, not a proxy game.

Can I ignore robots.txt if the data is public?

No. The fact that data is public doesn’t override compliance obligations.

In 2025, robots.txt is increasingly seen as a binding compliance artifact, not just a courtesy. Platforms and regulators—especially under GDPR and the Digital Services Act—treat violations seriously.

What can go wrong:

- IP blocks from major platforms

- Legal claims based on unlawful processing

- Failures in compliance audits

Best practice: Treat robots.txt as a consent signal—never skip it without legal review.

How do I scale scraping without losing data quality?

Scaling exposes fragility. Bad selectors, timing issues, or region-specific rendering will corrupt your dataset silently.

To scale scraping cleanly:

- Use microservices (products vs reviews vs images = separate logic)

- Add schema validation, null checks, and fallback scrapers

- Crawl with pagination-aware, timeout-resilient scripts

- Verify fields across multiple timestamps to reduce sampling bias
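Schema validation and null checks do not require a heavy framework to get started. The sketch below validates each record before ingestion and flags batches where one value dominates a field, which often points to a placeholder or a broken selector. The `REQUIRED` schema, the field names, and the 0.95 threshold are assumptions chosen for illustration.

```python
from collections import Counter

# Assumed schema: field name -> expected Python type.
REQUIRED = {"sku": str, "name": str, "price": float}


def validate(record: dict) -> list:
    """Return a list of human-readable problems; an empty list means the record passes."""
    problems = []
    for field, expected in REQUIRED.items():
        value = record.get(field)
        if value is None or value == "":
            problems.append(f"{field}: missing")
        elif not isinstance(value, expected):
            problems.append(
                f"{field}: expected {expected.__name__}, got {type(value).__name__}"
            )
    price = record.get("price")
    if isinstance(price, float) and price <= 0:
        problems.append("price: not positive")
    return problems


def suspiciously_uniform(records, field, threshold=0.95) -> bool:
    """True if a single value makes up more than `threshold` of the batch for `field`."""
    values = [r.get(field) for r in records]
    if not values:
        return False
    most_common_count = Counter(values).most_common(1)[0][1]
    return most_common_count / len(values) > threshold
```

Records with problems are better routed to a quarantine table than dropped, so the failure rate per field stays visible as the crawl scales.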

Without validation layers, scaling equals compounding error.

What are the most common scraping mistakes even senior teams make?

Across audits of 100+ enterprise setups, these five mistakes show up again and again:

- No logging, QA, or request-level traceability

- Rigid, hardcoded selectors with zero fallback

- Lack of robots.txt or legal compliance logic

- Monolithic scraper scripts (no modular design)

- No schema validation before ingestion

These are not edge cases—they’re why scraping breaks in production.