Analysts expect the mobile application market to reach USD 626.4 billion by 2030. Most of that value flows through internal mobile Application Programming Interfaces (APIs) that stay hidden behind binary interfaces, encryption, and behavioral protections.

Your web scrapers now work in a hostile environment. Anti-bot systems such as Cloudflare and DataDome evolve faster than HTML parsers, and the public web exposes less real commercial logic every quarter. Many teams still treat web scraping as “good enough”. They rely on delayed, incomplete price and inventory views while competitors read the real signals from mobile apps. As a dedicated mobile app scraping company, we see that this gap results in lost margin, incorrect bids, and mispriced promotions.

Mobile apps now carry the signals that drive profit:

- Real-time prices, surcharges, and fees per route or slot.

- Stock and availability per store, zone, or warehouse.

- Promotions and incentives per cohort, region, or postcode.

[Figure: Mobile Application Market, 2025–2030]

This mobile app scraping guide helps technical and product leaders decide when to use a custom mobile interception pipeline rather than web scraping or official APIs. You will learn:

- Architecture: how to intercept mobile traffic when official APIs limit access.

- Bypass: how engineers handle Secure Sockets Layer (SSL) pinning, token generation, and device checks.

- Return On Investment (ROI): what scooter, food delivery, and ride-hailing teams gained from interception.

- Risk: which legal and technical risks stay on your side and how to reduce them.

If your key decisions depend on what real customers see in mobile apps, you need direct access to internal mobile APIs instead of surface web signals. This requires a shift in strategy to scrape mobile app data directly from the source.

Mobile App Scraping Definition in This Guide

Understanding exactly how to scrape data from mobile apps is crucial for modern data strategies.

Mobile app scraping means:

- Automated collection of structured responses from mobile app traffic.

- Capture of internal API calls, JSON, Protobuf, and other serialized formats.

- Use of controlled physical or emulated devices and interception proxies.

Our engineers:

- Work with real or emulated devices that we fully control.

- Route traffic through hardened Man-In-The-Middle (MITM) proxies.

- Decode and normalize responses into schemas that fit your data platform.

Our engineers do not:

- Ship any tooling to end users.

- Install code on customer devices.

- Collect private user data or credentials outside the agreed scope.

This approach differs from classic web scraping, which targets HTML in browser environments and depends on brittle page markup. It is a more robust method of scraping mobile apps that avoids the instability of frontend changes.

Why API And Web Scraping Strategies Fail

Public APIs and traditional web scraping still help in narrow tasks. They fail whenever you need a full commercial context at scale and in near real time. These limitations are among the most common web scraping challenges faced by enterprise teams today.

Public APIs often:

- Expose limited fields that follow the provider roadmap, not your data model.

- Apply strict rate limits and partner rules.

- Omit signals such as surge multipliers, internal availability codes, or promotion logic.

Classic web scrapers often:

- Depend on HTML structures that change without notice.

- Trigger protection systems during traffic spikes.

- Demand constant parser repair and manual supervision.

Many Chief Technology Officers now share a practical view. Public web scraping becomes technically and legally fragile as anti-bot systems evolve. For high-value operational data, a controlled mobile interception pipeline often becomes the only sustainable path. This is why reliable mobile app scraping is becoming the standard for operational intelligence.

Data Access Approaches Side By Side

Use this comparison as a decision aid during early scoping.

| Approach | Main Strength | Main Constraint |
| --- | --- | --- |
| Official Public API | Clean, versioned structure with stable contracts | Narrow scope and strict rate and usage limits |
| Classic Web Scraping | Broad but unstable view from page layouts | Frequent breakage and growing protection pressure |
| Mobile Traffic Interception | Rich internal commercial logic in near real time | Higher complexity and careful legal review |

Additional dimensions you should consider:

- Signal coverage: interception reads the same internal endpoints that serve real customers.

- Change resilience: APIs provide the highest resilience due to versioning, while interception sits between APIs and web scraping in terms of stability.

- Legal exposure: public APIs often align with Terms of Service (ToS); interception requires a case-by-case review.

- Engineering overhead: interception projects demand strong reverse engineering and operations skills.

- Typical owner: central data or data platform teams usually own interception, not growth teams.

When To Choose Mobile Traffic Interception

Choose interception when at least one of these statements is true, and revenue sits at risk:

- The mobile app exposes data that never appears on the public website.

- Public APIs lack the fields that drive pricing, routing, or promotion logic.

- Anti-bot systems make web scraping unstable or too costly to maintain.

- Wrong or delayed external data affects revenue, bids, or regulatory reports.

Keep APIs where they fit well. Keep web scraping where protection remains light and latency tolerance is high. Bring interception in when the business needs accurate, live app signals to protect margins. When you need to scrape data from mobile app environments securely, interception is the superior choice.

Standard Mobile Interception Workflow At GroupBWT

Our engineers follow a repeatable workflow. This workflow keeps interception projects predictable, measurable, and auditable. We operate as a web-scraping-as-a-service provider and manage every step end to end.

Scope And Constraints

Our team and your stakeholders:

- Define decisions that depend on external mobile data.

- List required entities and attributes such as routes, stores, zones, prices, inventory, and promotions.

- Select target apps, platforms, and regions.

- Align on legal scope and constraints together with your legal counsel.

Output: a clear scope document and risk profile for the project.

Traffic Analysis And Mapping

Our engineers:

- Configure an MITM proxy and install its certificate on controlled devices.

- Inspect traffic between the app and backend services.

- Identify internal API endpoints, headers, and payloads.

- Detect the first technical barrier: plain visibility or SSL pinning.

If traffic appears in clear form, the next step becomes automation. If the connection fails during the handshake, the app enforces SSL pinning or custom certificate logic. This analysis is critical for both iOS app scraping and Android app scraping workflows.
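The mapping step can be sketched offline, once the proxy has exported its captures. The example below is a minimal illustration, assuming each captured exchange is available as a dictionary with the hypothetical keys `method` and `url`. The host `api.example.com` and the rule for collapsing ID-like path segments are assumptions made for this example, not properties of any specific app.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Assumed heuristic: numeric segments or long hex/UUID-like segments are IDs.
_ID_SEGMENT = re.compile(r"^(\d+|[0-9a-fA-F-]{16,})$")


def endpoint_template(url: str) -> str:
    """Collapse ID-like path segments so similar calls group together."""
    parts = urlsplit(url)
    segments = [
        "{id}" if _ID_SEGMENT.match(segment) else segment
        for segment in parts.path.split("/")
    ]
    # The query string is dropped on purpose: only host and path are kept.
    return parts.netloc + "/".join(segments)


def map_endpoints(flows):
    """Count captured calls per (HTTP method, endpoint template)."""
    return Counter(
        (flow["method"].upper(), endpoint_template(flow["url"]))
        for flow in flows
    )


if __name__ == "__main__":
    sample = [
        {"method": "GET", "url": "https://api.example.com/v2/stores/1842/stock?zone=7"},
        {"method": "GET", "url": "https://api.example.com/v2/stores/977/stock"},
        {"method": "post", "url": "https://api.example.com/v2/quotes"},
    ]
    for (method, template), count in map_endpoints(sample).most_common():
        print(method, template, count)
```

Grouping by template rather than by raw URL keeps the endpoint inventory short enough to review by hand, which is usually the goal at this stage.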

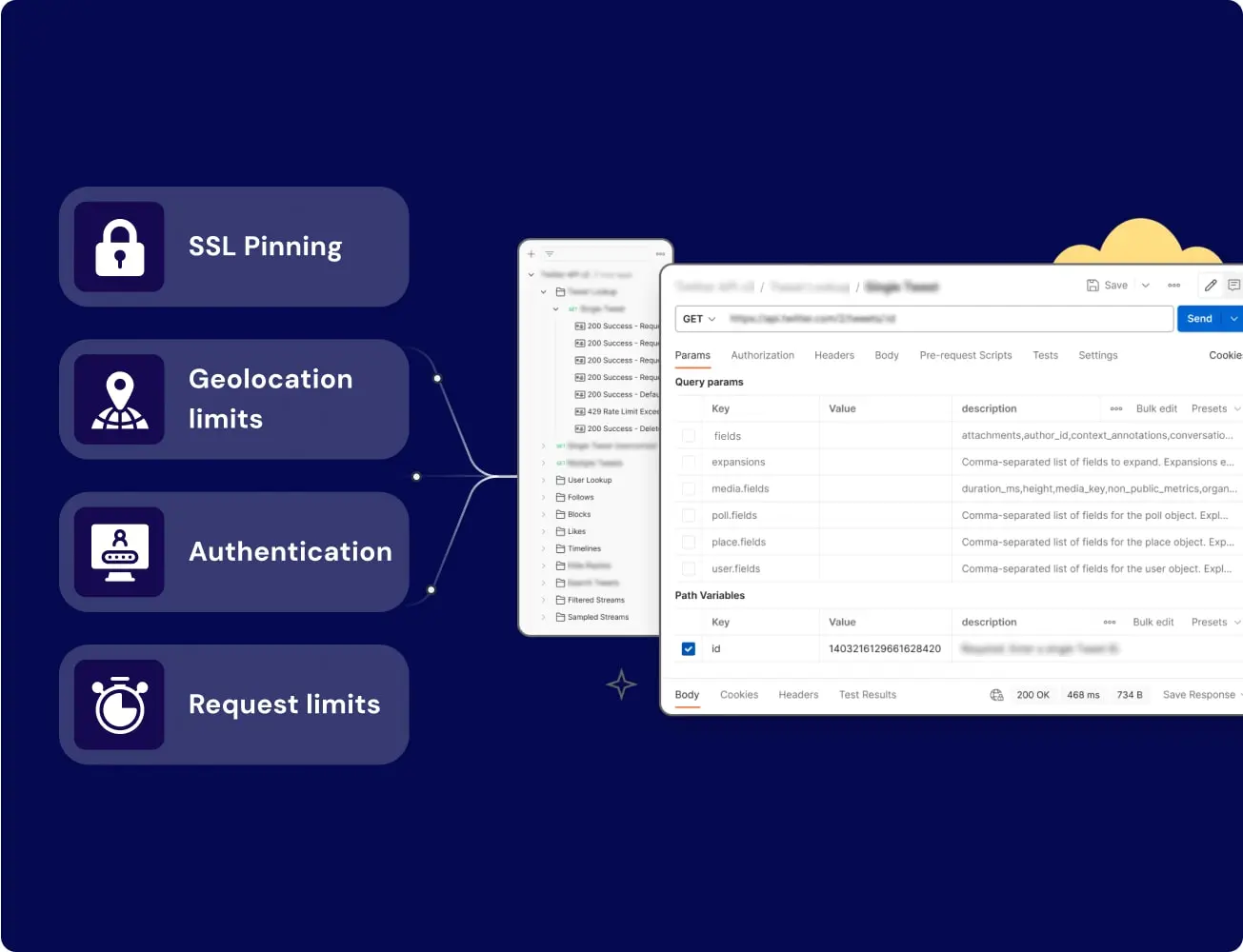

SSL Pinning Bypass

SSL pinning is a security mechanism that restricts the application to trust only a specific, pre-defined server certificate or public key hardcoded within the app. By ignoring the device’s system-level trust store, the app rejects any unknown certificates, causing standard MITM proxies to fail. To scrape mobile app content hidden behind these layers, sophisticated bypass techniques are required.

Our reverse engineering team:

- Decompiles the app with tools such as JADX.

- Locates certificate pinning checks and trust manager logic.

- Uses Frida or Xposed-based hooks to intercept certificate validation.

- Injects runtime scripts that enable the app to accept our interception certificate in controlled environments.

Output: readable HTTPS traffic that flows through the MITM proxy without any change to user devices.

Token Reconstruction And Deobfuscation

Modern apps generate request tokens that depend on several factors:

- Session time and expiry rules.

- Device identifiers and app instance data.

- Location, language, and region.

Our engineers:

- Identify token generation functions inside the app code.

- Trace calls and parameters that feed each token.

- Rebuild token generation logic inside our scraping infrastructure.

- Produce valid signatures offline for each request type.

Replaying a request without the correct token logic usually results in an HTTP 403 response. Replicating token logic keeps requests aligned with backend expectations, which is essential for consistent mobile app data scraping.
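Token schemes differ per app, so no generic implementation exists. As an illustration only, the sketch below assumes a hypothetical scheme in which the client signs the HTTP method, path, a timestamp, and a device identifier with HMAC-SHA256 and a shared secret. The field order, the newline separator, the secret, the 300-second lifetime, and both function names are assumptions made for this example.

```python
import hashlib
import hmac


def build_signature(secret: bytes, method: str, path: str,
                    timestamp: int, device_id: str) -> str:
    """Return a hex HMAC-SHA256 over an assumed canonical request string."""
    canonical = "\n".join([method.upper(), path, str(timestamp), device_id])
    return hmac.new(secret, canonical.encode("utf-8"), hashlib.sha256).hexdigest()


def is_fresh(timestamp: int, now: int, max_age_seconds: int = 300) -> bool:
    """Mirror an assumed server rule: reject tokens that are too old or from the future."""
    return 0 <= now - timestamp <= max_age_seconds


if __name__ == "__main__":
    signature = build_signature(b"demo-secret", "GET", "/v2/quotes",
                                1700000000, "device-123")
    print(signature)
    # A request replayed ten minutes later fails the freshness rule.
    print(is_fresh(1700000000, now=1700000600))
```

Under these assumptions, a replayed request fails for two reasons: the timestamp falls outside the accepted window, and a signature computed for one timestamp does not match any other.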

Emulation And Rate Limit Management

Providers monitor behavior, not only tokens. To mitigate this, we employ advanced rotating proxies for web scraping, specifically configured for mobile protocols.

Our engineers:

- Rotate residential IP addresses across regions.

- Manage device fingerprints, session cookies, and headers.

- Shape traffic patterns with jitter and pauses that match normal user behavior.

- Separate exploratory traffic from production traffic.

Output: stable sessions that deliver coverage without drawing unnecessary attention.
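Traffic shaping with jitter and pauses can be sketched as a schedule of waiting times between requests. This is a minimal sketch under stated assumptions: the function name, the uniform jitter distribution, and all parameter values (a 4-second base interval, 50 percent jitter, a 30-second pause after every fifth request) are illustrative and not taken from a real deployment.

```python
import random


def jittered_delays(count, base_seconds, jitter_ratio,
                    pause_every, pause_seconds, seed=None):
    """Return `count` delays (in seconds) with uniform jitter and periodic pauses."""
    rng = random.Random(seed)  # a seed makes the schedule reproducible in tests
    delays = []
    for request_number in range(1, count + 1):
        # Uniform jitter around the base interval, e.g. 4 s plus or minus 50 %.
        delay = base_seconds * (1 + rng.uniform(-jitter_ratio, jitter_ratio))
        # Add a longer pause after every `pause_every` requests.
        if pause_every and request_number % pause_every == 0:
            delay += pause_seconds
        delays.append(delay)
    return delays


if __name__ == "__main__":
    for delay in jittered_delays(10, 4.0, 0.5, 5, 30.0, seed=1):
        print(f"wait {delay:.2f} s")
```

Computing the schedule separately from the code that sends requests makes the pacing easy to test and to tune per target.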

Parsing, Normalization, And Delivery

The data engineering team:

- Converts responses into normalized schemas that match your warehouse or lake.

- Ensures consistent identifiers for stores, zones, routes, and items.

- Manages deduplication and time alignment for time series.

- Delivers data as streaming feeds or batch loads into your Business Intelligence (BI) stack and Machine Learning (ML) models. This structured approach enables effective data mining and data visualization downstream.

Output: analytics-ready datasets instead of raw intercepted logs.
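Normalization and deduplication can be illustrated with a small sketch. It assumes a hypothetical raw payload with the keys `storeId`, `sku`, `price`, `currency`, and `ts` (Unix seconds). The `PriceObservation` schema and the rule of one observation per store, item, and minute are also assumptions chosen for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from decimal import Decimal


@dataclass(frozen=True)
class PriceObservation:
    store_id: str
    item_id: str
    price_minor: int        # price in minor currency units, e.g. pence
    currency: str
    observed_at: datetime   # UTC, truncated to the minute


def normalize(raw: dict) -> PriceObservation:
    """Map one raw response record onto the assumed warehouse schema."""
    observed = datetime.fromtimestamp(raw["ts"], tz=timezone.utc)
    return PriceObservation(
        store_id=str(raw["storeId"]).strip(),
        item_id=str(raw["sku"]).strip().upper(),
        # Decimal avoids float rounding surprises on prices such as "4.99".
        price_minor=int((Decimal(str(raw["price"])) * 100).to_integral_value()),
        currency=str(raw["currency"]).upper(),
        observed_at=observed.replace(second=0, microsecond=0),
    )


def deduplicate(rows):
    """Keep the first observation per (store, item, minute)."""
    first_seen = {}
    for row in rows:
        key = (row.store_id, row.item_id, row.observed_at)
        first_seen.setdefault(key, row)
    return list(first_seen.values())
```

Truncating timestamps before deduplication is one simple way to align time series. A real pipeline would pick the window that matches its own reporting grain.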

Monitoring, Change Handling, And Support

Our operations and Site Reliability Engineering (SRE) teams:

- Track error rates, coverage per store or zone, and schema stability.

- Detect app and API changes early.

- Roll updated bypass scripts and parser code through Continuous Integration and Continuous Delivery (CI/CD) pipelines.

- Provide clear impact summaries when changes occur.

This approach treats interception as a long-lived data product rather than a disposable script. It ensures that your mobile app scraping pipeline remains robust over time.
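Coverage tracking per zone, the first item in the list above, could be sketched as follows. This is an illustration under assumptions: the inputs are hypothetical mappings from zone name to a set of store IDs, and the 95 percent alert threshold is an arbitrary example value.

```python
def coverage_by_zone(expected: dict, observed: dict) -> dict:
    """Return zone -> share of expected stores that appeared in the latest run."""
    coverage = {}
    for zone, stores in expected.items():
        if not stores:
            coverage[zone] = 1.0  # nothing expected, so nothing is missing
            continue
        seen = stores & observed.get(zone, set())
        coverage[zone] = len(seen) / len(stores)
    return coverage


def zones_below(coverage: dict, threshold: float = 0.95) -> list:
    """List zones whose coverage dropped under the alert threshold."""
    return sorted(zone for zone, ratio in coverage.items() if ratio < threshold)


if __name__ == "__main__":
    expected = {"north": {"s1", "s2", "s3", "s4"}, "south": {"s5", "s6"}}
    observed = {"north": {"s1", "s2", "s3"}, "south": {"s5", "s6", "s9"}}
    coverage = coverage_by_zone(expected, observed)
    print(coverage)
    print(zones_below(coverage))
```

A sudden drop in one zone while others stay stable often points to a regional block or a changed endpoint rather than a global outage, so per-zone numbers are more useful than a single total.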

Protection Layers And Residual Risk

Each protection layer raises risk and complexity. You need a clear view before you approve any project or attach revenue targets to it.

Protection Versus Technique Matrix

| Protection Layer | What You See In Traffic Or Behavior | Typical Bypass Technique |
| --- | --- | --- |
| No Special Protection | Readable HTTP(S) inside the proxy | Direct HTTP requests |
| SSL Pinning | Handshake failures or empty sessions | Frida or Xposed-based certificate hooks |
| Token Rotation | HTTP 403 on replayed requests | Code analysis and token reimplementation |
| Geo-Blocking | Region-specific denial messages | Regional devices and residential proxies |
| Device Binding | Access allowed only on specific device types | Device farms and careful profile management |
| Behavioral Detection | Bans during constant or extreme activity | Human-like flows and deliberate traffic shaping |

Residual risk levels by layer:

- No special protection: low residual risk.

- SSL pinning and geo-blocking: medium residual risk, sensitive to updates and policy changes.

- Token rotation and device binding: high residual risk, because logic and rules may change without notice.

- Behavioral detection: medium to high residual risk, depending on provider tolerance and pattern design.

Our engineers keep residual risk explicit in design documents. Some environments never reach low risk because of device binding and sophisticated behavioral detection. Decision owners need that view before they approve the scope and budgets.
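One way to make residual risk explicit in a design document is to derive a project-level range from the layers detected during traffic analysis. The sketch below encodes the residual risk levels listed above. Combining layers by taking the highest level is an assumption of this example, as are the dictionary keys and the function name.

```python
LEVELS = ["low", "medium", "high"]

# Residual risk per layer as (best case, worst case), from the list above.
RESIDUAL_RISK = {
    "none": ("low", "low"),
    "ssl_pinning": ("medium", "medium"),
    "geo_blocking": ("medium", "medium"),
    "token_rotation": ("high", "high"),
    "device_binding": ("high", "high"),
    "behavioral_detection": ("medium", "high"),
}


def project_risk(layers):
    """Return (best case, worst case) residual risk across detected layers.

    Assumption: the project is as risky as its riskiest layer.
    """
    layers = list(layers) or ["none"]
    best = max(LEVELS.index(RESIDUAL_RISK[layer][0]) for layer in layers)
    worst = max(LEVELS.index(RESIDUAL_RISK[layer][1]) for layer in layers)
    return LEVELS[best], LEVELS[worst]


if __name__ == "__main__":
    print(project_risk(["ssl_pinning", "behavioral_detection"]))
```

Reporting a range rather than a single label keeps the uncertainty around behavioral detection visible to decision owners.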

Engineering Case Studies And ROI

These examples illustrate how interception affects real operations and financial outcomes, making them powerful examples of competitive analysis and benchmarking.

Case A: E-Scooter Fleet Optimization

Client: operations lead at a micromobility company.

The challenge: The team needed real-time visibility into fleet density and usage hotspots across competing networks. Existing data came from fragmented web views and manual checks.

Our implementation:

- Built an interception pipeline that collects fleet position data from competitor scooter apps.

- Captured vehicle locations, statuses, and battery levels per zone.

- Normalized geospatial data into grid cells that the client models already used.

The result:

- Identified more than 15 high-demand zones that the client previously ignored.

- Improved scooter placement and rotation.

- Increased rentals by 20 percent.

- Raised revenue by 18 percent within three months.

Case B: Food Delivery Pricing Intelligence

Client: pricing and category owner at a delivery platform.

The challenge: The team had to monitor Uber Eats across 1,200 locations in the United Kingdom and France. They needed a reliable basis for delivery fee and promotion decisions, which required dependable competitive intelligence data.

Our implementation:

- Monitored around 15,000 restaurants inside the app using mobile app data scraping techniques.

- Captured more than 500 active promotions and fee structures.

- Built a time series of delivery conditions per postcode and time window.

The result:

- Enabled responses to competitor price changes within 48 hours.

- Allowed the team to run controlled experiments instead of reactive changes.

- Increased customer satisfaction scores by around 15 percent.

Case C: Ride-Hailing Supply Analytics

Client: fleet and strategy lead at a ride-hailing operator.

The challenge: The team needed early signals of car shortages and surge-pricing events.

Our implementation:

- Built high-frequency interception of availability and estimated-fare endpoints.

- Mapped supply and demand per neighborhood and time block.

The result:

- Revealed that about 30 percent of neighborhoods faced shortages during peak hours.

- Informed fleet and incentive decisions in critical zones.

- Improved availability by 18 percent in target areas.

Compliance And Risk Mitigation

Compliance and risk management sit at the core of every interception project. Engineering alone does not close this gap. Many clients ask, “Is web scraping legal in the US?” and want to know how these laws apply to mobile environments.

Technical Risk Controls

Our engineers:

- Use residential IP rotation from multiple providers to avoid simple blocklists.

- Align Transport Layer Security (TLS) fingerprints with real mobile clients, including JA3 profiles.

- Shape request rates with random delays to approximate real usage.

- Separate test environments from production environments to limit impact.

Legal And Policy Alignment

Our process:

- Encourages clients to review Terms of Service for each target with their legal counsel.

- Documents which activities remain acceptable and which cross agreed boundaries.

- Defines rules for storage, access, and retention of captured traffic.

- Records these rules in configuration and operational runbooks.

Our teams do not offer generic legal advice. Your counsel owns the final decisions on what remains acceptable in your jurisdictions. We then implement technical controls that match those decisions.

Failure Scenarios You Must Plan For

You should treat the following scenarios as certain over a long enough horizon:

- Target providers change app or API behavior, breaking interception.

- Protection systems trigger bans for some accounts or devices.

- Regulatory changes or internal policy updates restrict project scope.

Our mitigation strategy:

- Maintains parallel account and device pools to contain bans.

- Monitors coverage and flags signal gaps early.

- Provides impact reports for each major change.

- Supports re-scoping or controlled shutdown when legal or business conditions change.

Practical Artefacts For Your Team

Interception Feasibility Checklist

If you answer “yes” to at least three points, your team likely needs a custom interception pipeline instead of more web scraping:

- Does the target mobile app expose data that never appears on the public website?

- Do you need to capture dynamic prices, surge events, or promotional changes in near-real time?

- Does the app require geolocation to display relevant content?

- Do official APIs restrict access, cost too much, or fail to exist for your use case?

- Does your current scraping stack break after minor changes to protection or layout?

- Would incorrect or delayed external data affect revenue, bidding, or regulatory reports?
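The three-yes rule can be expressed as a short scoring helper for teams that track several candidate targets. The key names below are hypothetical shorthand for the six questions above, in the same order. The default threshold of three comes from the checklist itself.

```python
# Hypothetical shorthand keys for the six checklist questions, in order.
CHECKLIST = [
    "app_only_data",
    "near_real_time_signals",
    "geolocation_required",
    "official_api_insufficient",
    "scraper_breaks_often",
    "data_errors_hit_revenue",
]


def needs_interception(answers: dict, threshold: int = 3) -> bool:
    """Return True when at least `threshold` checklist answers are 'yes'."""
    yes_count = sum(1 for key in CHECKLIST if answers.get(key, False))
    return yes_count >= threshold


if __name__ == "__main__":
    answers = {
        "app_only_data": True,
        "near_real_time_signals": True,
        "official_api_insufficient": True,
    }
    print(needs_interception(answers))
```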

Quick Test For SSL Pinning

Your internal team can follow this simple plan to see if you can scrape mobile app traffic easily:

- Configure a known MITM proxy and install its certificate on a controlled device.

- Route all device traffic through the proxy.

- Run expected flows in the target app.

- Check whether the proxy shows readable HTTPS traffic.

- Assume SSL pinning or custom trust logic if traffic stays encrypted or connections fail.

This test often becomes the first task in our engagements.
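The last step of the plan is a simple decision rule, sketched below. It assumes you have already summarized the proxy log per host into two hypothetical counters: the number of requests the proxy could read and the number of failed TLS handshakes. The labels returned are illustrative, and the result is a hint rather than proof of pinning.

```python
def classify_host(decrypted_requests: int, handshake_failures: int) -> str:
    """Classify one host from assumed per-host proxy counters."""
    if decrypted_requests > 0 and handshake_failures == 0:
        return "readable"
    if decrypted_requests == 0 and handshake_failures > 0:
        return "likely pinned"
    if decrypted_requests == 0 and handshake_failures == 0:
        return "no traffic observed"
    # Some calls readable, some rejected: inspect the host per endpoint.
    return "mixed"


if __name__ == "__main__":
    summary = {
        "api.example.com": (0, 14),
        "cdn.example.com": (52, 0),
    }
    for host, (decrypted, failures) in summary.items():
        print(host, classify_host(decrypted, failures))
```

Apps often pin only their core API hosts while leaving content or analytics hosts readable, which is why the check runs per host rather than per app.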

FAQ

How Do You Scrape Mobile App Data Effectively?

Mobile app scraping starts by intercepting network traffic through a proxy on a real device or emulator to observe how the app communicates with its backend. You analyze the captured API requests and responses to identify endpoints, parameters, authentication headers, and pagination logic, then automate the extraction using controlled clients such as Appium and direct HTTP calls. Where apps enforce certificate pinning or payload encoding, you must implement runtime instrumentation or protocol-level decryption before automation becomes viable.

Is Mobile App Scraping Legal For Competitive Intelligence?

Short answer: it depends on the jurisdiction, Terms of Service, and project design.

Our teams:

- Focus on public, anonymized information that does not identify individual users.

- Avoid personal data unless you own the relationship and provide clear consent models.

- Ask your legal counsel to review ToS, regional regulations, and risk appetite before scale.

We then implement technical controls that reflect those decisions.

How Do You Handle Frequent App Updates?

App teams update binaries, protections, and internal APIs regularly.

Our teams:

- Monitor schema changes and error patterns.

- Re-run deobfuscation and token mapping when responses change.

- Maintain a “shadow API map” that tracks internal endpoints across versions.

This process keeps interception aligned with the current app behavior rather than relying on a single snapshot. This adaptability is key to successful mobile app data scraping.
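A “shadow API map” can be as simple as a mapping from endpoint to the set of response fields seen in a given app version. The sketch below compares two such maps. The data structure, the function name, and the sample endpoints are assumptions made for illustration.

```python
def diff_api_maps(previous: dict, current: dict) -> dict:
    """Compare two maps of endpoint -> set of response field names."""
    shared = set(previous) & set(current)
    changed = {
        endpoint: {
            "added_fields": sorted(current[endpoint] - previous[endpoint]),
            "removed_fields": sorted(previous[endpoint] - current[endpoint]),
        }
        for endpoint in sorted(shared)
        if current[endpoint] != previous[endpoint]
    }
    return {
        "added": sorted(set(current) - set(previous)),
        "removed": sorted(set(previous) - set(current)),
        "changed": changed,
    }


if __name__ == "__main__":
    v1 = {"/v2/quotes": {"price", "eta"}, "/v2/promos": {"code"}}
    v2 = {"/v2/quotes": {"price", "eta", "surge"}, "/v3/offers": {"code"}}
    print(diff_api_maps(v1, v2))
```

Running a diff like this after each app release turns an update into a short list of endpoints to re-check instead of a full re-analysis.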

How Does Interception Compare To Official APIs In Practice?

Official APIs provide a clean structure and high stability, but a limited scope.

Interception:

- Uses the same internal endpoints that the app uses for real customers.

- Delivers richer context for pricing, inventory, and routing decisions.

- Demands stronger engineering and clearer risk management.

Many teams start with APIs where available and layer mobile app scraping only for missing or high-value signals.

How Many Devices And IP Ranges Do We Need?

Typical mid-size projects per country require:

- At least three device profiles or devices per target app.

- Two or more residential IP providers for resilience.

- Precise segmentation between testing and production traffic.

Larger marketplaces or high-risk environments may need more diversity. We model this during the readiness phase so decision makers can link costs to expected coverage and business impact.

What Tools Are Best For Scraping Mobile App Data?

The best tools depend on the app’s security. For apps without special protection, Appium or a standard MITM proxy works well. For apps with SSL pinning, you need tools such as JADX for static analysis and Frida or Xposed for runtime instrumentation. Most projects end up combining static analysis with runtime instrumentation.