Magento powers over 130,000 e-commerce sites worldwide and is especially common among enterprise B2B sellers. But unlike platforms with standardized feeds, Magento’s open architecture creates fragmented product structures: what you see as a buyer may not match what’s exposed in an admin panel or export.

Prices, stock levels, and product variants are rendered dynamically, shaped by scripts, regions, and logic your API doesn’t expose. This GroupBWT guide shows you how to do Magento web scraping the right way, ensuring your business decisions reflect what’s truly on the page.

Magento Use Among B2B Leaders

According to MGT Commerce’s 2025 Q1 Market Report, Magento holds 8% of the global eCommerce market, with strong traction in Europe and North America. It’s the third most-used platform globally, particularly in enterprise settings.

But usage alone doesn’t tell the whole story. Most Magento stores operate outside of standard feeds. Product data often appears through conditional logic, regional rendering, and dynamic scripts—exactly where traditional scraping fails.

In IDC’s MarketScape B2B Digital Commerce Assessment, top eCommerce platforms were compared based on enterprise suitability for $500M+ GMV businesses. The report evaluated customization, flexibility, and control—areas where Magento consistently ranks high.

| Platform | Global Share (2024) | Strength in B2B | Notes |
| --- | --- | --- | --- |
| Shopify | ~25% | Low | SaaS-first, limited backend logic |
| WooCommerce | ~23% | Medium | Strong in SME, low in enterprise |
| Magento | ~8% | High | Custom logic, multi-site, complex pricing |
| Salesforce | ~6% | High | Strong integrations, high cost barrier |
| SAP Commerce | ~4% | High | Common in industrial B2B setups |
| Oracle CX | ~2% | Medium–High | Legacy systems, complex installs |

Magento powers large catalogs with complex SKU logic, which is why B2B sellers adopt it despite the higher technical lift.

Digital Commerce Now Dominates Sales

According to the McKinsey B2B Pulse 2024, 34% of B2B revenue now flows through self-service digital platforms. That makes accurate product visibility not a bonus, but a requirement.

Magento’s flexibility enables this kind of digital control, but only if data visibility keeps pace with real-time site behavior. If you’re working from export files or stale product feeds, you miss:

- Regional price differences

- Variant-specific stock levels

- Scripted discount logic

- Real-time changes during promotions

Scraping Magento Closes Data Gaps

By scraping Magento pages directly, you bypass broken feeds and export limitations. You track real prices, product variants, and availability as your buyers see them. This high-fidelity product tracking is a core element of effective ecommerce data scraping strategies.

This is especially relevant given Statista’s B2B eCommerce Market Report, which values the market at $20.4 trillion, with Asia-Pacific holding 78% of global volume. These are regions where Magento adoption overlaps with high expectations for catalog accuracy, structured listings, and localized pricing.

Magento gives B2B sellers the flexibility to support complex product catalogs—but it doesn’t deliver clean, structured data by default. To track competitor listings, manage price intelligence, or ensure catalog parity across markets, you need to scrape Magento sites directly.

This section laid out the market footprint. Next, we’ll break down exactly what product data you can scrape and where it hides on a Magento-powered site.

What to Scrape From Magento

Magento storefronts rarely expose full product data through feeds or APIs. Most critical fields—like variant-specific prices, conditional stock levels, or localized content—are rendered on the page and shaped by front-end logic. With Magento web scraping, you collect the version that buyers see, not just what admins export.

Scrape Prices from Live Pages

Magento supports multiple pricing models: tiered, per-region, volume-based, and customer-segmented. But these often load dynamically through AJAX or are inserted via JavaScript after the page renders. If your system collects only base pricing, it will miss:

- Regional price differences (e.g., € vs. $)

- Special offers tied to cookies or cart behavior

- Quantity discounts that trigger at certain thresholds

- Prices loaded into modals or quick views

For example, a product may list “$29.99” for US visitors but “€24.99” for EU buyers, with discounts applied only after selecting quantity >3. Only scraping the full page surface captures these live conditions.
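Once a price string has been read from the rendered page, it still has to be turned into a comparable value. The sketch below is one minimal way to do that with the Python standard library. The symbol map, the function names, and the tier figures in the usage note are illustrative assumptions, not part of any Magento interface.

```python
import re
from decimal import Decimal

# Hypothetical symbol map; extend it for the currencies your target stores use.
CURRENCY_SYMBOLS = {"$": "USD", "€": "EUR", "£": "GBP"}


def parse_price(text):
    """Turn a rendered price string into (currency_code, Decimal amount)."""
    currency = next((code for sym, code in CURRENCY_SYMBOLS.items() if sym in text), None)
    match = re.search(r"\d[\d.,\s]*", text)
    if currency is None or match is None:
        raise ValueError(f"unrecognized price: {text!r}")
    digits = re.sub(r"\s", "", match.group()).rstrip(".,")
    # Assumption: prices show either no decimals or exactly two, so a
    # separator followed by two trailing digits is the decimal mark.
    if re.search(r"[.,]\d{2}$", digits):
        whole, frac = digits[:-3], digits[-2:]
    else:
        whole, frac = digits, "00"
    whole = re.sub(r"[.,]", "", whole) or "0"
    return currency, Decimal(f"{whole}.{frac}")


def unit_price(base, tiers, quantity):
    """Pick the lowest unit price whose minimum quantity is met.

    tiers maps a minimum quantity to the unit price that applies from there.
    """
    eligible = [price for min_qty, price in tiers.items() if quantity >= min_qty]
    return min(eligible, default=base)
```

For instance, `parse_price("24,99 €")` and `parse_price("$29.99")` both yield a currency code and a `Decimal`, and `unit_price(Decimal("29.99"), {4: Decimal("26.99")}, 5)` picks the tier price for a quantity above 3. The tier values here are invented for illustration.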

Extract Full Product Descriptions

Many Magento sites use layout tabs, accordions, or nested components to organize details. This includes dimensions, ingredients, instructions, and care information—none of which appear in admin-exported feeds or via API.

Scraping Magento pages allows you to:

- Parse product descriptions spread across hidden tabs

- Collect metadata (SKU, brand, model number, barcode)

- Normalize long-form and structured data into usable fields

- Monitor changes to the description structure across versions

Without this, product matching for comparison, marketplace syncing, or internal taxonomy suffers, resulting in false duplicates or missed listings.
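Some storefronts embed product metadata as schema.org JSON-LD inside the rendered page, which makes SKU, brand, and barcode collection simpler. The sketch below assumes the target theme emits a single `Product` object in an `application/ld+json` script. Many themes do not, or nest it differently, so treat this as one possible source rather than a guaranteed one. The class and function names are hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collect the text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False


def product_metadata(html):
    """Return sku/brand/mpn/gtin13 from the first Product object, or None."""
    collector = JsonLdCollector()
    collector.feed(html)
    for raw in collector.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # skip malformed blocks instead of failing the record
        if isinstance(data, dict) and data.get("@type") == "Product":
            brand = data.get("brand")
            if isinstance(brand, dict):
                brand = brand.get("name")
            return {
                "sku": data.get("sku"),
                "brand": brand,
                "mpn": data.get("mpn"),
                "gtin13": data.get("gtin13"),
            }
    return None
```

When this returns `None`, fall back to reading the same fields from the visible layout.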

Track Variant Stock and Status

Magento often hides variant-level inventory and availability inside script-bound containers—elements rendered dynamically via JavaScript frameworks like RequireJS, jQuery, or Vue.js.

Changes to size, color, or configuration may asynchronously trigger new stock levels that appear only after interaction, not in the static HTML or admin exports. This makes such data invisible without executing scripts.

Scraping lets you map:

- Stock per size or color

- Out-of-stock conditions not flagged in structured markup

- Hidden alerts like “only 3 left” or “ships in 7 days”

Selectors to watch:

Look for dynamically injected content inside elements like:

- .swatch-attribute-options > div

- div[data-role='inventory-stock']

- div.stock.available > span
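The text read from elements like these still needs to be interpreted. As a rough sketch, the function below classifies a visible stock message. The English phrases it looks for are assumptions based on the examples above, and real stores will need store-specific and localized patterns.

```python
import re

# Assumed phrasings; adjust per store and per language.
LOW_STOCK = re.compile(r"only\s+(\d+)\s+left", re.IGNORECASE)
SHIP_DELAY = re.compile(r"ships\s+in\s+(\d+)\s+days?", re.IGNORECASE)


def parse_stock_text(text):
    """Classify the visible stock message for one variant."""
    lowered = text.lower()
    low = LOW_STOCK.search(text)
    delay = SHIP_DELAY.search(text)
    if "out of stock" in lowered:
        in_stock = False
    elif "in stock" in lowered or low:
        in_stock = True
    else:
        in_stock = None  # unknown: the message did not say either way
    return {
        "in_stock": in_stock,
        "units_left": int(low.group(1)) if low else None,
        "ship_days": int(delay.group(1)) if delay else None,
    }
```

Keeping "unknown" distinct from "out of stock" avoids reporting a stockout when the page simply changed its wording.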

According to MDPI’s 2025 research on LLM-based attribute extraction, field precision across eCommerce pages improves by 40–70% when systems scrape rendered HTML over static schemas. Magento is a textbook case of this gap. This reliance on advanced pattern recognition is why integrating LLMs into web scraping tools is becoming essential for handling complex, unstructured content.

Magento exposes only part of its product data through feeds or APIs. To understand what your buyers—and competitors’ buyers—actually see, you need to scrape the rendered storefront.

Magento scraping restores data parity across regions, layouts, and user types. It ensures your system reflects the current product state, not just a static feed or stale export.

Keep reading to learn how to implement scraping on Magento, as we cover methods, tools, and real-world examples.

Data Scraping Magento: Best Practices

Magento doesn’t hand you structured data. Prices, stock, and descriptions often appear only after scripts run, or when users interact with the page. You can’t rely on admin-side feeds if you want to see what your customers and competitors see.

To fix this, Magento scraping must treat the browser as the source of truth, not the database. Here’s how that works in practice.

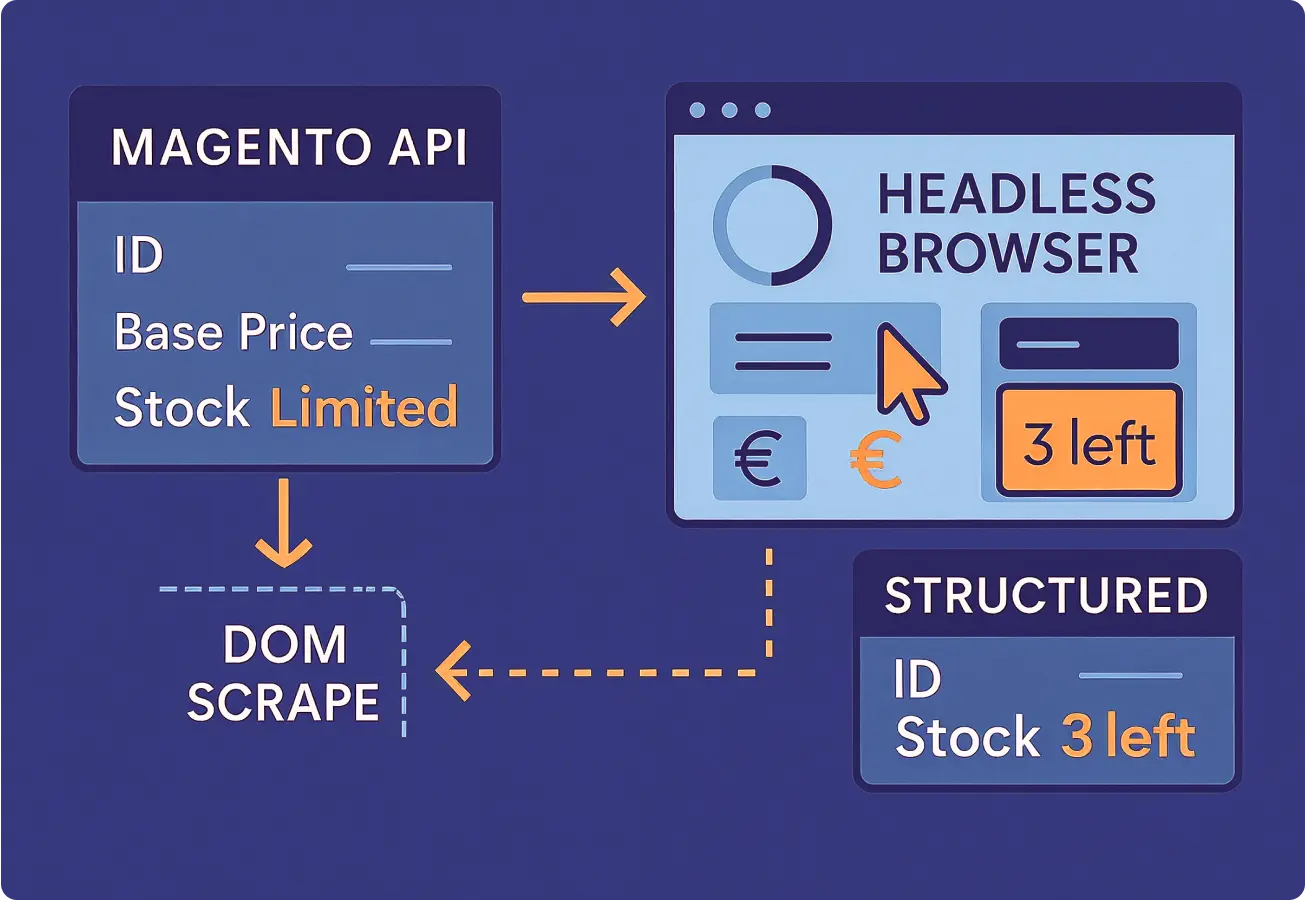

Use Magento API for Base Data

Magento provides a REST API and a GraphQL interface. These are your first checkpoints, but they often return incomplete product information.

Expect to find:

- Basic product fields: name, ID, base price

- Limited stock and attribute metadata

- No access to layout-based pricing or dynamic scripts

- Inconsistent support across themes or versions

The IDC MarketScape 2024 B2B Commerce report confirms what developers already know: Magento’s API structure favors internal use, not public storefront parity. That’s why scraping becomes necessary for full visibility.

Use the API for fallback or fast-access data, but never assume it mirrors what the buyer sees.
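As a baseline check, here is a minimal sketch of reading one product through the Magento 2 REST endpoint `GET /rest/V1/products/{sku}`. It assumes you hold a valid bearer token for a store you are authorized to query. The base URL, the helper names, and the summary shape are illustrative, and some stores prefix the path with a store code.

```python
import json
from urllib.parse import quote
from urllib.request import Request, urlopen


def product_url(base_url, sku):
    """Build the REST URL for one SKU, escaping characters such as '/'."""
    return f"{base_url.rstrip('/')}/rest/V1/products/{quote(sku, safe='')}"


def fetch_product(base_url, sku, token):
    """Fetch the raw product payload (performs a network call)."""
    request = Request(
        product_url(base_url, sku),
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
    )
    with urlopen(request, timeout=30) as response:
        return json.load(response)


def summarize(payload):
    """Reduce the payload to the base fields worth comparing with the storefront."""
    custom = {a["attribute_code"]: a["value"] for a in payload.get("custom_attributes", [])}
    return {
        "sku": payload.get("sku"),
        "name": payload.get("name"),
        "base_price": payload.get("price"),
        "custom_attribute_codes": sorted(custom),
    }
```

Comparing `summarize(...)` output with the values scraped from the rendered page is a quick way to see which fields the API leaves out for a given store.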

Render Pages Using Headless Browser

To collect the real product output—including localized pricing, variant stock, and modal-based descriptions—you need a headless browser.

This means automating a browser like Chromium via tools such as:

- Playwright — modern browser control, supports tab logic and JS-heavy rendering

- Selenium — older but still effective for click-path scraping

- Puppeteer — browser automation from Google’s Node ecosystem

A properly configured session will:

- Wait for full DOM rendering

- Detect content inserted via JavaScript

- Simulate user interaction (e.g., selecting size, clicking tabs)

- Capture post-load changes, such as real-time inventory updates and low-stock alerts

Headless methods also let you rotate IPs and pass device headers to avoid anti-scraping detection, especially important on regionalized B2B Magento sites.
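A session like the one described above might look roughly like this with Playwright for Python. This is a sketch under assumptions: the default selectors are common in stock Magento themes but are not guaranteed on any given store, the one-second settle delay is a crude placeholder, and the function name is hypothetical. Playwright is a third-party package and is imported lazily so the module loads without it.

```python
def capture_variant(url, swatch_selector, price_selector=".price-box .price",
                    stock_selector="div.stock", locale="en-US"):
    """Render a product page, click one swatch, and read price and stock text."""
    # Third-party dependency: pip install playwright && playwright install chromium
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        context = browser.new_context(locale=locale)  # locale affects regional views
        page = context.new_page()
        page.goto(url, wait_until="networkidle")      # wait for scripted requests
        page.wait_for_selector(price_selector)        # price block has rendered
        price_before = page.locator(price_selector).first.inner_text()

        page.locator(swatch_selector).first.click()   # simulate choosing a variant
        page.wait_for_timeout(1000)                   # crude settle delay (assumption)
        price_after = page.locator(price_selector).first.inner_text()

        stock = page.locator(stock_selector)
        stock_text = stock.first.inner_text() if stock.count() else None
        browser.close()

    return {"price_before": price_before, "price_after": price_after, "stock": stock_text}
```

Recording the price both before and after the click makes it visible when a variant selection changes the displayed value.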

Extract Fields from Rendered Output

After the page fully renders, your system must extract the fields with precision. This is not simple tag scraping—it’s field-level targeting inside moving layouts.

To do this reliably:

- Use CSS or XPath selectors to target elements like price, SKU, description

- Normalize text with fallbacks for multiple layouts

- Track changes in DOM structure over time and update selectors accordingly

- Implement schema validation to detect and reject malformed records

This is where most scrapers break—when Magento stores update layout themes, reorder components, or switch tab systems. Instead of tracking tags, track outcomes: what value appears in the price block, what unit appears near stock, what structure wraps the description.
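One way to track outcomes rather than fixed tags is to try several candidate wrappers in order and record which one produced the value. The sketch below works on a saved snapshot of the rendered HTML using only the standard library. The class names passed in are examples, not a fixed Magento contract, and the helper names are hypothetical.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not be pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextCollector(HTMLParser):
    """Map each CSS class to the text found inside elements carrying it."""

    def __init__(self):
        super().__init__()
        self._stack = []          # (tag, classes) for each open element
        self.text_by_class = {}

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        self._stack.append((tag, classes))

    def handle_endtag(self, tag):
        # Pop back to the matching open tag; tolerates unclosed children.
        for index in range(len(self._stack) - 1, -1, -1):
            if self._stack[index][0] == tag:
                del self._stack[index:]
                break

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        for _, classes in self._stack:
            for name in classes:
                self.text_by_class.setdefault(name, []).append(text)


def first_text(html, candidate_classes):
    """Return (class_used, text) for the first candidate present, else (None, None)."""
    collector = ClassTextCollector()
    collector.feed(html)
    for name in candidate_classes:
        if name in collector.text_by_class:
            return name, " ".join(collector.text_by_class[name])
    return None, None
```

Logging which candidate matched gives an early signal of layout drift: when the fallback starts winning, the primary selector has probably moved.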

In MDPI’s 2025 study on eCommerce attribute extraction, models trained on rendered output had 63% higher precision than those built on export schemas alone.

Scraping Magento is not about pulling URLs—it’s about recreating how the site builds product pages in real time.

1. Check the API first, but don’t trust it to match the storefront.

2. Use headless browsers to capture scripted data.

3. Parse the final layout with adaptive logic, not fixed templates.

Below are the tools, proxy techniques, and safeguards needed to run Magento scraping at scale, without bans, blocks, or broken logic.

Data Scraping Magento: Tools and Safeguards

Magento sites use JavaScript-heavy themes, variant logic, and dynamic stock modules that break simple scrapers. To extract reliable data, your system needs the right scraping stack, proxy infrastructure, and failure handling logic.

This section breaks down the operational layer behind Magento web scraping: the choices that keep your process stable, accurate, and resistant to blocking.

Choose Tools That Handle Layout Drift

Most Magento pages do not follow a fixed layout. They shift with promotions, update prices via scripts, and wrap content inside components that change without warning. Your scraper must adapt to these shifts.

Use tools that can track real output:

- Playwright: Handles full browser rendering and tab logic

- Scrapy + Splash: Combines fast scheduling with headless support

- Puppeteer: Browser automation built for JS-heavy storefronts

- Airflow or Prefect: For scheduling, retries, and flow control

- jq / pandas: For post-extraction field normalization

Avoid tools that scrape static HTML only. They will miss dynamic values like real-time stock and promo logic.

Rotate Proxies and Headers on Every Run

Magento sites may block repeated requests, even if they don’t use aggressive anti-bot systems like Cloudflare. Session headers, device fingerprints, and IP ranges all need to be varied per request.

Your scraping system must:

- Rotate IPs using datacenter or residential proxies (set session TTL limits, use ethically sourced IP pools, and follow the provider’s integration guidance)

- Change browser headers: user-agent, language, screen size

- Use session cookies for logged-in or location-specific views

- Set random delays to mimic human interaction

- Retry only after structured backoff timers

Providers like SOAX or Bright Data offer dynamic IP pools with session management. For smaller setups, open proxy lists can work short-term but require active uptime filtering.

According to InstantAPI’s 2025 study on scraping infrastructure, uptime increased by 47% for teams using IP rotation and fingerprint spoofing together—compared to IP-only solutions.
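The rotation and backoff rules above can be sketched with the standard library alone. The proxy addresses and user-agent strings are whatever your provider and test matrix supply; nothing here is tied to a specific vendor, and the function names are hypothetical. The backoff uses exponential growth with full jitter, which is one common choice among several.

```python
import itertools
import random


def backoff_delays(retries, base=2.0, cap=120.0, rng=random):
    """Exponential backoff with full jitter: one delay in seconds per retry."""
    return [rng.uniform(0, min(cap, base * 2 ** attempt)) for attempt in range(retries)]


def rotating_profiles(proxies, user_agents, languages):
    """Yield a new proxy/header combination for every request, indefinitely."""
    combos = zip(itertools.cycle(proxies),
                 itertools.cycle(user_agents),
                 itertools.cycle(languages))
    for proxy, agent, language in combos:
        yield {
            "proxy": proxy,
            "headers": {"User-Agent": agent, "Accept-Language": language},
        }
```

Each profile can be handed to whatever HTTP client or browser context you use. Passing a seeded `random.Random` as `rng` makes the delays reproducible in tests.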

Detect Errors Before They Reach Your Stack

Most scraping errors don’t look like 500 codes. They look like success, with missing or malformed fields.

To prevent silent data loss:

- Set field-level expectations (e.g., “price must be numeric”)

- Track unexpected DOM changes (e.g., new container for size selector), using DOM diffing tools like diffDOM or dom-compare for snapshot tracking

- Compare scraped output to prior runs (e.g., “description shrank by 90%”)

- Alert on missing required fields (e.g., no SKU, no image, no stock)

Use schema validation at the edge: if the scraped record doesn’t pass field logic, flag it immediately. Then, inspect the rendered page snapshot to trace layout changes.

This is where many teams fail—not because the scraper breaks, but because it scrapes the wrong thing without telling you.
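A field-level check of this kind can be quite small. The sketch below assumes each scraped record is a plain dictionary. The required field names and the 90% shrink threshold mirror the examples above but are otherwise arbitrary choices.

```python
from decimal import Decimal, InvalidOperation

# Assumed record shape; rename to match your own pipeline.
REQUIRED_FIELDS = ("sku", "price", "stock", "image", "description")


def validate_record(record, previous=None, max_shrink=0.9):
    """Return a list of problems; an empty list means the record passes."""
    problems = [f"missing {field}" for field in REQUIRED_FIELDS
                if record.get(field) in (None, "")]

    price = record.get("price")
    if price not in (None, ""):
        try:
            if Decimal(str(price)) <= 0:
                problems.append("price must be positive")
        except InvalidOperation:
            problems.append("price must be numeric")

    # Compare with the prior run to catch silently truncated descriptions.
    if previous and previous.get("description") and record.get("description"):
        old, new = len(previous["description"]), len(record["description"])
        if (old - new) / old >= max_shrink:
            problems.append("description shrank sharply")
    return problems
```

Records with a non-empty problem list can be routed to a review queue together with the page snapshot instead of being written to the database.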

Scraping Magento at scale is not just about choosing the right browser or script. It’s about building a fault-tolerant system that:

- Adapts to layout changes

- Rotates fingerprints and sessions

- Validates output before pushing into your database

When Magento changes how a product is displayed—whether for a holiday sale, regional currency, or new plugin—you need a scraper that notices and adjusts.

Next, learn how to keep Magento scraping legally compliant, so your process stands up not just technically, but contractually.

Keep Magento Web Scraping Safe

Scraping Magento isn’t just a technical task; it’s a legal one. The moment you extract prices, product details, or structured text from a live site, you’re operating within data protection and access laws.

This section outlines the legal boundaries of Magento scraping and how to stay within them, especially in Europe, the US, and cross-border data environments.

Understand When Scraping Is Legal

Scraping public data is not automatically illegal, but it’s not always allowed either.

According to ScraperAPI’s 2025 Legal Guide, scraping is generally legal if:

- The data is publicly accessible (not behind a login)

- You don’t bypass authentication mechanisms

- You follow fair use and rate limits

- You do not harvest personal data without a basis

In the US, the Ninth Circuit’s hiQ Labs v. LinkedIn ruling held that accessing publicly available pages likely does not violate the CFAA. However, this does not give you a blank check, especially in Europe.

Apply GDPR to Product Pages

Magento sites often display user-generated or traceable content: review counts, seller contact info, and occasionally, personally identifiable details.

Under GDPR guidance by Morgan Lewis, any automated access that collects or processes even indirectly personal data requires:

- A valid legal basis (usually “legitimate interest”)

- Purpose limitation: only use data for what was stated

- Storage limitation: keep scraped data only as long as needed

- Data minimization: collect only what’s required

- No profiling unless legally justified

Even if you’re scraping product data, cookies, IPs, and review snippets can trigger GDPR obligations—especially in cross-border use cases.
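Data minimization can be enforced in code before anything is stored. The sketch below keeps only an allowlist of product fields and redacts email addresses and IPv4 addresses that slip into free text. The field names and patterns are illustrative assumptions, and simple patterns like these are a safety net, not a substitute for legal review.

```python
import re

# Assumed allowlist; anything not listed is dropped before storage.
ALLOWED_FIELDS = {"sku", "name", "price", "currency", "stock", "description", "review_count"}
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def minimize(record):
    """Drop fields outside the allowlist and redact emails and IPv4 addresses."""
    cleaned = {}
    for key, value in record.items():
        if key not in ALLOWED_FIELDS:
            continue
        if isinstance(value, str):
            value = IPV4.sub("[redacted]", EMAIL.sub("[redacted]", value))
        cleaned[key] = value
    return cleaned
```

Running this step at ingestion means reviewer names or contact details never reach downstream systems in the first place.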

Respect robots.txt, But Don’t Depend on It

Magento stores often include a robots.txt file to suggest which URLs should be excluded from automated access. This is not a legal requirement, but it can influence how your scraping is interpreted in disputes.

Use robots.txt as a reference, not a rule:

- If access is public, scraping is not “unauthorized” by default

- If the data is behind a login or session wall, you must stop

- If the site blocks bots via rate-limiting or anti-bot tech, you cannot bypass without risk
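Reading robots.txt as a reference is straightforward with Python's standard `urllib.robotparser`. The sketch below parses robots.txt content you have already downloaded and reports whether a URL is excluded and whether a crawl delay is declared. The user-agent name and the sample rules in the usage note are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def robots_policy(robots_txt, user_agent, url):
    """Report what robots.txt says about one URL for one user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        "allowed": parser.can_fetch(user_agent, url),
        "crawl_delay": parser.crawl_delay(user_agent),  # None when not declared
    }
```

With rules such as `User-agent: *`, `Disallow: /checkout/`, and `Crawl-delay: 5`, a cart URL comes back as not allowed while a product page is allowed, and the declared delay can feed directly into your scheduler.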

Stripe’s GDPR and eCommerce Guide emphasizes transparency and lawful basis as the core pillars of data use. That applies to internal scraping just as much as external targeting.

Magento Scraping Compliance Checklist

Use this checklist to assess whether your Magento data scraping process is legally defensible and audit-ready:

| Requirement | Action |
| --- | --- |
| Public Access Only | Scrape only pages visible without login |
| No Personal Data Collected | Exclude names, emails, and IPs unless legally justified |
| Rate-Limit Respect | Do not overload servers or bypass access control |
| Robots.txt Observed | Follow exclusions unless you have direct consent |
| Legitimate Interest Stated | Document your business use case for data collection |
| Storage Duration Defined | Purge scraped data after its business use expires |
| Consent When Required | Especially for reviews, feedback, or hybrid content |

Magento scraping is lawful when done transparently, within technical boundaries, and with regulatory safeguards in place. You must treat scraping not as a workaround, but as a system activity subject to audit, review, and limitation.

In the next chapter, we’ll look at real-world B2B Magento scraping use cases from GroupBWT practice—showing how enterprises extract product data to support price monitoring, catalog control, and competitive positioning.

Magento Scraping in Practice

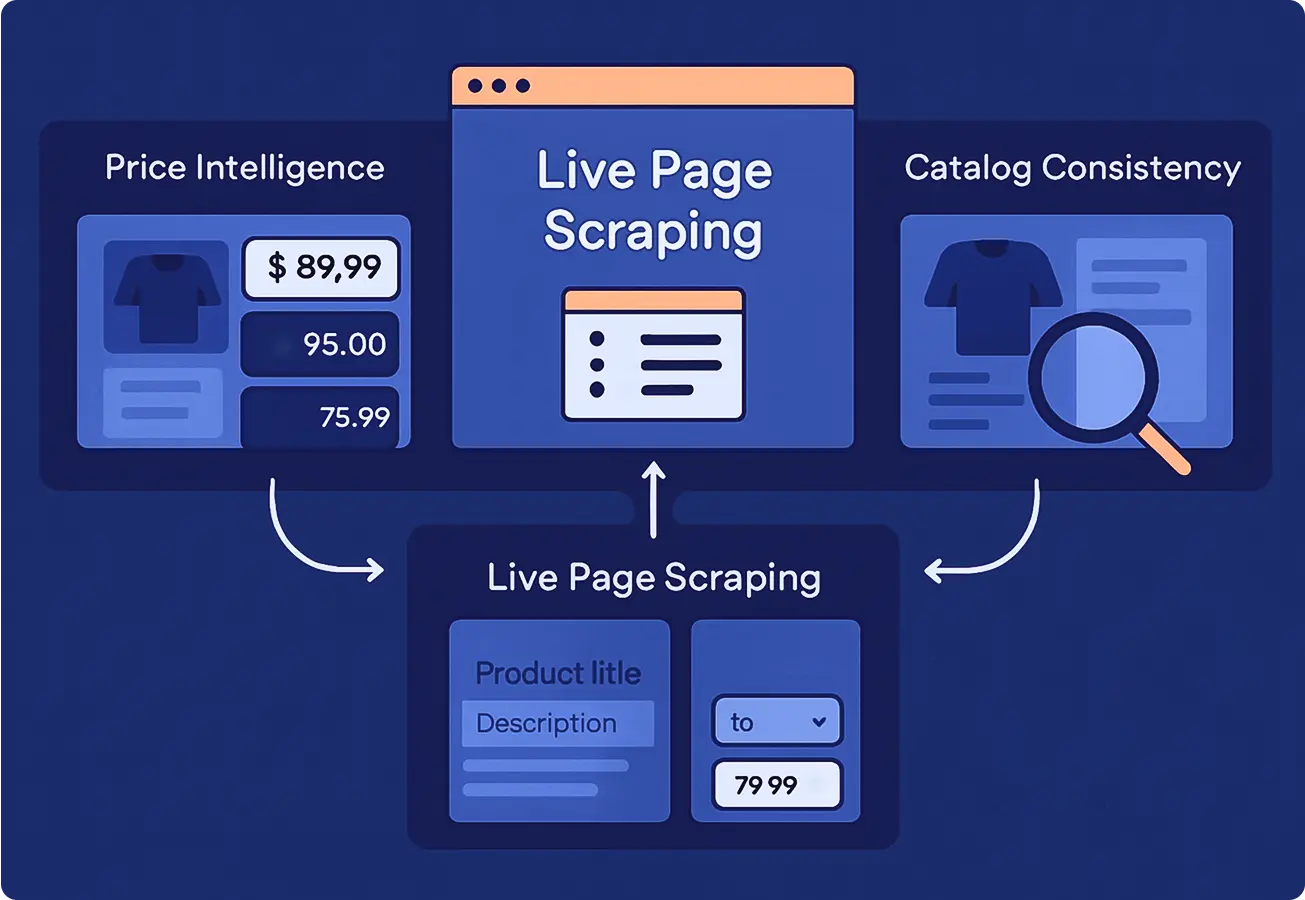

Magento product scraping supports critical business workflows in B2B: price tracking, catalog quality control, and channel oversight. Below are examples from enterprise use cases where scraping the live site—not the backend—enabled better decisions, faster execution, and lower risk.

Detect Undisclosed Price Variants

A global equipment supplier selling through branded resellers found that price tiers were not visible in Magento’s API. Only the base price appeared, while volume discounts and location-based prices were injected client-side.

With product data scraping, the team extracted:

- Regional pricing for B2B partners (e.g., EU vs. US)

- Tiered discounts triggered by cart quantity

- Flash prices shown only in modals

Pricing intelligence became accurate across 12 regions. This enabled the company to realign discount bands and reduce margin leakage by over 15%.

Monitor Catalog Drift Across Vendors

A home goods brand operating 40+ white-label stores noticed listing inconsistencies. Each storefront was Magento-based but customized separately. Description fields, product titles, and SKUs drifted, leading to mismatches in central databases.

Scraping each store’s live product pages allowed the client to:

- Compare descriptions vs. source content

- Flag unauthorized changes to brand copy

- Identify missing technical specs (e.g., voltage, weight)

They achieved 98.5% catalog uniformity across all partner stores without accessing internal admin panels.
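A comparison like this can be approximated with Python's `difflib`. The sketch below is not the client's actual tooling. It is a minimal illustration that flags storefront descriptions whose similarity to the source copy falls below a chosen threshold. The 0.9 threshold and the store names in the usage note are arbitrary assumptions.

```python
from difflib import SequenceMatcher


def normalize(text):
    """Lowercase and collapse whitespace so formatting noise is ignored."""
    return " ".join(text.lower().split())


def drift_report(source, storefronts, threshold=0.9):
    """Map store name to similarity ratio for copies that drifted from the source."""
    reference = normalize(source)
    report = {}
    for store, text in storefronts.items():
        ratio = SequenceMatcher(None, reference, normalize(text)).ratio()
        if ratio < threshold:
            report[store] = round(ratio, 2)
    return report
```

Calling `drift_report(master_copy, {"store-a": text_a, "store-b": text_b})` returns only the stores that need review, which keeps the alert list short when most partners are in sync.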

Track Inventory Without Direct Access

A B2B distributor needed to monitor third-party Magento sellers to detect when products were out of stock or had changed availability policies. The sellers did not share structured inventory feeds.

Magento scraping sessions were used to:

- Parse stock levels per variant (color, size)

- Identify “only X left” banners

- Capture delays in shipping availability

With this visibility, the distributor built a stock-risk dashboard and alerted procurement teams to stockouts 2–3 days earlier than before.

These examples show that Magento product scraping is a core visibility layer. Whether tracking prices, protecting brand content, or watching inventory shifts, scraping the actual buyer-facing page is the only reliable source of truth.

The final section outlines the components, logic, and infrastructure needed to turn scraping from a quick fix into a repeatable asset.

Build a Magento Scraping System That Lasts

To keep a Magento scraping system stable, audit-ready, and actionable, your architecture must cover five layers: extraction, rendering, rotation, validation, and compliance.

Choose a Stable Architecture

A durable Magento web scraping system gives each component one job to do well:

| Layer | Tool or Method | Purpose |
| --- | --- | --- |
| Rendering | Playwright, Puppeteer | Load and execute JS, interact with UI |
| Extraction | Scrapy, XPath, CSS selectors | Locate and pull values from the layout |
| Rotation | Proxy pools, session headers | Avoid detection and blocking |
| Validation | Schema checks, anomaly detection | Ensure fields are present and correct |
| Orchestration | Airflow, Prefect | Schedule jobs, retries, and snapshots |

The output must pass a schema check before it hits your database. Otherwise, you risk collecting broken fields without knowing.

Magento layouts evolve. Seasonal themes, new plugins, or layout tweaks can silently break your scraper, even if the request succeeds.

Your system should:

- Track DOM diff snapshots (before vs. after changes)

- Alert when field wrappers change (e.g., price class name)

- Store rendered examples for auditing

- Trigger re-validation if critical layout selectors shift or expected elements go missing

This lets your team respond to changes before corrupted data enters downstream systems. This comprehensive approach to extraction and structuring is why dedicated web scraping service providers are necessary for Magento.
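The snapshot tracking described above can start very simply. The sketch below reduces two rendered HTML snapshots to a set of `tag.class` signatures and reports what disappeared, what appeared, and which watched wrappers are missing. It uses only the standard library, and the watched selectors in the usage note are examples you would replace with your own.

```python
from html.parser import HTMLParser


class ClassSignature(HTMLParser):
    """Collect every tag.class pair that appears in a document."""

    def __init__(self):
        super().__init__()
        self.classes = set()

    def handle_starttag(self, tag, attrs):
        for name in (dict(attrs).get("class") or "").split():
            self.classes.add(f"{tag}.{name}")


def signature(html):
    parser = ClassSignature()
    parser.feed(html)
    return parser.classes


def layout_drift(old_html, new_html, watched):
    """Compare two snapshots and flag watched wrappers that went missing."""
    old, new = signature(old_html), signature(new_html)
    return {
        "removed": sorted(old - new),
        "added": sorted(new - old),
        "missing_watched": sorted(set(watched) - new),
    }
```

For example, `layout_drift(yesterday_html, today_html, ["span.price"])` with a non-empty `missing_watched` list is a reasonable trigger for re-validation before the next scheduled run.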

Reveal Gaps in Magento Data

Not sure what’s missing—or why your data doesn’t match what buyers see? Let’s audit it together.

In a 30-minute call, we’ll identify where your Magento setup breaks and how to fix it with scraping systems that last. The complexity of Magento’s dynamic content means that extracting all necessary fields often requires integrating advanced processing methods like web scraping with LLM tools.

FAQ

How often does Magento storefront data update compared to API feeds?

Magento storefronts update live, while API feeds often lag. Prices, availability, discounts, and product visibility can change instantly on the frontend, based on session, region, or cart logic, while the API response stays unchanged. This lag produces inaccurate data for anyone relying on feeds alone.

How many Magento B2B websites use dynamic or region-specific pricing?

Most B2B Magento setups use some form of dynamic pricing by customer group, region, or volume. These prices are rendered at runtime and can’t be accessed without loading the page. Scraping the visible storefront is the only way to capture what buyers see.

What gets lost if you only use the Magento API?

You lose what matters. The API often omits bundle structures, conditional stock info, tiered prices, shipping thresholds, or live promotions. Scraping the frontend restores this missing context, especially critical for accurate competitor monitoring and catalog intelligence.

Is it legal to scrape Magento product data?

Yes, if the data is public and the method is compliant. Product data isn’t protected unless it contains personal or copyrighted material. What matters is how you collect, store, and use it. That means honoring privacy laws, rate limits, and site policies.

Why scrape Magento at all if I can export a feed?

Because feeds are partial, static, and delayed. Scraping delivers the exact product view your buyer sees, including discounts, variants, urgency banners, or cross-sell placements. That visibility drives more accurate sourcing, pricing, and market moves. Feeds can’t match that.